SCARLET-NAS: Bridging the Gap between Stability and Scalability in Weight-sharing Neural Architecture Search

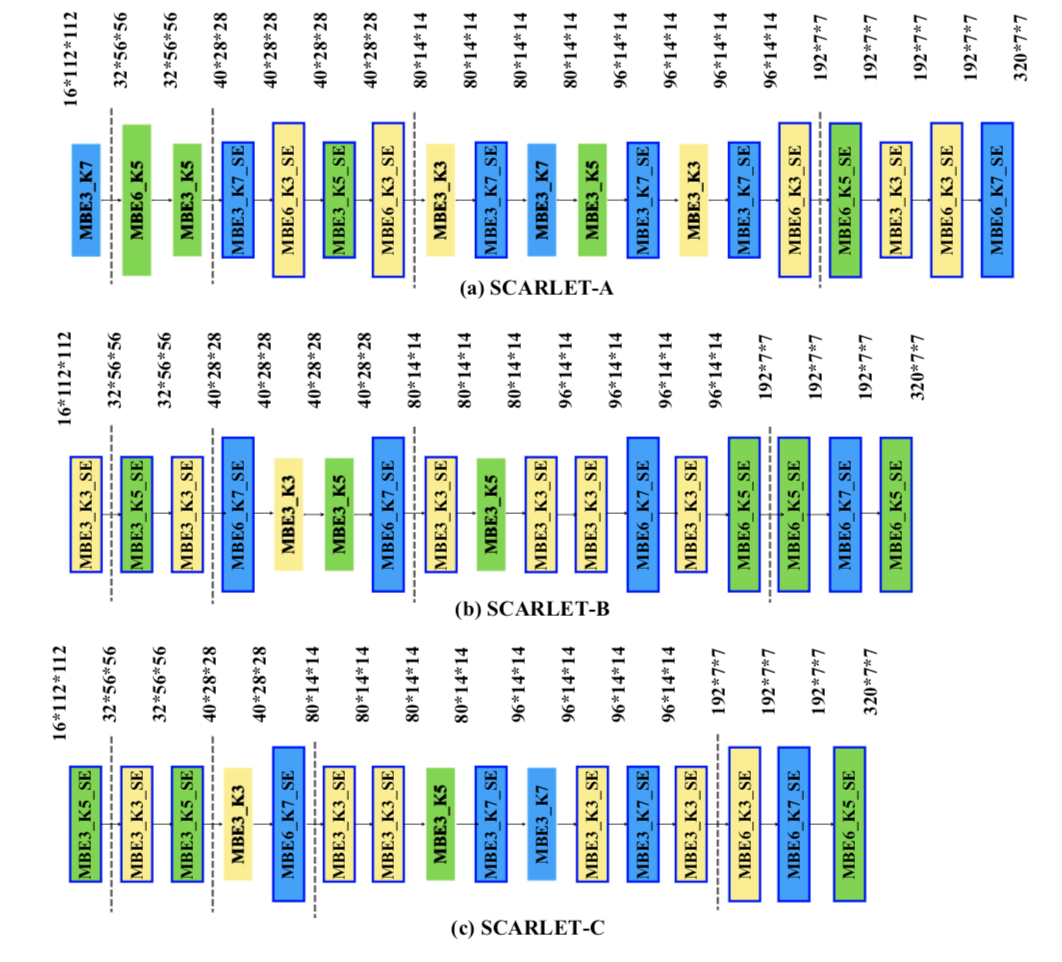

Discovering powerful yet compact models is an important goal of neural architecture search. Previous two-stage one-shot approaches are limited by search spaces with a fixed depth. Adding a skip connection to the search space seems a convenient way to make depth variable. However, doing so introduces a large range of perturbation during supernet training, and the supernet then has difficulty giving a confident ranking of subnetworks. In this paper, we find that skip connections bring about significant feature inconsistency compared with other operations, which potentially degrades supernet performance. Based on this observation, we tackle the problem by imposing an equivariant learnable stabilizer to homogenize such disparities. Experiments show that the proposed stabilizer improves both the supernet's convergence and its ranking performance. With an evolutionary search backend that uses the stabilized supernet as an evaluator, we derive a family of state-of-the-art architectures, the SCARLET series, at several depths; in particular, SCARLET-A obtains 76.9% top-1 accuracy on ImageNet. Code is available at https://github.com/xiaomi-automl/ScarletNAS.
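To make the variable-depth idea concrete, the following is a minimal sketch of how adding a skip option to each searchable layer turns a fixed-depth search space into a variable-depth one. It is not the authors' implementation: the operation names, the layer count of 12, and the uniform per-layer sampling are all assumptions chosen for illustration.

```python
import random

# Hypothetical candidate operations for one searchable layer; the names are
# illustrative and not taken from the paper's search space.
CANDIDATE_OPS = ["mbconv3_k3", "mbconv3_k5", "mbconv6_k3", "mbconv6_k5", "skip"]
NUM_LAYERS = 12  # assumed number of searchable layers


def sample_subnetwork(rng, num_layers=NUM_LAYERS, ops=CANDIDATE_OPS):
    """Uniformly pick one operation per layer (assumed sampling scheme)."""
    return [rng.choice(ops) for _ in range(num_layers)]


def effective_depth(architecture):
    """Count the layers that are not skipped."""
    return sum(1 for op in architecture if op != "skip")


rng = random.Random(0)
samples = [sample_subnetwork(rng) for _ in range(1000)]
depths = [effective_depth(arch) for arch in samples]
print("min depth:", min(depths), "max depth:", max(depths))
```

Without the `"skip"` entry every sampled architecture would have exactly `NUM_LAYERS` active layers; with it, sampled depths spread over a range, which is the source of the extra perturbation during supernet training that the abstract describes.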

CIFAR-10

ImageNet

MS COCO

NAS-Bench-101
