Frequency and Multi-Scale Selective Kernel Attention for Speaker Verification

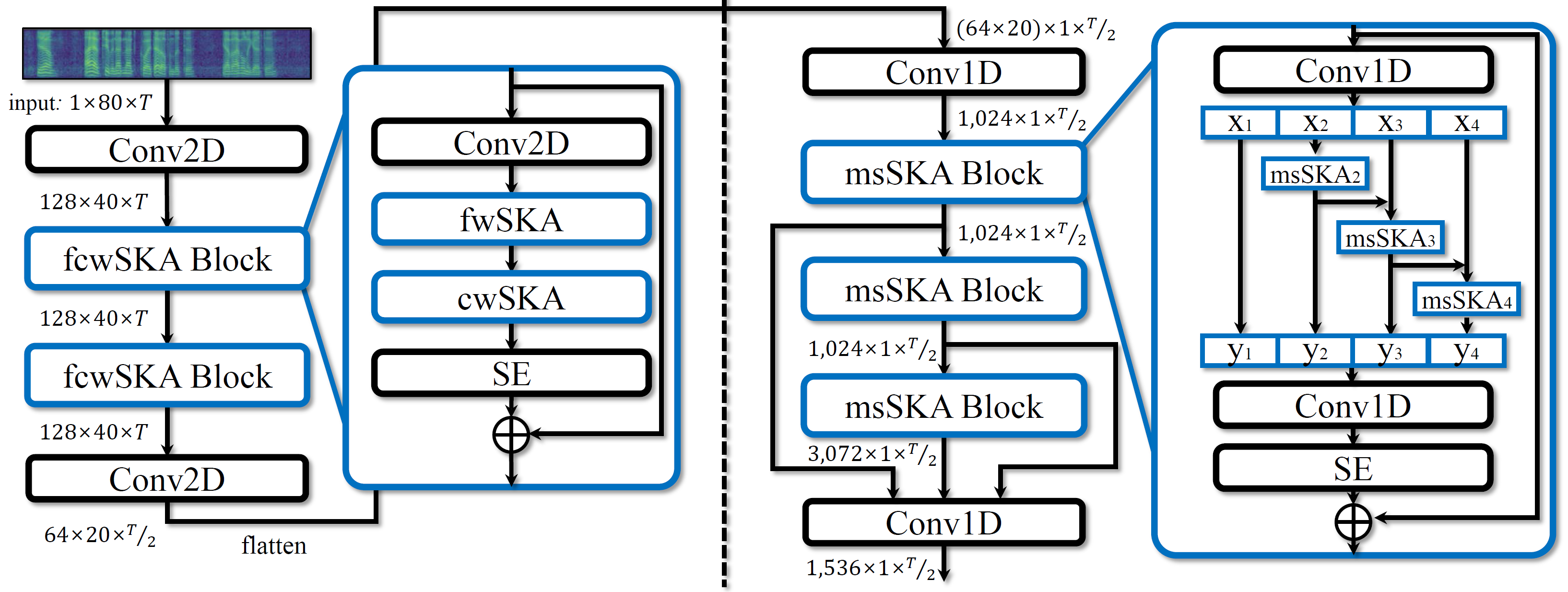

The majority of recent state-of-the-art speaker verification architectures adopt multi-scale processing and frequency-channel attention mechanisms. The convolutional layers of these models typically have a fixed kernel size, e.g., 3 or 5. In this study, we further contribute to this line of research by utilising a selective kernel attention (SKA) mechanism. The SKA mechanism allows each convolutional layer to adaptively select its kernel size in a data-driven fashion. It is based on an attention mechanism that exploits both the frequency and channel domains. We first apply an existing SKA module to our baseline. We then propose two SKA variants: the first is applied in front of the ECAPA-TDNN model, and the second is combined with the Res2Net backbone block. Through extensive experiments, we demonstrate that our two proposed SKA variants consistently improve performance and are complementary when tested on three different evaluation protocols.
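To make the idea of data-driven kernel selection concrete, the following is a minimal sketch of the generic selective-kernel pattern (split into branches with different kernel sizes, fuse into a global descriptor, select with a softmax across branches). It is not the SKA module evaluated in this work: it operates on a single-channel 1-D signal, it omits the frequency and channel attention described above, and the names `conv1d_same`, `selective_kernel`, and `score_weights` are hypothetical. In a trained model the kernels and scoring weights would be learned; here they are fixed by hand.

```python
import math


def conv1d_same(x, kernel):
    """Zero-padded 1-D cross-correlation; assumes an odd kernel length
    so that the output has the same length as the input."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(padded[i + j] * kernel[j] for j in range(k))
        for i in range(len(x))
    ]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def selective_kernel(x, kernels, score_weights):
    """Blend the outputs of several kernel sizes with attention weights.

    score_weights holds one hypothetical scalar per branch; it stands in
    for the small learned network that would normally produce the scores.
    """
    # Split: one convolution branch per kernel size.
    branches = [conv1d_same(x, k) for k in kernels]
    # Fuse: sum the branches, then global-average-pool to one descriptor.
    fused = [sum(vals) for vals in zip(*branches)]
    descriptor = sum(fused) / len(fused)
    # Select: score each branch from the descriptor, softmax across branches.
    attn = softmax([w * descriptor for w in score_weights])
    out = [
        sum(a * b[i] for a, b in zip(attn, branches))
        for i in range(len(x))
    ]
    return out, attn


# Example: an impulse processed by averaging kernels of size 3 and 5.
signal = [0.0, 0.0, 1.0, 0.0, 0.0]
kernels = [[1 / 3] * 3, [1 / 5] * 5]
output, attention = selective_kernel(signal, kernels, [5.0, -5.0])
```

Because the attention weights depend on a descriptor computed from the input itself, different inputs can favour different kernel sizes, which is the adaptive behaviour the abstract describes.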


VoxCeleb1


VoxCeleb2
