Self-Supervised Learning with Swin Transformers

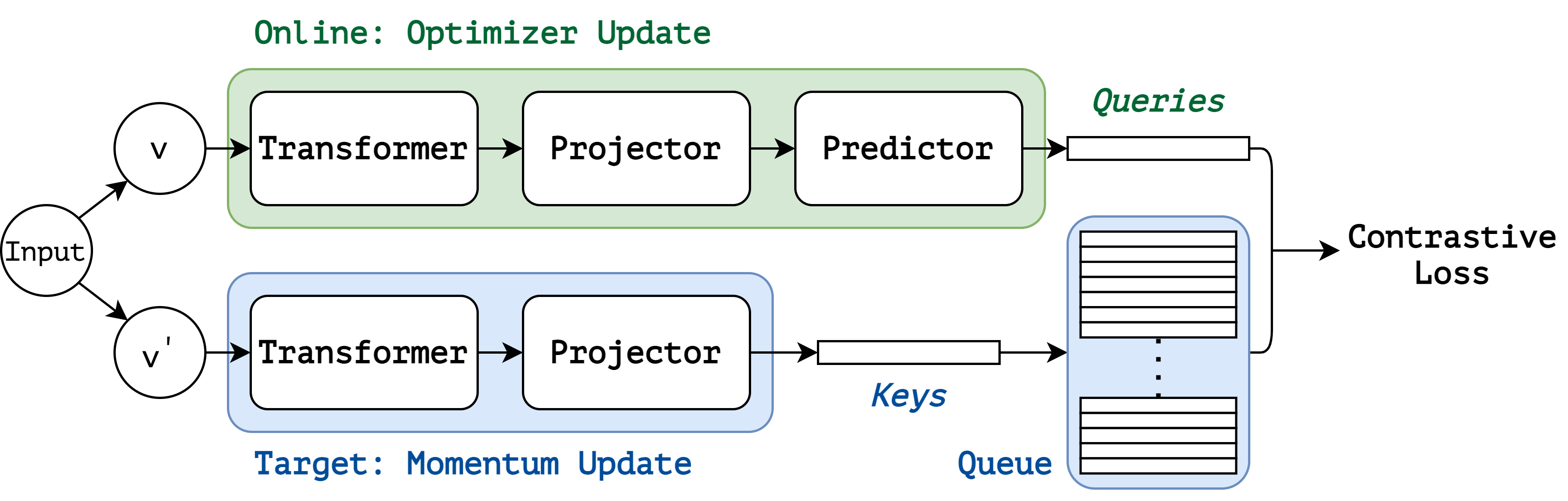

We are witnessing a modeling shift from CNN to Transformers in computer vision. In this work, we present a self-supervised learning approach called MoBY, with Vision Transformers as its backbone architecture. The approach basically has no new inventions, which is combined from MoCo v2 and BYOL and tuned to achieve reasonably high accuracy on ImageNet-1K linear evaluation: 72.8% and 75.0% top-1 accuracy using DeiT-S and Swin-T, respectively, by 300-epoch training. The performance is slightly better than recent works of MoCo v3 and DINO which adopt DeiT as the backbone, but with much lighter tricks. More importantly, the general-purpose Swin Transformer backbone enables us to also evaluate the learnt representations on downstream tasks such as object detection and semantic segmentation, in contrast to a few recent approaches built on ViT/DeiT which only report linear evaluation results on ImageNet-1K due to ViT/DeiT not tamed for these dense prediction tasks. We hope our results can facilitate more comprehensive evaluation of self-supervised learning methods designed for Transformer architectures. Our code and models are available at https://github.com/SwinTransformer/Transformer-SSL, which will be continually enriched.

PDF Abstract

ImageNet

ImageNet

ADE20K

ADE20K