Kernelized Similarity Learning and Embedding for Dynamic Texture Synthesis

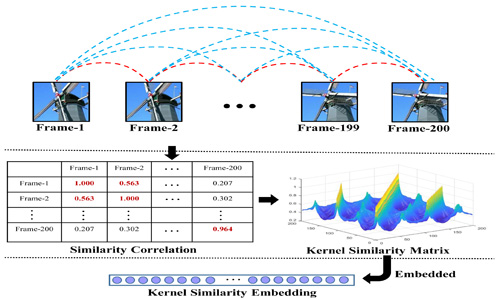

Dynamic texture (DT) exhibits statistical stationarity in the spatial domain and stochastic repetitiveness in the temporal dimension, which indicates that different frames of a DT are highly similar to one another; this similarity correlation is critical prior knowledge. However, existing methods cannot effectively learn a synthesis model for high-dimensional DT from a small amount of training data. In this paper, we propose a novel DT synthesis method that makes full use of the similarity prior to address this issue. Our method is based on the proposed kernel similarity embedding, which not only mitigates the high-dimensionality and small-sample issues, but also has the advantage of modeling nonlinear feature relationships. Specifically, we first raise two hypotheses that are essential for a DT model to generate new frames using similarity correlation. Then, we integrate kernel learning and the extreme learning machine into a unified synthesis model that learns a kernel similarity embedding for representing DT. Extensive experiments on DT videos collected from the internet and on two benchmark datasets, i.e., Gatech Graphcut Textures and Dyntex, demonstrate that the learned kernel similarity embedding provides a discriminative representation of DT. Accordingly, our method preserves the long-term temporal continuity of the synthesized DT sequences with excellent sustainability and generalization. Meanwhile, compared with state-of-the-art methods, it generates realistic DT videos quickly and at low computational cost. The code and more synthesis videos are available at our project page https://shiming-chen.github.io/Similarity-page/Similarit.html.
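
To make the idea of combining kernel learning with an extreme learning machine more concrete, the following is a minimal sketch and not the authors' implementation. It assumes the textbook kernel ELM closed form, in which the output weights are obtained by solving a regularized kernel system, and it assumes the model is trained to map each frame to the next one. The RBF kernel, the function names, the toy random "video", and the values of `gamma` and `C` are all hypothetical choices made for illustration.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    # Pairwise similarity between rows of A and rows of B (assumed RBF kernel).
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def fit_kernel_elm(X, Y, gamma, C):
    # Standard kernel ELM solution: beta = (K + I / C)^{-1} Y.
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + np.eye(len(X)) / C, Y)

def synthesize(X, beta, seed, n_frames, gamma):
    # Generate frames one at a time, feeding each output back in as input.
    frames = [seed]
    for _ in range(n_frames):
        k = rbf_kernel(frames[-1][None, :], X, gamma)  # similarity to training frames
        frames.append((k @ beta)[0])
    return np.stack(frames[1:])

# Toy data standing in for a DT video: 20 frames, each flattened to 64 pixels.
rng = np.random.default_rng(0)
video = rng.random((20, 64))
X, Y = video[:-1], video[1:]  # assumed training pairs: frame t -> frame t + 1

beta = fit_kernel_elm(X, Y, gamma=0.1, C=1e6)
generated = synthesize(X, beta, seed=video[0], n_frames=5, gamma=0.1)
```

In this sketch the linear system has as many unknowns as training frames rather than pixels, which is one way a kernel formulation can sidestep the high-dimensional, small-sample setting described above.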
