SpatialFlow: Bridging All Tasks for Panoptic Segmentation

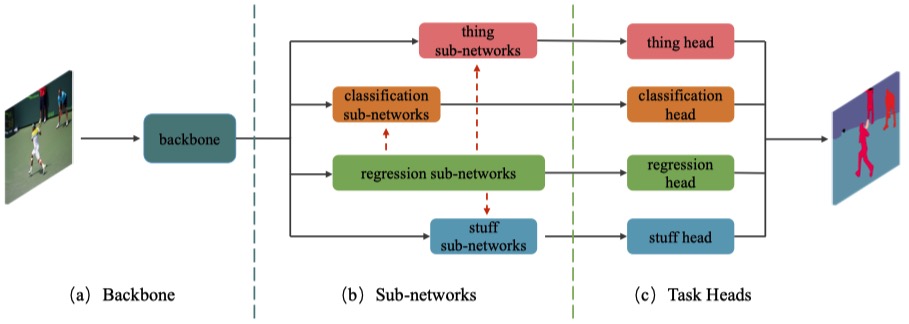

Object location is fundamental to panoptic segmentation because it relates to every thing and stuff region in the image scene. Knowing where objects are provides cues for segmentation and helps the network better understand the scene. How to integrate object location into both thing and stuff segmentation is therefore a crucial problem. In this paper, we propose spatial information flows to achieve this objective. The flows bridge all sub-tasks in panoptic segmentation by delivering the object's spatial context from the box regression task to the others. More importantly, we design four parallel sub-networks to better adapt object spatial information to each sub-task. Building on the sub-networks and the flows, we present a location-aware and unified framework for panoptic segmentation, denoted as SpatialFlow. We perform a detailed ablation study on each component and conduct extensive experiments to demonstrate the effectiveness of SpatialFlow. Furthermore, we achieve state-of-the-art results of $47.9$ PQ on the MS-COCO panoptic benchmark and $62.5$ PQ on the Cityscapes panoptic benchmark. Code will be available at https://github.com/chensnathan/SpatialFlow.
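For readers unfamiliar with the PQ (panoptic quality) figures quoted above, the following is a minimal sketch of how PQ is commonly computed for a single class, assuming the standard definition: predicted and ground-truth segments match when their IoU exceeds 0.5, and PQ is the sum of matched IoUs divided by TP + 0.5·FP + 0.5·FN. The function name and arguments are hypothetical and are not taken from the SpatialFlow code. Benchmark scores are averaged over classes and reported as percentages.

```python
def panoptic_quality(matched_ious, num_fp, num_fn):
    """Per-class PQ under the standard definition (illustrative sketch).

    matched_ious: IoU of each matched (true positive) segment pair;
                  a pair only counts as a match when IoU > 0.5.
    num_fp:       number of unmatched predicted segments.
    num_fn:       number of unmatched ground-truth segments.
    """
    if any(iou <= 0.5 for iou in matched_ious):
        raise ValueError("a matched pair must have IoU > 0.5")

    num_tp = len(matched_ious)
    denominator = num_tp + 0.5 * num_fp + 0.5 * num_fn
    if denominator == 0:
        # No segments of this class in prediction or ground truth.
        return 0.0
    return sum(matched_ious) / denominator


# Three matches, one spurious prediction, one missed segment:
# (0.9 + 0.8 + 0.6) / (3 + 0.5 + 0.5), roughly 0.575.
pq = panoptic_quality([0.9, 0.8, 0.6], num_fp=1, num_fn=1)
```

The metric penalizes both poor mask overlap (through the IoU sum) and detection errors (through the FP and FN terms), which is why it is used to score thing and stuff segmentation jointly.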


MS COCO

Cityscapes
