Stable and Controllable Neural Texture Synthesis and Style Transfer Using Histogram Losses

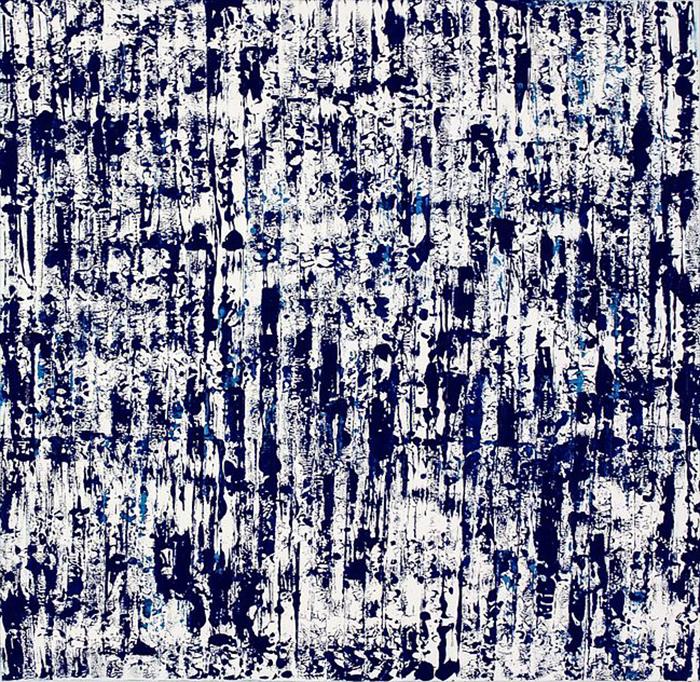

Recently, methods have been proposed that perform texture synthesis and style transfer by using convolutional neural networks (e.g., Gatys et al. [2015, 2016]). These methods are exciting because they can in some cases create results with state-of-the-art quality. However, in this paper, we show that these methods are also limited in texture quality and stability, require parameter tuning, and lack user controls. This paper presents a multiscale synthesis pipeline based on convolutional neural networks that ameliorates these issues. We first give a mathematical explanation of the source of instabilities in many previous approaches. We then reduce these instabilities by using histogram losses to synthesize textures that better statistically match the exemplar. We also show how to integrate localized style losses in our multiscale framework. These losses can improve the quality of large features, improve the separation of content and style, and offer artistic controls such as paint by numbers. We demonstrate that our approach offers improved quality, convergence in fewer iterations, and greater stability during optimization.
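The abstract does not spell out the form of the histogram loss, so the following is only a minimal sketch of one plausible reading: remap each channel of the synthesized feature map so that its value distribution matches the exemplar's, then penalize the squared distance between the original and remapped values. The function names `match_histogram` and `histogram_loss`, the `(channels, height, width)` array layout, and the rank-based remapping are assumptions made for illustration, and plain NumPy arrays stand in for network activations.

```python
import numpy as np

def match_histogram(source, target):
    """Remap `source` so its values follow the distribution of `target`.

    Hypothetical helper: rank-based (quantile) matching, one of several
    possible ways to match histograms.
    """
    src = source.ravel()
    tgt = np.sort(target.ravel())
    # Rank of each source value (0 = smallest).
    order = np.argsort(src, kind="stable")
    ranks = np.empty_like(order)
    ranks[order] = np.arange(src.size)
    # Convert ranks to fractional positions in the sorted target values.
    positions = ranks / max(src.size - 1, 1) * (tgt.size - 1)
    remapped = np.interp(positions, np.arange(tgt.size), tgt)
    return remapped.reshape(source.shape)

def histogram_loss(synth, exemplar):
    """Sum over channels of the squared distance between each channel of
    `synth` and its histogram-matched version (assumed loss form)."""
    loss = 0.0
    for c in range(synth.shape[0]):
        remapped = match_histogram(synth[c], exemplar[c])
        loss += np.sum((synth[c] - remapped) ** 2)
    return float(loss)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    synth = rng.normal(size=(3, 8, 8))              # stand-in for synthesized activations
    exemplar = rng.normal(loc=2.0, size=(3, 8, 8))  # stand-in for exemplar activations
    print(histogram_loss(synth, exemplar))
```

In this sketch the loss approaches zero when each channel of the synthesized map already has the same value distribution as the exemplar, which is the sense in which such a term would push the result to "statistically match" it.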
