Transfer Learning from Synthetic to Real LiDAR Point Cloud for Semantic Segmentation

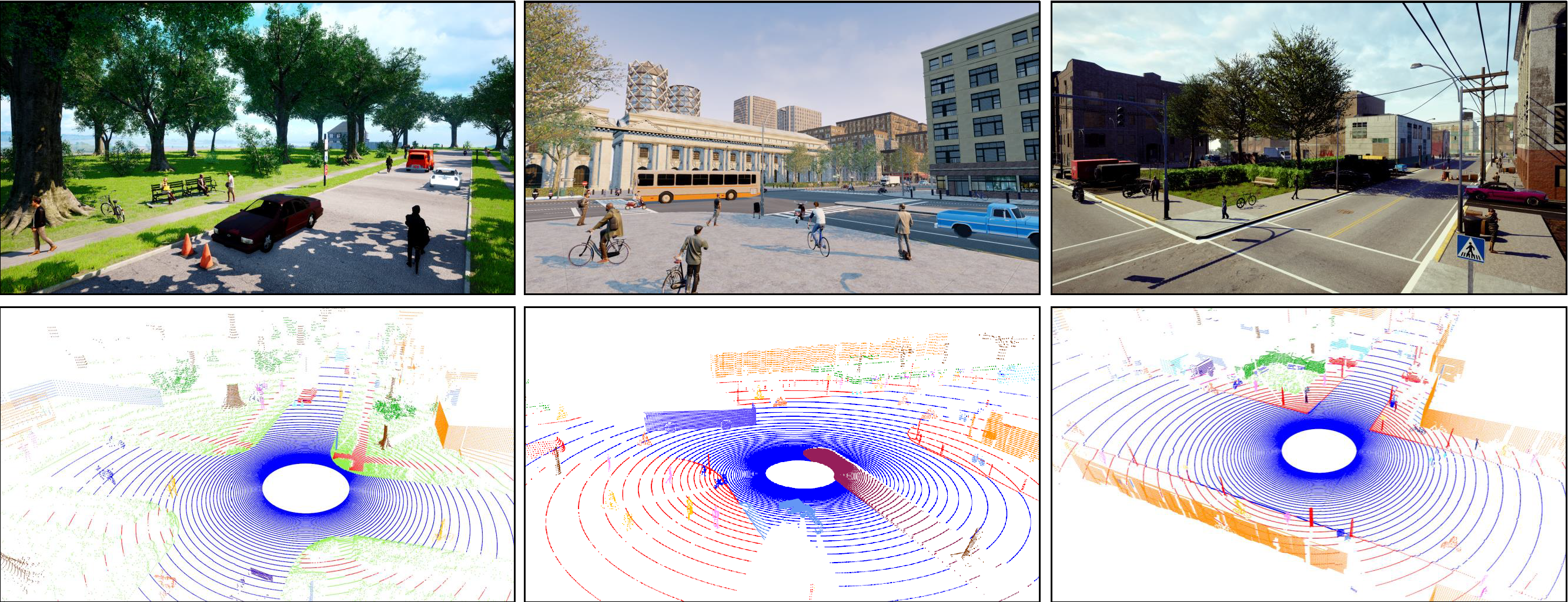

Knowledge transfer from synthetic to real data has been widely studied as a way to mitigate data annotation constraints in computer vision tasks such as semantic segmentation. However, these studies have focused on 2D images, and the counterpart for 3D point cloud segmentation lags far behind due to the lack of large-scale synthetic datasets and effective transfer methods. We address this issue by collecting SynLiDAR, a large-scale synthetic LiDAR dataset that contains point-wise annotated point clouds with accurate geometric shapes and comprehensive semantic classes. SynLiDAR was collected from multiple virtual environments with rich scenes and layouts, and it consists of over 19 billion points across 32 semantic classes. In addition, we design PCT, a novel point cloud translator that effectively mitigates the gap between synthetic and real point clouds. Specifically, we decompose the synthetic-to-real gap into an appearance component and a sparsity component and handle them separately, which greatly improves point cloud translation. We conducted extensive experiments over three transfer learning setups: data augmentation, semi-supervised domain adaptation, and unsupervised domain adaptation. The results show that SynLiDAR provides a high-quality data source for studying 3D transfer and that the proposed PCT achieves superior point cloud translation consistently across the three setups. SynLiDAR project page: https://github.com/xiaoaoran/SynLiDAR
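To make the idea of a sparsity gap concrete, the following is a minimal sketch and not the PCT method itself, whose details are not given in the abstract. It assumes points are `(x, y, z, label)` tuples and that sparsity can be approximated by point counts per radial-distance bin; the function names `range_bin` and `match_sparsity`, the bin width, and the number of bins are all hypothetical choices made for illustration.

```python
import math
import random
from collections import defaultdict


def range_bin(point, bin_width, num_bins):
    """Return the index of the radial-distance bin a point falls into."""
    x, y, z = point[:3]
    distance = math.sqrt(x * x + y * y + z * z)
    # Points beyond the last bin edge are clamped into the last bin.
    return min(int(distance / bin_width), num_bins - 1)


def match_sparsity(synthetic, real, bin_width=10.0, num_bins=8, seed=0):
    """Subsample a synthetic scan so that no range bin holds more points
    than the same bin of a real scan.

    Illustrative assumption only: this is a simple density-matching
    heuristic, not the sparsity handling used in PCT.
    """
    rng = random.Random(seed)

    # Count real points per range bin.
    real_counts = [0] * num_bins
    for p in real:
        real_counts[range_bin(p, bin_width, num_bins)] += 1

    # Group synthetic points (with their labels) by range bin.
    by_bin = defaultdict(list)
    for p in synthetic:
        by_bin[range_bin(p, bin_width, num_bins)].append(p)

    # Keep at most as many synthetic points per bin as the real scan has.
    kept = []
    for b, pts in by_bin.items():
        kept.extend(rng.sample(pts, min(len(pts), real_counts[b])))
    return kept
```

Because whole tuples are sampled, each kept point retains its semantic label, so the subsampled scan can still be used as annotated training data. The appearance component of the gap is not touched by this sketch.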

SynLiDAR

nuScenes

SemanticKITTI

SemanticPOSS
