Team DETR: Guide Queries as a Professional Team in Detection Transformers

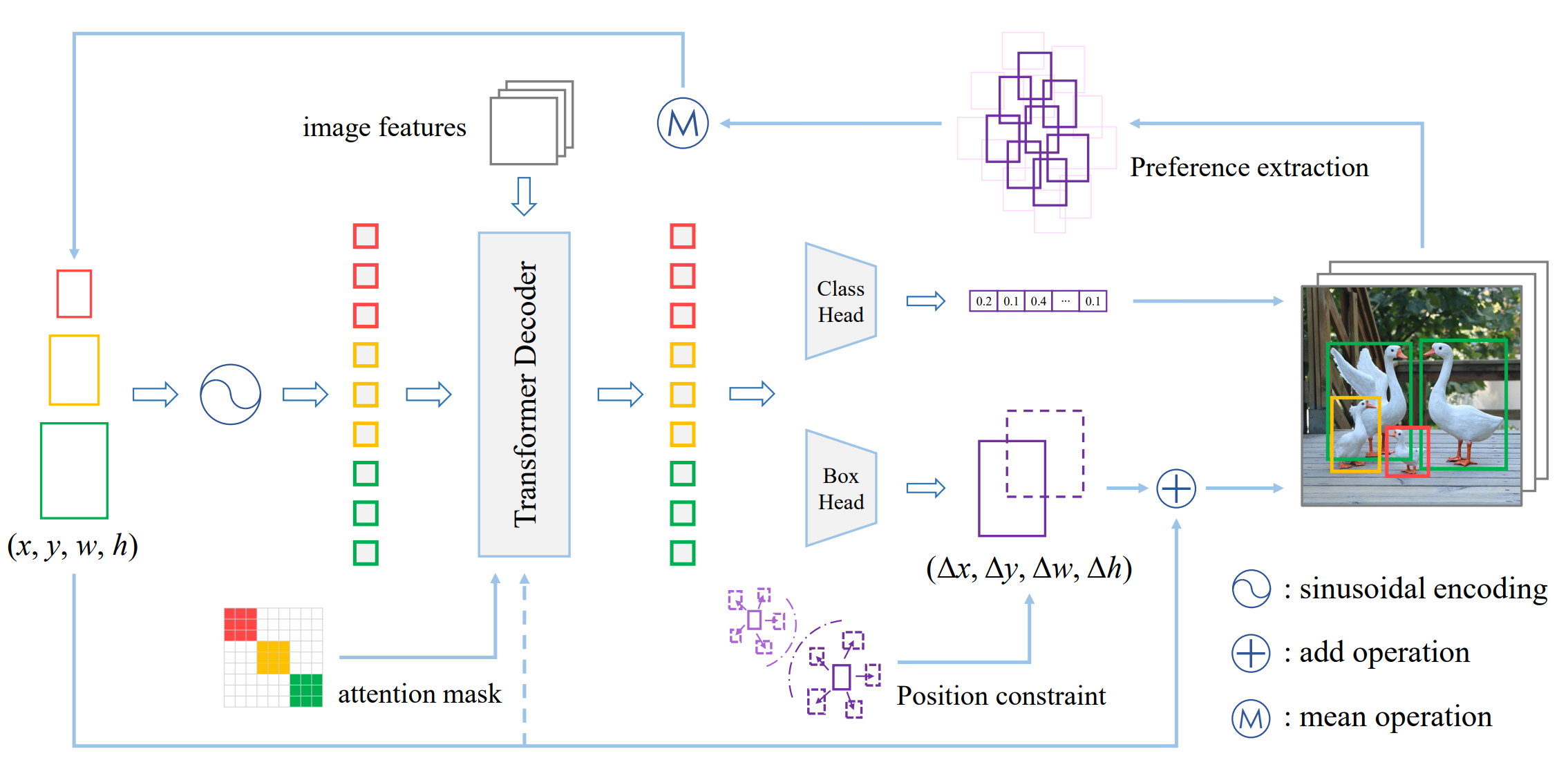

Recently proposed DETR variants have made tremendous progress in various scenarios due to their streamlined pipelines and remarkable performance. However, the learned queries usually explore the global context to generate the final set prediction, which adds redundant burden and produces unfaithful results. More specifically, a single query is commonly responsible for objects of different scales and positions, which is challenging for the query itself and causes competition among queries for spatial resources. To alleviate this issue, we propose Team DETR, which leverages query collaboration and position constraints to capture objects of interest more precisely. We also dynamically cater to each query member's prediction preference, offering the query better scale and spatial priors. In addition, Team DETR is flexible enough to be adapted to other existing DETR variants without adding parameters or computation. Extensive experiments on the COCO dataset show that Team DETR achieves remarkable gains, especially for small and large objects. Code is available at https://github.com/horrible-dong/TeamDETR.

MS COCO