TellMeWhy: A Dataset for Answering Why-Questions in Narratives

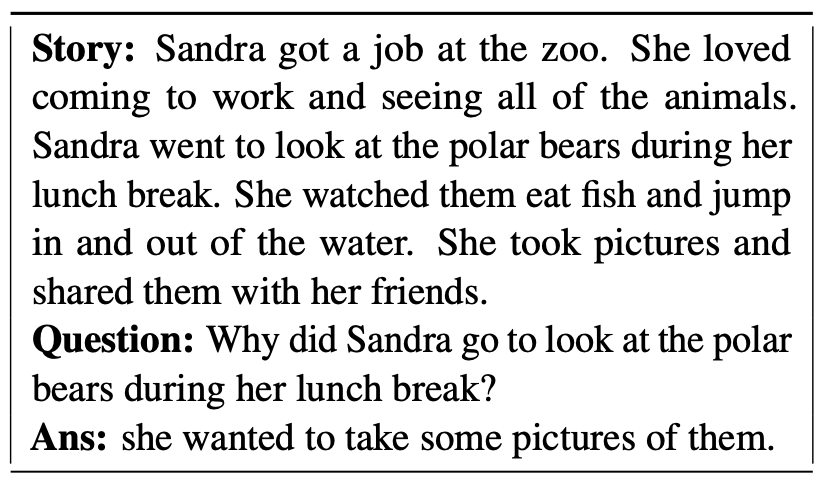

Answering questions about why characters perform certain actions is central to understanding and reasoning about narratives. Despite recent progress in QA, it is not clear if existing models have the ability to answer "why" questions that may require commonsense knowledge external to the input narrative. In this work, we introduce TellMeWhy, a new crowd-sourced dataset that consists of more than 30k questions and free-form answers concerning why characters in short narratives perform the actions described. For a third of this dataset, the answers are not present within the narrative. Given the limitations of automated evaluation for this task, we also present a systematized human evaluation interface for this dataset. Our evaluation of state-of-the-art models show that they are far below human performance on answering such questions. They are especially worse on questions whose answers are external to the narrative, thus providing a challenge for future QA and narrative understanding research.

PDF Abstract Findings (ACL) 2021 PDF Findings (ACL) 2021 AbstractCode

Tasks

Datasets

Introduced in the Paper:

TellMeWhy