Temporal Convolution for Real-time Keyword Spotting on Mobile Devices

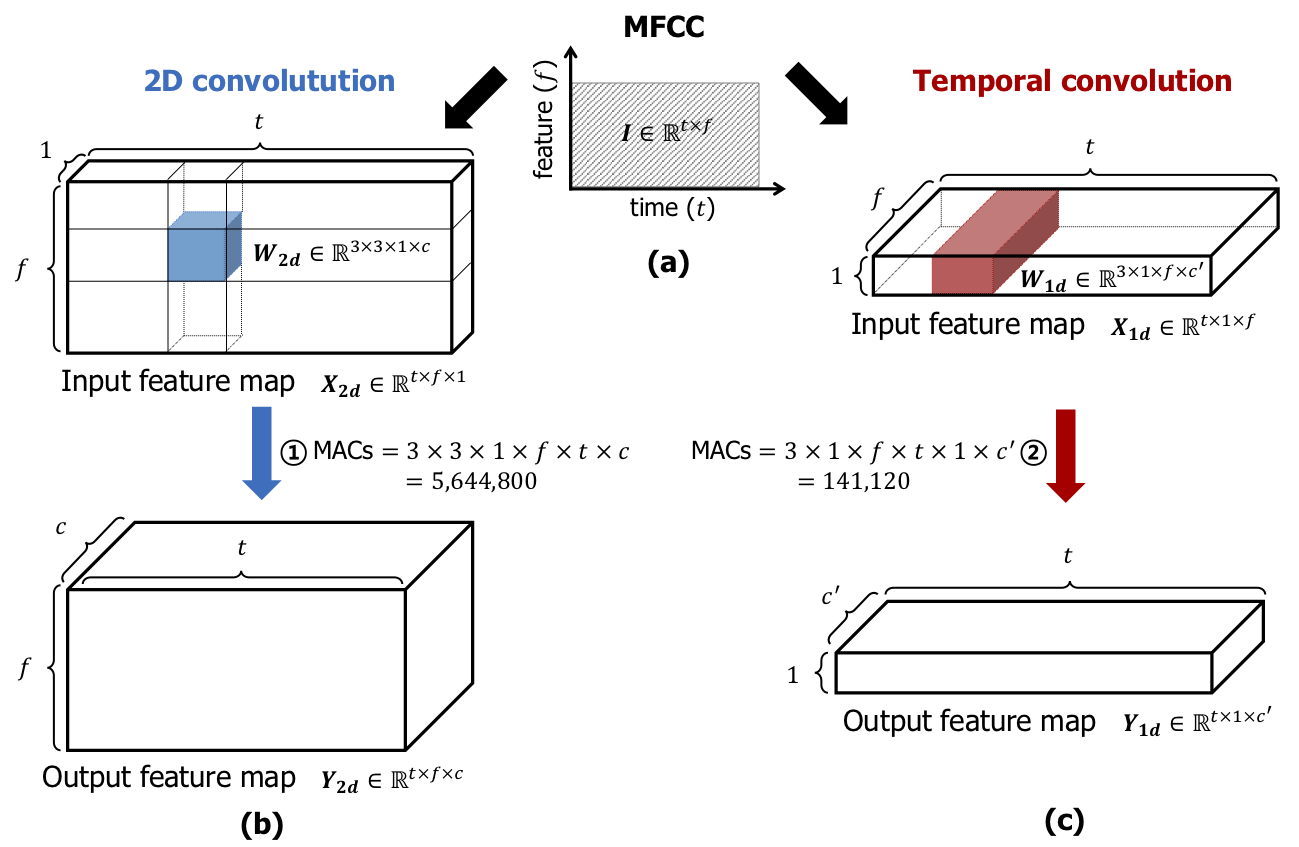

Keyword spotting (KWS) plays a critical role in enabling speech-based user interactions on smart devices. Recent developments in the field of deep learning have led to wide adoption of convolutional neural networks (CNNs) in KWS systems due to their exceptional accuracy and robustness. The main challenge faced by KWS systems is the trade-off between high accuracy and low latency. Unfortunately, there has been little quantitative analysis of the actual latency of KWS models on mobile devices. This is especially concerning since conventional convolution-based KWS approaches are known to require a large number of operations to attain an adequate level of performance. In this paper, we propose a temporal convolution for real-time KWS on mobile devices. Unlike most of the 2D convolution-based KWS approaches that require a deep architecture to fully capture both low- and high-frequency domains, we exploit temporal convolutions with a compact ResNet architecture. In Google Speech Command Dataset, we achieve more than \textbf{385x} speedup on Google Pixel 1 and surpass the accuracy compared to the state-of-the-art model. In addition, we release the implementation of the proposed and the baseline models including an end-to-end pipeline for training models and evaluating them on mobile devices.

PDF AbstractCode

Tasks

Datasets

Results from the Paper

Ranked #14 on

Keyword Spotting

on Google Speech Commands

(Google Speech Commands V2 12 metric)

Ranked #14 on

Keyword Spotting

on Google Speech Commands

(Google Speech Commands V2 12 metric)

Speech Commands

Speech Commands