The Atari Grand Challenge Dataset

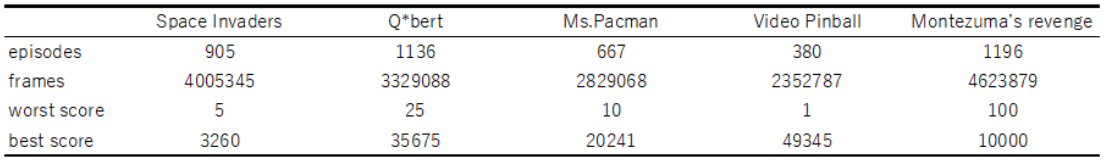

Recent progress in Reinforcement Learning (RL), fueled by its combination with Deep Learning, has enabled impressive results in learning to interact with complex virtual environments, yet real-world applications of RL are still scarce. A key limitation is data efficiency, with current state-of-the-art approaches requiring millions of training samples. A promising way to tackle this problem is to augment RL with learning from human demonstrations. However, human demonstration data is not yet readily available, which hinders progress in this direction. The present work addresses this problem as follows. We (i) collect and describe a large dataset of human Atari 2600 replays -- the largest and most diverse such dataset publicly released to date, (ii) illustrate an example use of this dataset by analyzing the relation between demonstration quality and imitation learning performance, and (iii) outline possible research directions that are opened up by our work.

Introduced in the Paper:

Atari Grand Challenge
