The Optimal Hard Threshold for Singular Values is 4/sqrt(3)

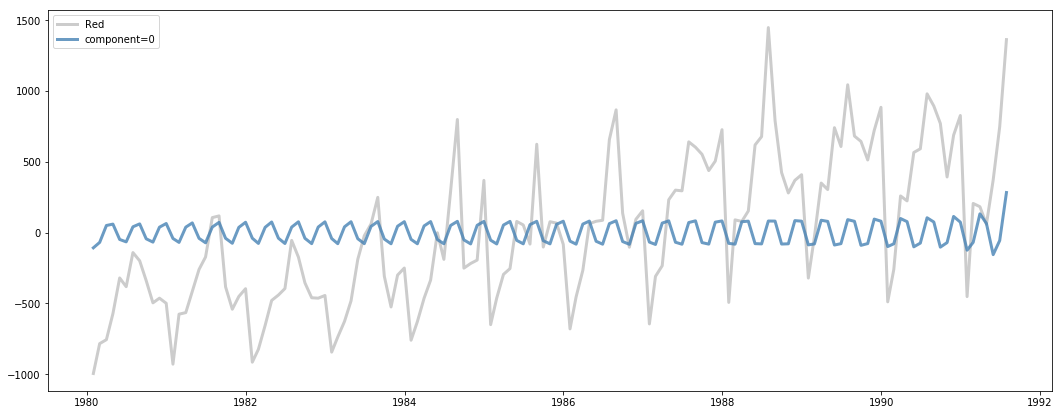

We consider recovery of low-rank matrices from noisy data by hard thresholding of singular values, where singular values below a prescribed threshold $\lambda$ are set to 0. We study the asymptotic MSE in a framework where the matrix size is large compared to the rank of the matrix to be recovered, and the signal-to-noise ratio of the low-rank piece stays constant. The AMSE-optimal choice of hard threshold, in the case of n-by-n matrix in noise level \sigma, is simply $(4/\sqrt{3}) \sqrt{n}\sigma \approx 2.309 \sqrt{n}\sigma$ when $\sigma$ is known, or simply $2.858\cdot y_{med}$ when $\sigma$ is unknown, where $y_{med}$ is the median empirical singular value. For nonsquare $m$ by $n$ matrices with $m \neq n$, these thresholding coefficients are replaced with different provided constants. In our asymptotic framework, this thresholding rule adapts to unknown rank and to unknown noise level in an optimal manner: it is always better than hard thresholding at any other value, no matter what the matrix is that we are trying to recover, and is always better than ideal Truncated SVD (TSVD), which truncates at the true rank of the low-rank matrix we are trying to recover. Hard thresholding at the recommended value to recover an n-by-n matrix of rank r guarantees an AMSE at most $3nr\sigma^2$. In comparison, the guarantee provided by TSVD is $5nr\sigma^2$, the guarantee provided by optimally tuned singular value soft thresholding is $6nr\sigma^2$, and the best guarantee achievable by any shrinkage of the data singular values is $2nr\sigma^2$. Empirical evidence shows that these AMSE properties of the $4/\sqrt{3}$ thresholding rule remain valid even for relatively small n, and that performance improvement over TSVD and other shrinkage rules is substantial, turning it into the practical hard threshold of choice.

PDF Abstract