Towards All-in-one Pre-training via Maximizing Multi-modal Mutual Information

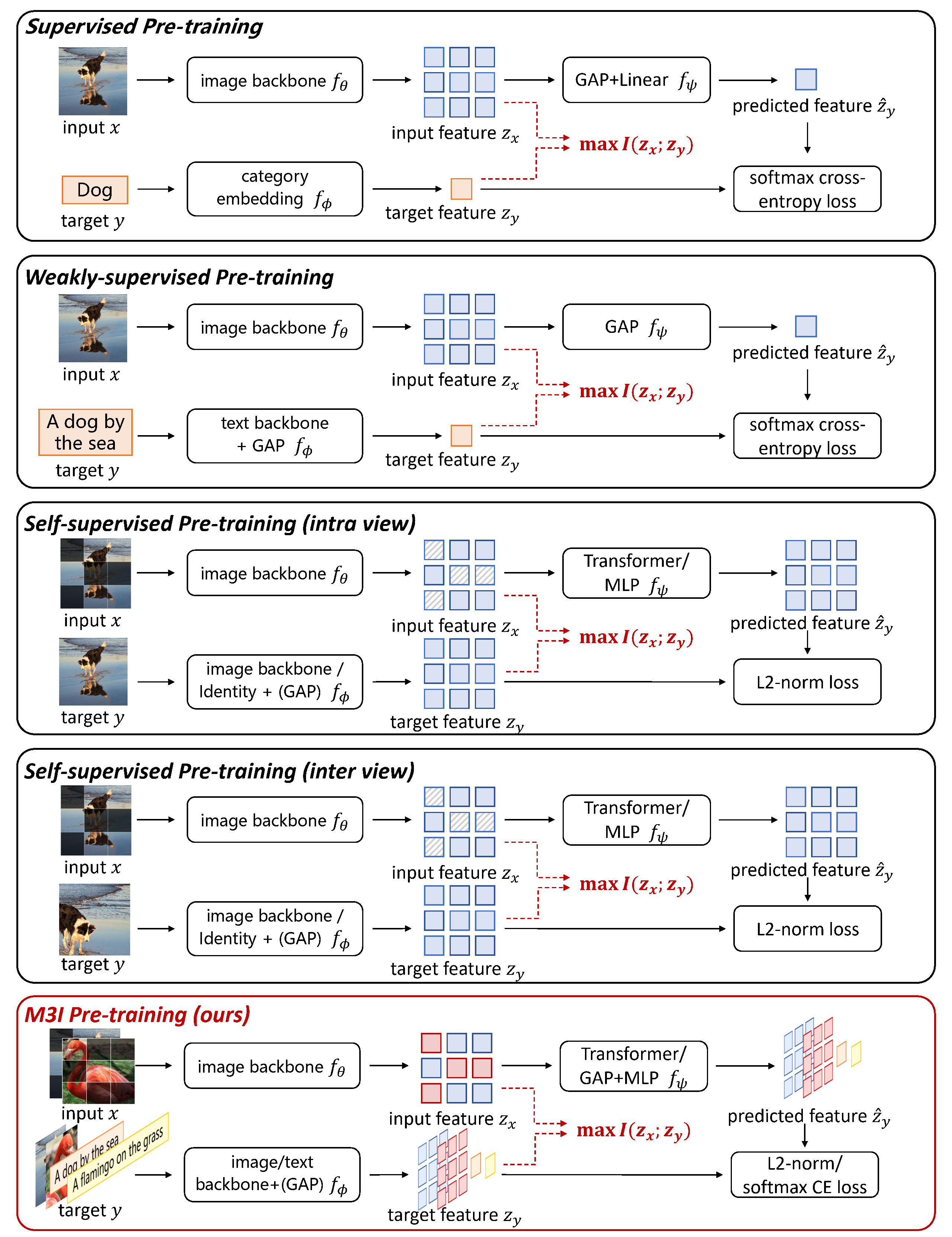

To effectively exploit the potential of large-scale models, various pre-training strategies supported by massive data from different sources have been proposed, including supervised pre-training, weakly-supervised pre-training, and self-supervised pre-training. It has been shown that combining multiple pre-training strategies and data from various modalities/sources can greatly boost the training of large-scale models. However, current works adopt a multi-stage pre-training system, where the complex pipeline may increase the uncertainty and instability of the pre-training. It is thus desirable that these strategies be integrated in a single-stage manner. In this paper, we first propose a general multi-modal mutual information formula as a unified optimization target and demonstrate that all existing approaches are special cases of our framework. Under this unified perspective, we propose an all-in-one single-stage pre-training approach, named Maximizing Multi-modal Mutual Information Pre-training (M3I Pre-training). Our approach achieves better performance than previous pre-training methods on various vision benchmarks, including ImageNet classification, COCO object detection, LVIS long-tailed object detection, and ADE20K semantic segmentation. Notably, we successfully pre-train a billion-parameter-scale image backbone and achieve state-of-the-art performance on various benchmarks. Code will be released at https://github.com/OpenGVLab/M3I-Pretraining.
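For readers unfamiliar with the quantity the abstract refers to, the standard textbook definition of mutual information between two discrete random variables is given here for reference. The symbols $X$ and $Y$ are generic placeholders and are an assumption of this note; they are not the paper's notation, and this is not the paper's multi-modal formula.

$$
I(X;Y) \;=\; \sum_{x,y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)} \;=\; H(X) - H(X \mid Y)
$$

Read this way, maximizing $I(X;Y)$ amounts to reducing the uncertainty that remains about $X$ once $Y$ is known.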

CVPR 2023

Results from the Paper

Ranked #2 on Semantic Segmentation on ADE20K (using extra training data)

Datasets: ImageNet, MS COCO, ADE20K, LVIS, LAION-400M