Towards Data-Free Domain Generalization

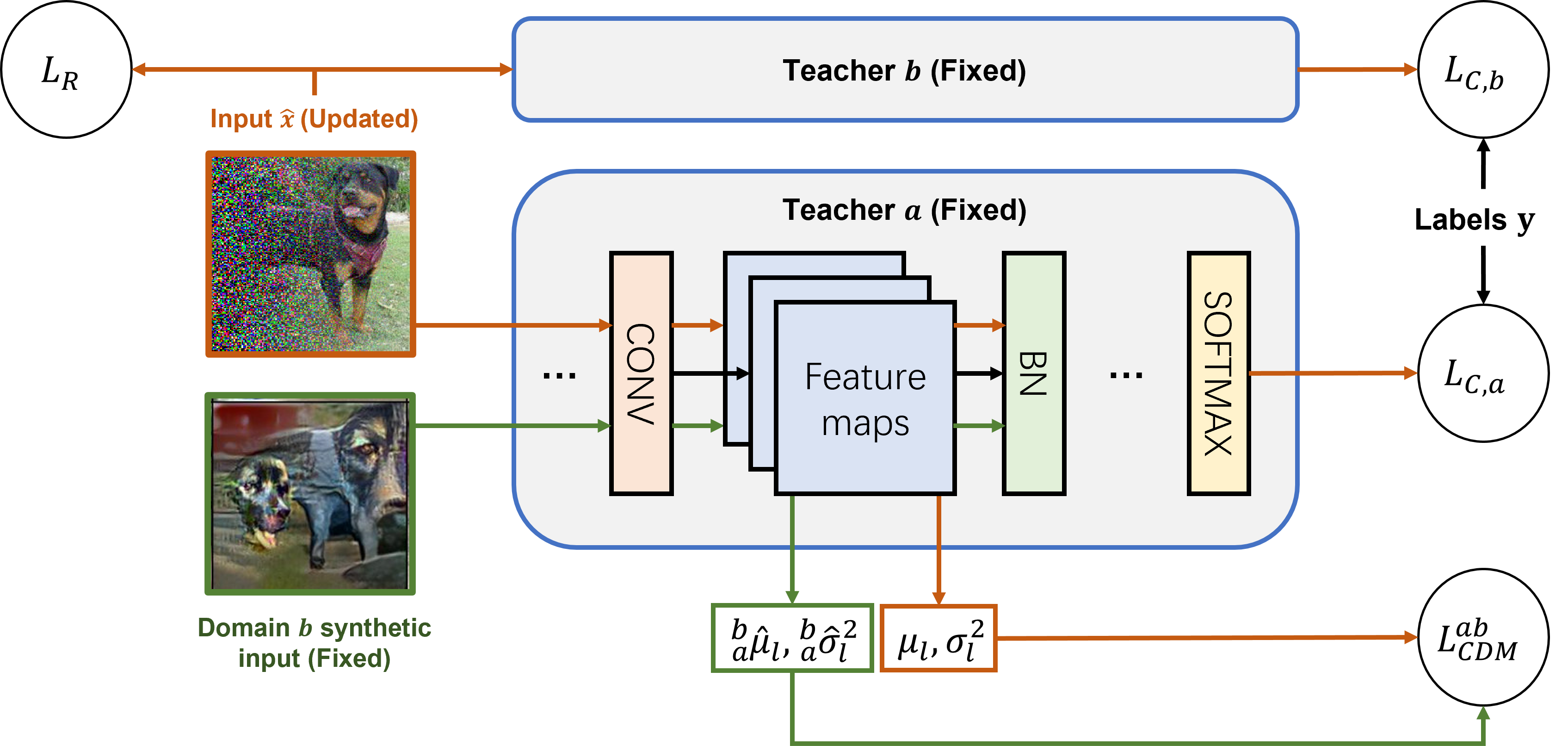

In this work, we investigate the unexplored intersection of domain generalization (DG) and data-free learning. In particular, we address the question: How can knowledge contained in models trained on different source domains be merged into a single model that generalizes well to unseen target domains, in the absence of source and target domain data? Machine learning models that can cope with domain shift are essential for real-world scenarios in which data distributions change frequently. Prior DG methods typically rely on source domain data, making them unsuitable for private, decentralized data. We define the novel problem of Data-Free Domain Generalization (DFDG), a practical setting in which models trained separately on the source domains are available instead of the original datasets, and investigate how to effectively solve the domain generalization problem in that case. We propose DEKAN, an approach that extracts and fuses domain-specific knowledge from the available teacher models into a student model that is robust to domain shift. Our empirical evaluation demonstrates the effectiveness of our method, which achieves the first state-of-the-art results in DFDG by significantly outperforming data-free knowledge distillation and ensemble baselines.
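To make the teacher-student setting concrete, the following is a minimal sketch of the kind of ensemble and distillation baseline the abstract compares against, not an implementation of DEKAN itself. It assumes each domain-specific teacher outputs class logits for the same input, averages the teachers' probabilities, and measures how far a student is from that average with a KL divergence. The function names, the logit values, and the choice of a simple unweighted average are all illustrative assumptions.

```python
import math

def softmax(logits):
    """Convert a list of logits into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_probs(teacher_logits):
    """Average the class probabilities predicted by several teachers."""
    probs = [softmax(logits) for logits in teacher_logits]
    n = len(probs)
    return [sum(p[c] for p in probs) / n for c in range(len(probs[0]))]

def kl_divergence(target, pred, eps=1e-12):
    """KL(target || pred), usable as a distillation loss for the student."""
    return sum(t * math.log((t + eps) / (p + eps)) for t, p in zip(target, pred))

# Hypothetical logits from three domain-specific teachers on one input
# (in a data-free setting this input would itself be synthetic).
teacher_logits = [[2.0, 0.5, -1.0], [1.5, 1.0, -0.5], [2.5, 0.0, -1.5]]
# An untrained student that predicts a uniform distribution.
student_logits = [0.0, 0.0, 0.0]

target = ensemble_probs(teacher_logits)
loss = kl_divergence(target, softmax(student_logits))
```

Minimizing `loss` with respect to the student's parameters would pull the student towards the averaged teacher prediction. How the inputs are synthesized and how the teachers' knowledge is weighted are exactly the parts this sketch leaves open.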


MNIST

SVHN

PACS

USPS
