Towards General and Efficient Active Learning

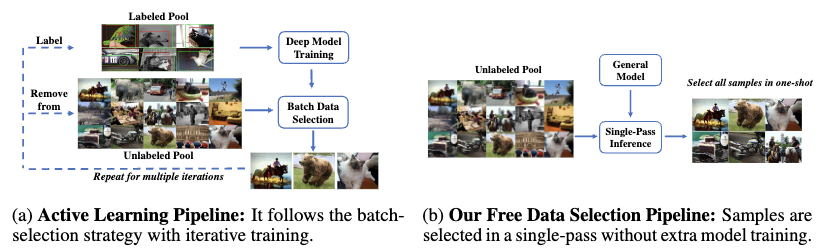

Active learning selects the most informative samples to make the best use of a limited annotation budget. Existing work follows a cumbersome pipeline that repeats time-consuming model training and batch data selection multiple times. In this paper, we challenge this status quo by proposing a general and efficient active learning (GEAL) method built on a new pipeline. Using a publicly available pretrained model, our method selects data from different datasets with a single-pass inference of the same model, without extra training or supervision. To capture subtle local information, we propose knowledge clusters extracted from intermediate features. Instead of the troublesome batch selection strategy, all data samples are selected in one shot through distance-based sampling at the fine-grained knowledge cluster level. The whole process is hundreds of times faster than prior work. Extensive experiments verify the effectiveness of our method on object detection, image classification, and semantic segmentation. Our code is publicly available at https://github.com/yichen928/GEAL_active_learning.
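To make the one-shot, distance-based selection at the knowledge cluster level more concrete, here is a minimal sketch. It is not the GEAL implementation: it assumes that cluster-level features have already been extracted from a pretrained model, and it swaps in a plain greedy farthest-point rule as a stand-in for the paper's sampling scheme. The function name `select_images` and its arguments are hypothetical.

```python
import numpy as np

def select_images(cluster_feats, image_ids, budget, seed=0):
    """Greedy farthest-point selection over cluster-level features.

    Illustrative stand-in only, not the authors' sampling rule.
    cluster_feats: (N, D) array, one row per knowledge cluster (assumed given).
    image_ids:     (N,) array mapping each cluster row to its source image.
    budget:        number of distinct images to select.
    """
    rng = np.random.default_rng(seed)
    feats = np.asarray(cluster_feats, dtype=float)
    image_ids = np.asarray(image_ids)
    budget = min(budget, len(np.unique(image_ids)))
    if budget <= 0:
        return []

    def dist_to_image(img):
        # Distance from every cluster to the nearest cluster of image `img`.
        chosen = feats[image_ids == img]
        diffs = feats[:, None, :] - chosen[None, :, :]
        return np.linalg.norm(diffs, axis=-1).min(axis=1)

    # Start from the image that owns a randomly chosen cluster.
    first = image_ids[int(rng.integers(len(feats)))]
    selected = [int(first)]
    min_dist = dist_to_image(first)
    min_dist[image_ids == first] = -1.0  # never pick this image again

    while len(selected) < budget:
        # Pick the image owning the cluster farthest from everything selected.
        img = image_ids[int(np.argmax(min_dist))]
        selected.append(int(img))
        min_dist = np.minimum(min_dist, dist_to_image(img))
        min_dist[image_ids == img] = -1.0
    return selected
```

Because selection needs only features from a single forward pass, no model is retrained between picks, which is the property the abstract credits for the speedup. The pairwise distance computation here is deliberately simple and would need batching for large datasets.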

CIFAR-10

Cityscapes

ssd
