Towards General Purpose Geometry-Preserving Single-View Depth Estimation

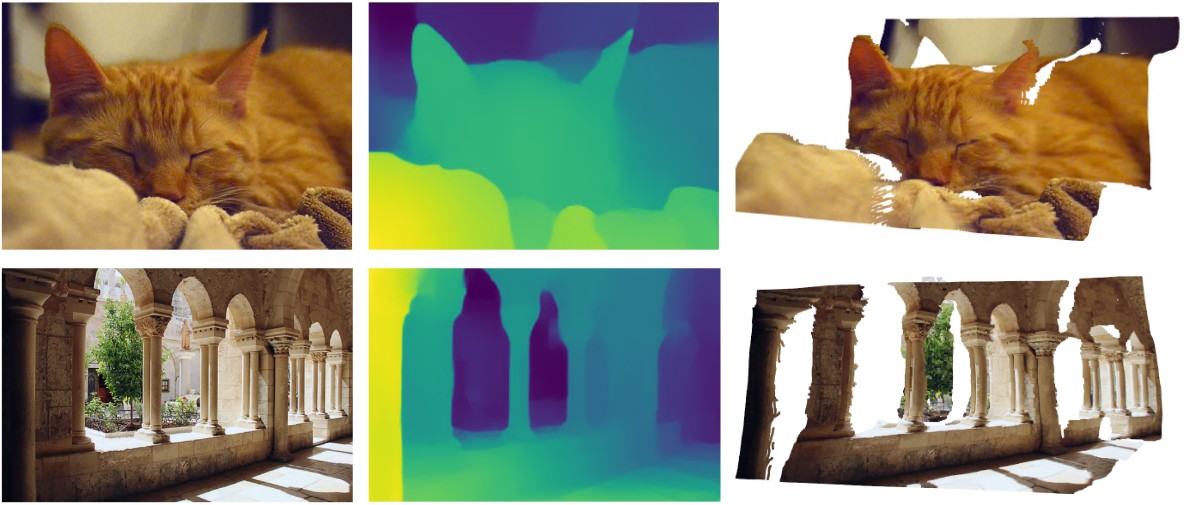

Single-view depth estimation (SVDE) plays a crucial role in scene understanding for AR applications, 3D modeling, and robotics, providing the geometry of a scene based on a single image. Recent works have shown that a successful solution strongly relies on the diversity and volume of training data. This data can be sourced from stereo movies and photos. However, they do not provide geometrically complete depth maps (as disparities contain unknown shift value). Therefore, existing models trained on this data are not able to recover correct 3D representations. Our work shows that a model trained on this data along with conventional datasets can gain accuracy while predicting correct scene geometry. Surprisingly, only a small portion of geometrically correct depth maps are required to train a model that performs equally to a model trained on the full geometrically correct dataset. After that, we train computationally efficient models on a mixture of datasets using the proposed method. Through quantitative comparison on completely unseen datasets and qualitative comparison of 3D point clouds, we show that our model defines the new state of the art in general-purpose SVDE.

PDF Abstract

ImageNet

ImageNet

NYUv2

NYUv2

TUM RGB-D

TUM RGB-D

MegaDepth

MegaDepth

ETH3D

ETH3D

ReDWeb

ReDWeb