Towards Grand Unification of Object Tracking

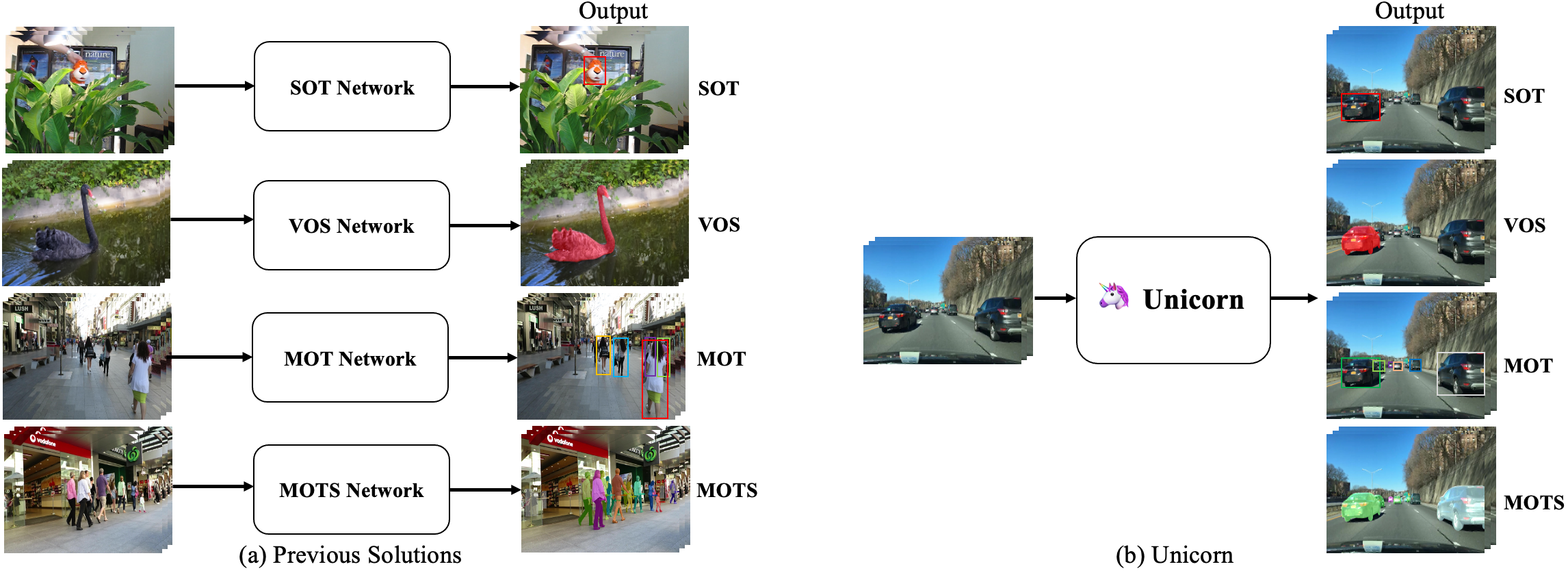

We present a unified method, termed Unicorn, that can simultaneously solve four tracking problems (SOT, MOT, VOS, MOTS) with a single network using the same model parameters. Due to the fragmented definitions of the object tracking problem itself, most existing trackers are developed to address a single or part of tasks and overspecialize on the characteristics of specific tasks. By contrast, Unicorn provides a unified solution, adopting the same input, backbone, embedding, and head across all tracking tasks. For the first time, we accomplish the great unification of the tracking network architecture and learning paradigm. Unicorn performs on-par or better than its task-specific counterparts in 8 tracking datasets, including LaSOT, TrackingNet, MOT17, BDD100K, DAVIS16-17, MOTS20, and BDD100K MOTS. We believe that Unicorn will serve as a solid step towards the general vision model. Code is available at https://github.com/MasterBin-IIAU/Unicorn.

PDF Abstract

BDD100K

BDD100K

MOT17

MOT17

LaSOT

LaSOT

TrackingNet

TrackingNet

MOTChallenge

MOTChallenge