Towards Musically Meaningful Explanations Using Source Separation

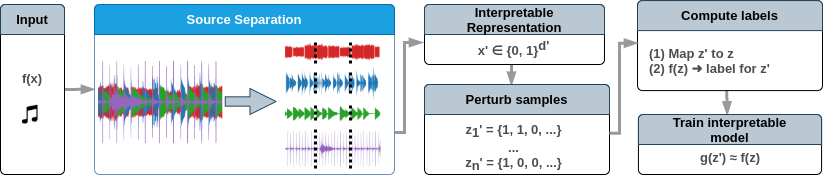

Deep neural networks (DNNs) have been applied successfully to a wide variety of music information retrieval (MIR) tasks. Such models are usually considered "black boxes", meaning that their predictions are not interpretable. Prior work on explainable models in MIR has generally used image processing tools to produce explanations for DNN predictions, but these explanations are not necessarily musically meaningful, nor can they be listened to (which, arguably, is important in music). We propose audioLIME, a method based on Local Interpretable Model-agnostic Explanation (LIME), extended by a musical definition of locality. LIME learns locally linear models on perturbations of the example that we want to explain. Instead of extracting components of the spectrogram using image segmentation as part of the LIME pipeline, we propose using source separation. The perturbations are created by switching sources on and off, which makes our explanations listenable. We first validate audioLIME on a classifier that was deliberately trained to confuse the true target with a spurious signal, and show that this confusion can easily be detected using our method. We then show that audioLIME passes a sanity check that many available explanation methods fail. Finally, we demonstrate the general applicability of our (model-agnostic) method on a third-party music tagger.
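The core idea described above (perturb an input by switching separated sources on and off, then fit a local linear model to the black-box predictions) can be illustrated with a small sketch. This is not the authors' implementation: the function name `explain_with_sources`, the toy sinusoidal "sources", the toy `predict_fn`, the exponential proximity kernel, and all parameter values are assumptions chosen for illustration. A real pipeline would obtain the sources from a source separation model and query an actual tagger.

```python
import numpy as np


def explain_with_sources(sources, predict_fn, n_samples=200,
                         kernel_width=0.25, seed=0):
    """Fit a local linear surrogate over on/off source masks (LIME-style sketch).

    sources:    array of shape (n_sources, n_audio_samples), assumed to sum
                to the mixture being explained.
    predict_fn: hypothetical black-box model mapping a 1-D mixture to a score.
    Returns one weight per source; larger means more influence on the score.
    """
    rng = np.random.default_rng(seed)
    n_sources = sources.shape[0]

    # Binary interpretable representation: 1 = source on, 0 = source off.
    masks = rng.integers(0, 2, size=(n_samples, n_sources))
    masks[0] = 1  # always include the unperturbed mixture

    # Each perturbed input is the sum of the switched-on sources,
    # so every perturbation is itself a listenable audio signal.
    mixtures = masks @ sources
    scores = np.array([predict_fn(m) for m in mixtures])

    # Proximity weights: perturbations closer to the full mixture count more.
    # (Assumed kernel; other distance measures are possible.)
    distances = 1.0 - masks.mean(axis=1)
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)

    # Weighted least squares with an intercept column.
    X = np.hstack([masks, np.ones((n_samples, 1))])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], scores * sw, rcond=None)
    return coef[:-1]  # drop the intercept


# Toy usage: three orthogonal sinusoids stand in for separated sources.
t = np.linspace(0.0, 1.0, 8000, endpoint=False)
toy_sources = np.stack([np.sin(2 * np.pi * f * t) for f in (220, 440, 880)])

# Toy "classifier" that only responds to the second source.
target = toy_sources[1]
toy_predict = lambda x: float(np.dot(x, target) / np.dot(target, target))

importance = explain_with_sources(toy_sources, toy_predict)
print(importance)
```

In this toy setting the surrogate should assign nearly all of the weight to the second source, because the stand-in classifier ignores the others. The same inspection, applied to a real model, is what would reveal reliance on a spurious source.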


MUSDB18


Slakh2100
