Towards Robust and Reproducible Active Learning Using Neural Networks

Active learning (AL) is a promising ML paradigm that can sift through large pools of unlabeled data and reduce annotation cost in domains where labeling is prohibitively expensive. Recently proposed neural-network-based AL methods use different heuristics to accomplish this goal. In this study, we demonstrate that, under identical experimental settings, different types of AL algorithms (uncertainty-based, diversity-based, and committee-based) produce inconsistent gains over the random sampling baseline. Through a variety of experiments that control for sources of stochasticity, we show that the variance in performance metrics achieved by AL algorithms can lead to results that are inconsistent with those previously reported. We also find that, under strong regularization, AL methods show marginal or no advantage over the random sampling baseline across a variety of experimental conditions. Finally, we conclude with a set of recommendations on how to assess results obtained with a new AL algorithm so that they are reproducible and robust to changes in experimental conditions. We believe our findings and recommendations will help advance reproducible research in AL using neural networks. To facilitate AL evaluations, we open-source our code at https://github.com/PrateekMunjal/TorchAL
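To make the comparison described in the abstract concrete, the following is a minimal sketch of an uncertainty-based acquisition step next to a seeded random sampling baseline, plus a helper that reports mean and standard deviation over repeated runs. It is not taken from the TorchAL repository; the function names (`uncertainty_acquire`, `random_acquire`, `summarize`) and the toy probabilities are assumptions made for illustration, and predictive entropy stands in for whichever uncertainty heuristic a given method uses.

```python
import math
import random
import statistics


def entropy(probs):
    """Shannon entropy of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def uncertainty_acquire(pool_probs, budget):
    """Pick the `budget` pool indices with the highest predictive entropy."""
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:budget]


def random_acquire(pool_size, budget, seed):
    """Random sampling baseline, seeded so a run can be repeated exactly."""
    rng = random.Random(seed)
    return rng.sample(range(pool_size), budget)


def summarize(accuracies):
    """Mean and sample standard deviation over repeated runs (needs >= 2 runs)."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# Hypothetical softmax outputs for four unlabeled pool samples.
pool_probs = [
    [0.98, 0.01, 0.01],
    [0.34, 0.33, 0.33],
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
]
picked = uncertainty_acquire(pool_probs, budget=2)
baseline = random_acquire(len(pool_probs), budget=2, seed=0)
```

Reporting `summarize(...)` over several seeds for both strategies, rather than a single run, is one simple way to check whether a gain over random sampling exceeds run-to-run variance.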

CVPR 2022

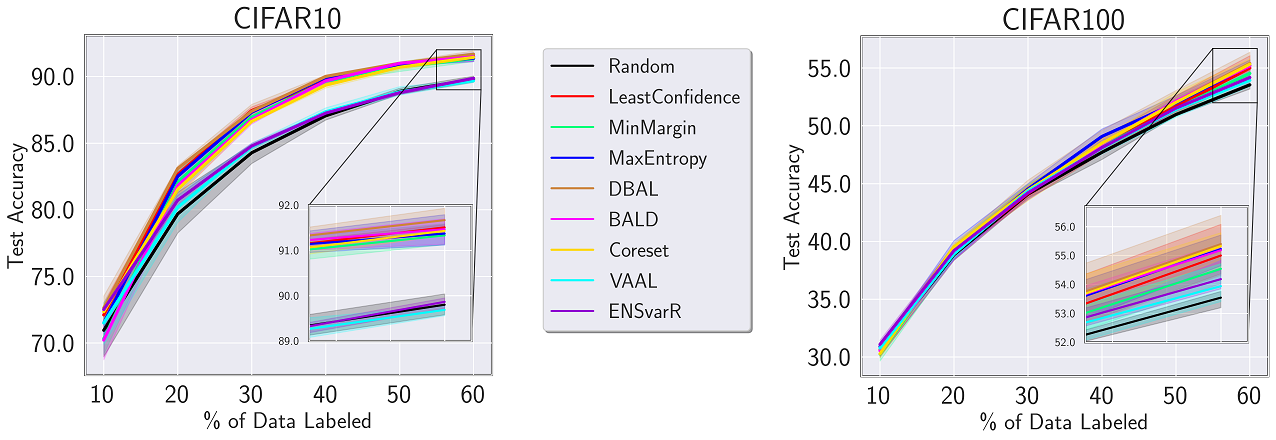

Datasets: CIFAR-10, ImageNet, CIFAR-100