Towards Smooth Video Composition

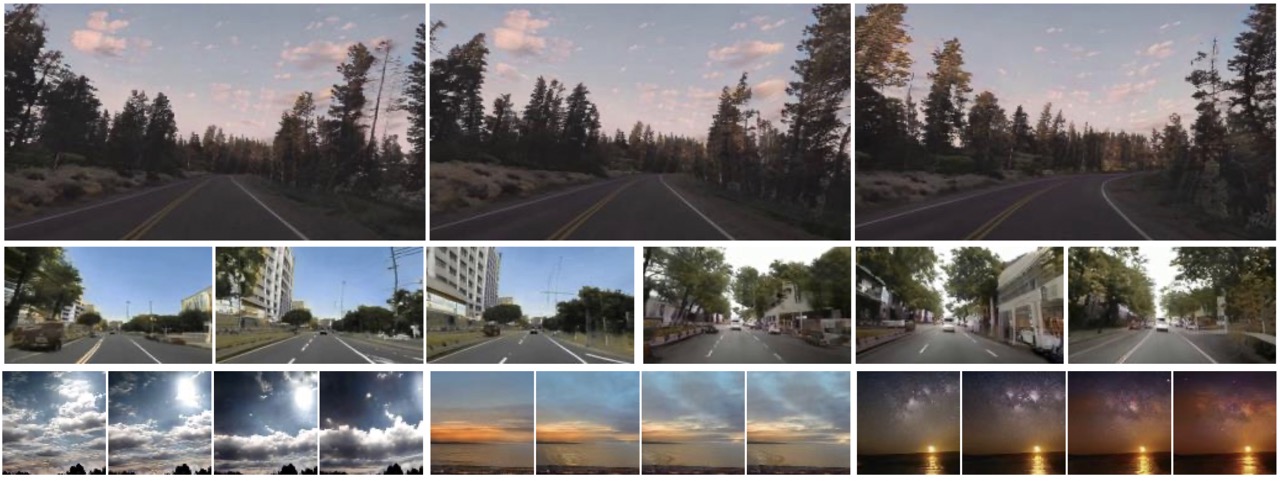

Video generation requires synthesizing consistent and persistent frames with dynamic content over time. This work investigates modeling the temporal relations for composing video with arbitrary length, from a few frames to even infinite, using generative adversarial networks (GANs). First, towards composing adjacent frames, we show that the alias-free operation for single image generation, together with adequately pre-learned knowledge, brings a smooth frame transition without compromising the per-frame quality. Second, by incorporating the temporal shift module (TSM), originally designed for video understanding, into the discriminator, we manage to advance the generator in synthesizing more consistent dynamics. Third, we develop a novel B-Spline based motion representation to ensure temporal smoothness to achieve infinite-length video generation. It can go beyond the frame number used in training. A low-rank temporal modulation is also proposed to alleviate repeating contents for long video generation. We evaluate our approach on various datasets and show substantial improvements over video generation baselines. Code and models will be publicly available at https://genforce.github.io/StyleSV.

PDF Abstract