Training Deep AutoEncoders for Collaborative Filtering

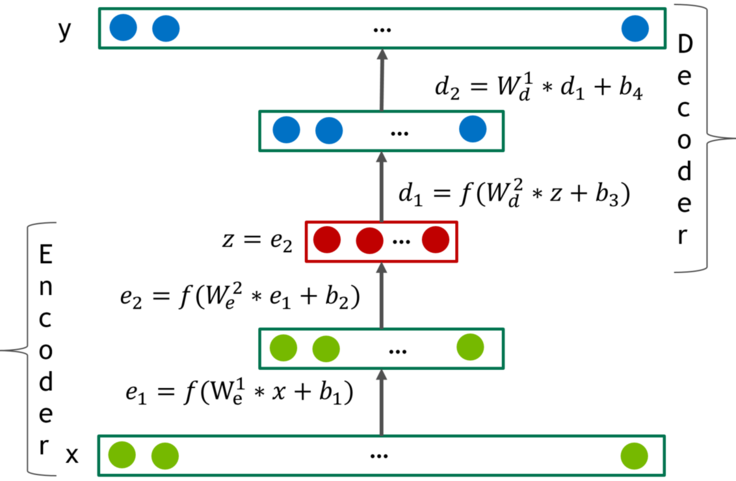

This paper proposes a novel model for the rating prediction task in recommender systems which significantly outperforms previous state-of-the-art models on a time-split Netflix data set. Our model is based on a deep autoencoder with 6 layers and is trained end-to-end without any layer-wise pre-training. We empirically demonstrate that: a) deep autoencoder models generalize much better than shallow ones, b) non-linear activation functions with negative parts are crucial for training deep models, and c) heavy use of regularization techniques such as dropout is necessary to prevent overfitting. We also propose a new training algorithm based on iterative output re-feeding to overcome the natural sparseness of collaborative filtering. The new algorithm significantly speeds up training and improves model performance. Our code is available at https://github.com/NVIDIA/DeepRecommender
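As a rough illustration of the output re-feeding idea, the sketch below runs one sparse pass and one re-fed dense pass through a toy autoencoder in plain Python. Several things here are assumptions rather than details stated in the abstract: the layer sizes are a toy configuration (not the 6-layer model), SELU is picked as one example of an activation with a negative part, zeros are taken to mean "unrated", the loss is computed only over observed ratings, and all function names are hypothetical. Dropout and the gradient updates are omitted, so this shows only which inputs and targets each pass would use.

```python
import math
import random

# SELU constants; SELU is used here as one example of an activation
# with a non-zero negative part (an assumption, not stated in the abstract).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(values):
    """Apply SELU element-wise to a list of floats."""
    return [
        SELU_SCALE * (v if v > 0 else SELU_ALPHA * (math.exp(v) - 1.0))
        for v in values
    ]


def dense(values, weights, bias):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [
        sum(w * v for w, v in zip(row, values)) + b
        for row, b in zip(weights, bias)
    ]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases for one layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(values, layers):
    """Run the input through every layer, applying SELU after each one."""
    for weights, bias in layers:
        values = selu(dense(values, weights, bias))
    return values


def mse(pred, target, observed_only):
    """Mean squared error; optionally skip entries whose target is 0 (unrated)."""
    pairs = [(p, t) for p, t in zip(pred, target)
             if not observed_only or t != 0]
    if not pairs:
        return 0.0
    return sum((p - t) ** 2 for p, t in pairs) / len(pairs)


rng = random.Random(0)
n_items = 8
sizes = [n_items, 4, 2, 4, n_items]  # toy encoder/decoder widths (hypothetical)
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

# One user's sparse rating vector; 0 marks an item the user has not rated.
x = [5.0, 0.0, 3.0, 0.0, 0.0, 1.0, 0.0, 4.0]

# Pass 1: sparse input, loss only on the ratings that were observed.
y = forward(x, layers)
sparse_loss = mse(y, x, observed_only=True)

# Pass 2 (re-feeding): the dense output becomes a new training example,
# used as both the input and the target, so every entry contributes.
y_refed = forward(y, layers)
refeed_loss = mse(y_refed, y, observed_only=False)

print(f"sparse-pass loss: {sparse_loss:.4f}")
print(f"re-fed pass loss: {refeed_loss:.4f}")
```

In a real training loop each of the two losses would be followed by a backward pass and an optimizer step; the point of the second pass is that its input is dense, unlike the original rating vector.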

Netflix Prize
