Transformation Driven Visual Reasoning

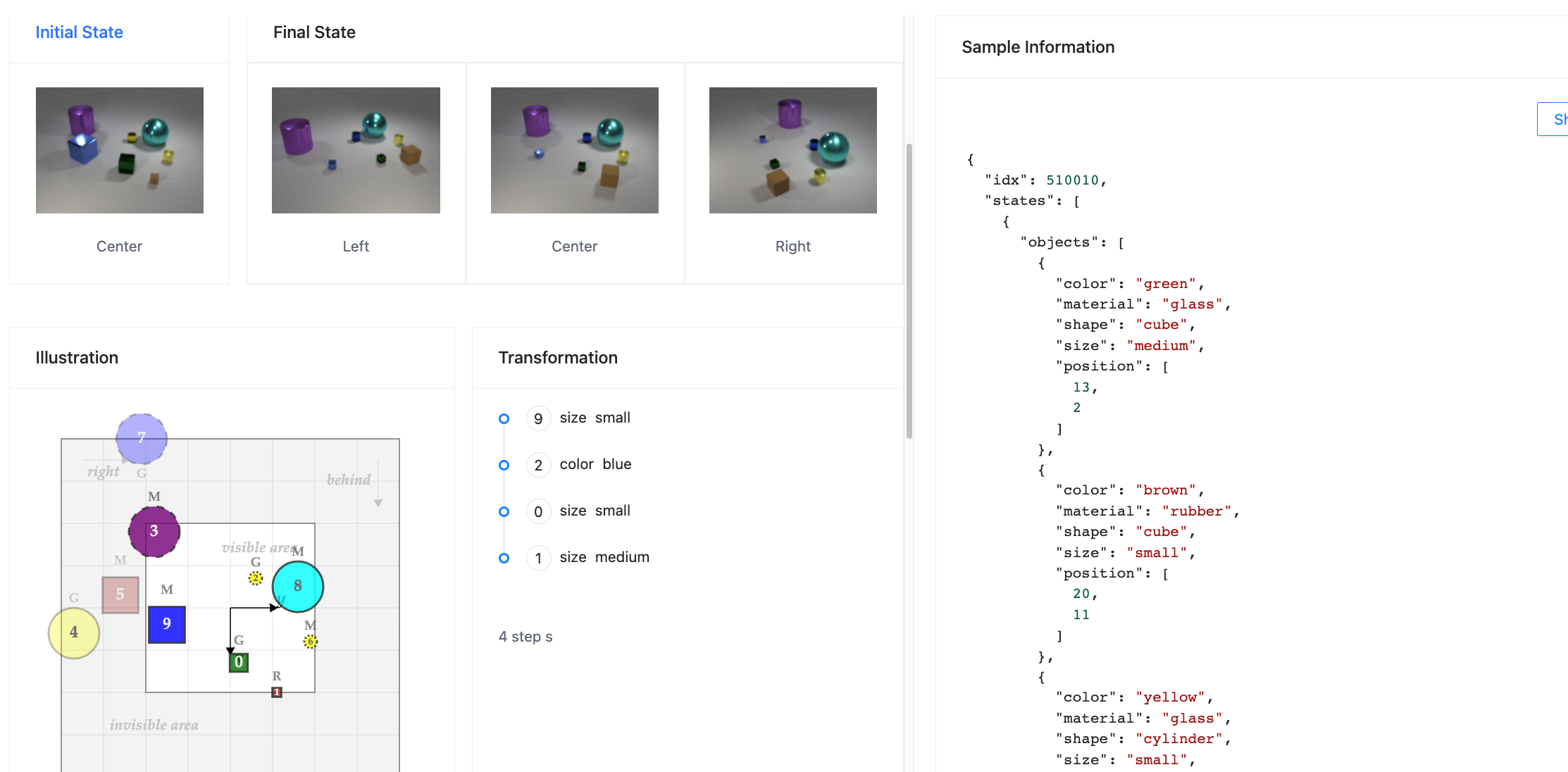

This paper defines a new visual reasoning paradigm by introducing an important factor, i.e. transformation. The motivation is that most existing visual reasoning tasks, such as CLEVR in VQA, are defined solely to test how well a machine understands concepts and relations within a static setting, such as a single image. We argue that this kind of **state driven visual reasoning** is limited in reflecting whether the machine can infer the dynamics between different states, an ability that Piaget's theory holds to be as important to human cognition as state-level reasoning. To tackle this problem, we propose a novel **transformation driven visual reasoning** (TVR) task. Given both the initial and final states, the goal is to infer the corresponding single-step or multi-step transformation, represented as a triplet (object, attribute, value) or a sequence of triplets, respectively. Following this definition, a new dataset, TRANCE, is constructed on the basis of CLEVR, with three levels of settings: Basic (single-step transformation), Event (multi-step transformation), and View (multi-step transformation with variant views). Experimental results show that state-of-the-art visual reasoning models perform well on Basic but are still far from human-level intelligence on Event and View. We believe the proposed paradigm will boost the development of machine visual reasoning. More advanced methods and real data need to be investigated in this direction. Resources for TVR are available at https://hongxin2019.github.io/TVR.
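The triplet representation described in the abstract can be illustrated with a small symbolic sketch. Everything here is an assumption made for illustration: the `State` and `Triplet` types, the function names, the object ids, and the attribute values are hypothetical and are not the TRANCE data format. In the actual task the states are images, so a model has to infer the triplets from pixels rather than from attribute dictionaries.

```python
from typing import Dict, List, Tuple

# Hypothetical representations for illustration; not the TRANCE data format.
State = Dict[str, Dict[str, str]]   # object id -> {attribute: value}
Triplet = Tuple[str, str, str]      # (object, attribute, value)


def apply_transformations(state: State, steps: List[Triplet]) -> State:
    """Return a new state after applying each (object, attribute, value) in order."""
    result = {obj: dict(attrs) for obj, attrs in state.items()}
    for obj, attribute, value in steps:
        result[obj][attribute] = value
    return result


def diff_states(initial: State, final: State) -> List[Triplet]:
    """Recover one triplet per attribute that differs between the two states."""
    return [
        (obj, attribute, final[obj][attribute])
        for obj in sorted(initial)
        for attribute in sorted(initial[obj])
        if initial[obj][attribute] != final[obj][attribute]
    ]


initial = {
    "obj0": {"color": "red", "size": "small"},
    "obj1": {"color": "blue", "size": "large"},
}
# A multi-step transformation is a sequence of triplets.
steps = [("obj0", "color", "green"), ("obj1", "size", "small")]
final = apply_transformations(initial, steps)
recovered = diff_states(initial, final)
```

A single-step transformation is the special case where `steps` holds one triplet. Note that diffing two states recovers which attributes changed but not the order in which the steps were applied, and it treats every value as absolute; both are simplifications of this sketch.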

CVPR 2021

TRANCE

CLEVR

GQA

VCR

NLVR

CATER
