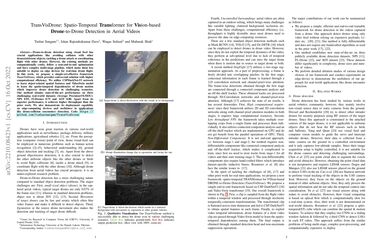

TransVisDrone: Spatio-Temporal Transformer for Vision-based Drone-to-Drone Detection in Aerial Videos

Drone-to-drone detection from a visual feed has crucial applications, such as detecting drone collisions, detecting drone attacks, and coordinating flight with other drones. However, existing methods are computationally costly, are not optimized end to end, and rely on complex multi-stage pipelines, making them less suitable for real-time deployment on edge devices. In this work, we propose a simple yet effective framework, *TransVisDrone*, that provides an end-to-end solution with higher computational efficiency. We use the CSPDarkNet-53 network to learn object-related spatial features and the VideoSwin model to improve drone detection in challenging scenarios by learning spatio-temporal dependencies of drone motion. Our method achieves state-of-the-art performance on three challenging real-world datasets (Average Precision at 0.5 IoU): NPS 0.95, FLDrones 0.75, and AOT 0.80, with higher throughput than previous methods. We also demonstrate its deployment capability on edge devices and its usefulness in detecting drone collisions (encounters). Project: https://tusharsangam.github.io/TransVisDrone-project-page/
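
The Average Precision figures are reported at an IoU threshold of 0.5, which conventionally means a predicted box counts as a correct detection when its intersection-over-union with a ground-truth box is at least 0.5. The following is a minimal sketch of that criterion only, not the authors' evaluation code; the function names `iou` and `is_true_positive` and the `(x1, y1, x2, y2)` corner box format are assumptions made for illustration.

```python
from typing import Tuple

# Hypothetical box format for this sketch: (x1, y1, x2, y2) with x1 < x2, y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Assumed matching rule: a prediction is correct if IoU >= threshold."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    pred = (0.0, 0.0, 10.0, 6.0)
    truth = (0.0, 0.0, 10.0, 10.0)
    print(iou(pred, truth), is_true_positive(pred, truth))
```

Small, distant drones occupy very few pixels, so a shift of a pixel or two can move a box across the 0.5 threshold; this is one reason the metric is demanding on such datasets.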
