Unified Perceptual Parsing for Scene Understanding

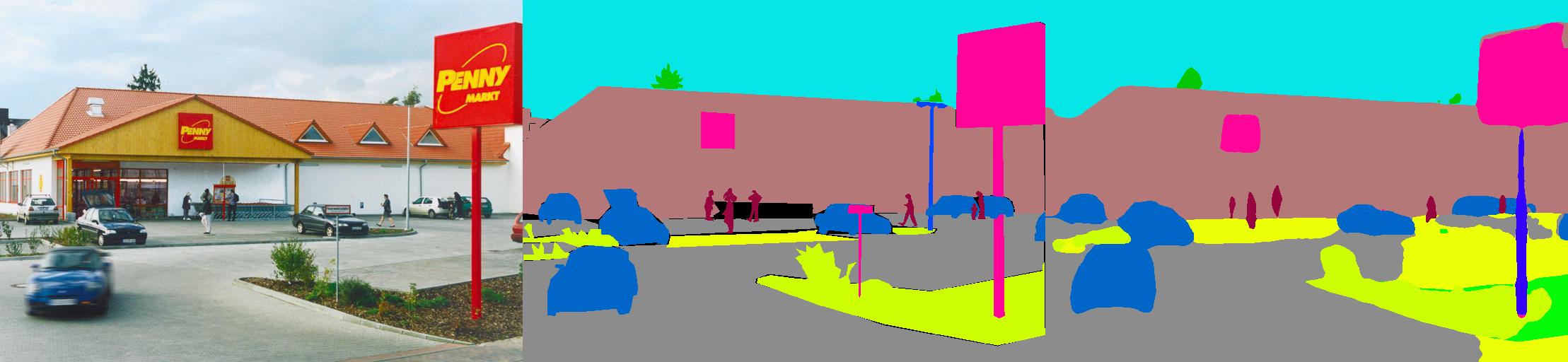

Humans recognize the visual world at multiple levels: we effortlessly categorize scenes and detect the objects inside them, while also identifying the textures and surfaces of those objects along with their compositional parts. In this paper, we study a new task called Unified Perceptual Parsing, which requires machine vision systems to recognize as many visual concepts as possible from a given image. We develop a multi-task framework called UPerNet, together with a training strategy, to learn from heterogeneous image annotations. We benchmark our framework on Unified Perceptual Parsing and show that it can effectively segment a wide range of concepts from images. The trained networks are further applied to discover visual knowledge in natural scenes. Models are available at https://github.com/CSAILVision/unifiedparsing.
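To make the idea of learning from heterogeneous annotations concrete, the following is a minimal sketch of one plausible reading, not the authors' implementation: each training step draws a sample from one annotation source, and the loss is summed only over the tasks that source actually labels. The task names, source names, sizes, and both helper functions are hypothetical and chosen purely for illustration.

```python
import random

# Hypothetical task names, used only for illustration.
TASKS = ("scene", "object", "part", "material", "texture")

# Hypothetical annotation sources: the sizes and task coverage are made up.
SOURCES = {
    "source_a": {"size": 3000, "tasks": {"scene", "object", "part"}},
    "source_b": {"size": 1000, "tasks": {"texture"}},
}


def sample_source(sources, rng):
    """Pick one source with probability proportional to its size."""
    names = list(sources)
    weights = [sources[name]["size"] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def multitask_loss(per_task_losses, available_tasks):
    """Sum the losses of annotated tasks; unannotated tasks contribute nothing."""
    return sum(
        loss for task, loss in per_task_losses.items() if task in available_tasks
    )


if __name__ == "__main__":
    rng = random.Random(0)
    source = sample_source(SOURCES, rng)
    # Stand-in per-task loss values; a real model would compute these.
    losses = {task: 1.0 for task in TASKS}
    total = multitask_loss(losses, SOURCES[source]["tasks"])
    print(source, total)
```

Masking the loss this way means a sample without, say, texture labels neither rewards nor penalizes the texture prediction, which is one simple way to combine datasets whose label sets only partly overlap.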

ECCV 2018

ADE20K

DTD

Places205

PASCAL-Part
