UniSumm and SummZoo: Unified Model and Diverse Benchmark for Few-Shot Summarization

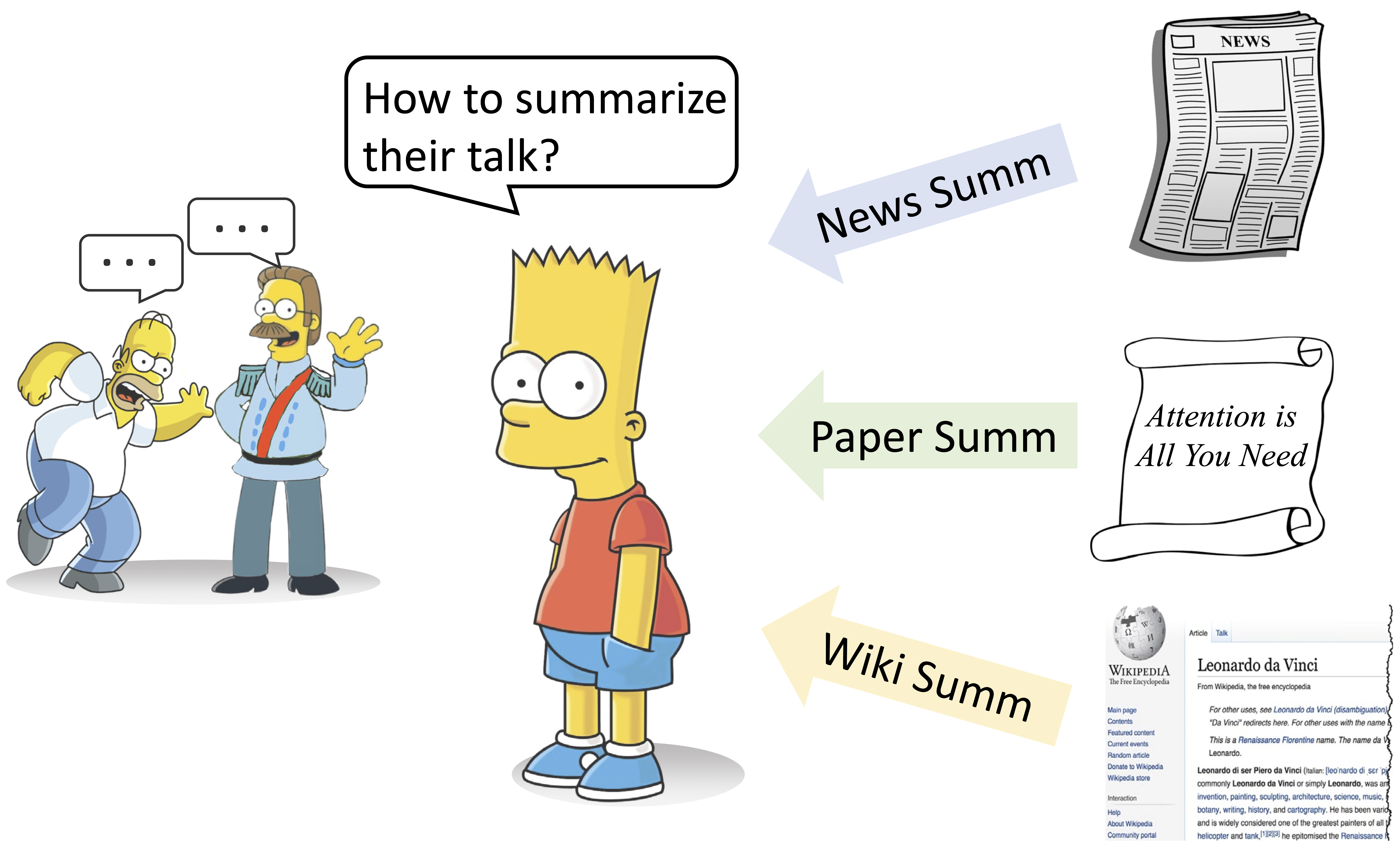

The high annotation costs and diverse demands of various summarization tasks motivate the development of few-shot summarization. However, despite the emergence of many summarization tasks and datasets, the current training paradigm for few-shot summarization systems ignores potentially shareable knowledge in heterogeneous datasets. To this end, we propose UniSumm, a unified few-shot summarization model that is pre-trained on multiple summarization tasks and can be prefix-tuned to excel at any few-shot summarization task. Meanwhile, to better evaluate few-shot summarizers, we assemble and release a new benchmark, SummZoo, designed under the principles of diversity and robustness. It consists of 8 summarization tasks covering diverse domains, with multiple sets of few-shot samples for each task. Experimental results and analysis show that UniSumm outperforms strong baselines by a large margin across all sub-tasks in SummZoo under both automatic and human evaluations, and achieves results comparable to a GPT-3.5 model in human evaluation.

Datasets

Introduced in the Paper:

SummZoo

Used in the Paper:

Pubmed

CNN/Daily Mail

WikiHow

SAMSum

Multi-News

GovReport

QMSum

SummScreen

Reddit TIFU

DialogSum

BillSum