Unsupervised Neural Text Simplification

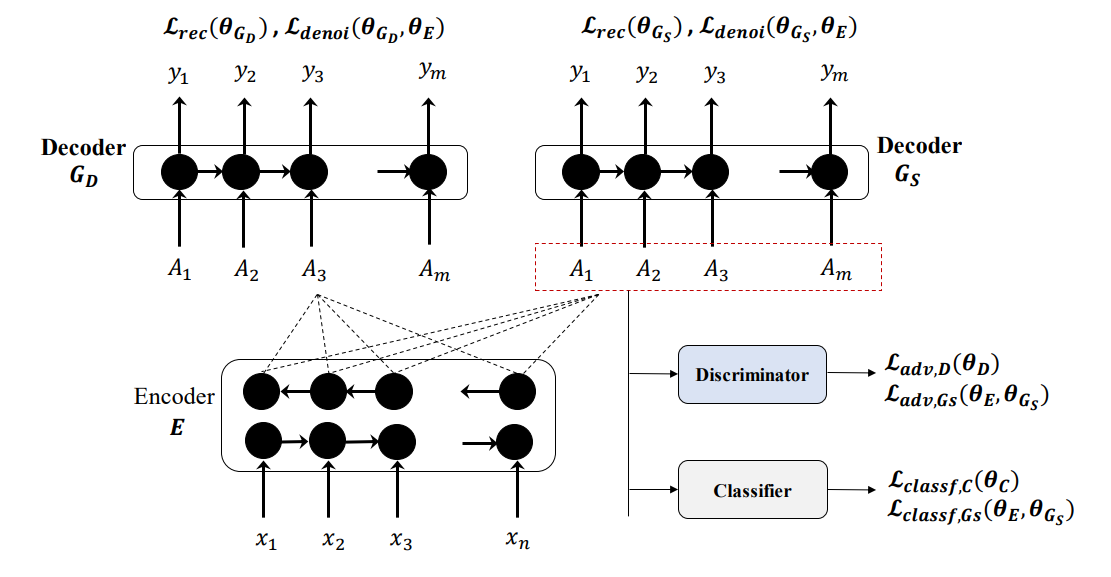

The paper presents a first attempt towards unsupervised neural text simplification that relies only on unlabeled text corpora. The core framework is composed of a shared encoder and a pair of attentional-decoders and gains knowledge of simplification through discrimination based-losses and denoising. The framework is trained using unlabeled text collected from en-Wikipedia dump. Our analysis (both quantitative and qualitative involving human evaluators) on a public test data shows that the proposed model can perform text-simplification at both lexical and syntactic levels, competitive to existing supervised methods. Addition of a few labelled pairs also improves the performance further.

PDF Abstract ACL 2019 PDF ACL 2019 Abstract

ASSET

ASSET