UPANets: Learning from the Universal Pixel Attention Networks

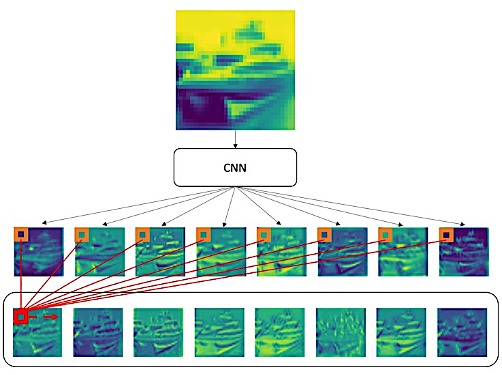

In image classification, networks built on skip connections and dense connections have dominated most leaderboards. Following the success of multi-head attention in natural language processing, the field is now clearly turning to either Transformer-like models or hybrid CNNs with attention. However, the former require tremendous resources to train, whereas the latter strike a favorable balance in this direction. In this work, to let CNNs handle both global and local information, we propose UPANets, which equips channel-wise attention with a hybrid skip-dense-connection structure. In addition, this extreme-connection structure makes UPANets robust, with a smoother loss landscape. In experiments, UPANets surpassed most well-known and widely used state-of-the-art (SOTA) models, reaching accuracies of 96.47% on CIFAR-10, 80.29% on CIFAR-100, and 67.67% on Tiny ImageNet. Most importantly, these results are achieved with high parameter efficiency and with training on a single consumer-grade GPU. The implementation code of UPANets is available at https://github.com/hanktseng131415go/UPANets.
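The abstract names the two ingredients (channel-wise attention and a hybrid skip-dense connection) without spelling out how they combine. The pure-Python sketch below is a hypothetical illustration, not the UPANets code from the linked repository. It assumes that "channel-wise attention" means a softmax-weighted mixing of channels, with the same weights shared by every pixel (similar to a 1x1 convolution), and that the "hybrid skip-dense connection" means adding the attended map back to the input (skip) and then concatenating that sum with the input along the channel axis (dense). The names `channel_pixel_attention`, `hybrid_skip_dense_block`, and `FeatureMap` are illustrative only.

```python
import math
from typing import List

# Hypothetical layout for this sketch: fmap[channel][pixel], i.e. C rows,
# each holding the H*W pixel values of one channel, flattened.
FeatureMap = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def channel_pixel_attention(x: FeatureMap, logits: List[List[float]]) -> FeatureMap:
    """Hypothetical channel-wise attention applied independently at every pixel.

    logits has shape C_out x C_in. Each row is softmax-normalised, so every
    output channel is an attention-weighted average of the input channels at
    the same pixel. All pixels share the same weights (like a 1x1 conv).
    """
    weights = [softmax(row) for row in logits]
    n_channels, n_pixels = len(x), len(x[0])
    return [
        [sum(w[c] * x[c][p] for c in range(n_channels)) for p in range(n_pixels)]
        for w in weights
    ]


def hybrid_skip_dense_block(x: FeatureMap, logits: List[List[float]]) -> FeatureMap:
    """Hypothetical hybrid block: a skip (add) connection, then a dense (concat) one."""
    attended = channel_pixel_attention(x, logits)
    if len(attended) != len(x):
        raise ValueError("the skip connection needs C_out == C_in")
    # Skip connection: element-wise sum of the attended map and the input.
    skipped = [[a + b for a, b in zip(row_a, row_x)] for row_a, row_x in zip(attended, x)]
    # Dense connection: stack the input and the skip output along the channel axis.
    return x + skipped


if __name__ == "__main__":
    fmap = [[1.0, 2.0], [3.0, 4.0]]  # 2 channels, 2 pixels
    uniform = [[0.0, 0.0], [0.0, 0.0]]  # equal attention to both channels
    print(hybrid_skip_dense_block(fmap, uniform))
    # prints [[1.0, 2.0], [3.0, 4.0], [3.0, 5.0], [5.0, 7.0]]
```

With uniform logits, each attended channel is the per-pixel mean of the two inputs, `[2.0, 3.0]`. The block therefore returns four channels: the original two followed by their skip-connected sums. This growth in channel count is the usual cost of dense concatenation.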


Datasets

CIFAR-10

CIFAR-100

Tiny ImageNet

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data | Benchmark |
|---|---|---|---|---|---|---|---|
| Image Classification | CIFAR-10 | UPANets | Percentage correct | 96.47 | #104 | | |
| Image Classification | CIFAR-100 | UPANets | Percentage correct | 80.29 | #124 | | |
| Image Classification | Tiny-ImageNet | UPANets | Top 1 Accuracy | 67.67 | #1 | | |
| Image Classification | Tiny ImageNet Classification | UPANets | Validation Acc | 67.67 | #18 | | |