Who's Waldo? Linking People Across Text and Images

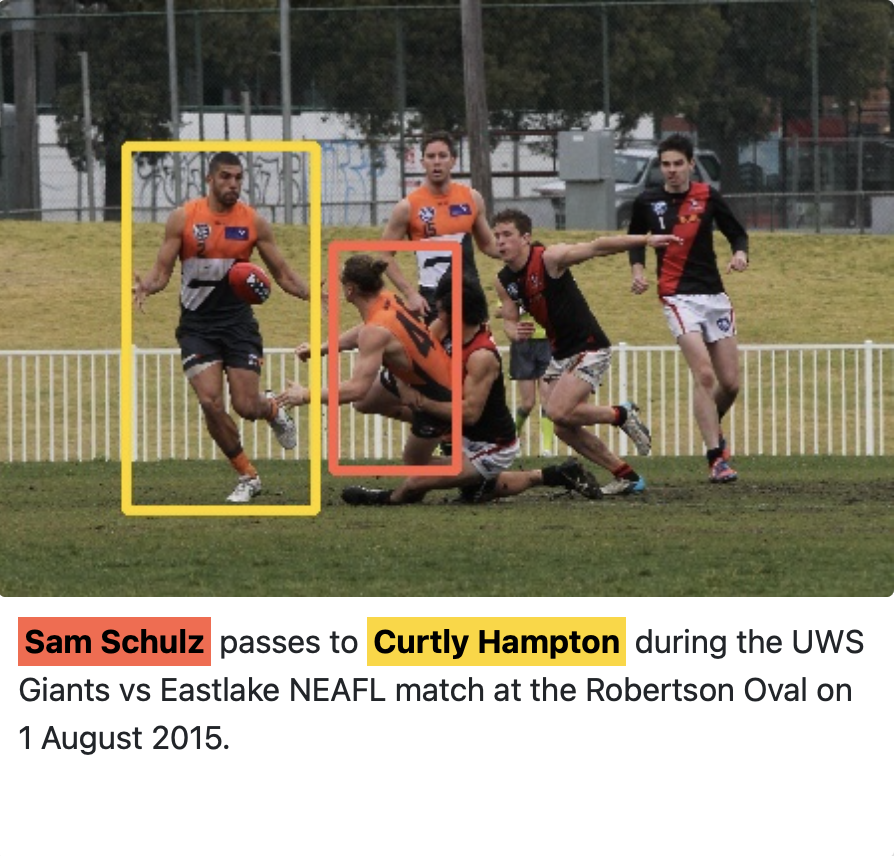

We present a task and benchmark dataset for person-centric visual grounding, the problem of linking between people named in a caption and people pictured in an image. In contrast to prior work in visual grounding, which is predominantly object-based, our new task masks out the names of people in captions in order to encourage methods trained on such image-caption pairs to focus on contextual cues (such as rich interactions between multiple people), rather than learning associations between names and appearances. To facilitate this task, we introduce a new dataset, Who's Waldo, mined automatically from image-caption data on Wikimedia Commons. We propose a Transformer-based method that outperforms several strong baselines on this task, and are releasing our data to the research community to spur work on contextual models that consider both vision and language.

PDF Abstract ICCV 2021 PDF ICCV 2021 AbstractCode

Datasets

Introduced in the Paper:

Who’s Waldo

Who’s Waldo

Results from the Paper

Ranked #1 on

Person-centric Visual Grounding

on Who’s Waldo

(using extra training data)

Ranked #1 on

Person-centric Visual Grounding

on Who’s Waldo

(using extra training data)