Winoground: Probing Vision and Language Models for Visio-Linguistic Compositionality

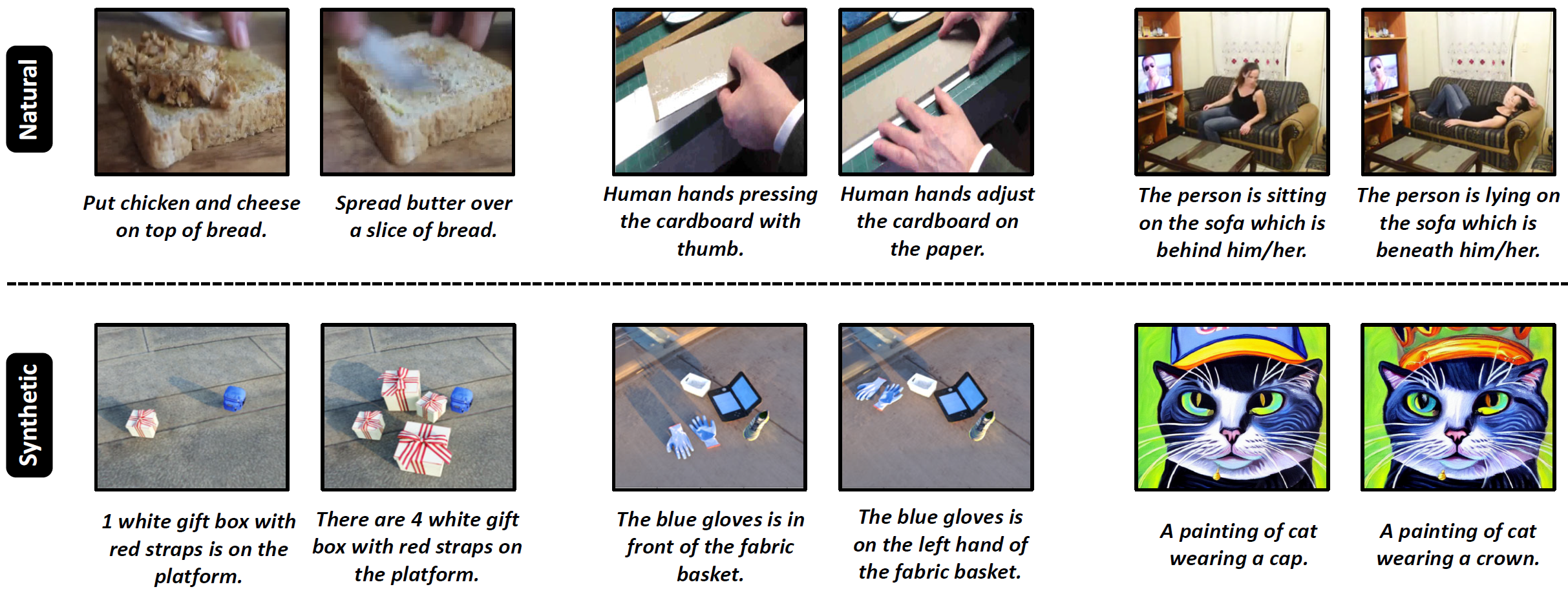

We present a novel task and dataset for evaluating the ability of vision and language models to conduct visio-linguistic compositional reasoning, which we call Winoground. Given two images and two captions, the goal is to match them correctly; crucially, both captions contain exactly the same words, only in a different order. The dataset was carefully hand-curated by expert annotators and is labeled with a rich set of fine-grained tags to assist in analyzing model performance. We probe a diverse range of state-of-the-art vision and language models and find that, surprisingly, none of them do much better than chance. Evidently, these models are not as skilled at visio-linguistic compositional reasoning as we might have hoped. We perform an extensive analysis to obtain insights into how future work might try to mitigate these models' shortcomings. We aim for Winoground to serve as a useful evaluation set for advancing the state of the art and driving further progress in the field. The dataset is available at https://huggingface.co/datasets/facebook/winoground.
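The matching task can be made concrete with a small sketch. It assumes a hypothetical similarity function `s(caption, image)` produced by some vision-language model, and it checks the two captions of a pair for the "same words, different order" property. The text, image, and group criteria below follow the scoring commonly reported for Winoground, but treat this as an illustrative sketch rather than the official evaluation code. The caption pair, the image identifiers `img0`/`img1`, and the scores are all made up for illustration.

```python
from collections import Counter
from typing import Callable, Dict


def same_words(caption_0: str, caption_1: str) -> bool:
    """Return True if both captions contain exactly the same words
    (counting repeats), regardless of order."""
    # Simplifying assumption: lowercase and split on whitespace; punctuation is not stripped.
    return Counter(caption_0.lower().split()) == Counter(caption_1.lower().split())


def winoground_scores(
    s: Callable[[str, str], float], c0: str, c1: str, i0: str, i1: str
) -> Dict[str, bool]:
    """Score one example given a hypothetical similarity function s(caption, image)."""
    # Text: for each image, its own caption must outscore the other caption.
    text = s(c0, i0) > s(c1, i0) and s(c1, i1) > s(c0, i1)
    # Image: for each caption, its own image must outscore the other image.
    image = s(c0, i0) > s(c0, i1) and s(c1, i1) > s(c1, i0)
    # Group: both conditions must hold at once.
    return {"text": text, "image": image, "group": text and image}


# Illustrative caption pair: same words, different order.
c0 = "an old person kisses a young person"
c1 = "a young person kisses an old person"
assert same_words(c0, c1)

# Hypothetical model that always prefers caption 0, whatever the image.
toy_scores = {
    (c0, "img0"): 0.31, (c1, "img0"): 0.29,
    (c0, "img1"): 0.30, (c1, "img1"): 0.28,
}
result = winoground_scores(lambda c, i: toy_scores[(c, i)], c0, c1, "img0", "img1")
print(result)  # → {'text': False, 'image': False, 'group': False}
```

A model that ignores word order and simply favors one caption gets no credit under these pairwise criteria. Every comparison involves two captions with identical words, so bag-of-words overlap alone cannot separate them.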

CVPR 2022

Datasets

Introduced in the Paper:

Winoground

Used in the Paper:

MS COCO

SST

SST-2

Visual Genome

Flickr30k

WSC

YFCC100M

WIT
