WORD: A large scale dataset, benchmark and clinical applicable study for abdominal organ segmentation from CT image

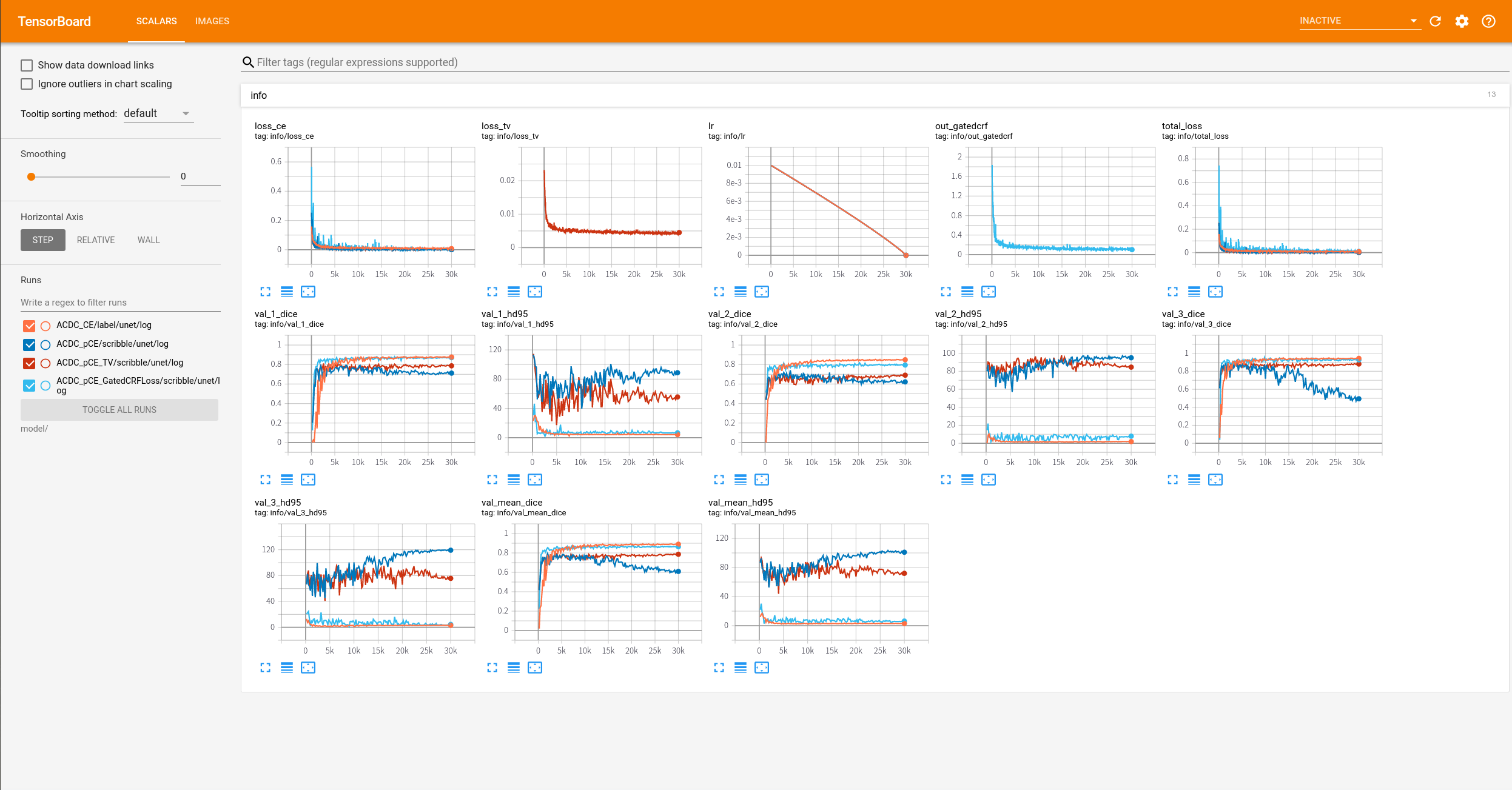

Whole abdominal organ segmentation is important for diagnosing abdominal lesions, planning radiotherapy, and follow-up. However, manual delineation of all abdominal organs from 3D volumes by oncologists is time-consuming and very expensive. Deep learning-based medical image segmentation has shown the potential to reduce manual delineation effort, but it still requires a large-scale, finely annotated dataset for training, and there is a lack of large-scale datasets that cover the whole abdominal region with accurate and detailed annotations for whole abdominal organ segmentation. In this work, we establish a new large-scale Whole abdominal ORgan Dataset (WORD) for algorithm research and clinical application development. The dataset contains 150 abdominal CT volumes (30495 slices). Each volume has 16 organs with fine pixel-level annotations and scribble-based sparse annotations, which may make it the largest dataset with whole abdominal organ annotation. Several state-of-the-art segmentation methods are evaluated on this dataset. We also invited three experienced oncologists to revise the model predictions to measure the gap between the deep learning methods and oncologists. We then investigate inference-efficient learning on WORD, as high-resolution images require large GPU memory and long inference time at test time. We further evaluate scribble-based annotation-efficient learning on this dataset, as pixel-wise manual annotation is time-consuming and expensive. This work provides a new benchmark for the abdominal multi-organ segmentation task, and these experiments can serve as baselines for future research and clinical application development.
