ZeroQ: A Novel Zero Shot Quantization Framework

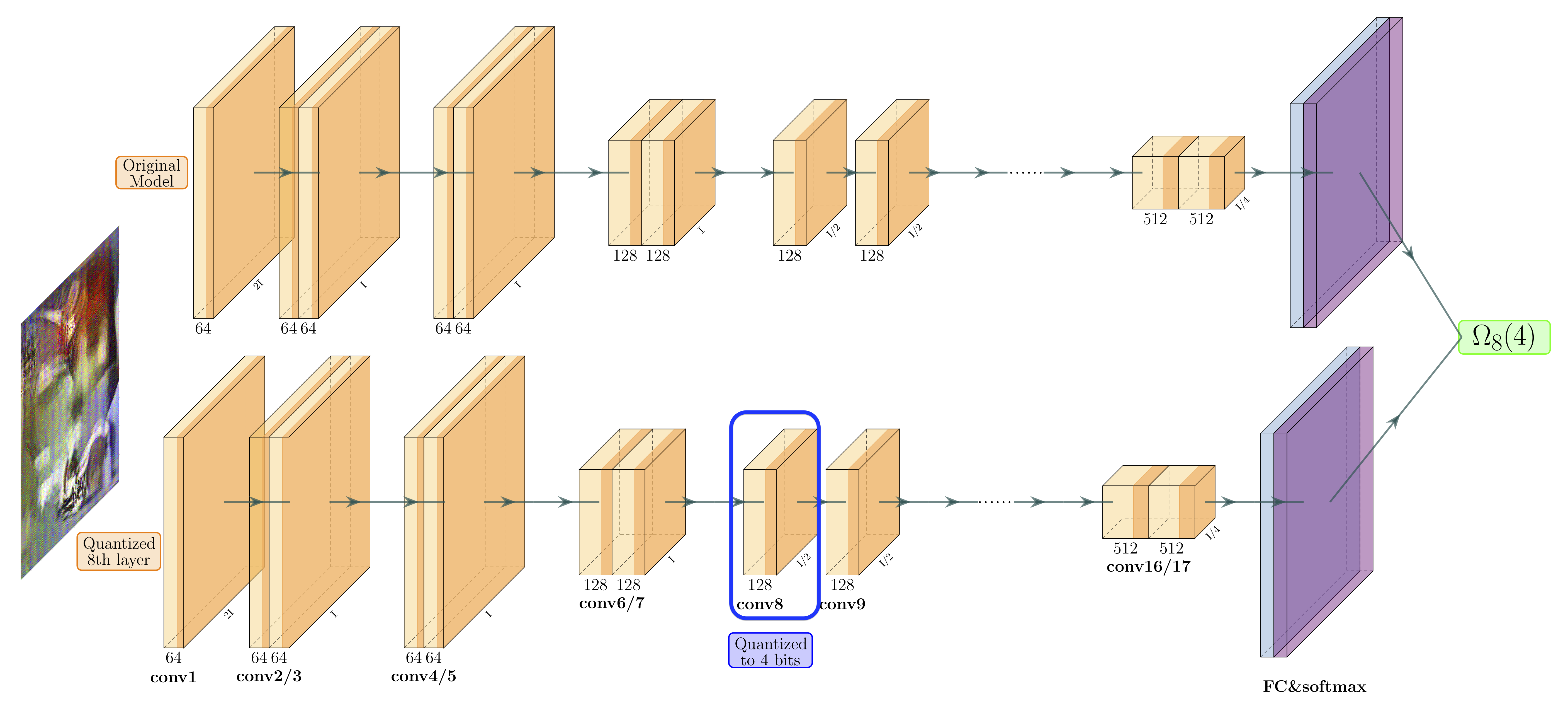

Quantization is a promising approach for reducing the inference time and memory footprint of neural networks. However, most existing quantization methods require access to the original training dataset for retraining during quantization. This is often not possible for applications with sensitive or proprietary data, e.g., due to privacy and security concerns. Existing zero-shot quantization methods use different heuristics to address this, but they result in poor performance, especially when quantizing to ultra-low precision. Here, we propose ZeroQ , a novel zero-shot quantization framework to address this. ZeroQ enables mixed-precision quantization without any access to the training or validation data. This is achieved by optimizing for a Distilled Dataset, which is engineered to match the statistics of batch normalization across different layers of the network. ZeroQ supports both uniform and mixed-precision quantization. For the latter, we introduce a novel Pareto frontier based method to automatically determine the mixed-precision bit setting for all layers, with no manual search involved. We extensively test our proposed method on a diverse set of models, including ResNet18/50/152, MobileNetV2, ShuffleNet, SqueezeNext, and InceptionV3 on ImageNet, as well as RetinaNet-ResNet50 on the Microsoft COCO dataset. In particular, we show that ZeroQ can achieve 1.71\% higher accuracy on MobileNetV2, as compared to the recently proposed DFQ method. Importantly, ZeroQ has a very low computational overhead, and it can finish the entire quantization process in less than 30s (0.5\% of one epoch training time of ResNet50 on ImageNet). We have open-sourced the ZeroQ framework\footnote{https://github.com/amirgholami/ZeroQ}.

PDF Abstract CVPR 2020 PDF CVPR 2020 AbstractCode

Results from the Paper

Ranked #1 on

Data Free Quantization

on CIFAR10

(CIFAR-10 W8A8 Top-1 Accuracy metric)

Ranked #1 on

Data Free Quantization

on CIFAR10

(CIFAR-10 W8A8 Top-1 Accuracy metric)

MS COCO

MS COCO