Search Results for author: Bo Wan

Found 12 papers, 8 papers with code

LocCa: Visual Pretraining with Location-aware Captioners

no code implementations • 28 Mar 2024 • Bo Wan, Michael Tschannen, Yongqin Xian, Filip Pavetic, Ibrahim Alabdulmohsin, Xiao Wang, André Susano Pinto, Andreas Steiner, Lucas Beyer, Xiaohua Zhai

In this paper, we propose a simple visual pretraining method with location-aware captioners (LocCa).

Animate Your Motion: Turning Still Images into Dynamic Videos

no code implementations • 15 Mar 2024 • Mingxiao Li, Bo Wan, Marie-Francine Moens, Tinne Tuytelaars

For the first time, we integrate both semantic and motion cues within a diffusion model for video generation, as demonstrated in Fig 1.

Exploiting CLIP for Zero-shot HOI Detection Requires Knowledge Distillation at Multiple Levels

1 code implementation • 10 Sep 2023 • Bo Wan, Tinne Tuytelaars

In this paper, we investigate the task of zero-shot human-object interaction (HOI) detection, a novel paradigm for identifying HOIs without the need for task-specific annotations.

Human-Object Interaction Detection

Knowledge Distillation

UniPT: Universal Parallel Tuning for Transfer Learning with Efficient Parameter and Memory

1 code implementation • 28 Aug 2023 • Haiwen Diao, Bo Wan, Ying Zhang, Xu Jia, Huchuan Lu, Long Chen

Parameter-efficient transfer learning (PETL), i.e., fine-tuning a small portion of parameters, is an effective strategy for adapting pre-trained models to downstream domains.

A Study of Autoregressive Decoders for Multi-Tasking in Computer Vision

1 code implementation • 30 Mar 2023 • Lucas Beyer, Bo Wan, Gagan Madan, Filip Pavetic, Andreas Steiner, Alexander Kolesnikov, André Susano Pinto, Emanuele Bugliarello, Xiao Wang, Qihang Yu, Liang-Chieh Chen, Xiaohua Zhai

A key finding is that a small decoder learned on top of a frozen pretrained encoder works surprisingly well.

Weakly-supervised HOI Detection via Prior-guided Bi-level Representation Learning

no code implementations • 2 Mar 2023 • Bo Wan, Yongfei Liu, Desen Zhou, Tinne Tuytelaars, Xuming He

Human-object interaction (HOI) detection plays a crucial role in human-centric scene understanding and serves as a fundamental building block for many vision tasks.

Human-Object Interaction Detection

Knowledge Distillation

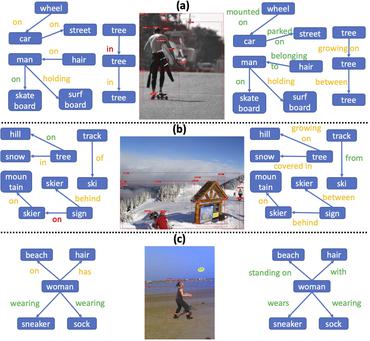

Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling

no code implementations • ICLR 2022 • Bo Wan, Wenjuan Han, Zilong Zheng, Tinne Tuytelaars

We introduce a new task, unsupervised vision-language (VL) grammar induction.

Single Image 3D Object Estimation with Primitive Graph Networks

1 code implementation • 9 Sep 2021 • Qian He, Desen Zhou, Bo Wan, Xuming He

To address those challenges, we adopt a primitive-based representation for 3D objects and propose a two-stage graph network for primitive-based 3D object estimation, which consists of a sequential proposal module and a graph reasoning module.

Bipartite Graph Network with Adaptive Message Passing for Unbiased Scene Graph Generation

3 code implementations • CVPR 2021 • Rongjie Li, Songyang Zhang, Bo Wan, Xuming He

Scene graph generation is an important visual understanding task with a broad range of vision applications.

Relation-aware Instance Refinement for Weakly Supervised Visual Grounding

1 code implementation • CVPR 2021 • Yongfei Liu, Bo Wan, Lin Ma, Xuming He

Visual grounding, which aims to build a correspondence between visual objects and their language entities, plays a key role in cross-modal scene understanding.

Learning Cross-modal Context Graph for Visual Grounding

2 code implementations • 20 Nov 2019 • Yongfei Liu, Bo Wan, Xiaodan Zhu, Xuming He

To address their limitations, this paper proposes a language-guided graph representation to capture the global context of grounding entities and their relations, and develops a cross-modal graph matching strategy for the multiple-phrase visual grounding task.

Pose-aware Multi-level Feature Network for Human Object Interaction Detection

1 code implementation • ICCV 2019 • Bo Wan, Desen Zhou, Yongfei Liu, Rongjie Li, Xuming He

Reasoning about human-object interactions is a core problem in human-centric scene understanding, and detecting such relations poses a unique challenge to vision systems due to large variations in human-object configurations, multiple co-occurring relation instances, and subtle visual differences between relation categories.