Search Results for author: Chung-Ching Lin

Found 28 papers, 9 papers with code

Adaptive As-Natural-As-Possible Image Stitching

no code implementations • CVPR 2015 • Chung-Ching Lin, Sharathchandra U. Pankanti, Karthikeyan Natesan Ramamurthy, Aleksandr Y. Aravkin

Computing the warp is fully automated and uses a combination of local homography and global similarity transformations, both of which are estimated with respect to the target.

Distributed Bundle Adjustment

no code implementations • 26 Aug 2017 • Karthikeyan Natesan Ramamurthy, Chung-Ching Lin, Aleksandr Aravkin, Sharath Pankanti, Raphael Viguier

The runtime of our implementation scales linearly with the number of observed points.

Collaborative Human-AI (CHAI): Evidence-Based Interpretable Melanoma Classification in Dermoscopic Images

1 code implementation • 30 May 2018 • Noel C. F. Codella, Chung-Ching Lin, Allan Halpern, Michael Hind, Rogerio Feris, John R. Smith

Quantitative relevance of results, according to non-expert similarity, as well as localized image regions, are also significantly improved.

A Prior-Less Method for Multi-Face Tracking in Unconstrained Videos

no code implementations • CVPR 2018 • Chung-Ching Lin, Ying Hung

This paper presents a prior-less method for tracking and clustering an unknown number of human faces and maintaining their individual identities in unconstrained videos.

Video Instance Segmentation Tracking With a Modified VAE Architecture

no code implementations • CVPR 2020 • Chung-Ching Lin, Ying Hung, Rogerio Feris, Linglin He

We propose a modified variational autoencoder (VAE) architecture built on top of Mask R-CNN for instance-level video segmentation and tracking.

True-Time-Delay Arrays for Fast Beam Training in Wideband Millimeter-Wave Systems

no code implementations • 17 Jul 2020 • Veljko Boljanovic, Han Yan, Chung-Ching Lin, Soumen Mohapatra, Deukhyoun Heo, Subhanshu Gupta, Danijela Cabric

We also propose a suitable algorithm that requires a single pilot to achieve high-accuracy estimation of angle of arrival.

AR-Net: Adaptive Frame Resolution for Efficient Action Recognition

1 code implementation • ECCV 2020 • Yue Meng, Chung-Ching Lin, Rameswar Panda, Prasanna Sattigeri, Leonid Karlinsky, Aude Oliva, Kate Saenko, Rogerio Feris

Specifically, given a video frame, a policy network is used to decide what input resolution should be used for processing by the action recognition model, with the goal of improving both accuracy and efficiency.

AdaFuse: Adaptive Temporal Fusion Network for Efficient Action Recognition

no code implementations • ICLR 2021 • Yue Meng, Rameswar Panda, Chung-Ching Lin, Prasanna Sattigeri, Leonid Karlinsky, Kate Saenko, Aude Oliva, Rogerio Feris

Temporal modelling is the key for efficient video action recognition.

VA-RED$^2$: Video Adaptive Redundancy Reduction

no code implementations • ICLR 2021 • Bowen Pan, Rameswar Panda, Camilo Fosco, Chung-Ching Lin, Alex Andonian, Yue Meng, Kate Saenko, Aude Oliva, Rogerio Feris

An inherent property of real-world videos is the high correlation of information across frames which can translate into redundancy in either temporal or spatial feature maps of the models, or both.

A 4-Element 800MHz-BW 29mW True-Time-Delay Spatial Signal Processor Enabling Fast Beam-Training with Data Communications

no code implementations • 2 Jun 2021 • Chung-Ching Lin, Chase Puglisi, Veljko Boljanovic, Soumen Mohapatra, Han Yan, Erfan Ghaderi, Deukhyoun Heo, Danijela Cabric, Subhanshu Gupta

In this work, we demonstrate a true-time-delay (TTD) array with digitally reconfigurable delay elements enabling both fast beam-training at the receiver and wideband data communications.

Mutual Information Continuity-constrained Estimator

no code implementations • 29 Sep 2021 • Tsun-An Hsieh, Cheng Yu, Ying Hung, Chung-Ching Lin, Yu Tsao

Accordingly, we propose Mutual Information Continuity-constrained Estimator (MICE).

SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning

1 code implementation • CVPR 2022 • Kevin Lin, Linjie Li, Chung-Ching Lin, Faisal Ahmed, Zhe Gan, Zicheng Liu, Yumao Lu, Lijuan Wang

Based on this model architecture, we show that video captioning can benefit significantly from more densely sampled video frames as opposed to previous successes with sparsely sampled video frames for video-and-language understanding tasks (e.g., video question answering).

Wideband Beamforming with Rainbow Beam Training using Reconfigurable True-Time-Delay Arrays for Millimeter-Wave Wireless

no code implementations • 30 Nov 2021 • Chung-Ching Lin, Veljko Boljanovic, Han Yan, Erfan Ghaderi, Mohammad Ali Mokri, Jayce Jeron Gaddis, Aditya Wadaskar, Chase Puglisi, Soumen Mohapatra, Qiuyan Xu, Sreeni Poolakkal, Deukhyoun Heo, Subhanshu Gupta, Danijela Cabric

The decadal research in integrated true-time-delay arrays has seen organic growth, enabling the realization of wideband beamformers for large arrays with wide aperture widths.

Multi-Mode Spatial Signal Processor with Rainbow-like Fast Beam Training and Wideband Communications using True-Time-Delay Arrays

no code implementations • 8 Jan 2022 • Chung-Ching Lin, Chase Puglisi, Veljko Boljanovic, Han Yan, Erfan Ghaderi, Jayce Gaddis, Qiuyan Xu, Sreeni Poolakkal, Danijela Cabric, Subhanshu Gupta

Initial access in millimeter-wave (mmW) wireless is critical to the successful realization of fifth-generation (5G) wireless networks and beyond.

Cross-modal Representation Learning for Zero-shot Action Recognition

no code implementations • CVPR 2022 • Chung-Ching Lin, Kevin Lin, Linjie Li, Lijuan Wang, Zicheng Liu

The model design provides a natural mechanism for visual and semantic representations to be learned in a shared knowledge space, whereby it encourages the learned visual embedding to be discriminative and more semantically consistent.

Ranked #3 on Zero-Shot Action Recognition on ActivityNet

LAVENDER: Unifying Video-Language Understanding as Masked Language Modeling

1 code implementation • CVPR 2023 • Linjie Li, Zhe Gan, Kevin Lin, Chung-Ching Lin, Zicheng Liu, Ce Liu, Lijuan Wang

In this work, we explore a unified VidL framework LAVENDER, where Masked Language Modeling (MLM) is used as the common interface for all pre-training and downstream tasks.

MPT: Mesh Pre-Training with Transformers for Human Pose and Mesh Reconstruction

no code implementations • 24 Nov 2022 • Kevin Lin, Chung-Ching Lin, Lin Liang, Zicheng Liu, Lijuan Wang

Traditional methods of reconstructing 3D human pose and mesh from single images rely on paired image-mesh datasets, which can be difficult and expensive to obtain.

Ranked #14 on 3D Human Pose Estimation on 3DPW

Equivariant Similarity for Vision-Language Foundation Models

1 code implementation • ICCV 2023 • Tan Wang, Kevin Lin, Linjie Li, Chung-Ching Lin, Zhengyuan Yang, Hanwang Zhang, Zicheng Liu, Lijuan Wang

Unlike the existing image-text similarity objective which only categorizes matched pairs as similar and unmatched pairs as dissimilar, equivariance also requires similarity to vary faithfully according to the semantic changes.

Ranked #7 on Visual Reasoning on Winoground

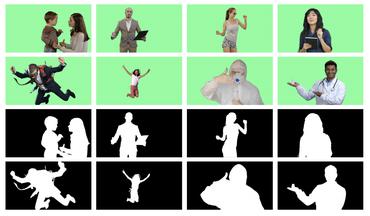

Adaptive Human Matting for Dynamic Videos

1 code implementation • CVPR 2023 • Chung-Ching Lin, Jiang Wang, Kun Luo, Kevin Lin, Linjie Li, Lijuan Wang, Zicheng Liu

The most recent efforts in video matting have focused on eliminating trimap dependency since trimap annotations are expensive and trimap-based methods are less adaptable for real-time applications.

Neural Voting Field for Camera-Space 3D Hand Pose Estimation

no code implementations • CVPR 2023 • Lin Huang, Chung-Ching Lin, Kevin Lin, Lin Liang, Lijuan Wang, Junsong Yuan, Zicheng Liu

We present a unified framework for camera-space 3D hand pose estimation from a single RGB image based on 3D implicit representation.

Ranked #4 on 3D Hand Pose Estimation on HO-3D

PaintSeg: Training-free Segmentation via Painting

1 code implementation • 30 May 2023 • Xiang Li, Chung-Ching Lin, Yinpeng Chen, Zicheng Liu, Jinglu Wang, Bhiksha Raj

The paper introduces PaintSeg, a new unsupervised method for segmenting objects without any training.

DisCo: Disentangled Control for Realistic Human Dance Generation

1 code implementation • 30 Jun 2023 • Tan Wang, Linjie Li, Kevin Lin, Yuanhao Zhai, Chung-Ching Lin, Zhengyuan Yang, Hanwang Zhang, Zicheng Liu, Lijuan Wang

In this paper, we depart from the traditional paradigm of human motion transfer and emphasize two additional critical attributes for the synthesis of human dance content in social media contexts: (i) Generalizability: the model should be able to generalize beyond generic human viewpoints as well as unseen human subjects, backgrounds, and poses; (ii) Compositionality: it should allow for the seamless composition of seen/unseen subjects, backgrounds, and poses from different sources.

The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision)

1 code implementation • 29 Sep 2023 • Zhengyuan Yang, Linjie Li, Kevin Lin, JianFeng Wang, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We hope that this preliminary exploration will inspire future research on the next-generation multimodal task formulation, new ways to exploit and enhance LMMs to solve real-world problems, and a better understanding of multimodal foundation models.

Completing Visual Objects via Bridging Generation and Segmentation

no code implementations • 1 Oct 2023 • Xiang Li, Yinpeng Chen, Chung-Ching Lin, Hao Chen, Kai Hu, Rita Singh, Bhiksha Raj, Lijuan Wang, Zicheng Liu

This paper presents a novel approach to object completion, with the primary goal of reconstructing a complete object from its partially visible components.

Idea2Img: Iterative Self-Refinement with GPT-4V(ision) for Automatic Image Design and Generation

no code implementations • 12 Oct 2023 • Zhengyuan Yang, JianFeng Wang, Linjie Li, Kevin Lin, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We introduce "Idea to Image," a system that enables multimodal iterative self-refinement with GPT-4V(ision) for automatic image design and generation.

MM-VID: Advancing Video Understanding with GPT-4V(ision)

no code implementations • 30 Oct 2023 • Kevin Lin, Faisal Ahmed, Linjie Li, Chung-Ching Lin, Ehsan Azarnasab, Zhengyuan Yang, JianFeng Wang, Lin Liang, Zicheng Liu, Yumao Lu, Ce Liu, Lijuan Wang

We present MM-VID, an integrated system that harnesses the capabilities of GPT-4V, combined with specialized tools in vision, audio, and speech, to facilitate advanced video understanding.

MM-Narrator: Narrating Long-form Videos with Multimodal In-Context Learning

no code implementations • 29 Nov 2023 • Chaoyi Zhang, Kevin Lin, Zhengyuan Yang, JianFeng Wang, Linjie Li, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We present MM-Narrator, a novel system leveraging GPT-4 with multimodal in-context learning for the generation of audio descriptions (AD).

A Unified Gaussian Process for Branching and Nested Hyperparameter Optimization

no code implementations • 19 Jan 2024 • Jiazhao Zhang, Ying Hung, Chung-Ching Lin, Zicheng Liu

To capture the conditional dependence between branching and nested parameters, a unified Bayesian optimization framework is proposed.