Search Results for author: Chunjing Xu

Found 72 papers, 36 papers with code

Open-sourced Data Ecosystem in Autonomous Driving: the Present and Future

2 code implementations • 6 Dec 2023 • Hongyang Li, Yang Li, Huijie Wang, Jia Zeng, Huilin Xu, Pinlong Cai, Li Chen, Junchi Yan, Feng Xu, Lu Xiong, Jingdong Wang, Futang Zhu, Chunjing Xu, Tiancai Wang, Fei Xia, Beipeng Mu, Zhihui Peng, Dahua Lin, Yu Qiao

With the continuous maturation and application of autonomous driving technology, a systematic examination of open-source autonomous driving datasets becomes instrumental in fostering the robust evolution of the industry ecosystem.

FlowMap: Path Generation for Automated Vehicles in Open Space Using Traffic Flow

no code implementations • 2 May 2023 • Wenchao Ding, Jieru Zhao, Yubin Chu, Haihui Huang, Tong Qin, Chunjing Xu, Yuxiang Guan, Zhongxue Gan

However, how to cognize the "road" for automated vehicles where there are no well-defined "roads" remains an open problem.

Graph-based Topology Reasoning for Driving Scenes

1 code implementation • 11 Apr 2023 • Tianyu Li, Li Chen, Huijie Wang, Yang Li, Jiazhi Yang, Xiangwei Geng, Shengyin Jiang, Yuting Wang, Hang Xu, Chunjing Xu, Junchi Yan, Ping Luo, Hongyang Li

Understanding the road genome is essential to realize autonomous driving.

Ranked #5 on

3D Lane Detection

on OpenLane-V2 val

Visual Exemplar Driven Task-Prompting for Unified Perception in Autonomous Driving

no code implementations • CVPR 2023 • Xiwen Liang, Minzhe Niu, Jianhua Han, Hang Xu, Chunjing Xu, Xiaodan Liang

Multi-task learning has emerged as a powerful paradigm to solve a range of tasks simultaneously with good efficiency in both computation resources and inference time.

NLIP: Noise-robust Language-Image Pre-training

no code implementations • 14 Dec 2022 • Runhui Huang, Yanxin Long, Jianhua Han, Hang Xu, Xiwen Liang, Chunjing Xu, Xiaodan Liang

Large-scale cross-modal pre-training paradigms have recently shown ubiquitous success on a wide range of downstream tasks, e.g., zero-shot classification, retrieval and image captioning.

Fine-grained Visual-Text Prompt-Driven Self-Training for Open-Vocabulary Object Detection

no code implementations • 2 Nov 2022 • Yanxin Long, Jianhua Han, Runhui Huang, Xu Hang, Yi Zhu, Chunjing Xu, Xiaodan Liang

Inspired by the success of vision-language methods (VLMs) in zero-shot classification, recent works attempt to extend this line of work into object detection by leveraging the localization ability of pre-trained VLMs and generating pseudo labels for unseen classes in a self-training manner.

DetCLIP: Dictionary-Enriched Visual-Concept Paralleled Pre-training for Open-world Detection

no code implementations • 20 Sep 2022 • Lewei Yao, Jianhua Han, Youpeng Wen, Xiaodan Liang, Dan Xu, Wei zhang, Zhenguo Li, Chunjing Xu, Hang Xu

We further design a concept dictionary (with descriptions) from various online sources and detection datasets to provide prior knowledge for each concept.

Effective Adaptation in Multi-Task Co-Training for Unified Autonomous Driving

no code implementations • 19 Sep 2022 • Xiwen Liang, Yangxin Wu, Jianhua Han, Hang Xu, Chunjing Xu, Xiaodan Liang

Aiming towards a holistic understanding of multiple downstream tasks simultaneously, there is a need for extracting features with better transferability.

Open-world Semantic Segmentation via Contrasting and Clustering Vision-Language Embedding

no code implementations • 18 Jul 2022 • Quande Liu, Youpeng Wen, Jianhua Han, Chunjing Xu, Hang Xu, Xiaodan Liang

To bridge the gap between supervised semantic segmentation and real-world applications that require one model to recognize arbitrary new concepts, zero-shot segmentation has recently attracted a lot of attention by exploring the relationships between unseen and seen object categories, yet it still requires large amounts of densely annotated data with diverse base classes.

Task-Customized Self-Supervised Pre-training with Scalable Dynamic Routing

no code implementations • 26 May 2022 • Zhili Liu, Jianhua Han, Lanqing Hong, Hang Xu, Kai Chen, Chunjing Xu, Zhenguo Li

On the other hand, for existing SSL methods, it is burdensome and infeasible to use different downstream-task-customized datasets in pre-training for different tasks.

ManiTrans: Entity-Level Text-Guided Image Manipulation via Token-wise Semantic Alignment and Generation

1 code implementation • CVPR 2022 • Jianan Wang, Guansong Lu, Hang Xu, Zhenguo Li, Chunjing Xu, Yanwei Fu

Existing text-guided image manipulation methods aim to modify the appearance of the image or to edit a few objects in a virtual or simple scenario, which is far from practical application.

Laneformer: Object-aware Row-Column Transformers for Lane Detection

no code implementations • 18 Mar 2022 • Jianhua Han, Xiajun Deng, Xinyue Cai, Zhen Yang, Hang Xu, Chunjing Xu, Xiaodan Liang

We present Laneformer, a conceptually simple yet powerful transformer-based architecture tailored for lane detection, a long-standing research topic for visual perception in autonomous driving.

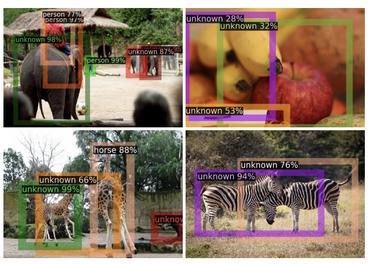

CODA: A Real-World Road Corner Case Dataset for Object Detection in Autonomous Driving

no code implementations • 15 Mar 2022 • Kaican Li, Kai Chen, Haoyu Wang, Lanqing Hong, Chaoqiang Ye, Jianhua Han, Yukuai Chen, Wei zhang, Chunjing Xu, Dit-yan Yeung, Xiaodan Liang, Zhenguo Li, Hang Xu

One main reason that impedes the development of truly reliable self-driving systems is the lack of public datasets for evaluating the performance of object detectors on corner cases.

Wukong: A 100 Million Large-scale Chinese Cross-modal Pre-training Benchmark

1 code implementation • 14 Feb 2022 • Jiaxi Gu, Xiaojun Meng, Guansong Lu, Lu Hou, Minzhe Niu, Xiaodan Liang, Lewei Yao, Runhui Huang, Wei zhang, Xin Jiang, Chunjing Xu, Hang Xu

Experiments show that Wukong can serve as a promising Chinese pre-training dataset and benchmark for different cross-modal learning methods.

Ranked #6 on

Image Retrieval

on MUGE Retrieval

GhostNets on Heterogeneous Devices via Cheap Operations

8 code implementations • 10 Jan 2022 • Kai Han, Yunhe Wang, Chang Xu, Jianyuan Guo, Chunjing Xu, Enhua Wu, Qi Tian

The proposed C-Ghost module can be taken as a plug-and-play component to upgrade existing convolutional neural networks.

An Empirical Study of Adder Neural Networks for Object Detection

no code implementations • NeurIPS 2021 • Xinghao Chen, Chang Xu, Minjing Dong, Chunjing Xu, Yunhe Wang

Adder neural networks (AdderNets) have shown impressive performance on image classification with only addition operations, which are more energy efficient than traditional convolutional neural networks built with multiplications.

FILIP: Fine-grained Interactive Language-Image Pre-Training

1 code implementation • ICLR 2022 • Lewei Yao, Runhui Huang, Lu Hou, Guansong Lu, Minzhe Niu, Hang Xu, Xiaodan Liang, Zhenguo Li, Xin Jiang, Chunjing Xu

In this paper, we introduce a large-scale Fine-grained Interactive Language-Image Pre-training (FILIP) to achieve finer-level alignment through a cross-modal late interaction mechanism, which uses a token-wise maximum similarity between visual and textual tokens to guide the contrastive objective.

SOFT: Softmax-free Transformer with Linear Complexity

2 code implementations • NeurIPS 2021 • Jiachen Lu, Jinghan Yao, Junge Zhang, Xiatian Zhu, Hang Xu, Weiguo Gao, Chunjing Xu, Tao Xiang, Li Zhang

Crucially, with a linear complexity, much longer token sequences are permitted in SOFT, resulting in superior trade-off between accuracy and complexity.

Learning Versatile Convolution Filters for Efficient Visual Recognition

no code implementations • 20 Sep 2021 • Kai Han, Yunhe Wang, Chang Xu, Chunjing Xu, Enhua Wu, DaCheng Tao

A series of secondary filters can be derived from a primary filter with the help of binary masks.

Voxel Transformer for 3D Object Detection

1 code implementation • ICCV 2021 • Jiageng Mao, Yujing Xue, Minzhe Niu, Haoyue Bai, Jiashi Feng, Xiaodan Liang, Hang Xu, Chunjing Xu

We present Voxel Transformer (VoTr), a novel and effective voxel-based Transformer backbone for 3D object detection from point clouds.

Ranked #3 on

3D Object Detection

on waymo vehicle

(L1 mAP metric)

Pyramid R-CNN: Towards Better Performance and Adaptability for 3D Object Detection

1 code implementation • ICCV 2021 • Jiageng Mao, Minzhe Niu, Haoyue Bai, Xiaodan Liang, Hang Xu, Chunjing Xu

To resolve the problems, we propose a novel second-stage module, named pyramid RoI head, to adaptively learn the features from the sparse points of interest.

Ranked #2 on

3D Object Detection

on waymo vehicle

(AP metric)

Greedy Network Enlarging

1 code implementation • 31 Jul 2021 • Chuanjian Liu, Kai Han, An Xiao, Yiping Deng, Wei zhang, Chunjing Xu, Yunhe Wang

Recent studies on deep convolutional neural networks present a simple paradigm of architecture design, i.e., models with more MACs typically achieve better accuracy, such as EfficientNet and RegNet.

$S^3$: Sign-Sparse-Shift Reparametrization for Effective Training of Low-bit Shift Networks

1 code implementation • NeurIPS 2021 • Xinlin Li, Bang Liu, YaoLiang Yu, Wulong Liu, Chunjing Xu, Vahid Partovi Nia

Shift neural networks reduce computation complexity by removing expensive multiplication operations and quantizing continuous weights into low-bit discrete values, which are fast and energy efficient compared to conventional neural networks.

SODA10M: A Large-Scale 2D Self/Semi-Supervised Object Detection Dataset for Autonomous Driving

no code implementations • 21 Jun 2021 • Jianhua Han, Xiwen Liang, Hang Xu, Kai Chen, Lanqing Hong, Jiageng Mao, Chaoqiang Ye, Wei zhang, Zhenguo Li, Xiaodan Liang, Chunjing Xu

Experiments show that SODA10M can serve as a promising pre-training dataset for different self-supervised learning methods, which gives superior performance when fine-tuning on different downstream tasks (i.e., detection, semantic/instance segmentation) in the autonomous driving domain.

One Million Scenes for Autonomous Driving: ONCE Dataset

1 code implementation • 21 Jun 2021 • Jiageng Mao, Minzhe Niu, Chenhan Jiang, Hanxue Liang, Jingheng Chen, Xiaodan Liang, Yamin Li, Chaoqiang Ye, Wei zhang, Zhenguo Li, Jie Yu, Hang Xu, Chunjing Xu

To facilitate future research on exploiting unlabeled data for 3D detection, we additionally provide a benchmark in which we reproduce and evaluate a variety of self-supervised and semi-supervised methods on the ONCE dataset.

ReNAS: Relativistic Evaluation of Neural Architecture Search

7 code implementations • CVPR 2021 • Yixing Xu, Yunhe Wang, Kai Han, Yehui Tang, Shangling Jui, Chunjing Xu, Chang Xu

An effective and efficient architecture performance evaluation scheme is essential for the success of Neural Architecture Search (NAS).

Positive-Unlabeled Data Purification in the Wild for Object Detection

no code implementations • CVPR 2021 • Jianyuan Guo, Kai Han, Han Wu, Chao Zhang, Xinghao Chen, Chunjing Xu, Chang Xu, Yunhe Wang

In this paper, we present a positive-unlabeled learning based scheme to expand training data by purifying valuable images from massive unlabeled ones, where the original training data are viewed as positive data and the unlabeled images in the wild are unlabeled data.

Learning Student Networks in the Wild

1 code implementation • CVPR 2021 • Hanting Chen, Tianyu Guo, Chang Xu, Wenshuo Li, Chunjing Xu, Chao Xu, Yunhe Wang

Experiments on various datasets demonstrate that the student networks learned by the proposed method can achieve comparable performance with those using the original dataset.

Data-Free Knowledge Distillation for Image Super-Resolution

no code implementations • CVPR 2021 • Yiman Zhang, Hanting Chen, Xinghao Chen, Yiping Deng, Chunjing Xu, Yunhe Wang

Experiments on various datasets and architectures demonstrate that the proposed method can effectively learn portable student networks without the original data, e.g., with a 0.16 dB PSNR drop on Set5 for x2 super-resolution.

Universal Adder Neural Networks

no code implementations • 29 May 2021 • Hanting Chen, Yunhe Wang, Chang Xu, Chao Xu, Chunjing Xu, Tong Zhang

The widely-used convolutions in deep neural networks are exactly cross-correlation to measure the similarity between input feature and convolution filters, which involves massive multiplications between float values.

S$^3$: Sign-Sparse-Shift Reparametrization for Effective Training of Low-bit Shift Networks

no code implementations • NeurIPS 2021 • Xinlin Li, Bang Liu, YaoLiang Yu, Wulong Liu, Chunjing Xu, Vahid Partovi Nia

Shift neural networks reduce computation complexity by removing expensive multiplication operations and quantizing continuous weights into low-bit discrete values, which are fast and energy-efficient compared to conventional neural networks.

Winograd Algorithm for AdderNet

no code implementations • 12 May 2021 • Wenshuo Li, Hanting Chen, Mingqiang Huang, Xinghao Chen, Chunjing Xu, Yunhe Wang

Adder neural network (AdderNet) is a new kind of deep model that replaces the original massive multiplications in convolutions by additions while preserving the high performance.

Distilling Object Detectors via Decoupled Features

1 code implementation • CVPR 2021 • Jianyuan Guo, Kai Han, Yunhe Wang, Han Wu, Xinghao Chen, Chunjing Xu, Chang Xu

To this end, we present a novel distillation algorithm via decoupled features (DeFeat) for learning a better student detector.

Learning Frequency-aware Dynamic Network for Efficient Super-Resolution

no code implementations • ICCV 2021 • Wenbin Xie, Dehua Song, Chang Xu, Chunjing Xu, HUI ZHANG, Yunhe Wang

Extensive experiments conducted on benchmark SISR models and datasets show that the frequency-aware dynamic network can be employed for various SISR neural architectures to obtain a better tradeoff between visual quality and computational complexity.

Learning Frequency Domain Approximation for Binary Neural Networks

3 code implementations • NeurIPS 2021 • Yixing Xu, Kai Han, Chang Xu, Yehui Tang, Chunjing Xu, Yunhe Wang

Binary neural networks (BNNs) represent the original full-precision weights and activations as 1-bit values using the sign function.

Transformer in Transformer

12 code implementations • NeurIPS 2021 • Kai Han, An Xiao, Enhua Wu, Jianyuan Guo, Chunjing Xu, Yunhe Wang

In this paper, we point out that the attention inside these local patches is also essential for building visual transformers with high performance and we explore a new architecture, namely, Transformer iN Transformer (TNT).

AdderNet and its Minimalist Hardware Design for Energy-Efficient Artificial Intelligence

no code implementations • 25 Jan 2021 • Yunhe Wang, Mingqiang Huang, Kai Han, Hanting Chen, Wei zhang, Chunjing Xu, DaCheng Tao

With a comprehensive comparison of performance, power consumption, hardware resource consumption and network generalization capability, we conclude that the AdderNet is able to surpass all the other competitors, including the classical CNN, the novel memristor-network, XNOR-Net and the shift-kernel based network, indicating its great potential in future high-performance and energy-efficient artificial intelligence applications.

A Survey on Visual Transformer

no code implementations • 23 Dec 2020 • Kai Han, Yunhe Wang, Hanting Chen, Xinghao Chen, Jianyuan Guo, Zhenhua Liu, Yehui Tang, An Xiao, Chunjing Xu, Yixing Xu, Zhaohui Yang, Yiman Zhang, DaCheng Tao

Transformer, first applied to the field of natural language processing, is a type of deep neural network mainly based on the self-attention mechanism.

Model Rubik’s Cube: Twisting Resolution, Depth and Width for TinyNets

3 code implementations • NeurIPS 2020 • Kai Han, Yunhe Wang, Qiulin Zhang, Wei zhang, Chunjing Xu, Tong Zhang

To this end, we summarize a tiny formula for downsizing neural architectures through a series of smaller models derived from the EfficientNet-B0 with the FLOPs constraint.

Pre-Trained Image Processing Transformer

6 code implementations • CVPR 2021 • Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Chao Xu, Wen Gao

To maximally excavate the capability of the transformer, we propose to utilize the well-known ImageNet benchmark for generating a large number of corrupted image pairs.

Ranked #1 on

Single Image Deraining

on Rain100L

(using extra training data)

VEGA: Towards an End-to-End Configurable AutoML Pipeline

1 code implementation • 3 Nov 2020 • Bochao Wang, Hang Xu, Jiajin Zhang, Chen Chen, Xiaozhi Fang, Yixing Xu, Ning Kang, Lanqing Hong, Chenhan Jiang, Xinyue Cai, Jiawei Li, Fengwei Zhou, Yong Li, Zhicheng Liu, Xinghao Chen, Kai Han, Han Shu, Dehua Song, Yunhe Wang, Wei zhang, Chunjing Xu, Zhenguo Li, Wenzhi Liu, Tong Zhang

Automated Machine Learning (AutoML) is an important industrial solution for automatic discovery and deployment of the machine learning models.

Model Rubik's Cube: Twisting Resolution, Depth and Width for TinyNets

10 code implementations • 28 Oct 2020 • Kai Han, Yunhe Wang, Qiulin Zhang, Wei zhang, Chunjing Xu, Tong Zhang

To this end, we summarize a tiny formula for downsizing neural architectures through a series of smaller models derived from the EfficientNet-B0 with the FLOPs constraint.

Ranked #692 on

Image Classification

on ImageNet

SCOP: Scientific Control for Reliable Neural Network Pruning

4 code implementations • NeurIPS 2020 • Yehui Tang, Yunhe Wang, Yixing Xu, DaCheng Tao, Chunjing Xu, Chao Xu, Chang Xu

To increase the reliability of the results, we prefer to have a more rigorous research design by including a scientific control group as an essential part to minimize the effect of all factors except the association between the filter and expected network output.

Training Binary Neural Networks through Learning with Noisy Supervision

1 code implementation • ICML 2020 • Kai Han, Yunhe Wang, Yixing Xu, Chunjing Xu, Enhua Wu, Chang Xu

This paper formalizes the binarization operations over neural networks from a learning perspective.

Kernel Based Progressive Distillation for Adder Neural Networks

no code implementations • NeurIPS 2020 • Yixing Xu, Chang Xu, Xinghao Chen, Wei zhang, Chunjing Xu, Yunhe Wang

A convolutional neural network (CNN) with the same architecture is simultaneously initialized and trained as a teacher network; features and weights of the ANN and CNN are transformed to a new space to eliminate the accuracy drop.

AdderSR: Towards Energy Efficient Image Super-Resolution

no code implementations • CVPR 2021 • Dehua Song, Yunhe Wang, Hanting Chen, Chang Xu, Chunjing Xu, DaCheng Tao

To this end, we thoroughly analyze the relationship between an adder operation and the identity mapping and insert shortcuts to enhance the performance of SR models using adder networks.

Searching for Low-Bit Weights in Quantized Neural Networks

1 code implementation • NeurIPS 2020 • Zhaohui Yang, Yunhe Wang, Kai Han, Chunjing Xu, Chao Xu, DaCheng Tao, Chang Xu

Quantized neural networks with low-bit weights and activations are attractive for developing AI accelerators.

Video Super-resolution with Temporal Group Attention

1 code implementation • CVPR 2020 • Takashi Isobe, Songjiang Li, Xu Jia, Shanxin Yuan, Gregory Slabaugh, Chunjing Xu, Ya-Li Li, Shengjin Wang, Qi Tian

Video super-resolution, which aims at producing a high-resolution video from its corresponding low-resolution version, has recently drawn increasing attention.

Optical Flow Distillation: Towards Efficient and Stable Video Style Transfer

no code implementations • ECCV 2020 • Xinghao Chen, Yiman Zhang, Yunhe Wang, Han Shu, Chunjing Xu, Chang Xu

This paper proposes to learn a lightweight video style transfer network via the knowledge distillation paradigm.

HourNAS: Extremely Fast Neural Architecture Search Through an Hourglass Lens

6 code implementations • CVPR 2021 • Zhaohui Yang, Yunhe Wang, Xinghao Chen, Jianyuan Guo, Wei zhang, Chao Xu, Chunjing Xu, DaCheng Tao, Chang Xu

To achieve an extremely fast NAS while preserving the high accuracy, we propose to identify the vital blocks and make them the priority in the architecture search.

A Semi-Supervised Assessor of Neural Architectures

no code implementations • CVPR 2020 • Yehui Tang, Yunhe Wang, Yixing Xu, Hanting Chen, Chunjing Xu, Boxin Shi, Chao Xu, Qi Tian, Chang Xu

A graph convolutional neural network is introduced to predict the performance of architectures based on the learned representations and their relation modeled by the graph.

Distilling portable Generative Adversarial Networks for Image Translation

no code implementations • 7 Mar 2020 • Hanting Chen, Yunhe Wang, Han Shu, Changyuan Wen, Chunjing Xu, Boxin Shi, Chao Xu, Chang Xu

To promote the capability of the student generator, we include a student discriminator to measure the distances between real images and images generated by the student and teacher generators.

Beyond Dropout: Feature Map Distortion to Regularize Deep Neural Networks

2 code implementations • 23 Feb 2020 • Yehui Tang, Yunhe Wang, Yixing Xu, Boxin Shi, Chao Xu, Chunjing Xu, Chang Xu

On one hand, massive trainable parameters significantly enhance the performance of these deep networks.

Discernible Image Compression

no code implementations • 17 Feb 2020 • Zhaohui Yang, Yunhe Wang, Chang Xu, Peng Du, Chao Xu, Chunjing Xu, Qi Tian

Experiments on benchmarks demonstrate that images compressed by using the proposed method can also be well recognized by subsequent visual recognition and detection models.

Widening and Squeezing: Towards Accurate and Efficient QNNs

no code implementations • 3 Feb 2020 • Chuanjian Liu, Kai Han, Yunhe Wang, Hanting Chen, Qi Tian, Chunjing Xu

Quantization neural networks (QNNs) are very attractive to the industry because of their extremely cheap calculation and storage overhead, but their performance is still worse than that of networks with full-precision parameters.

AdderNet: Do We Really Need Multiplications in Deep Learning?

7 code implementations • CVPR 2020 • Hanting Chen, Yunhe Wang, Chunjing Xu, Boxin Shi, Chao Xu, Qi Tian, Chang Xu

The widely-used convolutions in deep neural networks are exactly cross-correlation to measure the similarity between input feature and convolution filters, which involves massive multiplications between float values.

GhostNet: More Features from Cheap Operations

34 code implementations • CVPR 2020 • Kai Han, Yunhe Wang, Qi Tian, Jianyuan Guo, Chunjing Xu, Chang Xu

Deploying convolutional neural networks (CNNs) on embedded devices is difficult due to the limited memory and computation resources.

Ranked #864 on

Image Classification

on ImageNet

Unsupervised Image Super-Resolution with an Indirect Supervised Path

no code implementations • 7 Oct 2019 • Zhen Han, Enyan Dai, Xu Jia, Xiaoying Ren, Shuaijun Chen, Chunjing Xu, Jianzhuang Liu, Qi Tian

The task of single image super-resolution (SISR) aims at reconstructing a high-resolution (HR) image from a low-resolution (LR) image.

ReNAS: Relativistic Evaluation of Neural Architecture Search

4 code implementations • 30 Sep 2019 • Yixing Xu, Yunhe Wang, Kai Han, Yehui Tang, Shangling Jui, Chunjing Xu, Chang Xu

An effective and efficient architecture performance evaluation scheme is essential for the success of Neural Architecture Search (NAS).

Efficient Residual Dense Block Search for Image Super-Resolution

3 code implementations • 25 Sep 2019 • Dehua Song, Chang Xu, Xu Jia, Yiyi Chen, Chunjing Xu, Yunhe Wang

Focusing on this issue, we propose an efficient residual dense block search algorithm with multiple objectives to hunt for fast, lightweight and accurate networks for image super-resolution.

Positive-Unlabeled Compression on the Cloud

2 code implementations • NeurIPS 2019 • Yixing Xu, Yunhe Wang, Hanting Chen, Kai Han, Chunjing Xu, DaCheng Tao, Chang Xu

In practice, only a small portion of the original training set is required as positive examples and more useful training examples can be obtained from the massive unlabeled data on the cloud through a PU classifier with an attention based multi-scale feature extractor.

Searching for Accurate Binary Neural Architectures

no code implementations • 16 Sep 2019 • Mingzhu Shen, Kai Han, Chunjing Xu, Yunhe Wang

Binary neural networks have attracted tremendous attention due to their efficiency when deployed on mobile devices.

CARS: Continuous Evolution for Efficient Neural Architecture Search

1 code implementation • CVPR 2020 • Zhaohui Yang, Yunhe Wang, Xinghao Chen, Boxin Shi, Chao Xu, Chunjing Xu, Qi Tian, Chang Xu

Architectures in the population that share parameters within one SuperNet in the latest generation will be tuned over the training dataset with a few epochs.

Full-Stack Filters to Build Minimum Viable CNNs

1 code implementation • 6 Aug 2019 • Kai Han, Yunhe Wang, Yixing Xu, Chunjing Xu, DaCheng Tao, Chang Xu

Existing works used to decrease the number or size of requested convolution filters for a minimum viable CNN on edge devices.

Attribute Aware Pooling for Pedestrian Attribute Recognition

no code implementations • 27 Jul 2019 • Kai Han, Yunhe Wang, Han Shu, Chuanjian Liu, Chunjing Xu, Chang Xu

This paper expands the strength of deep convolutional neural networks (CNNs) to the pedestrian attribute recognition problem by devising a novel attribute aware pooling algorithm.

Learning Instance-wise Sparsity for Accelerating Deep Models

no code implementations • 27 Jul 2019 • Chuanjian Liu, Yunhe Wang, Kai Han, Chunjing Xu, Chang Xu

Exploring deep convolutional neural networks of high efficiency and low memory usage is essential for a wide variety of machine learning tasks.

Co-Evolutionary Compression for Unpaired Image Translation

2 code implementations • ICCV 2019 • Han Shu, Yunhe Wang, Xu Jia, Kai Han, Hanting Chen, Chunjing Xu, Qi Tian, Chang Xu

Generative adversarial networks (GANs) have been successfully used for a considerable number of computer vision tasks, especially image-to-image translation.

Deep Fitting Degree Scoring Network for Monocular 3D Object Detection

no code implementations • CVPR 2019 • Lijie Liu, Jiwen Lu, Chunjing Xu, Qi Tian, Jie zhou

In this paper, we propose to learn a deep fitting degree scoring network for monocular 3D object detection, which aims to score fitting degree between proposals and object conclusively.

Ranked #7 on

Vehicle Pose Estimation

on KITTI Cars Hard

BridgeNet: A Continuity-Aware Probabilistic Network for Age Estimation

no code implementations • CVPR 2019 • Wanhua Li, Jiwen Lu, Jianjiang Feng, Chunjing Xu, Jie zhou, Qi Tian

Existing methods for age estimation usually apply a divide-and-conquer strategy to deal with heterogeneous data caused by the non-stationary aging process.

Ranked #2 on

Age Estimation

on FGNET

Data-Free Learning of Student Networks

3 code implementations • ICCV 2019 • Hanting Chen, Yunhe Wang, Chang Xu, Zhaohui Yang, Chuanjian Liu, Boxin Shi, Chunjing Xu, Chao Xu, Qi Tian

Learning portable neural networks is essential for computer vision so that pre-trained heavy deep models can be applied on edge devices such as mobile phones and micro sensors.

Learning Versatile Filters for Efficient Convolutional Neural Networks

no code implementations • NeurIPS 2018 • Yunhe Wang, Chang Xu, Chunjing Xu, Chao Xu, DaCheng Tao

A series of secondary filters can be derived from a primary filter.

Transitive Distance Clustering with K-Means Duality

no code implementations • CVPR 2014 • Zhiding Yu, Chunjing Xu, Deyu Meng, Zhuo Hui, Fanyi Xiao, Wenbo Liu, Jianzhuang Liu

We propose a very intuitive and simple approximation for the conventional spectral clustering methods.