Search Results for author: George E. Dahl

Found 23 papers, 9 papers with code

Benchmarking Neural Network Training Algorithms

3 code implementations • 12 Jun 2023 • George E. Dahl, Frank Schneider, Zachary Nado, Naman Agarwal, Chandramouli Shama Sastry, Philipp Hennig, Sourabh Medapati, Runa Eschenhagen, Priya Kasimbeg, Daniel Suo, Juhan Bae, Justin Gilmer, Abel L. Peirson, Bilal Khan, Rohan Anil, Mike Rabbat, Shankar Krishnan, Daniel Snider, Ehsan Amid, Kongtao Chen, Chris J. Maddison, Rakshith Vasudev, Michal Badura, Ankush Garg, Peter Mattson

In order to address these challenges, we introduce a new, competitive, time-to-result benchmark using multiple workloads running on fixed hardware, the AlgoPerf: Training Algorithms benchmark.

Adaptive Gradient Methods at the Edge of Stability

no code implementations • 29 Jul 2022 • Jeremy M. Cohen, Behrooz Ghorbani, Shankar Krishnan, Naman Agarwal, Sourabh Medapati, Michal Badura, Daniel Suo, David Cardoze, Zachary Nado, George E. Dahl, Justin Gilmer

Very little is known about the training dynamics of adaptive gradient methods like Adam in deep learning.

Pre-training helps Bayesian optimization too

1 code implementation • 7 Jul 2022 • Zi Wang, George E. Dahl, Kevin Swersky, Chansoo Lee, Zelda Mariet, Zachary Nado, Justin Gilmer, Jasper Snoek, Zoubin Ghahramani

Contrary to a common belief that Bayesian optimization (BO) is suited to optimizing black-box functions, deploying BO successfully actually requires domain knowledge about the characteristics of those functions.

AI system for fetal ultrasound in low-resource settings

no code implementations • 18 Mar 2022 • Ryan G. Gomes, Bellington Vwalika, Chace Lee, Angelica Willis, Marcin Sieniek, Joan T. Price, Christina Chen, Margaret P. Kasaro, James A. Taylor, Elizabeth M. Stringer, Scott Mayer McKinney, Ntazana Sindano, George E. Dahl, William Goodnight III, Justin Gilmer, Benjamin H. Chi, Charles Lau, Terry Spitz, T Saensuksopa, Kris Liu, Jonny Wong, Rory Pilgrim, Akib Uddin, Greg Corrado, Lily Peng, Katherine Chou, Daniel Tse, Jeffrey S. A. Stringer, Shravya Shetty

Using a simplified sweep protocol with real-time AI feedback on sweep quality, we have demonstrated the generalization of model performance to minimally trained novice ultrasound operators using low cost ultrasound devices with on-device AI integration.

Predicting the utility of search spaces for black-box optimization: a simple, budget-aware approach

no code implementations • 15 Dec 2021 • Setareh Ariafar, Justin Gilmer, Zack Nado, Jasper Snoek, Rodolphe Jenatton, George E. Dahl

For example, when tuning hyperparameters for machine learning pipelines on a new problem given a limited budget, one must strike a balance between excluding potentially promising regions and keeping the search space small enough to be tractable.

Pre-trained Gaussian processes for Bayesian optimization

4 code implementations • 16 Sep 2021 • Zi Wang, George E. Dahl, Kevin Swersky, Chansoo Lee, Zachary Nado, Justin Gilmer, Jasper Snoek, Zoubin Ghahramani

Contrary to a common expectation that BO is suited to optimizing black-box functions, deploying BO successfully actually requires domain knowledge about those functions.

What Will it Take to Fix Benchmarking in Natural Language Understanding?

no code implementations • NAACL 2021 • Samuel R. Bowman, George E. Dahl

Evaluation for many natural language understanding (NLU) tasks is broken: Unreliable and biased systems score so highly on standard benchmarks that there is little room for researchers who develop better systems to demonstrate their improvements.

A Large Batch Optimizer Reality Check: Traditional, Generic Optimizers Suffice Across Batch Sizes

no code implementations • NeurIPS 2021 • Zachary Nado, Justin M. Gilmer, Christopher J. Shallue, Rohan Anil, George E. Dahl

Recently the LARS and LAMB optimizers have been proposed for training neural networks faster using large batch sizes.

Ranked #1 on Question Answering on SQuAD1.1 (Hardware Burden metric)
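The snippet names LARS without describing it. As a rough illustration of what such layer-wise optimizers do, here is a minimal NumPy sketch of one LARS-style step for a single layer, using the commonly described trust-ratio rule. The helper name `lars_step` and all default hyperparameter values are hypothetical choices for this sketch; nothing here comes from the paper, whose title argues that traditional, generic optimizers suffice.

```python
import numpy as np


def lars_step(w, g, v, lr=0.1, momentum=0.9, weight_decay=1e-4, eta=0.001, eps=1e-9):
    """One LARS-style update for a single layer (hypothetical helper).

    The global learning rate `lr` is rescaled per layer by the trust ratio
    eta * ||w|| / (||g|| + weight_decay * ||w||), so the step size tracks the
    size of the layer's weights rather than the size of its gradient.
    Returns the new weights and the new momentum buffer.
    """
    w_norm = np.linalg.norm(w)
    g_norm = np.linalg.norm(g)
    if w_norm > 0 and g_norm > 0:
        trust_ratio = eta * w_norm / (g_norm + weight_decay * w_norm + eps)
    else:
        trust_ratio = 1.0  # e.g. a zero-initialized layer: fall back to the plain lr
    v = momentum * v + lr * trust_ratio * (g + weight_decay * w)
    return w - v, v
```

Because the trust ratio divides by the gradient norm, scaling the gradient up or down leaves the step unchanged when weight decay is zero; only the gradient's direction matters.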

On Empirical Comparisons of Optimizers for Deep Learning

1 code implementation • 11 Oct 2019 • Dami Choi, Christopher J. Shallue, Zachary Nado, Jaehoon Lee, Chris J. Maddison, George E. Dahl

In particular, we find that the popular adaptive gradient methods never underperform momentum or gradient descent.
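One way to see why a well-tuned adaptive method need not underperform momentum: in the standard Adam update, a very large ε makes m / (√v + ε) close to m / ε, which is the momentum direction (in its exponential-moving-average form) rescaled by 1/ε. The NumPy sketch below checks this numerically. It omits Adam's bias correction, the helper names are hypothetical, and it illustrates only this inclusion argument, not the paper's experimental protocol.

```python
import numpy as np


def adam_directions(grads, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam update directions m / (sqrt(v) + eps) for a gradient sequence.

    Bias correction is omitted to keep the comparison simple.
    """
    m = np.zeros_like(grads[0])
    v = np.zeros_like(grads[0])
    directions = []
    for g in grads:
        m = beta1 * m + (1 - beta1) * g      # first-moment EMA
        v = beta2 * v + (1 - beta2) * g * g  # second-moment EMA
        directions.append(m / (np.sqrt(v) + eps))
    return directions


def momentum_directions(grads, beta1=0.9):
    """Momentum in EMA form; heavy-ball momentum differs only by the constant factor 1 / (1 - beta1)."""
    m = np.zeros_like(grads[0])
    directions = []
    for g in grads:
        m = beta1 * m + (1 - beta1) * g
        directions.append(m)
    return directions


rng = np.random.default_rng(0)
grads = [rng.normal(size=3) for _ in range(20)]
eps = 1e6
# With a huge eps, eps * (Adam direction) is nearly the momentum direction, so Adam
# with learning rate lr * eps approximately reproduces momentum with learning rate lr.
gap = max(
    np.max(np.abs(eps * a - m))
    for a, m in zip(adam_directions(grads, eps=eps), momentum_directions(grads))
)
```

In other words, a search space that includes large ε values lets Adam reach (approximately) anything momentum can, which is why tuning protocol matters so much in such comparisons.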

Faster Neural Network Training with Data Echoing

1 code implementation • 12 Jul 2019 • Dami Choi, Alexandre Passos, Christopher J. Shallue, George E. Dahl

In the twilight of Moore's law, GPUs and other specialized hardware accelerators have dramatically sped up neural network training.

Which Algorithmic Choices Matter at Which Batch Sizes? Insights From a Noisy Quadratic Model

1 code implementation • NeurIPS 2019 • Guodong Zhang, Lala Li, Zachary Nado, James Martens, Sushant Sachdeva, George E. Dahl, Christopher J. Shallue, Roger Grosse

Increasing the batch size is a popular way to speed up neural network training, but beyond some critical batch size, larger batch sizes yield diminishing returns.

Measuring the Effects of Data Parallelism on Neural Network Training

no code implementations • 8 Nov 2018 • Christopher J. Shallue, Jaehoon Lee, Joseph Antognini, Jascha Sohl-Dickstein, Roy Frostig, George E. Dahl

Along the way, we show that disagreements in the literature on how batch size affects model quality can largely be explained by differences in metaparameter tuning and compute budgets at different batch sizes.

The Importance of Generation Order in Language Modeling

no code implementations • EMNLP 2018 • Nicolas Ford, Daniel Duckworth, Mohammad Norouzi, George E. Dahl

Neural language models are a critical component of state-of-the-art systems for machine translation, summarization, audio transcription, and other tasks.

Motivating the Rules of the Game for Adversarial Example Research

no code implementations • 18 Jul 2018 • Justin Gilmer, Ryan P. Adams, Ian Goodfellow, David Andersen, George E. Dahl

Advances in machine learning have led to broad deployment of systems with impressive performance on important problems.

Embedding Text in Hyperbolic Spaces

no code implementations • WS 2018 • Bhuwan Dhingra, Christopher J. Shallue, Mohammad Norouzi, Andrew M. Dai, George E. Dahl

Ideally, we could incorporate our prior knowledge of this hierarchical structure into unsupervised learning algorithms that work on text data.
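The snippet does not say which model of hyperbolic space the paper uses. A common choice for hierarchical data is the Poincaré ball, where distances grow rapidly toward the boundary, leaving room to place many leaves of a hierarchy far from one another. The sketch below computes the Poincaré-ball distance d(u, v) = arcosh(1 + 2||u - v||^2 / ((1 - ||u||^2)(1 - ||v||^2))); treat it as a generic illustration, not as the paper's embedding method.

```python
import numpy as np


def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit Poincare ball."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    uu, vv = np.dot(u, u), np.dot(v, v)
    if uu >= 1.0 or vv >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_dist = np.dot(u - v, u - v)
    return float(np.arccosh(1.0 + 2.0 * sq_dist / ((1.0 - uu) * (1.0 - vv))))


# The same Euclidean gap (0.1) is a small hyperbolic distance near the center
# and a much larger one near the boundary.
near_center = poincare_distance([0.0, 0.0], [0.1, 0.0])
near_boundary = poincare_distance([0.85, 0.0], [0.95, 0.0])
```

For example, the point (0.5, 0) lies at distance ln 3 ≈ 1.099 from the origin, while the pair near the boundary above is roughly five times farther apart than the pair near the center.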

Parallel Architecture and Hyperparameter Search via Successive Halving and Classification

1 code implementation • 25 May 2018 • Manoj Kumar, George E. Dahl, Vijay Vasudevan, Mohammad Norouzi

We present a simple and powerful algorithm for parallel black box optimization called Successive Halving and Classification (SHAC).

Large scale distributed neural network training through online distillation

no code implementations • ICLR 2018 • Rohan Anil, Gabriel Pereyra, Alexandre Passos, Robert Ormandi, George E. Dahl, Geoffrey E. Hinton

Two neural networks trained on disjoint subsets of the data can share knowledge by encouraging each model to agree with the predictions the other model would have made.
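The mechanism in the snippet can be written as a loss. The NumPy sketch below computes the loss for one of the two models: ordinary cross-entropy on its own data shard plus a term, weighted by a hypothetical `alpha`, that pulls its predicted distribution toward the other model's predictions on the same inputs, which are treated as fixed targets. The helper names, the weighting, and the use of cross-entropy against the peer's soft predictions are assumptions for illustration, not the paper's exact formulation.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def codistillation_loss(logits, labels, peer_logits, alpha=0.5):
    """Loss for one of two models trained with online distillation (illustrative).

    logits:      this model's logits, shape (batch, classes)
    labels:      integer labels from this model's own data shard, shape (batch,)
    peer_logits: the other model's logits on the same inputs; treated as fixed
                 targets, so no gradient would flow into the peer
    alpha:       hypothetical weight on the agreement term
    """
    log_p = log_softmax(logits)
    batch = np.arange(logits.shape[0])
    supervised = -log_p[batch, labels].mean()  # usual cross-entropy on true labels
    peer_probs = np.exp(log_softmax(peer_logits))
    agreement = -(peer_probs * log_p).sum(axis=-1).mean()  # cross-entropy to the peer's predictions
    return supervised + alpha * agreement
```

With all-zero logits over two classes, both terms equal ln 2, so `alpha = 0.5` gives 1.5 ln 2 regardless of the peer's logits.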

Neural Message Passing for Quantum Chemistry

21 code implementations • ICML 2017 • Justin Gilmer, Samuel S. Schoenholz, Patrick F. Riley, Oriol Vinyals, George E. Dahl

Supervised learning on molecules has incredible potential to be useful in chemistry, drug discovery, and materials science.

Ranked #5 on Graph Regression on ZINC 100k

Ranked #5 on

Graph Regression

on ZINC 100k

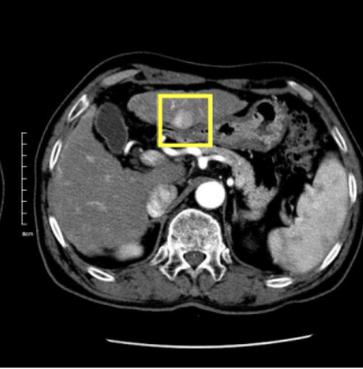

Detecting Cancer Metastases on Gigapixel Pathology Images

6 code implementations • 3 Mar 2017 • Yun Liu, Krishna Gadepalli, Mohammad Norouzi, George E. Dahl, Timo Kohlberger, Aleksey Boyko, Subhashini Venugopalan, Aleksei Timofeev, Philip Q. Nelson, Greg S. Corrado, Jason D. Hipp, Lily Peng, Martin C. Stumpe

At 8 false positives per image, we detect 92.4% of the tumors, compared with 82.7% for the previous best automated approach.

Ranked #2 on Medical Object Detection on Barrett’s Esophagus
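The quoted result is a sensitivity read off at a fixed false-positive budget. A simplified sketch of that computation: sort detections by score, lower the threshold until the average number of false positives per image would exceed the budget, and report the fraction of distinct tumors hit so far. It assumes detections have already been matched to tumor ids (`None` for a false positive) and ignores tied scores; the helper is hypothetical and is not the official evaluation code for the benchmark used in the paper.

```python
import numpy as np


def sensitivity_at_fp_rate(scores, tumor_ids, num_images, num_tumors, fp_per_image=8.0):
    """Fraction of tumors detected at the most permissive score threshold whose
    average number of false positives per image stays within `fp_per_image`.

    scores:    confidence score of each detection
    tumor_ids: for each detection, the id of the tumor it hits, or None for a false positive
    Ties between scores are ignored in this simplified sweep.
    """
    max_false_positives = fp_per_image * num_images
    false_positives = 0
    detected = set()
    for i in np.argsort(scores)[::-1]:  # highest score first
        if tumor_ids[i] is None:
            false_positives += 1
            if false_positives > max_false_positives:
                break  # lowering the threshold further would exceed the budget
        else:
            detected.add(tumor_ids[i])  # several detections of one tumor count once
    return len(detected) / num_tumors
```

Sweeping `fp_per_image` over a range of budgets and plotting the result gives a FROC-style curve; the snippet reports a single point on such a curve.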

Machine learning prediction errors better than DFT accuracy

no code implementations • J. Chem. Theory Comput. 2017 • Felix A. Faber, Luke Hutchison, Bing Huang, Justin Gilmer, Samuel S. Schoenholz, George E. Dahl, Oriol Vinyals, Steven Kearnes, Patrick F. Riley, O. Anatole von Lilienfeld

We investigate the impact of choosing regressors and molecular representations for the construction of fast machine learning (ML) models of thirteen electronic ground-state properties of organic molecules.

Ranked #18 on Formation Energy on QM9

Incorporating Side Information in Probabilistic Matrix Factorization with Gaussian Processes

no code implementations • 9 Aug 2014 • Ryan Prescott Adams, George E. Dahl, Iain Murray

Probabilistic matrix factorization (PMF) is a powerful method for modeling data associated with pairwise relationships, finding use in collaborative filtering, computational biology, and document analysis, among other areas.
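For reference, basic PMF places zero-mean Gaussian priors on the row and column factor vectors and a Gaussian likelihood on each observed entry, so its MAP estimate minimizes squared error on the observed entries plus L2 penalties. The NumPy sketch below fits that objective with full-batch gradient descent. It shows only plain PMF: the paper's contribution, placing Gaussian-process priors on the factors to incorporate side information, is not implemented, and `pmf_map` with its defaults is hypothetical.

```python
import numpy as np


def pmf_map(R, mask, rank=2, lam=0.1, lr=0.01, steps=2000, seed=0):
    """MAP estimate for basic probabilistic matrix factorization (hypothetical helper).

    Gaussian noise on observed entries (mask == 1) and zero-mean Gaussian priors
    on the factor rows turn the MAP objective into
        0.5 * ||mask * (U @ V.T - R)||^2 + 0.5 * lam * (||U||^2 + ||V||^2),
    minimized here by full-batch gradient descent.
    """
    rng = np.random.default_rng(seed)
    n_rows, n_cols = R.shape
    U = 0.1 * rng.standard_normal((n_rows, rank))
    V = 0.1 * rng.standard_normal((n_cols, rank))
    for _ in range(steps):
        E = mask * (U @ V.T - R)  # residuals, zeroed on unobserved entries
        grad_U = E @ V + lam * U
        grad_V = E.T @ U + lam * V
        U, V = U - lr * grad_U, V - lr * grad_V
    return U, V
```

Setting an entry of `mask` to 0 hides it from the fit; the model's prediction for that entry is read from `U @ V.T`.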

Multi-task Neural Networks for QSAR Predictions

no code implementations • 4 Jun 2014 • George E. Dahl, Navdeep Jaitly, Ruslan Salakhutdinov

Although artificial neural networks have occasionally been used for Quantitative Structure-Activity/Property Relationship (QSAR/QSPR) studies in the past, the literature has of late been dominated by other machine learning techniques such as random forests.

Improvements to deep convolutional neural networks for LVCSR

no code implementations • 5 Sep 2013 • Tara N. Sainath, Brian Kingsbury, Abdel-rahman Mohamed, George E. Dahl, George Saon, Hagen Soltau, Tomas Beran, Aleksandr Y. Aravkin, Bhuvana Ramabhadran

We find that with these improvements, particularly with fMLLR and dropout, we are able to achieve an additional 2-3% relative improvement in WER on a 50-hour Broadcast News task over our previous best CNN baseline.
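The "2-3% relative" figure is measured against the baseline word error rate (WER), not in absolute percentage points:

```latex
\Delta_{\mathrm{rel}} = \frac{\mathrm{WER}_{\mathrm{base}} - \mathrm{WER}_{\mathrm{new}}}{\mathrm{WER}_{\mathrm{base}}},
\qquad
\mathrm{WER}_{\mathrm{new}} = (1 - \Delta_{\mathrm{rel}})\,\mathrm{WER}_{\mathrm{base}}
```

For a hypothetical baseline of 20.0% WER, a 2% relative improvement gives 19.6% and a 3% relative improvement gives 19.4%, i.e. 0.4 to 0.6 absolute points; the actual baseline WER is not stated in this snippet.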