Search Results for author: Hao Shao

Found 18 papers, 13 papers with code

Visual CoT: Unleashing Chain-of-Thought Reasoning in Multi-Modal Language Models

1 code implementation • 25 Mar 2024 • Hao Shao, Shengju Qian, Han Xiao, Guanglu Song, Zhuofan Zong, Letian Wang, Yu Liu, Hongsheng Li

This paper presents Visual CoT, a novel pipeline that leverages the reasoning capabilities of multi-modal large language models (MLLMs) by incorporating visual Chain-of-Thought (CoT) reasoning.

SmartRefine: A Scenario-Adaptive Refinement Framework for Efficient Motion Prediction

1 code implementation • 18 Mar 2024 • Yang Zhou, Hao Shao, Letian Wang, Steven L. Waslander, Hongsheng Li, Yu Liu

Context information, such as road maps and surrounding agents' states, provides crucial geometric and semantic information for motion behavior prediction.

SPHINX-X: Scaling Data and Parameters for a Family of Multi-modal Large Language Models

1 code implementation • 8 Feb 2024 • Peng Gao, Renrui Zhang, Chris Liu, Longtian Qiu, Siyuan Huang, Weifeng Lin, Shitian Zhao, Shijie Geng, Ziyi Lin, Peng Jin, Kaipeng Zhang, Wenqi Shao, Chao Xu, Conghui He, Junjun He, Hao Shao, Pan Lu, Hongsheng Li, Yu Qiao

We propose SPHINX-X, an extensive Multimodality Large Language Model (MLLM) series developed upon SPHINX.

Ranked #4 on Video Question Answering on MVBench

Ranked #4 on

Video Question Answering

on MVBench

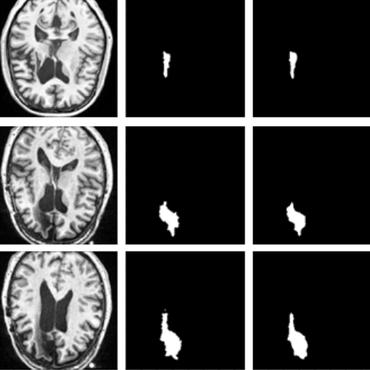

MCANet: Medical Image Segmentation with Multi-Scale Cross-Axis Attention

1 code implementation • 14 Dec 2023 • Hao Shao, Quansheng Zeng, Qibin Hou, Jufeng Yang

To process the significant variations of lesion regions or organs in individual sizes and shapes, we also use multiple convolutions of strip-shape kernels with different kernel sizes in each axial attention path to improve the efficiency of the proposed MCA in encoding spatial information.

Polyper: Boundary Sensitive Polyp Segmentation

1 code implementation • 14 Dec 2023 • Hao Shao, Yang Zhang, Qibin Hou

We present a new boundary sensitive framework for polyp segmentation, called Polyper.

LMDrive: Closed-Loop End-to-End Driving with Large Language Models

1 code implementation • 12 Dec 2023 • Hao Shao, Yuxuan Hu, Letian Wang, Steven L. Waslander, Yu Liu, Hongsheng Li

On the other hand, previous autonomous driving methods tend to rely on limited-format inputs (e.g., sensor data and navigation waypoints), restricting the vehicle's ability to understand language information and interact with humans.

ReasonNet: End-to-End Driving with Temporal and Global Reasoning

no code implementations • CVPR 2023 • Hao Shao, Letian Wang, RuoBing Chen, Steven L. Waslander, Hongsheng Li, Yu Liu

The large-scale deployment of autonomous vehicles is yet to come, and one of the major remaining challenges lies in urban dense traffic scenarios.

Ranked #1 on Autonomous Driving on CARLA Leaderboard

Efficient Reinforcement Learning for Autonomous Driving with Parameterized Skills and Priors

1 code implementation • 8 May 2023 • Letian Wang, Jie Liu, Hao Shao, Wenshuo Wang, RuoBing Chen, Yu Liu, Steven L. Waslander

Inspired by this, we propose ASAP-RL, an efficient reinforcement learning algorithm for autonomous driving that simultaneously leverages motion skills and expert priors.

Safety-Enhanced Autonomous Driving Using Interpretable Sensor Fusion Transformer

1 code implementation • 28 Jul 2022 • Hao Shao, Letian Wang, RuoBing Chen, Hongsheng Li, Yu Liu

Large-scale deployment of autonomous vehicles has been continually delayed due to safety concerns.

Ranked #2 on Autonomous Driving on CARLA Leaderboard

Blending Anti-Aliasing into Vision Transformer

no code implementations • NeurIPS 2021 • Shengju Qian, Hao Shao, Yi Zhu, Mu Li, Jiaya Jia

In this work, we analyze the uncharted problem of aliasing in vision transformer and explore to incorporate anti-aliasing properties.

Leaning Compact and Representative Features for Cross-Modality Person Re-Identification

1 code implementation • 26 Mar 2021 • Guangwei Gao, Hao Shao, Fei Wu, Meng Yang, Yi Yu

This paper pays close attention to the cross-modality visible-infrared person re-identification (VI Re-ID) task, which aims to match pedestrian samples between visible and infrared modes.

Cross-Modality Person Re-identification • Knowledge Distillation • +1

Self-supervised Temporal Learning

no code implementations • 1 Jan 2021 • Hao Shao, Yu Liu, Hongsheng Li

Inspired by spatial-based contrastive SSL, we show that significant improvement can be achieved by a proposed temporal-based contrastive learning approach, which includes three novel and efficient modules: temporal augmentations, temporal memory bank and SSTL loss.

Complementary Boundary Generator with Scale-Invariant Relation Modeling for Temporal Action Localization: Submission to ActivityNet Challenge 2020

no code implementations • 20 Jul 2020 • Haisheng Su, Jinyuan Feng, Hao Shao, Zhenyu Jiang, Manyuan Zhang, Wei Wu, Yu Liu, Hongsheng Li, Junjie Yan

Specifically, in order to generate high-quality proposals, we consider several factors including the video feature encoder, the proposal generator, the proposal-proposal relations, the scale imbalance, and ensemble strategy.

1st place solution for AVA-Kinetics Crossover in AcitivityNet Challenge 2020

2 code implementations • 16 Jun 2020 • Siyu Chen, Junting Pan, Guanglu Song, Manyuan Zhang, Hao Shao, Ziyi Lin, Jing Shao, Hongsheng Li, Yu Liu

This technical report introduces our winning solution to the spatio-temporal action localization track, AVA-Kinetics Crossover, in ActivityNet Challenge 2020.

Top-1 Solution of Multi-Moments in Time Challenge 2019

1 code implementation • 12 Mar 2020 • Manyuan Zhang, Hao Shao, Guanglu Song, Yu Liu, Junjie Yan

In this technical report, we briefly introduce the solutions of our team 'Efficient' for the Multi-Moments in Time challenge in ICCV 2019.

Overview of the CCKS 2019 Knowledge Graph Evaluation Track: Entity, Relation, Event and QA

no code implementations • 9 Mar 2020 • Xianpei Han, Zhichun Wang, Jiangtao Zhang, Qinghua Wen, Wenqi Li, Buzhou Tang, Qi. Wang, Zhifan Feng, Yang Zhang, Yajuan Lu, Haitao Wang, Wenliang Chen, Hao Shao, Yubo Chen, Kang Liu, Jun Zhao, Taifeng Wang, Kezun Zhang, Meng Wang, Yinlin Jiang, Guilin Qi, Lei Zou, Sen Hu, Minhao Zhang, Yinnian Lin

Knowledge graph models world knowledge as concepts, entities, and the relationships between them, which has been widely used in many real-world tasks.

Temporal Interlacing Network

4 code implementations • 17 Jan 2020 • Hao Shao, Shengju Qian, Yu Liu

In this way, a heavy temporal model is replaced by a simple interlacing operator.

CCKS 2019 Shared Task on Inter-Personal Relationship Extraction

1 code implementation • 29 Aug 2019 • Haitao Wang, Zhengqiu He, Tong Zhu, Hao Shao, Wenliang Chen, Min Zhang

In this paper, we present the task definition, the description of data and the evaluation methodology used during this shared task.