Search Results for author: Huiyu Wang

Found 28 papers, 16 papers with code

Micro-Batch Training with Batch-Channel Normalization and Weight Standardization

8 code implementations • 25 Mar 2019 • Siyuan Qiao, Huiyu Wang, Chenxi Liu, Wei Shen, Alan Yuille

Batch Normalization (BN) has become an out-of-the-box technique for improving deep network training.

Ranked #76 on Instance Segmentation on COCO minival

Axial-DeepLab: Stand-Alone Axial-Attention for Panoptic Segmentation

5 code implementations • ECCV 2020 • Huiyu Wang, Yukun Zhu, Bradley Green, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

In this paper, we attempt to remove this constraint by factorizing 2D self-attention into two 1D self-attentions.

Ranked #4 on Panoptic Segmentation on Cityscapes val (using extra training data)
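The factorization named in the Axial-DeepLab snippet, one 2D self-attention replaced by two 1D self-attentions, can be illustrated with a minimal NumPy sketch: attention runs first along the height axis (each column treated as a sequence) and then along the width axis (each row treated as a sequence). This is a single-head illustration under our own assumptions, not the paper's model; it omits positional terms, multi-head projections, and normalization layers, and the function names `attention_1d` and `axial_attention` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_1d(x, wq, wk, wv):
    """Single-head self-attention along axis -2 of x with shape (..., L, C).

    Leading dimensions are treated as independent sequences.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    logits = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])  # (..., L, L)
    return softmax(logits, axis=-1) @ v                         # (..., L, C)


def axial_attention(x, params_h, params_w):
    """Replace 2D self-attention on an (H, W, C) map with two 1D passes."""
    # Height axis: each of the W columns is a sequence of length H.
    cols = np.swapaxes(x, 0, 1)                                  # (W, H, C)
    x = np.swapaxes(attention_1d(cols, *params_h), 0, 1)         # (H, W, C)
    # Width axis: each of the H rows is a sequence of length W.
    return attention_1d(x, *params_w)                            # (H, W, C)


# Usage with random weights (hypothetical sizes).
rng = np.random.default_rng(0)
H, W, C = 8, 6, 4
x = rng.standard_normal((H, W, C))
params_h = tuple(rng.standard_normal((C, C)) for _ in range(3))
params_w = tuple(rng.standard_normal((C, C)) for _ in range(3))
y = axial_attention(x, params_h, params_w)
print(y.shape)  # (8, 6, 4)
```

For an H × W feature map, full 2D self-attention compares every pixel with all HW pixels, whereas the two axial passes compare each pixel with only H + W pixels; for a 64 × 64 map that is 4096 versus 128 comparisons per pixel.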

MaX-DeepLab: End-to-End Panoptic Segmentation with Mask Transformers

3 code implementations • CVPR 2021 • Huiyu Wang, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

As a result, MaX-DeepLab shows a significant 7.1% PQ gain in the box-free regime on the challenging COCO dataset, closing the gap between box-based and box-free methods for the first time.

Ranked #12 on Panoptic Segmentation on COCO test-dev

DeepLab2: A TensorFlow Library for Deep Labeling

4 code implementations • 17 Jun 2021 • Mark Weber, Huiyu Wang, Siyuan Qiao, Jun Xie, Maxwell D. Collins, Yukun Zhu, Liangzhe Yuan, Dahun Kim, Qihang Yu, Daniel Cremers, Laura Leal-Taixe, Alan L. Yuille, Florian Schroff, Hartwig Adam, Liang-Chieh Chen

DeepLab2 is a TensorFlow library for deep labeling, aiming to provide a state-of-the-art and easy-to-use TensorFlow codebase for general dense pixel prediction problems in computer vision.

kMaX-DeepLab: k-means Mask Transformer

2 code implementations • 8 Jul 2022 • Qihang Yu, Huiyu Wang, Siyuan Qiao, Maxwell Collins, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

However, we observe that most existing transformer-based vision models simply borrow the idea from NLP, neglecting the crucial difference between languages and images, particularly the extremely large sequence length of spatially flattened pixel features.

Ranked #2 on Panoptic Segmentation on COCO test-dev

iBOT: Image BERT Pre-Training with Online Tokenizer

1 code implementation • 15 Nov 2021 • Jinghao Zhou, Chen Wei, Huiyu Wang, Wei Shen, Cihang Xie, Alan Yuille, Tao Kong

We present a self-supervised framework iBOT that can perform masked prediction with an online tokenizer.

Ranked #1 on Unsupervised Image Classification on ImageNet
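The iBOT snippet mentions masked prediction with an online tokenizer. One reading of that idea, sketched below in NumPy as an illustration rather than the authors' implementation: a teacher that sees the unmasked image produces a soft distribution for each patch, a student that sees the masked image is trained to match it on the masked patches, and the teacher's weights follow the student by an exponential moving average. The temperatures `tau_s` and `tau_t`, the momentum value, and the function names are assumptions; other parts of the full method (for example any loss on a [CLS] token, centering, or multi-crop) are omitted.

```python
import numpy as np


def log_softmax(x, axis=-1):
    # Numerically stable log-softmax.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def masked_patch_loss(student_logits, teacher_logits, mask, tau_s=0.1, tau_t=0.04):
    """Cross-entropy from teacher patch distributions to student predictions.

    student_logits: (N, K) outputs for the masked view, one row per patch.
    teacher_logits: (N, K) outputs for the unmasked view, same patch order.
    mask: (N,) bool, True where the student's input patch was masked.
    """
    # The teacher's softmax acts as a soft "token" for each patch. In a real
    # training loop it would be treated as a constant (no gradient).
    targets = np.exp(log_softmax(teacher_logits / tau_t))
    log_preds = log_softmax(student_logits / tau_s)
    per_patch = -(targets * log_preds).sum(axis=-1)
    return per_patch[mask].mean()  # only masked patches are scored


def ema_update(teacher, student, momentum=0.996):
    # The tokenizer is "online": its weights track the student's by EMA
    # instead of being trained offline and frozen.
    return {name: momentum * teacher[name] + (1.0 - momentum) * student[name]
            for name in teacher}


# Usage with random logits (hypothetical sizes: 6 patches, 16 output dims).
rng = np.random.default_rng(0)
student = rng.standard_normal((6, 16))
teacher = rng.standard_normal((6, 16))
mask = np.array([True, False, True, True, False, True])
print(masked_patch_loss(student, teacher, mask))
```

Because the loss is a cross-entropy, it is smallest when the student's sharpened distribution equals the teacher's on every masked patch.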

Masked Autoencoders Enable Efficient Knowledge Distillers

1 code implementation • CVPR 2023 • Yutong Bai, Zeyu Wang, Junfei Xiao, Chen Wei, Huiyu Wang, Alan Yuille, Yuyin Zhou, Cihang Xie

For example, by distilling the knowledge from an MAE pre-trained ViT-L into a ViT-B, our method achieves 84.0% ImageNet top-1 accuracy, outperforming the baseline of directly distilling a fine-tuned ViT-L by 1.2%.

ELASTIC: Improving CNNs with Dynamic Scaling Policies

1 code implementation • CVPR 2019 • Huiyu Wang, Aniruddha Kembhavi, Ali Farhadi, Alan Yuille, Mohammad Rastegari

We formulate the scaling policy as a non-linear function inside the network's structure that (a) is learned from data, (b) is instance specific, (c) does not add extra computation, and (d) can be applied on any network architecture.

CMT-DeepLab: Clustering Mask Transformers for Panoptic Segmentation

2 code implementations • CVPR 2022 • Qihang Yu, Huiyu Wang, Dahun Kim, Siyuan Qiao, Maxwell Collins, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

We propose Clustering Mask Transformer (CMT-DeepLab), a transformer-based framework for panoptic segmentation designed around clustering.

Ranked #6 on Panoptic Segmentation on COCO test-dev

SpecTr: Spectral Transformer for Hyperspectral Pathology Image Segmentation

1 code implementation • 5 Mar 2021 • Boxiang Yun, Yan Wang, Jieneng Chen, Huiyu Wang, Wei Shen, Qingli Li

Hyperspectral imaging (HSI) unlocks huge potential for a wide variety of applications that rely on high-precision pathology image segmentation, such as computational pathology and precision medicine.

Rethinking Normalization and Elimination Singularity in Neural Networks

1 code implementation • 21 Nov 2019 • Siyuan Qiao, Huiyu Wang, Chenxi Liu, Wei Shen, Alan Yuille

To address this issue, we propose Batch-Channel Normalization (BCN), which uses batch knowledge to avoid elimination singularities when training channel-normalized models.
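The two techniques named in this line of work (see also the micro-batch training entry above) can be sketched in NumPy. Weight Standardization normalizes each convolution filter over its fan-in. For Batch-Channel Normalization we assume, as a simplification of our own rather than the paper's exact formulation, a batch-statistics normalization followed by a group (channel) normalization, so that batch knowledge enters before the channel-normalized step. Function names and shapes are hypothetical, and learnable scales and biases are left out.

```python
import numpy as np


def weight_standardize(w, eps=1e-5):
    """Weight Standardization for a conv kernel of shape (C_out, C_in, kH, kW).

    Each output filter is shifted to zero mean and scaled to unit variance
    over its fan-in (C_in * kH * kW).
    """
    mean = w.mean(axis=(1, 2, 3), keepdims=True)
    var = w.var(axis=(1, 2, 3), keepdims=True)
    return (w - mean) / np.sqrt(var + eps)


def batch_norm(x, eps=1e-5):
    # Per-channel statistics over (N, H, W); x has shape (N, C, H, W).
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def group_norm(x, groups, eps=1e-5):
    # Per-sample statistics over groups of channels; no batch dependence.
    n, c, h, w = x.shape
    g = x.reshape(n, groups, c // groups, h, w)
    mean = g.mean(axis=(2, 3, 4), keepdims=True)
    var = g.var(axis=(2, 3, 4), keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(n, c, h, w)


def batch_channel_norm(x, groups=2, eps=1e-5):
    # Assumed simplification of BCN: batch-statistics normalization first,
    # then channel (group) normalization. Learnable affine parameters and the
    # running estimates a micro-batch setting would need are omitted.
    return group_norm(batch_norm(x, eps), groups, eps)


# Usage with hypothetical shapes.
rng = np.random.default_rng(0)
w_hat = weight_standardize(rng.standard_normal((8, 3, 3, 3)))
y = batch_channel_norm(rng.standard_normal((4, 6, 5, 5)), groups=2)
print(w_hat.shape, y.shape)  # (8, 3, 3, 3) (4, 6, 5, 5)
```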

Unleashing the Power of Visual Prompting At the Pixel Level

1 code implementation • 20 Dec 2022 • Junyang Wu, Xianhang Li, Chen Wei, Huiyu Wang, Alan Yuille, Yuyin Zhou, Cihang Xie

This paper presents a simple and effective visual prompting method for adapting pre-trained models to downstream recognition tasks.

Semantic-Aware Knowledge Preservation for Zero-Shot Sketch-Based Image Retrieval

1 code implementation • ICCV 2019 • Qing Liu, Lingxi Xie, Huiyu Wang, Alan Yuille

Sketch-based image retrieval (SBIR) is widely recognized as an important vision problem with a wide range of real-world applications.

In Defense of Image Pre-Training for Spatiotemporal Recognition

1 code implementation • 3 May 2022 • Xianhang Li, Huiyu Wang, Chen Wei, Jieru Mei, Alan Yuille, Yuyin Zhou, Cihang Xie

Inspired by this observation, we hypothesize that the key to effectively leveraging image pre-training lies in decomposing the learning of spatial and temporal features, and in revisiting image pre-training as the appearance prior for initializing 3D kernels.

A Simple Data Mixing Prior for Improving Self-Supervised Learning

1 code implementation • CVPR 2022 • Sucheng Ren, Huiyu Wang, Zhengqi Gao, Shengfeng He, Alan Yuille, Yuyin Zhou, Cihang Xie

More notably, our SDMP is the first method that successfully leverages data mixing to improve (rather than hurt) the performance of Vision Transformers in the self-supervised setting.

CP2: Copy-Paste Contrastive Pretraining for Semantic Segmentation

1 code implementation • 22 Mar 2022 • Feng Wang, Huiyu Wang, Chen Wei, Alan Yuille, Wei Shen

Recent advances in self-supervised contrastive learning yield good image-level representation, which favors classification tasks but usually neglects pixel-level detailed information, leading to unsatisfactory transfer performance to dense prediction tasks such as semantic segmentation.

Combining Compositional Models and Deep Networks For Robust Object Classification under Occlusion

no code implementations • 28 May 2019 • Adam Kortylewski, Qing Liu, Huiyu Wang, Zhishuai Zhang, Alan Yuille

In this work, we combine DCNNs and compositional object models to retain the best of both approaches: a discriminative model that is robust to partial occlusion and mask attacks.

Localizing Occluders with Compositional Convolutional Networks

no code implementations • 18 Nov 2019 • Adam Kortylewski, Qing Liu, Huiyu Wang, Zhishuai Zhang, Alan Yuille

Our experimental results demonstrate that the proposed extensions increase the model's performance at localizing occluders as well as at classifying partially occluded objects.

CO2: Consistent Contrast for Unsupervised Visual Representation Learning

no code implementations • ICLR 2021 • Chen Wei, Huiyu Wang, Wei Shen, Alan Yuille

Regarding the similarity of the query crop to each crop from other images as "unlabeled", the consistency term takes the corresponding similarity of a positive crop as a pseudo label, and encourages consistency between these two similarities.
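The consistency term described in the CO2 snippet can be sketched as follows: the query's similarities to crops from other images form one distribution, the positive crop's similarities to the same crops form a second distribution that serves as the pseudo label, and a divergence pulls the two together. This NumPy sketch is an illustration under stated assumptions (the temperature `tau`, the choice of a symmetric KL divergence, and the hypothetical function names), not the paper's implementation; it also omits the contrastive loss such a term would accompany and does not decide whether gradients flow through the pseudo label.

```python
import numpy as np


def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def softmax(logits, tau):
    z = logits / tau
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def consistency_term(query, positive, negatives, tau=0.04, eps=1e-12):
    """query, positive: (B, D) embeddings of two crops of the same images.
    negatives: (K, D) embeddings of crops from other images.
    """
    q, p, n = l2_normalize(query), l2_normalize(positive), l2_normalize(negatives)
    # Similarity of each crop to the K "unlabeled" crops from other images.
    sim_q = softmax(q @ n.T, tau)  # (B, K)
    sim_p = softmax(p @ n.T, tau)  # (B, K), used as the pseudo label
    # Symmetric KL: KL(q||p) + KL(p||q) = sum((q - p) * (log q - log p)).
    log_q, log_p = np.log(sim_q + eps), np.log(sim_p + eps)
    sym_kl = ((sim_q - sim_p) * (log_q - log_p)).sum(axis=-1)
    return 0.5 * sym_kl.mean()


# Usage with random embeddings (hypothetical sizes).
rng = np.random.default_rng(0)
query = rng.standard_normal((4, 32))
positive = query + 0.1 * rng.standard_normal((4, 32))
negatives = rng.standard_normal((64, 32))
print(consistency_term(query, positive, negatives))
```

Writing the symmetric KL as a single sum of (q - p)(log q - log p) makes it visibly non-negative, since each factor pair always has the same sign.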

Scaling Wide Residual Networks for Panoptic Segmentation

no code implementations • 23 Nov 2020 • Liang-Chieh Chen, Huiyu Wang, Siyuan Qiao

The Wide Residual Networks (Wide-ResNets), a shallow but wide variant of the Residual Networks (ResNets) built by stacking a small number of residual blocks with large channel sizes, have demonstrated outstanding performance on multiple dense prediction tasks.

Ranked #2 on Panoptic Segmentation on Cityscapes test (using extra training data)

Image BERT Pre-training with Online Tokenizer

no code implementations • ICLR 2022 • Jinghao Zhou, Chen Wei, Huiyu Wang, Wei Shen, Cihang Xie, Alan Yuille, Tao Kong

The success of language Transformers is primarily attributed to the pretext task of masked language modeling (MLM), where texts are first tokenized into semantically meaningful pieces.

Searching for TrioNet: Combining Convolution with Local and Global Self-Attention

no code implementations • 15 Nov 2021 • Huaijin Pi, Huiyu Wang, Yingwei Li, Zizhang Li, Alan Yuille

In order to effectively search in this huge architecture space, we propose Hierarchical Sampling for better training of the supernet.

TubeFormer-DeepLab: Video Mask Transformer

no code implementations • CVPR 2022 • Dahun Kim, Jun Xie, Huiyu Wang, Siyuan Qiao, Qihang Yu, Hong-Seok Kim, Hartwig Adam, In So Kweon, Liang-Chieh Chen

We present TubeFormer-DeepLab, the first attempt to tackle multiple core video segmentation tasks in a unified manner.

SMAUG: Sparse Masked Autoencoder for Efficient Video-Language Pre-training

no code implementations • ICCV 2023 • Yuanze Lin, Chen Wei, Huiyu Wang, Alan Yuille, Cihang Xie

Coupling all these designs allows our method to achieve both competitive performance on text-to-video retrieval and video question answering tasks and a reduction in pre-training cost of 1.9× or more.

Ego-Only: Egocentric Action Detection without Exocentric Transferring

no code implementations • ICCV 2023 • Huiyu Wang, Mitesh Kumar Singh, Lorenzo Torresani

We find that this renders exocentric transferring unnecessary by showing remarkably strong results achieved by this simple Ego-Only approach on three established egocentric video datasets: Ego4D, EPIC-Kitchens-100, and Charades-Ego.

Diffusion Models as Masked Autoencoders

no code implementations • ICCV 2023 • Chen Wei, Karttikeya Mangalam, Po-Yao Huang, Yanghao Li, Haoqi Fan, Hu Xu, Huiyu Wang, Cihang Xie, Alan Yuille, Christoph Feichtenhofer

There has been a longstanding belief that generation can facilitate a true understanding of visual data.

Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives

no code implementations • 30 Nov 2023 • Kristen Grauman, Andrew Westbury, Lorenzo Torresani, Kris Kitani, Jitendra Malik, Triantafyllos Afouras, Kumar Ashutosh, Vijay Baiyya, Siddhant Bansal, Bikram Boote, Eugene Byrne, Zach Chavis, Joya Chen, Feng Cheng, Fu-Jen Chu, Sean Crane, Avijit Dasgupta, Jing Dong, Maria Escobar, Cristhian Forigua, Abrham Gebreselasie, Sanjay Haresh, Jing Huang, Md Mohaiminul Islam, Suyog Jain, Rawal Khirodkar, Devansh Kukreja, Kevin J Liang, Jia-Wei Liu, Sagnik Majumder, Yongsen Mao, Miguel Martin, Effrosyni Mavroudi, Tushar Nagarajan, Francesco Ragusa, Santhosh Kumar Ramakrishnan, Luigi Seminara, Arjun Somayazulu, Yale Song, Shan Su, Zihui Xue, Edward Zhang, Jinxu Zhang, Angela Castillo, Changan Chen, Xinzhu Fu, Ryosuke Furuta, Cristina Gonzalez, Prince Gupta, Jiabo Hu, Yifei HUANG, Yiming Huang, Weslie Khoo, Anush Kumar, Robert Kuo, Sach Lakhavani, Miao Liu, Mi Luo, Zhengyi Luo, Brighid Meredith, Austin Miller, Oluwatumininu Oguntola, Xiaqing Pan, Penny Peng, Shraman Pramanick, Merey Ramazanova, Fiona Ryan, Wei Shan, Kiran Somasundaram, Chenan Song, Audrey Southerland, Masatoshi Tateno, Huiyu Wang, Yuchen Wang, Takuma Yagi, Mingfei Yan, Xitong Yang, Zecheng Yu, Shengxin Cindy Zha, Chen Zhao, Ziwei Zhao, Zhifan Zhu, Jeff Zhuo, Pablo Arbelaez, Gedas Bertasius, David Crandall, Dima Damen, Jakob Engel, Giovanni Maria Farinella, Antonino Furnari, Bernard Ghanem, Judy Hoffman, C. V. Jawahar, Richard Newcombe, Hyun Soo Park, James M. Rehg, Yoichi Sato, Manolis Savva, Jianbo Shi, Mike Zheng Shou, Michael Wray

We present Ego-Exo4D, a diverse, large-scale multimodal multiview video dataset and benchmark challenge.

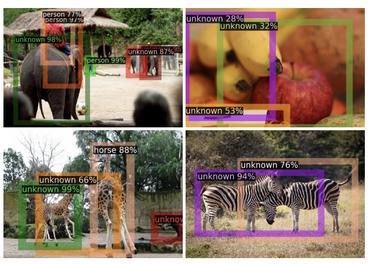

Finding Dino: A plug-and-play framework for unsupervised detection of out-of-distribution objects using prototypes

no code implementations • 11 Apr 2024 • Poulami Sinhamahapatra, Franziska Schwaiger, Shirsha Bose, Huiyu Wang, Karsten Roscher, Stephan Guennemann

It is an inference-based method that does not require training on the domain dataset and relies on extracting relevant features from self-supervised pre-trained models.
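The title and snippet of this entry describe a prototype-based, training-free approach built on features from a self-supervised pre-trained model. A generic version of prototype scoring, which may differ from the paper's actual framework, is sketched below: class prototypes are averaged, normalized feature vectors, and a query's out-of-distribution score is its cosine distance to the nearest prototype. The toy 2-D features, the 0.5 threshold, and the function names are hypothetical; in practice the features would come from a frozen backbone, which this sketch does not load.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)


def build_prototypes(features, labels):
    """One prototype per known class: the mean of its normalized features."""
    feats = l2_normalize(features)
    classes = np.unique(labels)
    protos = np.stack([feats[labels == c].mean(axis=0) for c in classes])
    return classes, l2_normalize(protos)


def ood_scores(features, prototypes):
    # Cosine distance to the nearest prototype; higher means less like any known class.
    sims = l2_normalize(features) @ prototypes.T  # (N, num_classes)
    return 1.0 - sims.max(axis=1)


# Toy 2-D "features" standing in for embeddings from a frozen pre-trained model.
known = np.array([[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [0.0, 3.0]])
labels = np.array([0, 0, 1, 1])
classes, protos = build_prototypes(known, labels)
queries = np.array([[1.0, 0.1], [0.0, 2.0], [-1.0, 0.0]])
scores = ood_scores(queries, protos)
flagged = scores > 0.5  # hypothetical threshold
print(flagged)
```

No parameters are fitted here beyond averaging, which mirrors the snippet's point that the method needs no training on the domain dataset.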