Search Results for author: Kris Kitani

Found 81 papers, 27 papers with code

G-HOP: Generative Hand-Object Prior for Interaction Reconstruction and Grasp Synthesis

no code implementations • 18 Apr 2024 • Yufei Ye, Abhinav Gupta, Kris Kitani, Shubham Tulsiani

We propose G-HOP, a denoising diffusion based generative prior for hand-object interactions that allows modeling both the 3D object and a human hand, conditioned on the object category.

Bootstrapping Linear Models for Fast Online Adaptation in Human-Agent Collaboration

no code implementations • 16 Apr 2024 • Benjamin A Newman, Chris Paxton, Kris Kitani, Henny Admoni

Initializing policies to maximize performance with unknown partners can be achieved by bootstrapping nonlinear models using imitation learning over large, offline datasets.

Zero-Shot Multi-Object Shape Completion

no code implementations • 21 Mar 2024 • Shun Iwase, Katherine Liu, Vitor Guizilini, Adrien Gaidon, Kris Kitani, Rares Ambrus, Sergey Zakharov

We present a 3D shape completion method that recovers the complete geometry of multiple objects in complex scenes from a single RGB-D image.

Real-Time Simulated Avatar from Head-Mounted Sensors

no code implementations • 11 Mar 2024 • Zhengyi Luo, Jinkun Cao, Rawal Khirodkar, Alexander Winkler, Kris Kitani, Weipeng Xu

We present SimXR, a method for controlling a simulated avatar from information (headset pose and cameras) obtained from AR / VR headsets.

Learning Human-to-Humanoid Real-Time Whole-Body Teleoperation

no code implementations • 7 Mar 2024 • Tairan He, Zhengyi Luo, Wenli Xiao, Chong Zhang, Kris Kitani, Changliu Liu, Guanya Shi

We present Human to Humanoid (H2O), a reinforcement learning (RL) based framework that enables real-time whole-body teleoperation of a full-sized humanoid robot with only an RGB camera.

Multi-Object Tracking by Hierarchical Visual Representations

no code implementations • 24 Feb 2024 • Jinkun Cao, Jiangmiao Pang, Kris Kitani

We propose a new visual hierarchical representation paradigm for multi-object tracking.

Mixed Gaussian Flow for Diverse Trajectory Prediction

no code implementations • 19 Feb 2024 • Jiahe Chen, Jinkun Cao, Dahua Lin, Kris Kitani, Jiangmiao Pang

However, mapping from a standard Gaussian by a flow-based model hurts the capacity to capture complicated patterns of trajectories, ignoring the under-represented motion intentions in the training data.

Multi-Person 3D Pose Estimation from Multi-View Uncalibrated Depth Cameras

no code implementations • 28 Jan 2024 • Yu-Jhe Li, Yan Xu, Rawal Khirodkar, Jinhyung Park, Kris Kitani

In order to evaluate our proposed pipeline, we collect three sets of RGBD videos recorded from multiple sparse-view depth cameras, with manually annotated ground-truth 3D poses.

Multi-View Person Matching and 3D Pose Estimation with Arbitrary Uncalibrated Camera Networks

no code implementations • 4 Dec 2023 • Yan Xu, Kris Kitani

The 2D human poses used in clustering are obtained through a pre-trained 2D pose detector, so our method does not require expensive 3D training data for each new scene.

Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives

no code implementations • 30 Nov 2023 • Kristen Grauman, Andrew Westbury, Lorenzo Torresani, Kris Kitani, Jitendra Malik, Triantafyllos Afouras, Kumar Ashutosh, Vijay Baiyya, Siddhant Bansal, Bikram Boote, Eugene Byrne, Zach Chavis, Joya Chen, Feng Cheng, Fu-Jen Chu, Sean Crane, Avijit Dasgupta, Jing Dong, Maria Escobar, Cristhian Forigua, Abrham Gebreselasie, Sanjay Haresh, Jing Huang, Md Mohaiminul Islam, Suyog Jain, Rawal Khirodkar, Devansh Kukreja, Kevin J Liang, Jia-Wei Liu, Sagnik Majumder, Yongsen Mao, Miguel Martin, Effrosyni Mavroudi, Tushar Nagarajan, Francesco Ragusa, Santhosh Kumar Ramakrishnan, Luigi Seminara, Arjun Somayazulu, Yale Song, Shan Su, Zihui Xue, Edward Zhang, Jinxu Zhang, Angela Castillo, Changan Chen, Xinzhu Fu, Ryosuke Furuta, Cristina Gonzalez, Prince Gupta, Jiabo Hu, Yifei HUANG, Yiming Huang, Weslie Khoo, Anush Kumar, Robert Kuo, Sach Lakhavani, Miao Liu, Mi Luo, Zhengyi Luo, Brighid Meredith, Austin Miller, Oluwatumininu Oguntola, Xiaqing Pan, Penny Peng, Shraman Pramanick, Merey Ramazanova, Fiona Ryan, Wei Shan, Kiran Somasundaram, Chenan Song, Audrey Southerland, Masatoshi Tateno, Huiyu Wang, Yuchen Wang, Takuma Yagi, Mingfei Yan, Xitong Yang, Zecheng Yu, Shengxin Cindy Zha, Chen Zhao, Ziwei Zhao, Zhifan Zhu, Jeff Zhuo, Pablo Arbelaez, Gedas Bertasius, David Crandall, Dima Damen, Jakob Engel, Giovanni Maria Farinella, Antonino Furnari, Bernard Ghanem, Judy Hoffman, C. V. Jawahar, Richard Newcombe, Hyun Soo Park, James M. Rehg, Yoichi Sato, Manolis Savva, Jianbo Shi, Mike Zheng Shou, Michael Wray

We present Ego-Exo4D, a diverse, large-scale multimodal multiview video dataset and benchmark challenge.

Universal Humanoid Motion Representations for Physics-Based Control

no code implementations • 6 Oct 2023 • Zhengyi Luo, Jinkun Cao, Josh Merel, Alexander Winkler, Jing Huang, Kris Kitani, Weipeng Xu

We close this gap by significantly increasing the coverage of our motion representation space.

TEMPO: Efficient Multi-View Pose Estimation, Tracking, and Forecasting

no code implementations • ICCV 2023 • Rohan Choudhury, Kris Kitani, Laszlo A. Jeni

In doing so, our model is able to use spatiotemporal context to predict more accurate human poses without sacrificing efficiency.

EgoHumans: An Egocentric 3D Multi-Human Benchmark

no code implementations • 25 May 2023 • Rawal Khirodkar, Aayush Bansal, Lingni Ma, Richard Newcombe, Minh Vo, Kris Kitani

We present EgoHumans, a new multi-view multi-human video benchmark to advance the state-of-the-art of egocentric human 3D pose estimation and tracking.

Type-to-Track: Retrieve Any Object via Prompt-based Tracking

no code implementations • NeurIPS 2023 • Pha Nguyen, Kha Gia Quach, Kris Kitani, Khoa Luu

This paper introduces a novel paradigm for Multiple Object Tracking called Type-to-Track, which allows users to track objects in videos by typing natural language descriptions.

Joint Metrics Matter: A Better Standard for Trajectory Forecasting

1 code implementation • ICCV 2023 • Erica Weng, Hana Hoshino, Deva Ramanan, Kris Kitani

In response to the limitations of marginal metrics, we present the first comprehensive evaluation of state-of-the-art (SOTA) trajectory forecasting methods with respect to multi-agent metrics (joint metrics): JADE, JFDE, and collision rate.
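To illustrate what "joint" means here, the sketch below is a minimal Python version of JADE and JFDE. It assumes K sampled futures for N agents stored as `preds[k][n][t]` (x, y) points and a ground truth `gt[n][t]`; these names, this layout, and the choice to take each metric's minimum over samples independently are assumptions, not the paper's code. The key difference from marginal ADE is that a single sample index is scored for all agents at once.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _dist(a: Point, b: Point) -> float:
    """Euclidean (L2) distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def joint_ade_fde(preds: List[List[List[Point]]], gt: List[List[Point]]) -> Tuple[float, float]:
    """Return (JADE, JFDE): the best joint sample, scored over all agents together."""
    n_agents, horizon = len(gt), len(gt[0])
    best_ade = best_fde = math.inf
    for sample in preds:
        # One sample index k is evaluated for every agent at once (the "joint" part).
        ade = sum(
            _dist(sample[n][t], gt[n][t]) for n in range(n_agents) for t in range(horizon)
        ) / (n_agents * horizon)
        fde = sum(_dist(sample[n][-1], gt[n][-1]) for n in range(n_agents)) / n_agents
        best_ade = min(best_ade, ade)
        best_fde = min(best_fde, fde)
    return best_ade, best_fde


def marginal_ade(preds: List[List[List[Point]]], gt: List[List[Point]]) -> float:
    """Marginal minADE for contrast: each agent independently picks its own best sample."""
    n_agents, horizon = len(gt), len(gt[0])
    total = 0.0
    for n in range(n_agents):
        total += min(
            sum(_dist(s[n][t], gt[n][t]) for t in range(horizon)) / horizon for s in preds
        )
    return total / n_agents


gt = [[(0.0, 0.0), (1.0, 0.0)], [(0.0, 1.0), (1.0, 1.0)]]
preds = [
    [[(0.0, 0.0), (1.0, 0.0)], [(0.0, 2.0), (1.0, 2.0)]],    # agent 0 exact, agent 1 off by 1
    [[(0.0, -1.0), (1.0, -1.0)], [(0.0, 1.0), (1.0, 1.0)]],  # agent 0 off by 1, agent 1 exact
]
print(joint_ade_fde(preds, gt))  # (0.5, 0.5)
print(marginal_ade(preds, gt))   # 0.0
```

In this toy case each sample gets a different agent exactly right, so marginal ADE reaches 0 while JADE stays at 0.5, which is the kind of gap joint metrics are meant to expose.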

Perpetual Humanoid Control for Real-time Simulated Avatars

no code implementations • ICCV 2023 • Zhengyi Luo, Jinkun Cao, Alexander Winkler, Kris Kitani, Weipeng Xu

We present a physics-based humanoid controller that achieves high-fidelity motion imitation and fault-tolerant behavior in the presence of noisy input (e.g., pose estimates from video or generated from language) and unexpected falls.

Trace and Pace: Controllable Pedestrian Animation via Guided Trajectory Diffusion

no code implementations • CVPR 2023 • Davis Rempe, Zhengyi Luo, Xue Bin Peng, Ye Yuan, Kris Kitani, Karsten Kreis, Sanja Fidler, Or Litany

We introduce a method for generating realistic pedestrian trajectories and full-body animations that can be controlled to meet user-defined goals.

3D-CLFusion: Fast Text-to-3D Rendering with Contrastive Latent Diffusion

no code implementations • 21 Mar 2023 • Yu-Jhe Li, Tao Xu, Ji Hou, Bichen Wu, Xiaoliang Dai, Albert Pumarola, Peizhao Zhang, Peter Vajda, Kris Kitani

The novelty of our model lies in introducing contrastive learning while training the diffusion prior, which enables the generation of valid view-invariant latent codes.

Deep OC-SORT: Multi-Pedestrian Tracking by Adaptive Re-Identification

3 code implementations • 23 Feb 2023 • Gerard Maggiolino, Adnan Ahmad, Jinkun Cao, Kris Kitani

Motion-based association for Multi-Object Tracking (MOT) has recently re-achieved prominence with the rise of powerful object detectors.

Ranked #6 on Multi-Object Tracking on MOT17 (using extra training data)

Ego-Humans: An Ego-Centric 3D Multi-Human Benchmark

no code implementations • ICCV 2023 • Rawal Khirodkar, Aayush Bansal, Lingni Ma, Richard Newcombe, Minh Vo, Kris Kitani

We present EgoHumans, a new multi-view multi-human video benchmark to advance the state-of-the-art of egocentric human 3D pose estimation and tracking.

Azimuth Super-Resolution for FMCW Radar in Autonomous Driving

no code implementations • CVPR 2023 • Yu-Jhe Li, Shawn Hunt, Jinhyung Park, Matthew O’Toole, Kris Kitani

We also propose a hybrid super-resolution model (Hybrid-SR) combining our ADC-SR with a standard RAD super-resolution model, and show that performance can be improved by a large margin.

Learnable Spatio-Temporal Map Embeddings for Deep Inertial Localization

no code implementations • 14 Nov 2022 • Dennis Melamed, Karnik Ram, Vivek Roy, Kris Kitani

To address the robustness problem in map utilization, we propose a data-driven prior on possible user locations in a map by combining learned spatial map embeddings and temporal odometry embeddings.

3D-Aware Encoding for Style-based Neural Radiance Fields

no code implementations • 12 Nov 2022 • Yu-Jhe Li, Tao Xu, Bichen Wu, Ningyuan Zheng, Xiaoliang Dai, Albert Pumarola, Peizhao Zhang, Peter Vajda, Kris Kitani

In the first stage, we introduce a base encoder that converts the input image to a latent code.

Track Targets by Dense Spatio-Temporal Position Encoding

no code implementations • 17 Oct 2022 • Jinkun Cao, Hao Wu, Kris Kitani

Experiments on video multi-object tracking (MOT) and multi-object tracking and segmentation (MOTS) datasets demonstrate the effectiveness of the proposed DST position encoding.

Time Will Tell: New Outlooks and A Baseline for Temporal Multi-View 3D Object Detection

1 code implementation • 5 Oct 2022 • Jinhyung Park, Chenfeng Xu, Shijia Yang, Kurt Keutzer, Kris Kitani, Masayoshi Tomizuka, Wei Zhan

While recent camera-only 3D detection methods leverage multiple timesteps, the limited history they use significantly hampers the extent to which temporal fusion can improve object perception.

Ranked #1 on Robust Camera Only 3D Object Detection on nuScenes-C

Embodied Scene-aware Human Pose Estimation

no code implementations • 18 Jun 2022 • Zhengyi Luo, Shun Iwase, Ye Yuan, Kris Kitani

Since 2D third-person observations are coupled with the camera pose, we propose to disentangle the camera pose and use a multi-step projection gradient defined in the global coordinate frame as the movement cue for our embodied agent.

Ranked #306 on 3D Human Pose Estimation on Human3.6M

Online No-regret Model-Based Meta RL for Personalized Navigation

no code implementations • 5 Apr 2022 • Yuda Song, Ye Yuan, Wen Sun, Kris Kitani

Our theoretical analysis shows that our method is a no-regret algorithm and we provide the convergence rate in the agnostic setting.

Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking

7 code implementations • CVPR 2023 • Jinkun Cao, Jiangmiao Pang, Xinshuo Weng, Rawal Khirodkar, Kris Kitani

Instead of relying only on the linear state estimate (i.e., an estimation-centric approach), we use object observations (i.e., the measurements from the object detector) to compute a virtual trajectory over the occlusion period, which fixes the error accumulated in the filter parameters during occlusion.

Ranked #2 on Multiple Object Tracking on KITTI Tracking test
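A minimal sketch of the virtual-trajectory step described in the OC-SORT snippet, assuming constant-velocity linear interpolation between the last observation before the occlusion and the observation that re-associates the track. Boxes are hypothetical `(x_center, y_center, width, height)` tuples, and `virtual_trajectory` is an illustrative name, not the released code's API. Feeding these virtual observations back through the filter's update step is left out.

```python
from typing import List, Tuple

# Hypothetical box format: (x_center, y_center, width, height).
Box = Tuple[float, float, float, float]


def virtual_trajectory(last_obs: Box, t_last: int, new_obs: Box, t_new: int) -> List[Tuple[int, Box]]:
    """Interpolate virtual observations for the frames strictly between t_last and t_new."""
    if t_new <= t_last:
        raise ValueError("t_new must be later than t_last")
    gap = t_new - t_last
    virtual = []
    for t in range(t_last + 1, t_new):
        alpha = (t - t_last) / gap  # fraction of the occlusion gap elapsed at frame t
        box = tuple(a + alpha * (b - a) for a, b in zip(last_obs, new_obs))
        virtual.append((t, box))
    return virtual


# Track last seen at frame 0, re-associated at frame 4 after an occlusion.
for frame, box in virtual_trajectory((0.0, 0.0, 10.0, 10.0), 0, (4.0, 8.0, 10.0, 10.0), 4):
    print(frame, box)
```

Interpolating in observation space, rather than extrapolating the filter's own state, is what keeps the error from compounding while no detections arrive.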

Occluded Human Mesh Recovery

no code implementations • CVPR 2022 • Rawal Khirodkar, Shashank Tripathi, Kris Kitani

Along with the input image, we condition the top-down model on spatial context from the image in the form of body-center heatmaps.

Ranked #64 on 3D Human Pose Estimation on 3DPW (using extra training data)

Whose Track Is It Anyway? Improving Robustness to Tracking Errors With Affinity-Based Trajectory Prediction

no code implementations • CVPR 2022 • Xinshuo Weng, Boris Ivanovic, Kris Kitani, Marco Pavone

This is typically caused by the propagation of errors from tracking to prediction, such as noisy tracks, fragments, and identity switches.

Modality-Agnostic Learning for Radar-Lidar Fusion in Vehicle Detection

no code implementations • CVPR 2022 • Yu-Jhe Li, Jinhyung Park, Matthew O'Toole, Kris Kitani

To mitigate this problem, we propose the Self-Training Multimodal Vehicle Detection Network (ST-MVDNet) which leverages a Teacher-Student mutual learning framework and a simulated sensor noise model used in strong data augmentation for Lidar and Radar.

GLAMR: Global Occlusion-Aware Human Mesh Recovery with Dynamic Cameras

1 code implementation • CVPR 2022 • Ye Yuan, Umar Iqbal, Pavlo Molchanov, Kris Kitani, Jan Kautz

Since the joint reconstruction of human motions and camera poses is underconstrained, we propose a global trajectory predictor that generates global human trajectories based on local body movements.

Ranked #1 on Global 3D Human Pose Estimation on EMDB

DanceTrack: Multi-Object Tracking in Uniform Appearance and Diverse Motion

3 code implementations • CVPR 2022 • Peize Sun, Jinkun Cao, Yi Jiang, Zehuan Yuan, Song Bai, Kris Kitani, Ping Luo

A typical pipeline for multi-object tracking (MOT) is to use a detector for object localization, followed by re-identification (re-ID) for object association.

Cross-Domain Adaptive Teacher for Object Detection

2 code implementations • CVPR 2022 • Yu-Jhe Li, Xiaoliang Dai, Chih-Yao Ma, Yen-Cheng Liu, Kan Chen, Bichen Wu, Zijian He, Kris Kitani, Peter Vajda

To mitigate this problem, we propose a teacher-student framework named Adaptive Teacher (AT) which leverages domain adversarial learning and weak-strong data augmentation to address the domain gap.

AEI: Actors-Environment Interaction with Adaptive Attention for Temporal Action Proposals Generation

1 code implementation • 21 Oct 2021 • Khoa Vo, Hyekang Joo, Kashu Yamazaki, Sang Truong, Kris Kitani, Minh-Triet Tran, Ngan Le

In this paper, we make an attempt to simulate that ability of a human by proposing Actor Environment Interaction (AEI) network to improve the video representation for temporal action proposals generation.

Ego4D: Around the World in 3,000 Hours of Egocentric Video

6 code implementations • CVPR 2022 • Kristen Grauman, Andrew Westbury, Eugene Byrne, Zachary Chavis, Antonino Furnari, Rohit Girdhar, Jackson Hamburger, Hao Jiang, Miao Liu, Xingyu Liu, Miguel Martin, Tushar Nagarajan, Ilija Radosavovic, Santhosh Kumar Ramakrishnan, Fiona Ryan, Jayant Sharma, Michael Wray, Mengmeng Xu, Eric Zhongcong Xu, Chen Zhao, Siddhant Bansal, Dhruv Batra, Vincent Cartillier, Sean Crane, Tien Do, Morrie Doulaty, Akshay Erapalli, Christoph Feichtenhofer, Adriano Fragomeni, Qichen Fu, Abrham Gebreselasie, Cristina Gonzalez, James Hillis, Xuhua Huang, Yifei HUANG, Wenqi Jia, Weslie Khoo, Jachym Kolar, Satwik Kottur, Anurag Kumar, Federico Landini, Chao Li, Yanghao Li, Zhenqiang Li, Karttikeya Mangalam, Raghava Modhugu, Jonathan Munro, Tullie Murrell, Takumi Nishiyasu, Will Price, Paola Ruiz Puentes, Merey Ramazanova, Leda Sari, Kiran Somasundaram, Audrey Southerland, Yusuke Sugano, Ruijie Tao, Minh Vo, Yuchen Wang, Xindi Wu, Takuma Yagi, Ziwei Zhao, Yunyi Zhu, Pablo Arbelaez, David Crandall, Dima Damen, Giovanni Maria Farinella, Christian Fuegen, Bernard Ghanem, Vamsi Krishna Ithapu, C. V. Jawahar, Hanbyul Joo, Kris Kitani, Haizhou Li, Richard Newcombe, Aude Oliva, Hyun Soo Park, James M. Rehg, Yoichi Sato, Jianbo Shi, Mike Zheng Shou, Antonio Torralba, Lorenzo Torresani, Mingfei Yan, Jitendra Malik

We introduce Ego4D, a massive-scale egocentric video dataset and benchmark suite.

Transform2Act: Learning a Transform-and-Control Policy for Efficient Agent Design

1 code implementation • ICLR 2022 • Ye Yuan, Yuda Song, Zhengyi Luo, Wen Sun, Kris Kitani

Specifically, we learn a conditional policy that, in an episode, first applies a sequence of transform actions to modify an agent's skeletal structure and joint attributes, and then applies control actions under the new design.

Multi-Echo LiDAR for 3D Object Detection

no code implementations • ICCV 2021 • Yunze Man, Xinshuo Weng, Prasanna Kumar Sivakuma, Matthew O'Toole, Kris Kitani

LiDAR sensors can be used to obtain a wide range of measurement signals other than a simple 3D point cloud, and those signals can be leveraged to improve perception tasks like 3D object detection.

Multi-Modality Task Cascade for 3D Object Detection

1 code implementation • 8 Jul 2021 • Jinhyung Park, Xinshuo Weng, Yunze Man, Kris Kitani

To provide a more integrated approach, we propose a novel Multi-Modality Task Cascade network (MTC-RCNN) that leverages 3D box proposals to improve 2D segmentation predictions, which are then used to further refine the 3D boxes.

Dynamics-Regulated Kinematic Policy for Egocentric Pose Estimation

1 code implementation • NeurIPS 2021 • Zhengyi Luo, Ryo Hachiuma, Ye Yuan, Kris Kitani

By comparing the pose instructed by the kinematic model against the pose generated by the dynamics model, we can use their misalignment to further improve the kinematic model.

Wide-Baseline Multi-Camera Calibration using Person Re-Identification

no code implementations • CVPR 2021 • Yan Xu, Yu-Jhe Li, Xinshuo Weng, Kris Kitani

We address the problem of estimating the 3D pose of a network of cameras for large-environment wide-baseline scenarios, e.g., cameras for construction sites, sports stadiums, and public spaces.

SimPoE: Simulated Character Control for 3D Human Pose Estimation

no code implementations • CVPR 2021 • Ye Yuan, Shih-En Wei, Tomas Simon, Kris Kitani, Jason Saragih

Based on this refined kinematic pose, the policy learns to compute dynamics-based control (e.g., joint torques) of the character to advance the current-frame pose estimate to the pose estimate of the next frame.

Ranked #229 on 3D Human Pose Estimation on Human3.6M

AgentFormer: Agent-Aware Transformers for Socio-Temporal Multi-Agent Forecasting

2 code implementations • ICCV 2021 • Ye Yuan, Xinshuo Weng, Yanglan Ou, Kris Kitani

Instead, we would prefer a method that allows an agent's state at one time to directly affect another agent's state at a future time.

Ranked #10 on Trajectory Prediction on ETH/UCY

Efficient Model Performance Estimation via Feature Histories

no code implementations • 7 Mar 2021 • Shengcao Cao, Xiaofang Wang, Kris Kitani

Using a sampling-based search algorithm and parallel computing, our method can find an architecture that is better than DARTS with an 80% reduction in wall-clock search time.

Inverse Reinforcement Learning with Explicit Policy Estimates

no code implementations • 4 Mar 2021 • Navyata Sanghvi, Shinnosuke Usami, Mohit Sharma, Joachim Groeger, Kris Kitani

Various methods for solving the inverse reinforcement learning (IRL) problem have been developed independently in machine learning and economics.

DeepBLE: Generalizing RSSI-based Localization Across Different Devices

no code implementations • 27 Feb 2021 • Harsh Agarwal, Navyata Sanghvi, Vivek Roy, Kris Kitani

Accurate smartphone localization (< 1-meter error) for indoor navigation using only RSSI received from a set of BLE beacons remains a challenging problem, due to the inherent noise of RSSI measurements.

IDOL: Inertial Deep Orientation-Estimation and Localization

1 code implementation • 8 Feb 2021 • Scott Sun, Dennis Melamed, Kris Kitani

Many smartphone applications use inertial measurement units (IMUs) to sense movement, but the use of these sensors for pedestrian localization can be challenging due to their noise characteristics.

AutoSelect: Automatic and Dynamic Detection Selection for 3D Multi-Object Tracking

no code implementations • 10 Dec 2020 • Xinshuo Weng, Kris Kitani

Also, this threshold is sensitive to many factors, such as the target object category, so it must be re-searched whenever these factors change.

Rethinking Transformer-based Set Prediction for Object Detection

1 code implementation • ICCV 2021 • Zhiqing Sun, Shengcao Cao, Yiming Yang, Kris Kitani

DETR is a recently proposed Transformer-based method which views object detection as a set prediction problem and achieves state-of-the-art performance but demands extra-long training time to converge.

Audio-Visual Self-Supervised Terrain Type Discovery for Mobile Platforms

no code implementations • 13 Oct 2020 • Akiyoshi Kurobe, Yoshikatsu Nakajima, Hideo Saito, Kris Kitani

The ability to both recognize and discover terrain characteristics is an important function required for many autonomous ground robots such as social robots, assistive robots, autonomous vehicles, and ground exploration robots.

End-to-End 3D Multi-Object Tracking and Trajectory Forecasting

no code implementations • 25 Aug 2020 • Xinshuo Weng, Ye Yuan, Kris Kitani

To evaluate this hypothesis, we propose a unified solution for 3D MOT and trajectory forecasting which also incorporates two additional novel computational units.

Few-Shot Learning with Intra-Class Knowledge Transfer

no code implementations • 22 Aug 2020 • Vivek Roy, Yan Xu, Yu-Xiong Wang, Kris Kitani, Ruslan Salakhutdinov, Martial Hebert

Recent works have proposed to solve this task by augmenting the training data of the few-shot classes using generative models with the few-shot training samples as the seeds.

Graph Neural Networks for 3D Multi-Object Tracking

no code implementations • 20 Aug 2020 • Xinshuo Weng, Yongxin Wang, Yunze Man, Kris Kitani

3D multi-object tracking (MOT) is crucial to autonomous systems.

AB3DMOT: A Baseline for 3D Multi-Object Tracking and New Evaluation Metrics

no code implementations • 18 Aug 2020 • Xinshuo Weng, Jianren Wang, David Held, Kris Kitani

Additionally, 3D MOT datasets such as KITTI evaluate MOT methods in 2D space and standardized 3D MOT evaluation tools are missing for a fair comparison of 3D MOT methods.

Efficient Non-Line-of-Sight Imaging from Transient Sinograms

no code implementations • ECCV 2020 • Mariko Isogawa, Dorian Chan, Ye Yuan, Kris Kitani, Matthew O'Toole

Non-line-of-sight (NLOS) imaging techniques use light that diffusely reflects off of visible surfaces (e.g., walls) to see around corners.

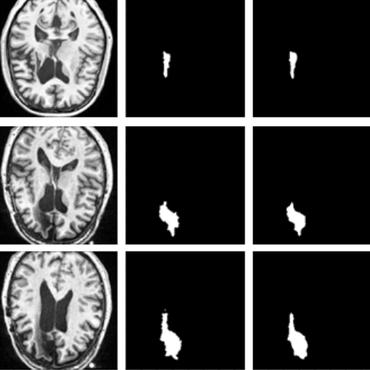

Improving Lesion Segmentation for Diabetic Retinopathy using Adversarial Learning

1 code implementation • 27 Jul 2020 • Qiqi Xiao, Jiaxu Zou, Muqiao Yang, Alex Gaudio, Kris Kitani, Asim Smailagic, Pedro Costa, Min Xu

Diabetic Retinopathy (DR) is a leading cause of blindness in working age adults.

Joint Object Detection and Multi-Object Tracking with Graph Neural Networks

1 code implementation • 23 Jun 2020 • Yongxin Wang, Kris Kitani, Xinshuo Weng

Despite the fact that the two components are dependent on each other, prior works often design detection and data association modules separately which are trained with separate objectives.

Ranked #1 on Multi-Object Tracking on 2D MOT 2015

When We First Met: Visual-Inertial Person Localization for Co-Robot Rendezvous

no code implementations • 17 Jun 2020 • Xi Sun, Xinshuo Weng, Kris Kitani

We propose a method to learn a visual-inertial feature space in which the motion of a person in video can be easily matched to the motion measured by a wearable inertial measurement unit (IMU).

Residual Force Control for Agile Human Behavior Imitation and Extended Motion Synthesis

1 code implementation • NeurIPS 2020 • Ye Yuan, Kris Kitani

Our approach is the first humanoid control method that successfully learns from a large-scale human motion dataset (Human3.6M) and generates diverse long-term motions.

GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking with Multi-Feature Learning

1 code implementation • 12 Jun 2020 • Xinshuo Weng, Yongxin Wang, Yunze Man, Kris Kitani

As a result, the feature of one object is informed by the features of other objects, so that it leans toward objects with similar features (i.e., objects probably with the same ID) and deviates from objects with dissimilar features (i.e., objects probably with different IDs), leading to a more discriminative feature for each object; (2) instead of obtaining the feature from either 2D or 3D space as in prior work, we propose a novel joint feature extractor to learn appearance and motion features from 2D and 3D space simultaneously.

No-Reference Image Quality Assessment via Feature Fusion and Multi-Task Learning

no code implementations • 6 Jun 2020 • S. Alireza Golestaneh, Kris Kitani

In our experiments, we demonstrate that by utilizing multi-task learning and our proposed feature fusion method, our model yields better performance for the NR-IQA task.

Optical Non-Line-of-Sight Physics-based 3D Human Pose Estimation

1 code implementation • CVPR 2020 • Mariko Isogawa, Ye Yuan, Matthew O'Toole, Kris Kitani

We bring together a diverse set of technologies from NLOS imaging, human pose estimation and deep reinforcement learning to construct an end-to-end data processing pipeline that converts a raw stream of photon measurements into a full 3D human pose sequence estimate.

DLow: Diversifying Latent Flows for Diverse Human Motion Prediction

1 code implementation • ECCV 2020 • Ye Yuan, Kris Kitani

To obtain samples from a pretrained generative model, most existing generative human motion prediction methods draw a set of independent Gaussian latent codes and convert them to motion samples.

Ranked #1 on Human Pose Forecasting on AMASS (APD metric)

Inverting the Pose Forecasting Pipeline with SPF2: Sequential Pointcloud Forecasting for Sequential Pose Forecasting

no code implementations • 18 Mar 2020 • Xinshuo Weng, Jianren Wang, Sergey Levine, Kris Kitani, Nicholas Rhinehart

Through experiments on a robotic manipulation dataset and two driving datasets, we show that SPFNet is effective for the SPF task, our forecast-then-detect pipeline outperforms the detect-then-forecast approaches to which we compared, and that pose forecasting performance improves with the addition of unlabeled data.

PTP: Parallelized Tracking and Prediction with Graph Neural Networks and Diversity Sampling

no code implementations • 17 Mar 2020 • Xinshuo Weng, Ye Yuan, Kris Kitani

We evaluate on KITTI and nuScenes datasets showing that our method with socially-aware feature learning and diversity sampling achieves new state-of-the-art performance on 3D MOT and trajectory prediction.

Estimating 3D Camera Pose from 2D Pedestrian Trajectories

no code implementations • 12 Dec 2019 • Yan Xu, Vivek Roy, Kris Kitani

We propose an alternative strategy for extracting 3D information to solve for camera pose by using pedestrian trajectories.

Incremental Class Discovery for Semantic Segmentation with RGBD Sensing

no code implementations • ICCV 2019 • Yoshikatsu Nakajima, Byeongkeun Kang, Hideo Saito, Kris Kitani

This work addresses the task of open world semantic segmentation using RGBD sensing to discover new semantic classes over time.

Diverse Trajectory Forecasting with Determinantal Point Processes

no code implementations • ICLR 2020 • Ye Yuan, Kris Kitani

To learn the parameters of the diversity sampling function (DSF), the diversity of the trajectory samples is evaluated by a diversity loss based on a determinantal point process (DPP).

Ranked #5 on Human Pose Forecasting on HumanEva-I

3D Multi-Object Tracking: A Baseline and New Evaluation Metrics

1 code implementation • 9 Jul 2019 • Xinshuo Weng, Jianren Wang, David Held, Kris Kitani

Additionally, 3D MOT datasets such as KITTI evaluate MOT methods in the 2D space and standardized 3D MOT evaluation tools are missing for a fair comparison of 3D MOT methods.

Ranked #3 on 3D Multi-Object Tracking on KITTI

Ego-Pose Estimation and Forecasting as Real-Time PD Control

1 code implementation • ICCV 2019 • Ye Yuan, Kris Kitani

We propose the use of a proportional-derivative (PD) control based policy learned via reinforcement learning (RL) to estimate and forecast 3D human pose from egocentric videos.
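For reference, a PD controller computes a joint torque as τ = k_p (q_target − q) − k_d q̇. The sketch below assumes a single unit-inertia joint and a fixed target angle (in a learned setup like the one described, a policy would choose the target at each step); the gains, time step, and function names are illustrative assumptions rather than values from the paper.

```python
def pd_torque(q: float, qdot: float, q_target: float, kp: float, kd: float) -> float:
    """PD control law: pull toward the target angle and damp the joint velocity."""
    return kp * (q_target - q) - kd * qdot


def simulate_joint(q0: float, q_target: float, kp: float = 50.0, kd: float = 10.0,
                   inertia: float = 1.0, dt: float = 0.01, steps: int = 500) -> float:
    """Roll out one joint under PD torque with semi-implicit Euler; return the final angle."""
    q, qdot = q0, 0.0
    for _ in range(steps):
        tau = pd_torque(q, qdot, q_target, kp, kd)
        qdot += (tau / inertia) * dt  # update velocity first (semi-implicit Euler)
        q += qdot * dt
    return q


print(round(simulate_joint(0.0, 1.0), 3))  # settles near the 1.0 target
```

The derivative term is what keeps the joint from oscillating around the target; with these assumed gains the system is roughly critically damped.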

Doctor of Crosswise: Reducing Over-parametrization in Neural Networks

1 code implementation • 24 May 2019 • J. D. Curtó, I. C. Zarza, Kris Kitani, Irwin King, Michael R. Lyu

Dr. of Crosswise proposes a new architecture to reduce over-parametrization in neural networks.

Learning Spatio-Temporal Features with Two-Stream Deep 3D CNNs for Lipreading

no code implementations • 4 May 2019 • Xinshuo Weng, Kris Kitani

We evaluate different combinations of front-end and back-end modules with the grayscale video and optical flow inputs on the LRW dataset.

PRECOG: PREdiction Conditioned On Goals in Visual Multi-Agent Settings

2 code implementations • ICCV 2019 • Nicholas Rhinehart, Rowan Mcallister, Kris Kitani, Sergey Levine

For autonomous vehicles (AVs) to behave appropriately on roads populated by human-driven vehicles, they must be able to reason about the uncertain intentions and decisions of other drivers from rich perceptual information.

Monocular 3D Object Detection with Pseudo-LiDAR Point Cloud

1 code implementation • 23 Mar 2019 • Xinshuo Weng, Kris Kitani

Following the pipeline of two-stage 3D detection algorithms, we detect 2D object proposals in the input image and extract a point cloud frustum from the pseudo-LiDAR for each proposal.
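The frustum-extraction step can be pictured with a standard pinhole back-projection: each pixel (u, v) with estimated depth z inside a 2D proposal becomes the camera-frame point ((u − c_x) z / f_x, (v − c_y) z / f_y, z). This is a minimal sketch assuming a dense depth map stored as nested lists, a half-open pixel box `(u0, v0, u1, v1)`, and hypothetical intrinsics; `frustum_points` is an illustrative name, not the paper's implementation.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def frustum_points(depth: List[List[float]], box: Tuple[int, int, int, int],
                   fx: float, fy: float, cx: float, cy: float) -> List[Point3]:
    """Back-project depth pixels inside a half-open 2D box (u0, v0, u1, v1) to 3D points."""
    u0, v0, u1, v1 = box
    points = []
    for v in range(v0, v1):          # image rows
        for u in range(u0, u1):      # image columns
            z = depth[v][u]
            if z <= 0:               # skip pixels with no valid depth estimate
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


depth_map = [[2.0, 2.0, 0.0],
             [2.0, 2.0, 4.0]]
print(frustum_points(depth_map, (0, 0, 2, 2), fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Only the points whose pixels fall inside the proposal are kept, so a downstream 3D box estimator sees a small per-object point cloud instead of the whole scene.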

MGpi: A Computational Model of Multiagent Group Perception and Interaction

1 code implementation • 4 Mar 2019 • Navyata Sanghvi, Ryo Yonetani, Kris Kitani

Toward enabling next-generation robots capable of socially intelligent interaction with humans, we present a computational model of interactions in a social environment of multiple agents and multiple groups.

GroundNet: Monocular Ground Plane Normal Estimation with Geometric Consistency

no code implementations • 17 Nov 2018 • Yunze Man, Xinshuo Weng, Xi Li, Kris Kitani

We focus on estimating the 3D orientation of the ground plane from a single image.

3D Ego-Pose Estimation via Imitation Learning

no code implementations • ECCV 2018 • Ye Yuan, Kris Kitani

Motivated by this, we propose a novel control-based approach to model human motion with physics simulation and use imitation learning to learn a video-conditioned control policy for ego-pose estimation.

Understanding hand-object manipulation by modeling the contextual relationship between actions, grasp types and object attributes

no code implementations • 22 Jul 2018 • Minjie Cai, Kris Kitani, Yoichi Sato

In the proposed model, we explore various semantic relationships between actions, grasp types and object attributes, and show how the context can be used to boost the recognition of each component.

Personalized Dynamics Models for Adaptive Assistive Navigation Systems

no code implementations • 11 Apr 2018 • Eshed Ohn-Bar, Kris Kitani, Chieko Asakawa

Consider an assistive system that guides visually impaired users through speech and haptic feedback to their destination.

Rotational Rectification Network: Enabling Pedestrian Detection for Mobile Vision

no code implementations • 19 Jun 2017 • Xinshuo Weng, Shangxuan Wu, Fares Beainy, Kris Kitani

To address this issue, we propose a Rotational Rectification Network (R2N) that can be inserted into any CNN-based pedestrian (or object) detector to adapt it to significant changes in camera rotation.

Visual Compiler: Synthesizing a Scene-Specific Pedestrian Detector and Pose Estimator

no code implementations • 15 Dec 2016 • Namhoon Lee, Xinshuo Weng, Vishnu Naresh Boddeti, Yu Zhang, Fares Beainy, Kris Kitani, Takeo Kanade

We introduce the concept of a Visual Compiler that generates a scene-specific pedestrian detector and pose estimator without any pedestrian observations.