Search Results for author: Kyle Min

Found 12 papers, 8 papers with code

Hierarchical Novelty Detection for Visual Object Recognition

no code implementations • CVPR 2018 • Kibok Lee, Kimin Lee, Kyle Min, Yuting Zhang, Jinwoo Shin, Honglak Lee

The essential ingredients of our methods are confidence-calibrated classifiers, data relabeling, and the leave-one-out strategy for modeling novel classes under the hierarchical taxonomy.

TASED-Net: Temporally-Aggregating Spatial Encoder-Decoder Network for Video Saliency Detection

1 code implementation • ICCV 2019 • Kyle Min, Jason J. Corso

It consists of two building blocks: first, the encoder network extracts low-resolution spatiotemporal features from an input clip of several consecutive frames, and then the following prediction network decodes the encoded features spatially while aggregating all the temporal information.

Adversarial Background-Aware Loss for Weakly-supervised Temporal Activity Localization

1 code implementation • ECCV 2020 • Kyle Min, Jason J. Corso

Two triplets of the feature space are considered in our approach: one triplet is used to learn discriminative features for each activity class, and the other one is used to distinguish the features where no activity occurs (i.e., background features) from activity-related features for each video.

Integrating Human Gaze into Attention for Egocentric Activity Recognition

1 code implementation • 8 Nov 2020 • Kyle Min, Jason J. Corso

In addition, we model the distribution of gaze fixations using a variational method.

Ranked #2 on Egocentric Activity Recognition on EGTEA

Learning Spatial-Temporal Graphs for Active Speaker Detection

no code implementations • 2 Dec 2021 • Sourya Roy, Kyle Min, Subarna Tripathi, Tanaya Guha, Somdeb Majumdar

We address the problem of active speaker detection through a new framework, called SPELL, that learns long-range multimodal graphs to encode the inter-modal relationship between audio and visual data.

Learning Long-Term Spatial-Temporal Graphs for Active Speaker Detection

2 code implementations • 15 Jul 2022 • Kyle Min, Sourya Roy, Subarna Tripathi, Tanaya Guha, Somdeb Majumdar

Active speaker detection (ASD) in videos with multiple speakers is a challenging task as it requires learning effective audiovisual features and spatial-temporal correlations over long temporal windows.

Ranked #1 on Node Classification on AVA

Intel Labs at Ego4D Challenge 2022: A Better Baseline for Audio-Visual Diarization

no code implementations • 14 Oct 2022 • Kyle Min

This report describes our approach for the Audio-Visual Diarization (AVD) task of the Ego4D Challenge 2022.

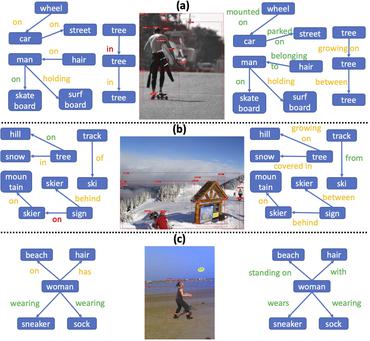

Unbiased Scene Graph Generation in Videos

1 code implementation • CVPR 2023 • Sayak Nag, Kyle Min, Subarna Tripathi, Amit K. Roy-Chowdhury

The task of dynamic scene graph generation (SGG) from videos is complicated and challenging due to the inherent dynamics of a scene, temporal fluctuation of model predictions, and the long-tailed distribution of the visual relationships in addition to the already existing challenges in image-based SGG.

SViTT: Temporal Learning of Sparse Video-Text Transformers

1 code implementation • CVPR 2023 • Yi Li, Kyle Min, Subarna Tripathi, Nuno Vasconcelos

Do video-text transformers learn to model temporal relationships across frames?

Ranked #4 on Video Question Answering on AGQA 2.0 balanced (Average Accuracy metric)

WOUAF: Weight Modulation for User Attribution and Fingerprinting in Text-to-Image Diffusion Models

no code implementations • 7 Jun 2023 • Changhoon Kim, Kyle Min, Maitreya Patel, Sheng Cheng, Yezhou Yang

The rapid advancement of generative models, facilitating the creation of hyper-realistic images from textual descriptions, has concurrently escalated critical societal concerns such as misinformation.

STHG: Spatial-Temporal Heterogeneous Graph Learning for Advanced Audio-Visual Diarization

2 code implementations • 18 Jun 2023 • Kyle Min

This report introduces our novel method named STHG for the Audio-Visual Diarization task of the Ego4D Challenge 2023.

Action Scene Graphs for Long-Form Understanding of Egocentric Videos

1 code implementation • 6 Dec 2023 • Ivan Rodin, Antonino Furnari, Kyle Min, Subarna Tripathi, Giovanni Maria Farinella

We present Egocentric Action Scene Graphs (EASGs), a new representation for long-form understanding of egocentric videos.