Search Results for author: Matthew Brown

Found 24 papers, 10 papers with code

MoViNets: Mobile Video Networks for Efficient Video Recognition

3 code implementations • CVPR 2021 • Dan Kondratyuk, Liangzhe Yuan, Yandong Li, Li Zhang, Mingxing Tan, Matthew Brown, Boqing Gong

We present Mobile Video Networks (MoViNets), a family of computation and memory efficient video networks that can operate on streaming video for online inference.

Ranked #3 on Action Classification on Charades

Unsupervised Learning of Depth and Ego-Motion from Video

2 code implementations • CVPR 2017 • Tinghui Zhou, Matthew Brown, Noah Snavely, David G. Lowe

We present an unsupervised learning framework for the task of monocular depth and camera motion estimation from unstructured video sequences.

Measuring the Effects of Non-Identical Data Distribution for Federated Visual Classification

8 code implementations • 13 Sep 2019 • Tzu-Ming Harry Hsu, Hang Qi, Matthew Brown

In this work, we look at the effect such non-identical data distributions have on visual classification via Federated Learning.

AirSim Drone Racing Lab

2 code implementations • 12 Mar 2020 • Ratnesh Madaan, Nicholas Gyde, Sai Vemprala, Matthew Brown, Keiko Nagami, Tim Taubner, Eric Cristofalo, Davide Scaramuzza, Mac Schwager, Ashish Kapoor

Autonomous drone racing is a challenging research problem at the intersection of computer vision, planning, state estimation, and control.

Rethinking Class-Balanced Methods for Long-Tailed Visual Recognition from a Domain Adaptation Perspective

1 code implementation • CVPR 2020 • Muhammad Abdullah Jamal, Matthew Brown, Ming-Hsuan Yang, Liqiang Wang, Boqing Gong

Object frequency in the real world often follows a power law, leading to a mismatch between datasets with long-tailed class distributions seen by a machine learning model and our expectation of the model to perform well on all classes.

Ranked #27 on Long-tail Learning on Places-LT

2.5D Visual Relationship Detection

1 code implementation • 26 Apr 2021 • Yu-Chuan Su, Soravit Changpinyo, Xiangning Chen, Sathish Thoppay, Cho-Jui Hsieh, Lior Shapira, Radu Soricut, Hartwig Adam, Matthew Brown, Ming-Hsuan Yang, Boqing Gong

To enable progress on this task, we create a new dataset consisting of 220k human-annotated 2.5D relationships among 512K objects from 11K images.

Federated Visual Classification with Real-World Data Distribution

1 code implementation • ECCV 2020 • Tzu-Ming Harry Hsu, Hang Qi, Matthew Brown

Furthermore, differing quantities of data are typically available at each device (imbalance).

Decision Forests, Convolutional Networks and the Models in-Between

1 code implementation • 3 Mar 2016 • Yani Ioannou, Duncan Robertson, Darko Zikic, Peter Kontschieder, Jamie Shotton, Matthew Brown, Antonio Criminisi

We present a systematic analysis of how to fuse conditional computation with representation learning and achieve a continuum of hybrid models with different ratios of accuracy vs. efficiency.

Low-Shot Learning with Imprinted Weights

1 code implementation • CVPR 2018 • Hang Qi, Matthew Brown, David G. Lowe

We call this process weight imprinting as it directly sets weights for a new category based on an appropriately scaled copy of the embedding layer activations for that training example.
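
As a rough illustration of the idea in this snippet, the sketch below adds a classifier row for a new category from a single embedding. It assumes that "appropriately scaled" means L2 normalization and that classification uses a dot product against normalized embeddings; the function names (`l2_normalize`, `imprint_weight`, `predict`) and the toy vectors are hypothetical and not taken from the paper's code.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (assumed form of the 'appropriate scaling')."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def imprint_weight(weights, embedding):
    """Return a new weight matrix with one extra row for the new category.

    The new row is the normalized embedding of a single training example.
    """
    return weights + [l2_normalize(embedding)]


def predict(weights, embedding):
    """Pick the class whose weight row has the highest cosine similarity."""
    query = l2_normalize(embedding)
    scores = [sum(w * q for w, q in zip(row, query)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)


# Two existing classes with toy 3-dimensional weight rows.
base_weights = [l2_normalize([1.0, 0.0, 0.0]), l2_normalize([0.0, 1.0, 0.0])]

# One example of a novel category; its embedding becomes the class weight.
novel_example = [0.1, 0.2, 3.0]
weights = imprint_weight(base_weights, novel_example)

# A query close to the novel example is assigned to the new class (index 2).
novel_prediction = predict(weights, [0.0, 0.1, 2.0])
base_prediction = predict(weights, [5.0, 0.1, 0.0])
```

No gradient step is involved here: the new class becomes usable immediately after the single row is appended.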

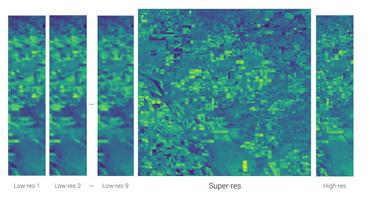

Frame-Recurrent Video Super-Resolution

no code implementations • CVPR 2018 • Mehdi S. M. Sajjadi, Raviteja Vemulapalli, Matthew Brown

Recent advances in video super-resolution have shown that convolutional neural networks combined with motion compensation are able to merge information from multiple low-resolution (LR) frames to generate high-quality images.

Ranked #6 on Video Super-Resolution on MSU Video Upscalers: Quality Enhancement (VMAF metric)

Learning to Segment via Cut-and-Paste

1 code implementation • ECCV 2018 • Tal Remez, Jonathan Huang, Matthew Brown

This paper presents a weakly-supervised approach to object instance segmentation.

Deep Reinforcement Learning for Dexterous Manipulation with Concept Networks

no code implementations • 20 Sep 2017 • Aditya Gudimella, Ross Story, Matineh Shaker, Ruofan Kong, Matthew Brown, Victor Shnayder, Marcos Campos

Deep reinforcement learning yields great results for a large array of problems, but models are generally retrained anew for each new problem to be solved.

Pose2Instance: Harnessing Keypoints for Person Instance Segmentation

no code implementations • 4 Apr 2017 • Subarna Tripathi, Maxwell Collins, Matthew Brown, Serge Belongie

In a more realistic environment, without the oracle keypoints, the proposed deep person instance segmentation model conditioned on human pose achieves 3.8% to 10.5% relative improvements compared with its strongest baseline of a deep network trained only for segmentation.

Nonrigid Optical Flow Ground Truth for Real-World Scenes with Time-Varying Shading Effects

no code implementations • 26 Mar 2016 • Wenbin Li, Darren Cosker, Zhihan Lv, Matthew Brown

In this paper we present a dense ground truth dataset of nonrigidly deforming real-world scenes.

Drift Robust Non-rigid Optical Flow Enhancement for Long Sequences

no code implementations • 7 Mar 2016 • Wenbin Li, Darren Cosker, Matthew Brown

We demonstrate the success of our approach by showing significant error reduction on 6 popular optical flow algorithms applied to a range of real-world nonrigid benchmarks.

Extreme Augmentation : Can deep learning based medical image segmentation be trained using a single manually delineated scan?

no code implementations • 3 Oct 2018 • Bilwaj Gaonkar, Matthew Edwards, Alex Bui, Matthew Brown, Luke Macyszyn

In the extreme, we observed that a model trained on patches extracted from just one scan, with each patch augmented 50 times, achieved a Dice score of 0.73 in a validation set of 40 cases.

Optical Flow Estimation Using Laplacian Mesh Energy

no code implementations • CVPR 2013 • Wenbin Li, Darren Cosker, Matthew Brown, Rui Tang

In this paper we present a novel non-rigid optical flow algorithm for dense image correspondence and non-rigid registration.

Learning Similarity Metrics for Dynamic Scene Segmentation

no code implementations • CVPR 2015 • Damien Teney, Matthew Brown, Dmitry Kit, Peter Hall

This paper addresses the segmentation of videos with arbitrary motion, including dynamic textures, using novel motion features and a supervised learning approach.

Enhancing Video Summarization via Vision-Language Embedding

no code implementations • CVPR 2017 • Bryan A. Plummer, Matthew Brown, Svetlana Lazebnik

This paper addresses video summarization, or the problem of distilling a raw video into a shorter form while still capturing the original story.

When Ensembling Smaller Models is More Efficient than Single Large Models

no code implementations • 1 May 2020 • Dan Kondratyuk, Mingxing Tan, Matthew Brown, Boqing Gong

Ensembling is a simple and popular technique for boosting evaluation performance by training multiple models (e.g., with different initializations) and aggregating their predictions.
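
One common way to aggregate predictions is to average the class probabilities produced by each model. The sketch below assumes that form of aggregation; the snippet does not say which rule the paper uses, and `ensemble_average`, `argmax`, and the toy probabilities are hypothetical names and values chosen for illustration.

```python
def ensemble_average(prob_lists):
    """Average per-class probabilities across several models."""
    if not prob_lists:
        raise ValueError("need at least one model's predictions")
    n_classes = len(prob_lists[0])
    if any(len(probs) != n_classes for probs in prob_lists):
        raise ValueError("all models must predict the same number of classes")
    n_models = len(prob_lists)
    return [sum(probs[c] for probs in prob_lists) / n_models
            for c in range(n_classes)]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


# Toy class probabilities from three models for one input.
model_outputs = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.6, 0.3],
]

averaged = ensemble_average(model_outputs)
predicted_class = argmax(averaged)
```

In this toy case the first model alone would pick class 0, while the averaged ensemble picks class 1.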

GeLaTO: Generative Latent Textured Objects

no code implementations • ECCV 2020 • Ricardo Martin-Brualla, Rohit Pandey, Sofien Bouaziz, Matthew Brown, Dan B. Goldman

Accurate modeling of 3D objects exhibiting transparency, reflections and thin structures is an extremely challenging problem.

FiG-NeRF: Figure-Ground Neural Radiance Fields for 3D Object Category Modelling

no code implementations • 17 Apr 2021 • Christopher Xie, Keunhong Park, Ricardo Martin-Brualla, Matthew Brown

We investigate the use of Neural Radiance Fields (NeRF) to learn high quality 3D object category models from collections of input images.

Exploring Temporal Granularity in Self-Supervised Video Representation Learning

no code implementations • 8 Dec 2021 • Rui Qian, Yeqing Li, Liangzhe Yuan, Boqing Gong, Ting Liu, Matthew Brown, Serge Belongie, Ming-Hsuan Yang, Hartwig Adam, Yin Cui

The training objective consists of two parts: a fine-grained temporal learning objective to maximize the similarity between corresponding temporal embeddings in the short clip and the long clip, and a persistent temporal learning objective to pull together global embeddings of the two clips.

Towards a Unified Foundation Model: Jointly Pre-Training Transformers on Unpaired Images and Text

no code implementations • 14 Dec 2021 • Qing Li, Boqing Gong, Yin Cui, Dan Kondratyuk, Xianzhi Du, Ming-Hsuan Yang, Matthew Brown

The experiments show that the resultant unified foundation transformer works surprisingly well on both the vision-only and text-only tasks, and the proposed knowledge distillation and gradient masking strategy can effectively lift the performance to approach the level of separately-trained models.