Search Results for author: Mohammad Norouzi

Found 88 papers, 49 papers with code

Neural Program Synthesis with Priority Queue Training

4 code implementations • 10 Jan 2018 • Daniel A. Abolafia, Mohammad Norouzi, Jonathan Shen, Rui Zhao, Quoc V. Le

Models and examples built with TensorFlow

Trust-PCL: An Off-Policy Trust Region Method for Continuous Control

1 code implementation • ICLR 2018 • Ofir Nachum, Mohammad Norouzi, Kelvin Xu, Dale Schuurmans

When evaluated on a number of continuous control tasks, Trust-PCL improves the solution quality and sample efficiency of TRPO.

Bridging the Gap Between Value and Policy Based Reinforcement Learning

1 code implementation • NeurIPS 2017 • Ofir Nachum, Mohammad Norouzi, Kelvin Xu, Dale Schuurmans

We establish a new connection between value and policy based reinforcement learning (RL) based on a relationship between softmax temporal value consistency and policy optimality under entropy regularization.
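
The consistency relationship can be checked numerically. Below is a minimal sketch. It assumes deterministic transitions and assumes the successor values `next_values` are already soft-optimal. The function names, rewards, discount `gamma` and temperature `tau` are hypothetical choices for illustration, not the paper's code. Under entropy regularization with temperature tau:

- the soft-optimal value is a log-sum-exp of the action values;
- the optimal policy is their softmax;
- every action then satisfies V(s) - gamma * V(s') = r(s, a) - tau * log pi(a|s).

```python
import math

def soft_optimal(rewards, next_values, gamma=0.9, tau=0.1):
    """Soft-optimal value and policy at one state with deterministic transitions.

    Action a yields rewards[a] and moves to a successor whose (assumed
    soft-optimal) value is next_values[a].
    """
    q = [r + gamma * v for r, v in zip(rewards, next_values)]
    m = max(q)  # shift for a numerically stable log-sum-exp
    # V(s) = tau * log sum_a exp(Q(s, a) / tau)
    v = m + tau * math.log(sum(math.exp((qa - m) / tau) for qa in q))
    # pi(a|s) = exp((Q(s, a) - V(s)) / tau)
    pi = [math.exp((qa - v) / tau) for qa in q]
    return v, pi

def consistency_residuals(rewards, next_values, v, pi, gamma=0.9, tau=0.1):
    """Residual of V(s) - gamma * V(s') = r(s, a) - tau * log pi(a|s) per action."""
    return [v - gamma * vn - (r - tau * math.log(p))
            for r, vn, p in zip(rewards, next_values, pi)]

rewards, next_values = [1.0, 0.5, 0.0], [0.0, 0.8, 2.0]
v, pi = soft_optimal(rewards, next_values)
print(consistency_residuals(rewards, next_values, v, pi))  # all close to 0.0
```

The residuals vanish for every action, not only the greedy one. That per-action property is what lets a single consistency condition be enforced on off-policy data.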

Filtering Variational Objectives

3 code implementations • NeurIPS 2017 • Chris J. Maddison, Dieterich Lawson, George Tucker, Nicolas Heess, Mohammad Norouzi, Andriy Mnih, Arnaud Doucet, Yee Whye Teh

When used as a surrogate objective for maximum likelihood estimation in latent variable models, the evidence lower bound (ELBO) produces state-of-the-art results.

A Simple Framework for Contrastive Learning of Visual Representations

90 code implementations • ICML 2020 • Ting Chen, Simon Kornblith, Mohammad Norouzi, Geoffrey Hinton

This paper presents SimCLR: a simple framework for contrastive learning of visual representations.

Ranked #4 on Contrastive Learning on imagenet-1k

Contrastive Learning

Self-Supervised Image Classification
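
SimCLR trains its encoder with a normalized temperature-scaled cross-entropy (NT-Xent) loss. Each augmented view must pick out its partner view among all other views in the batch by cosine similarity. The sketch below is a minimal pure-Python version. It assumes the two views of example k sit at positions 2k and 2k+1 of the batch. The function names and the temperature of 0.5 are illustrative choices. A real implementation would operate on projection-head outputs in a tensor library.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nt_xent(z, temperature=0.5):
    """NT-Xent loss over 2N embeddings; z[2k] and z[2k+1] are views of example k."""
    n = len(z)
    assert n % 2 == 0, "expects pairs of views"
    total = 0.0
    for i in range(n):
        j = i ^ 1  # positive partner: 0<->1, 2<->3, ...
        # Similarities to every other view (positive included) form the denominator.
        logits = [cosine(z[i], z[k]) / temperature for k in range(n) if k != i]
        positive = cosine(z[i], z[j]) / temperature
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - positive  # -log softmax probability of the positive
    return total / n

aligned = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]   # paired views agree
shuffled = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]  # paired views disagree
print(nt_xent(aligned) < nt_xent(shuffled))  # True
```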

Discovery of Latent 3D Keypoints via End-to-end Geometric Reasoning

1 code implementation • NeurIPS 2018 • Supasorn Suwajanakorn, Noah Snavely, Jonathan Tompson, Mohammad Norouzi

We demonstrate this framework on 3D pose estimation by proposing a differentiable objective that seeks the optimal set of keypoints for recovering the relative pose between two views of an object.

Similarity of Neural Network Representations Revisited

9 code implementations • ICML 2019 • Simon Kornblith, Mohammad Norouzi, Honglak Lee, Geoffrey Hinton

We introduce a similarity index that measures the relationship between representational similarity matrices and does not suffer from this limitation.
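
The index proposed in the paper is centered kernel alignment (CKA). The sketch below is a minimal pure-Python version of its linear-kernel form, CKA(X, Y) = ||Y^T X||_F^2 / (||X^T X||_F * ||Y^T Y||_F), computed on column-centered activations. The helper names and toy activations are hypothetical. The usage line checks two properties the linear form should have: invariance to orthogonal transformations and invariance to isotropic scaling.

```python
import math

def center(X):
    """Subtract each column's mean; X is a list of rows (examples x features)."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in X]

def cross_frob_sq(A, B):
    """Squared Frobenius norm of A^T B for row-major matrices with equal row counts."""
    total = 0.0
    for i in range(len(A[0])):
        for j in range(len(B[0])):
            s = sum(a[i] * b[j] for a, b in zip(A, B))
            total += s * s
    return total

def linear_cka(X, Y):
    Xc, Yc = center(X), center(Y)
    return cross_frob_sq(Xc, Yc) / math.sqrt(cross_frob_sq(Xc, Xc) * cross_frob_sq(Yc, Yc))

X = [[1.0, 2.0], [2.0, 0.5], [0.0, 1.0], [3.0, 3.0]]
# Rotate X by 90 degrees and scale by 3: a representation with the same geometry.
Y = [[-3.0 * x2, 3.0 * x1] for x1, x2 in X]
print(round(linear_cka(X, Y), 6))  # 1.0
```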

Learning to Generalize from Sparse and Underspecified Rewards

1 code implementation • 19 Feb 2019 • Rishabh Agarwal, Chen Liang, Dale Schuurmans, Mohammad Norouzi

The parameters of the auxiliary reward function are optimized with respect to the validation performance of a trained policy.

Dream to Control: Learning Behaviors by Latent Imagination

20 code implementations • ICLR 2020 • Danijar Hafner, Timothy Lillicrap, Jimmy Ba, Mohammad Norouzi

Learned world models summarize an agent's experience to facilitate learning complex behaviors.

WaveGrad: Estimating Gradients for Waveform Generation

7 code implementations • ICLR 2021 • Nanxin Chen, Yu Zhang, Heiga Zen, Ron J. Weiss, Mohammad Norouzi, William Chan

This paper introduces WaveGrad, a conditional model for waveform generation which estimates gradients of the data density.

RL Unplugged: A Suite of Benchmarks for Offline Reinforcement Learning

2 code implementations • 24 Jun 2020 • Caglar Gulcehre, Ziyu Wang, Alexander Novikov, Tom Le Paine, Sergio Gomez Colmenarejo, Konrad Zolna, Rishabh Agarwal, Josh Merel, Daniel Mankowitz, Cosmin Paduraru, Gabriel Dulac-Arnold, Jerry Li, Mohammad Norouzi, Matt Hoffman, Ofir Nachum, George Tucker, Nicolas Heess, Nando de Freitas

We hope that our suite of benchmarks will increase the reproducibility of experiments and make it possible to study challenging tasks with a limited computational budget, thus making RL research both more systematic and more accessible across the community.

RL Unplugged: A Collection of Benchmarks for Offline Reinforcement Learning

1 code implementation • NeurIPS 2020 • Caglar Gulcehre, Ziyu Wang, Alexander Novikov, Thomas Paine, Sergio Gómez, Konrad Zolna, Rishabh Agarwal, Josh S. Merel, Daniel J. Mankowitz, Cosmin Paduraru, Gabriel Dulac-Arnold, Jerry Li, Mohammad Norouzi, Matthew Hoffman, Nicolas Heess, Nando de Freitas

We hope that our suite of benchmarks will increase the reproducibility of experiments and make it possible to study challenging tasks with a limited computational budget, thus making RL research both more systematic and more accessible across the community.

Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation

28 code implementations • 26 Sep 2016 • Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, Jeff Klingner, Apurva Shah, Melvin Johnson, Xiaobing Liu, Łukasz Kaiser, Stephan Gouws, Yoshikiyo Kato, Taku Kudo, Hideto Kazawa, Keith Stevens, George Kurian, Nishant Patil, Wei Wang, Cliff Young, Jason Smith, Jason Riesa, Alex Rudnick, Oriol Vinyals, Greg Corrado, Macduff Hughes, Jeffrey Dean

To improve parallelism and therefore decrease training time, our attention mechanism connects the bottom layer of the decoder to the top layer of the encoder.

Ranked #35 on Machine Translation on WMT2014 English-French

Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding

4 code implementations • 23 May 2022 • Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S. Sara Mahdavi, Rapha Gontijo Lopes, Tim Salimans, Jonathan Ho, David J Fleet, Mohammad Norouzi

We present Imagen, a text-to-image diffusion model with an unprecedented degree of photorealism and a deep level of language understanding.

Ranked #17 on Text-to-Image Generation on MS COCO (using extra training data)

Big Self-Supervised Models are Strong Semi-Supervised Learners

8 code implementations • NeurIPS 2020 • Ting Chen, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, Geoffrey Hinton

The proposed semi-supervised learning algorithm can be summarized in three steps: unsupervised pretraining of a big ResNet model using SimCLRv2, supervised fine-tuning on a few labeled examples, and distillation with unlabeled examples for refining and transferring the task-specific knowledge.

Self-Supervised Image Classification

Semi-Supervised Image Classification
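
The third step, distillation with unlabeled examples, trains a student to match the teacher's temperature-scaled label distribution, so no ground-truth labels are required. Below is a minimal pure-Python sketch of a distillation loss of this form. The function names, temperature, and logits are hypothetical. In practice the teacher is the fine-tuned network, and the loss is minimized over the student's parameters.

```python
import math

def softmax(logits, tau=1.0):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp((l - m) / tau) for l in logits]
    s = sum(exps)
    return [e / s for e in exps]

def distillation_loss(teacher_logits, student_logits, tau=1.0):
    """Mean cross-entropy between teacher and student distributions over a batch
    of unlabeled examples (one list of class logits per example)."""
    total = 0.0
    for t, s in zip(teacher_logits, student_logits):
        p_teacher, p_student = softmax(t, tau), softmax(s, tau)
        total -= sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    return total / len(teacher_logits)

teacher = [[2.0, 0.0, -1.0]]
print(distillation_loss(teacher, teacher) < distillation_loss(teacher, [[-1.0, 0.0, 2.0]]))  # True
```

When the student matches the teacher exactly, the loss reduces to the entropy of the teacher's distribution, which is its minimum.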

Image Super-Resolution via Iterative Refinement

4 code implementations • 15 Apr 2021 • Chitwan Saharia, Jonathan Ho, William Chan, Tim Salimans, David J. Fleet, Mohammad Norouzi

We present SR3, an approach to image Super-Resolution via Repeated Refinement.

Mastering Atari with Discrete World Models

9 code implementations • ICLR 2021 • Danijar Hafner, Timothy Lillicrap, Mohammad Norouzi, Jimmy Ba

The world model uses discrete representations and is trained separately from the policy.

Ranked #3 on Atari Games on Atari 2600 Skiing (using extra training data)

Video Diffusion Models

3 code implementations • 7 Apr 2022 • Jonathan Ho, Tim Salimans, Alexey Gritsenko, William Chan, Mohammad Norouzi, David J. Fleet

Generating temporally coherent high fidelity video is an important milestone in generative modeling research.

Robust and Efficient Medical Imaging with Self-Supervision

2 code implementations • 19 May 2022 • Shekoofeh Azizi, Laura Culp, Jan Freyberg, Basil Mustafa, Sebastien Baur, Simon Kornblith, Ting Chen, Patricia MacWilliams, S. Sara Mahdavi, Ellery Wulczyn, Boris Babenko, Megan Wilson, Aaron Loh, Po-Hsuan Cameron Chen, YuAn Liu, Pinal Bavishi, Scott Mayer McKinney, Jim Winkens, Abhijit Guha Roy, Zach Beaver, Fiona Ryan, Justin Krogue, Mozziyar Etemadi, Umesh Telang, Yun Liu, Lily Peng, Greg S. Corrado, Dale R. Webster, David Fleet, Geoffrey Hinton, Neil Houlsby, Alan Karthikesalingam, Mohammad Norouzi, Vivek Natarajan

These results suggest that REMEDIS can significantly accelerate the life-cycle of medical imaging AI development, thereby presenting an important step forward for medical imaging AI to deliver broad impact.

Palette: Image-to-Image Diffusion Models

4 code implementations • 10 Nov 2021 • Chitwan Saharia, William Chan, Huiwen Chang, Chris A. Lee, Jonathan Ho, Tim Salimans, David J. Fleet, Mohammad Norouzi

We expect this standardized evaluation protocol to play a role in advancing image-to-image translation research.

Ranked #1 on Colorization on ImageNet ctest10k

Zero-Shot Learning by Convex Combination of Semantic Embeddings

2 code implementations • 19 Dec 2013 • Mohammad Norouzi, Tomas Mikolov, Samy Bengio, Yoram Singer, Jonathon Shlens, Andrea Frome, Greg S. Corrado, Jeffrey Dean

In other cases the semantic embedding space is established by an independent natural language processing task, and then the image transformation into that space is learned in a second stage.

Ranked #8 on Multi-label zero-shot learning on Open Images V4
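
As the title indicates, the method maps an image into the semantic space as a convex combination of label embeddings. The embeddings of the classifier's top-T seen labels are averaged with weights proportional to their predicted probabilities. The unseen label nearest to that point by cosine similarity is returned. The sketch below follows this recipe under stated assumptions. The three-dimensional label vectors, class probabilities, and function names are invented for illustration. The paper uses word embeddings learned from text and a pretrained image classifier.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def conse_embed(probs, seen_vectors, top_t=2):
    """Probability-weighted convex combination of the top-T seen label embeddings."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_t]
    z = sum(p for _, p in top)  # renormalize so the weights sum to 1
    dim = len(next(iter(seen_vectors.values())))
    return [sum(p * seen_vectors[label][d] for label, p in top) / z for d in range(dim)]

def conse_predict(probs, seen_vectors, unseen_vectors, top_t=2):
    """Return the unseen label whose embedding is closest in cosine similarity."""
    e = conse_embed(probs, seen_vectors, top_t)
    return max(unseen_vectors, key=lambda label: cosine(e, unseen_vectors[label]))

seen = {"tiger": [1.0, 0.0, 0.0], "lion": [0.9, 0.1, 0.0], "truck": [0.0, 0.0, 1.0]}
unseen = {"leopard": [0.95, 0.05, 0.1], "bus": [0.0, 0.1, 1.0]}
probs = {"tiger": 0.6, "lion": 0.3, "truck": 0.1}
print(conse_predict(probs, seen, unseen))  # leopard
```

No image-to-semantic mapping is trained here. The classifier's output probabilities alone place the image in the embedding space.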

Neural Combinatorial Optimization with Reinforcement Learning

10 code implementations • 29 Nov 2016 • Irwan Bello, Hieu Pham, Quoc V. Le, Mohammad Norouzi, Samy Bengio

Despite the computational expense, without much engineering or heuristic design, Neural Combinatorial Optimization achieves close to optimal results on 2D Euclidean graphs with up to 100 nodes.

An Optimistic Perspective on Offline Reinforcement Learning

1 code implementation • 10 Jul 2019 • Rishabh Agarwal, Dale Schuurmans, Mohammad Norouzi

The DQN replay dataset can serve as an offline RL benchmark and is open-sourced.

An Optimistic Perspective on Offline Deep Reinforcement Learning

1 code implementation • ICML 2020 • Rishabh Agarwal, Dale Schuurmans, Mohammad Norouzi

The DQN replay dataset can serve as an offline RL benchmark and is open-sourced.

Your Classifier is Secretly an Energy Based Model and You Should Treat it Like One

4 code implementations • ICLR 2020 • Will Grathwohl, Kuan-Chieh Wang, Jörn-Henrik Jacobsen, David Duvenaud, Mohammad Norouzi, Kevin Swersky

In this setting, the standard class probabilities can be easily computed as well as unnormalized values of p(x) and p(x|y).
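
The reinterpretation reads a classifier's logits f(x)[y] as negative energies. The softmax still gives p(y|x). In addition, log p(x, y) and log p(x) become available as f(x)[y] and logsumexp_y f(x)[y], up to the same unknown normalizing constant. The sketch below computes these quantities from one logit vector; the function and key names are hypothetical. Shifting all logits by a constant leaves p(y|x) unchanged but changes the unnormalized log p(x). This is the extra degree of freedom the energy-based view exploits.

```python
import math

def logsumexp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def jem_quantities(logits):
    """Quantities obtained by treating classifier logits f(x)[y] as -E(x, y)."""
    lse = logsumexp(logits)
    return {
        "p_y_given_x": [math.exp(l - lse) for l in logits],  # ordinary softmax
        "log_p_xy_unnorm": list(logits),  # log p(x, y) + log Z
        "log_p_x_unnorm": lse,  # log p(x) + log Z, i.e. -E(x)
    }

a = jem_quantities([2.0, 0.5, -1.0])
b = jem_quantities([3.0, 1.5, 0.0])  # same logits shifted by +1
print(round(b["log_p_x_unnorm"] - a["log_p_x_unnorm"], 6))  # 1.0
```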

Memory Augmented Policy Optimization for Program Synthesis and Semantic Parsing

4 code implementations • NeurIPS 2018 • Chen Liang, Mohammad Norouzi, Jonathan Berant, Quoc Le, Ni Lao

We present Memory Augmented Policy Optimization (MAPO), a simple and novel way to leverage a memory buffer of promising trajectories to reduce the variance of policy gradient estimate.

Fast Exact Search in Hamming Space with Multi-Index Hashing

2 code implementations • 11 Jul 2013 • Mohammad Norouzi, Ali Punjani, David J. Fleet

There is growing interest in representing image data and feature descriptors using compact binary codes for fast near neighbor search.
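
Exact search with multi-index hashing rests on a pigeonhole argument. Suppose b-bit codes are split into m disjoint substrings and two codes differ in at most r bits. Then at least one pair of corresponding substrings differs in at most floor(r/m) bits. Probing m small hash tables within that radius therefore yields a candidate set that contains every true r-neighbor, and each candidate is then verified by its full Hamming distance.

The sketch below is a minimal, hypothetical implementation. It stores codes as Python integers and assumes b is divisible by m. It omits details such as how the paper chooses m.

```python
from itertools import combinations

def hamming(a, b):
    return bin(a ^ b).count("1")

class MultiIndexHash:
    def __init__(self, codes, bits, m):
        assert bits % m == 0, "bits must split evenly into m substrings"
        self.codes, self.m, self.sub_bits = codes, m, bits // m
        self.tables = [dict() for _ in range(m)]
        for idx, code in enumerate(codes):
            for k in range(m):
                self.tables[k].setdefault(self._sub(code, k), []).append(idx)

    def _sub(self, code, k):
        """k-th disjoint substring of the code, as an integer of sub_bits bits."""
        return (code >> (k * self.sub_bits)) & ((1 << self.sub_bits) - 1)

    def _flips(self, key, radius):
        """All sub_bits-bit keys within Hamming distance <= radius of key."""
        for r in range(radius + 1):
            for positions in combinations(range(self.sub_bits), r):
                flipped = key
                for p in positions:
                    flipped ^= 1 << p
                yield flipped

    def r_neighbors(self, query, r):
        # Pigeonhole: every true r-neighbor matches the query within r // m
        # on at least one substring, so probing that radius misses nothing.
        sub_r = r // self.m
        candidates = set()
        for k in range(self.m):
            for key in self._flips(self._sub(query, k), sub_r):
                candidates.update(self.tables[k].get(key, []))
        # Verify candidates with the full Hamming distance.
        return sorted(i for i in candidates if hamming(self.codes[i], query) <= r)

codes = [(i * 2654435761) & 0xFFFF for i in range(200)]  # toy 16-bit codes
index = MultiIndexHash(codes, bits=16, m=4)
print(index.r_neighbors(codes[0], r=3))
```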

Character-Aware Models Improve Visual Text Rendering

1 code implementation • 20 Dec 2022 • Rosanne Liu, Dan Garrette, Chitwan Saharia, William Chan, Adam Roberts, Sharan Narang, Irina Blok, RJ Mical, Mohammad Norouzi, Noah Constant

In the text-only domain, we find that character-aware models provide large gains on a novel spelling task (WikiSpell).

Neural Audio Synthesis of Musical Notes with WaveNet Autoencoders

5 code implementations • ICML 2017 • Jesse Engel, Cinjon Resnick, Adam Roberts, Sander Dieleman, Douglas Eck, Karen Simonyan, Mohammad Norouzi

Generative models in vision have seen rapid progress due to algorithmic improvements and the availability of high-quality image datasets.

QANet: Combining Local Convolution with Global Self-Attention for Reading Comprehension

15 code implementations • ICLR 2018 • Adams Wei Yu, David Dohan, Minh-Thang Luong, Rui Zhao, Kai Chen, Mohammad Norouzi, Quoc V. Le

On the SQuAD dataset, our model is 3x to 13x faster in training and 4x to 9x faster in inference, while achieving equivalent accuracy to recurrent models.

Ranked #27 on Question Answering on SQuAD1.1 dev

WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis

3 code implementations • 17 Jun 2021 • Nanxin Chen, Yu Zhang, Heiga Zen, Ron J. Weiss, Mohammad Norouzi, Najim Dehak, William Chan

The model takes an input phoneme sequence, and through an iterative refinement process, generates an audio waveform.

TryOnDiffusion: A Tale of Two UNets

1 code implementation • CVPR 2023 • Luyang Zhu, Dawei Yang, Tyler Zhu, Fitsum Reda, William Chan, Chitwan Saharia, Mohammad Norouzi, Ira Kemelmacher-Shlizerman

Given two images depicting a person and a garment worn by another person, our goal is to generate a visualization of how the garment might look on the input person.

Benchmarks for Deep Off-Policy Evaluation

3 code implementations • ICLR 2021 • Justin Fu, Mohammad Norouzi, Ofir Nachum, George Tucker, Ziyu Wang, Alexander Novikov, Mengjiao Yang, Michael R. Zhang, Yutian Chen, Aviral Kumar, Cosmin Paduraru, Sergey Levine, Tom Le Paine

Off-policy evaluation (OPE) holds the promise of being able to leverage large, offline datasets for both evaluating and selecting complex policies for decision making.

Exemplar VAE: Linking Generative Models, Nearest Neighbor Retrieval, and Data Augmentation

1 code implementation • NeurIPS 2020 • Sajad Norouzi, David J. Fleet, Mohammad Norouzi

We introduce Exemplar VAEs, a family of generative models that bridge the gap between parametric and non-parametric, exemplar based generative models.

No MCMC for me: Amortized sampling for fast and stable training of energy-based models

1 code implementation • ICLR 2021 • Will Grathwohl, Jacob Kelly, Milad Hashemi, Mohammad Norouzi, Kevin Swersky, David Duvenaud

Energy-Based Models (EBMs) present a flexible and appealing way to represent uncertainty.

Imputer: Sequence Modelling via Imputation and Dynamic Programming

1 code implementation • ICML 2020 • William Chan, Chitwan Saharia, Geoffrey Hinton, Mohammad Norouzi, Navdeep Jaitly

This paper presents the Imputer, a neural sequence model that generates output sequences iteratively via imputations.

Deep Value Networks Learn to Evaluate and Iteratively Refine Structured Outputs

1 code implementation • ICML 2017 • Michael Gygli, Mohammad Norouzi, Anelia Angelova

We approach structured output prediction by optimizing a deep value network (DVN) to precisely estimate the task loss on different output configurations for a given input.

Cartesian K-Means

1 code implementation • CVPR 2013 • Mohammad Norouzi, David J. Fleet

A fundamental limitation of quantization techniques like the k-means clustering algorithm is the storage and runtime cost associated with the large numbers of clusters required to keep quantization errors small and model fidelity high.

Understanding the impact of entropy on policy optimization

1 code implementation • 27 Nov 2018 • Zafarali Ahmed, Nicolas Le Roux, Mohammad Norouzi, Dale Schuurmans

Entropy regularization is commonly used to improve policy optimization in reinforcement learning.

Decoder Denoising Pretraining for Semantic Segmentation

1 code implementation • 23 May 2022 • Emmanuel Brempong Asiedu, Simon Kornblith, Ting Chen, Niki Parmar, Matthias Minderer, Mohammad Norouzi

We propose a decoder pretraining approach based on denoising, which can be combined with supervised pretraining of the encoder.

Device Placement Optimization with Reinforcement Learning

1 code implementation • ICML 2017 • Azalia Mirhoseini, Hieu Pham, Quoc V. Le, Benoit Steiner, Rasmus Larsen, Yuefeng Zhou, Naveen Kumar, Mohammad Norouzi, Samy Bengio, Jeff Dean

Key to our method is the use of a sequence-to-sequence model to predict which subsets of operations in a TensorFlow graph should run on which of the available devices.

Non-Autoregressive Machine Translation with Latent Alignments

2 code implementations • EMNLP 2020 • Chitwan Saharia, William Chan, Saurabh Saxena, Mohammad Norouzi

In addition, we adapt the Imputer model for non-autoregressive machine translation and demonstrate that Imputer with just 4 generation steps can match the performance of an autoregressive Transformer baseline.

Dynamic Programming Encoding for Subword Segmentation in Neural Machine Translation

1 code implementation • ACL 2020 • Xuanli He, Gholamreza Haffari, Mohammad Norouzi

This paper introduces Dynamic Programming Encoding (DPE), a new segmentation algorithm for tokenizing sentences into subword units.

Optimal Completion Distillation for Sequence Learning

2 code implementations • ICLR 2019 • Sara Sabour, William Chan, Mohammad Norouzi

We present Optimal Completion Distillation (OCD), a training procedure for optimizing sequence to sequence models based on edit distance.
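
OCD's training targets come from edit-distance dynamic programming. Given a generated prefix, the optimal next tokens are the target tokens that follow the target prefixes with minimum edit distance to the generated prefix. If one of those target prefixes is the whole target, end-of-sequence is also optimal. The sketch below computes these targets from a single row of the edit-distance table. Characters stand in for tokens, and the `EOS` marker and function names are hypothetical. The student is then trained to place its probability mass on these tokens at each step of sequences sampled from the model itself.

```python
EOS = "</s>"  # hypothetical end-of-sequence marker

def edit_distance_row(prefix, target):
    """row[j] = Levenshtein distance between `prefix` and target[:j]."""
    row = list(range(len(target) + 1))
    for i, a in enumerate(prefix, start=1):
        prev, row = row, [i] + [0] * len(target)
        for j, b in enumerate(target, start=1):
            row[j] = min(prev[j] + 1,             # delete a
                         row[j - 1] + 1,          # insert b
                         prev[j - 1] + (a != b))  # substitute or match
    return row

def optimal_next_tokens(prefix, target):
    """Tokens that can start an optimal completion of `prefix` toward `target`."""
    row = edit_distance_row(prefix, target)
    best = min(row)
    return {target[j] if j < len(target) else EOS
            for j, d in enumerate(row) if d == best}

print(optimal_next_tokens("SATU", "SUNDAY"))  # {'N'}
```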

Big Self-Supervised Models Advance Medical Image Classification

1 code implementation • ICCV 2021 • Shekoofeh Azizi, Basil Mustafa, Fiona Ryan, Zachary Beaver, Jan Freyberg, Jonathan Deaton, Aaron Loh, Alan Karthikesalingam, Simon Kornblith, Ting Chen, Vivek Natarajan, Mohammad Norouzi

Self-supervised pretraining followed by supervised fine-tuning has seen success in image recognition, especially when labeled examples are scarce, but has received limited attention in medical image analysis.

Detecting Cancer Metastases on Gigapixel Pathology Images

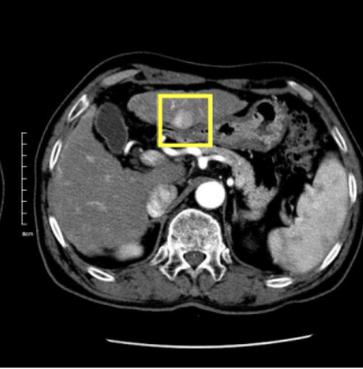

6 code implementations • 3 Mar 2017 • Yun Liu, Krishna Gadepalli, Mohammad Norouzi, George E. Dahl, Timo Kohlberger, Aleksey Boyko, Subhashini Venugopalan, Aleksei Timofeev, Philip Q. Nelson, Greg S. Corrado, Jason D. Hipp, Lily Peng, Martin C. Stumpe

At 8 false positives per image, we detect 92.4% of the tumors, relative to 82.7% by the previous best automated approach.

Ranked #2 on Medical Object Detection on Barrett’s Esophagus

Generate, Annotate, and Learn: NLP with Synthetic Text

1 code implementation • 11 Jun 2021 • Xuanli He, Islam Nassar, Jamie Kiros, Gholamreza Haffari, Mohammad Norouzi

This paper studies the use of language models as a source of synthetic unlabeled text for NLP.

Parallel Architecture and Hyperparameter Search via Successive Halving and Classification

1 code implementation • 25 May 2018 • Manoj Kumar, George E. Dahl, Vijay Vasudevan, Mohammad Norouzi

We present a simple and powerful algorithm for parallel black box optimization called Successive Halving and Classification (SHAC).

Embedding Text in Hyperbolic Spaces

no code implementations • WS 2018 • Bhuwan Dhingra, Christopher J. Shallue, Mohammad Norouzi, Andrew M. Dai, George E. Dahl

Ideally, we could incorporate our prior knowledge of this hierarchical structure into unsupervised learning algorithms that work on text data.

Smoothed Action Value Functions for Learning Gaussian Policies

no code implementations • ICML 2018 • Ofir Nachum, Mohammad Norouzi, George Tucker, Dale Schuurmans

State-action value functions (i.e., Q-values) are ubiquitous in reinforcement learning (RL), giving rise to popular algorithms such as SARSA and Q-learning.

N-gram Language Modeling using Recurrent Neural Network Estimation

no code implementations • 31 Mar 2017 • Ciprian Chelba, Mohammad Norouzi, Samy Bengio

Experiments on a small corpus (UPenn Treebank, one million words of training data and 10k vocabulary) have found the LSTM cell with dropout to be the best model for encoding the n-gram state when compared with feed-forward and vanilla RNN models.

PixColor: Pixel Recursive Colorization

no code implementations • 19 May 2017 • Sergio Guadarrama, Ryan Dahl, David Bieber, Mohammad Norouzi, Jonathon Shlens, Kevin Murphy

Then, given the generated low-resolution color image and the original grayscale image as inputs, we train a second CNN to generate a high-resolution colorization of an image.

Ranked #3 on Colorization on ImageNet val

Pixel Recursive Super Resolution

1 code implementation • ICCV 2017 • Ryan Dahl, Mohammad Norouzi, Jonathon Shlens

A low resolution image may correspond to multiple plausible high resolution images, thus modeling the super resolution process with a pixel independent conditional model often results in averaging different details, hence blurry edges.

Improving Policy Gradient by Exploring Under-appreciated Rewards

no code implementations • 28 Nov 2016 • Ofir Nachum, Mohammad Norouzi, Dale Schuurmans

We propose a more directed exploration strategy that promotes exploration of under-appreciated reward regions.

Reward Augmented Maximum Likelihood for Neural Structured Prediction

no code implementations • NeurIPS 2016 • Mohammad Norouzi, Samy Bengio, Zhifeng Chen, Navdeep Jaitly, Mike Schuster, Yonghui Wu, Dale Schuurmans

A key problem in structured output prediction is direct optimization of the task reward function that matters for test evaluation.
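
Reward augmented maximum likelihood replaces the single ground-truth target with an exponentiated payoff distribution, q(y | y*) ∝ exp(r(y, y*) / tau). It then maximizes the expected log-likelihood of outputs drawn from that distribution. The sketch below enumerates all same-length outputs over a tiny vocabulary, which is only feasible for toy sizes; the paper samples instead. Using negative Hamming distance as the reward r is an assumption for illustration, and the function names are hypothetical.

```python
import math
from itertools import product

def payoff_distribution(target, vocab, tau=1.0):
    """q(y | target) proportional to exp(r(y, target) / tau), r = -Hamming distance."""
    candidates = ["".join(p) for p in product(vocab, repeat=len(target))]
    rewards = [-sum(a != b for a, b in zip(c, target)) for c in candidates]
    m = max(rewards)  # shift for numerical stability
    weights = [math.exp((r - m) / tau) for r in rewards]
    z = sum(weights)
    return {c: w / z for c, w in zip(candidates, weights)}

def raml_loss(log_prob, target, vocab, tau=1.0):
    """Expected negative log-likelihood of the model under the payoff distribution."""
    q = payoff_distribution(target, vocab, tau)
    return -sum(p * log_prob(y) for y, p in q.items())

def uniform_log_prob(y):
    # Hypothetical model that is uniform over the 8 outputs of length 3.
    return -math.log(2 ** 3)

q = payoff_distribution("aba", "ab", tau=0.5)
print(max(q, key=q.get))  # aba
print(round(raml_loss(uniform_log_prob, "aba", "ab"), 6))  # 2.079442 (= log 8)
```

Lowering tau concentrates q on the ground truth and recovers ordinary maximum likelihood in the limit. Raising tau spreads training signal to nearby, high-reward outputs.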

Efficient non-greedy optimization of decision trees

no code implementations • NeurIPS 2015 • Mohammad Norouzi, Maxwell D. Collins, Matthew Johnson, David J. Fleet, Pushmeet Kohli

In this paper, we present an algorithm for optimizing the split functions at all levels of the tree jointly with the leaf parameters, based on a global objective.

CO2 Forest: Improved Random Forest by Continuous Optimization of Oblique Splits

no code implementations • 19 Jun 2015 • Mohammad Norouzi, Maxwell D. Collins, David J. Fleet, Pushmeet Kohli

We develop a convex-concave upper bound on the classification loss for a one-level decision tree, and optimize the bound by stochastic gradient descent at each internal node of the tree.

The Importance of Generation Order in Language Modeling

no code implementations • EMNLP 2018 • Nicolas Ford, Daniel Duckworth, Mohammad Norouzi, George E. Dahl

Neural language models are a critical component of state-of-the-art systems for machine translation, summarization, audio transcription, and other tasks.

Sequence to Sequence Mixture Model for Diverse Machine Translation

no code implementations • CONLL 2018 • Xuanli He, Gholamreza Haffari, Mohammad Norouzi

In this paper, we develop a novel sequence to sequence mixture (S2SMIX) model that improves both translation diversity and quality by adopting a committee of specialized translation models rather than a single translation model.

Contingency-Aware Exploration in Reinforcement Learning

no code implementations • ICLR 2019 • Jongwook Choi, Yijie Guo, Marcin Moczulski, Junhyuk Oh, Neal Wu, Mohammad Norouzi, Honglak Lee

This paper investigates whether learning contingency-awareness and controllable aspects of an environment can lead to better exploration in reinforcement learning.

Ranked #8 on Atari Games on Atari 2600 Montezuma's Revenge

Hamming Distance Metric Learning

no code implementations • NeurIPS 2012 • Mohammad Norouzi, David J. Fleet, Ruslan R. Salakhutdinov

Motivated by large-scale multimedia applications, we propose to learn mappings from high-dimensional data to binary codes that preserve semantic similarity.

Code Synthesis with Priority Queue Training

no code implementations • ICLR 2018 • Daniel A. Abolafia, Quoc V. Le, Mohammad Norouzi

We consider the task of program synthesis in the presence of a reward function over the output of programs, where the goal is to find programs with maximal rewards.

Learning Gaussian Policies from Smoothed Action Value Functions

no code implementations • ICLR 2018 • Ofir Nachum, Mohammad Norouzi, George Tucker, Dale Schuurmans

We propose a new notion of action value defined by a Gaussian smoothed version of the expected Q-value used in SARSA.
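
The smoothed action value averages Q over Gaussian perturbations of the action: Q~(s, a) = E_{a' ~ N(a, Sigma)}[Q(s, a')]. The sketch below is a minimal Monte Carlo version for a one-dimensional action at a fixed state, with the state argument dropped. The quadratic Q, the function name, and the sample count are hypothetical. For Q(a) = -(a - 1)^2, the smoothed value has the closed form -(a - 1)^2 - sigma^2, which the estimate should approach.

```python
import random

def smoothed_q(q, mean, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of E_{a' ~ N(mean, sigma^2)}[q(a')] for a 1-D action."""
    rng = random.Random(seed)  # seeded for reproducibility
    return sum(q(rng.gauss(mean, sigma)) for _ in range(n_samples)) / n_samples

def quadratic_q(a):
    return -(a - 1.0) ** 2

estimate = smoothed_q(quadratic_q, mean=0.0, sigma=0.5)
print(estimate)  # close to -(0 - 1)**2 - 0.5**2 = -1.25
```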

Memory Based Trajectory-conditioned Policies for Learning from Sparse Rewards

no code implementations • NeurIPS 2020 • Yijie Guo, Jongwook Choi, Marcin Moczulski, Shengyu Feng, Samy Bengio, Mohammad Norouzi, Honglak Lee

Reinforcement learning with sparse rewards is challenging because an agent can rarely obtain non-zero rewards and hence, gradient-based optimization of parameterized policies can be incremental and slow.

Don't Blame the ELBO! A Linear VAE Perspective on Posterior Collapse

no code implementations • NeurIPS 2019 • James Lucas, George Tucker, Roger Grosse, Mohammad Norouzi

Posterior collapse in Variational Autoencoders (VAEs) arises when the variational posterior distribution closely matches the prior for a subset of latent variables.

NASA: Neural Articulated Shape Approximation

no code implementations • 6 Dec 2019 • Boyang Deng, JP Lewis, Timothy Jeruzalski, Gerard Pons-Moll, Geoffrey Hinton, Mohammad Norouzi, Andrea Tagliasacchi

Efficient representation of articulated objects such as human bodies is an important problem in computer vision and graphics.

SUMO: Unbiased Estimation of Log Marginal Probability for Latent Variable Models

no code implementations • ICLR 2020 • Yucen Luo, Alex Beatson, Mohammad Norouzi, Jun Zhu, David Duvenaud, Ryan P. Adams, Ricky T. Q. Chen

Standard variational lower bounds used to train latent variable models produce biased estimates of most quantities of interest.

NiLBS: Neural Inverse Linear Blend Skinning

no code implementations • 6 Apr 2020 • Timothy Jeruzalski, David I. W. Levin, Alec Jacobson, Paul Lalonde, Mohammad Norouzi, Andrea Tagliasacchi

In this technical report, we investigate efficient representations of articulated objects (e.g., human bodies), which is an important problem in computer vision and graphics.

NASA Neural Articulated Shape Approximation

no code implementations • ECCV 2020 • Boyang Deng, JP Lewis, Timothy Jeruzalski, Gerard Pons-Moll, Geoffrey Hinton, Mohammad Norouzi, Andrea Tagliasacchi

Efficient representation of articulated objects such as human bodies is an important problem in computer vision and graphics.

Demystifying Loss Functions for Classification

no code implementations • 1 Jan 2021 • Simon Kornblith, Honglak Lee, Ting Chen, Mohammad Norouzi

It is common to use the softmax cross-entropy loss to train neural networks on classification datasets where a single class label is assigned to each example.

Why Do Better Loss Functions Lead to Less Transferable Features?

no code implementations • NeurIPS 2021 • Simon Kornblith, Ting Chen, Honglak Lee, Mohammad Norouzi

We show that many objectives lead to statistically significant improvements in ImageNet accuracy over vanilla softmax cross-entropy, but the resulting fixed feature extractors transfer substantially worse to downstream tasks, and the choice of loss has little effect when networks are fully fine-tuned on the new tasks.

SpeechStew: Simply Mix All Available Speech Recognition Data to Train One Large Neural Network

no code implementations • 5 Apr 2021 • William Chan, Daniel Park, Chris Lee, Yu Zhang, Quoc Le, Mohammad Norouzi

We present SpeechStew, a speech recognition model that is trained on a combination of various publicly available speech recognition datasets: AMI, Broadcast News, Common Voice, LibriSpeech, Switchboard/Fisher, Tedlium, and Wall Street Journal.

Ranked #1 on Speech Recognition on Switchboard CallHome

Autoregressive Dynamics Models for Offline Policy Evaluation and Optimization

no code implementations • ICLR 2021 • Michael R. Zhang, Tom Le Paine, Ofir Nachum, Cosmin Paduraru, George Tucker, Ziyu Wang, Mohammad Norouzi

This modeling choice assumes that different dimensions of the next state and reward are conditionally independent given the current state and action and may be driven by the fact that fully observable physics-based simulation environments entail deterministic transition dynamics.

Learning to Efficiently Sample from Diffusion Probabilistic Models

no code implementations • 7 Jun 2021 • Daniel Watson, Jonathan Ho, Mohammad Norouzi, William Chan

Key advantages of DDPMs include ease of training, in contrast to generative adversarial networks, and speed of generation, in contrast to autoregressive models.

Cascaded Diffusion Models for High Fidelity Image Generation

no code implementations • 30 May 2021 • Jonathan Ho, Chitwan Saharia, William Chan, David J. Fleet, Mohammad Norouzi, Tim Salimans

We show that cascaded diffusion models are capable of generating high fidelity images on the class-conditional ImageNet generation benchmark, without any assistance from auxiliary image classifiers to boost sample quality.

Ranked #3 on Image Generation on ImageNet 64x64

Optimizing Few-Step Diffusion Samplers by Gradient Descent

no code implementations • ICLR 2022 • Daniel Watson, William Chan, Jonathan Ho, Mohammad Norouzi

We propose Generalized Gaussian Diffusion Processes (GGDP), a family of non-Markovian samplers for diffusion models, and we show how to improve the generated samples of pre-trained DDPMs by optimizing the degrees of freedom of the GGDP sampler family with respect to a perceptual loss.

Generate, Annotate, and Learn: Generative Models Advance Self-Training and Knowledge Distillation

no code implementations • 29 Sep 2021 • Xuanli He, Islam Nassar, Jamie Ryan Kiros, Gholamreza Haffari, Mohammad Norouzi

To obtain strong task-specific generative models, we either fine-tune a large language model (LLM) on inputs from specific tasks, or prompt an LLM with a few input examples to generate more unlabeled examples.

Understanding Posterior Collapse in Generative Latent Variable Models

no code implementations • ICLR Workshop DeepGenStruct 2019 • James Lucas, George Tucker, Roger Grosse, Mohammad Norouzi

Posterior collapse in Variational Autoencoders (VAEs) arises when the variational distribution closely matches the uninformative prior for a subset of latent variables.

Striving for Simplicity in Off-Policy Deep Reinforcement Learning

no code implementations • 25 Sep 2019 • Rishabh Agarwal, Dale Schuurmans, Mohammad Norouzi

This paper advocates the use of offline (batch) reinforcement learning (RL) to help (1) isolate the contributions of exploitation vs. exploration in off-policy deep RL, (2) improve reproducibility of deep RL research, and (3) facilitate the design of simpler deep RL algorithms.

Self-Imitation Learning via Trajectory-Conditioned Policy for Hard-Exploration Tasks

no code implementations • 25 Sep 2019 • Yijie Guo, Jongwook Choi, Marcin Moczulski, Samy Bengio, Mohammad Norouzi, Honglak Lee

We propose a new method of learning a trajectory-conditioned policy to imitate diverse trajectories from the agent's own past experiences and show that such self-imitation helps avoid myopic behavior and increases the chance of finding a globally optimal solution for hard-exploration tasks, especially when there are misleading rewards.

Learning Fast Samplers for Diffusion Models by Differentiating Through Sample Quality

no code implementations • 11 Feb 2022 • Daniel Watson, William Chan, Jonathan Ho, Mohammad Norouzi

We introduce Differentiable Diffusion Sampler Search (DDSS): a method that optimizes fast samplers for any pre-trained diffusion model by differentiating through sample quality scores.

Imagen Video: High Definition Video Generation with Diffusion Models

no code implementations • 5 Oct 2022 • Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, Tim Salimans

We present Imagen Video, a text-conditional video generation system based on a cascade of video diffusion models.

Ranked #1 on Video Generation on LAION-400M

Novel View Synthesis with Diffusion Models

no code implementations • 6 Oct 2022 • Daniel Watson, William Chan, Ricardo Martin-Brualla, Jonathan Ho, Andrea Tagliasacchi, Mohammad Norouzi

We demonstrate that stochastic conditioning significantly improves the 3D consistency of a naive sampler for an image-to-image diffusion model, which involves conditioning on a single fixed view.

Meta-Learning Fast Weight Language Models

no code implementations • 5 Dec 2022 • Kevin Clark, Kelvin Guu, Ming-Wei Chang, Panupong Pasupat, Geoffrey Hinton, Mohammad Norouzi

Dynamic evaluation of language models (LMs) adapts model parameters at test time using gradient information from previous tokens and substantially improves LM performance.

Imagen Editor and EditBench: Advancing and Evaluating Text-Guided Image Inpainting

no code implementations • CVPR 2023 • Su Wang, Chitwan Saharia, Ceslee Montgomery, Jordi Pont-Tuset, Shai Noy, Stefano Pellegrini, Yasumasa Onoe, Sarah Laszlo, David J. Fleet, Radu Soricut, Jason Baldridge, Mohammad Norouzi, Peter Anderson, William Chan

Through extensive human evaluation on EditBench, we find that object-masking during training leads to across-the-board improvements in text-image alignment (such that Imagen Editor is preferred over DALL-E 2 and Stable Diffusion) and that, as a cohort, these models are better at object-rendering than text-rendering and handle material/color/size attributes better than count/shape attributes.

Monocular Depth Estimation using Diffusion Models

no code implementations • 28 Feb 2023 • Saurabh Saxena, Abhishek Kar, Mohammad Norouzi, David J. Fleet

To cope with the limited availability of data for supervised training, we leverage pre-training on self-supervised image-to-image translation tasks.

Ranked #21 on Monocular Depth Estimation on NYU-Depth V2 (using extra training data)

Synthetic Data from Diffusion Models Improves ImageNet Classification

no code implementations • 17 Apr 2023 • Shekoofeh Azizi, Simon Kornblith, Chitwan Saharia, Mohammad Norouzi, David J. Fleet

Deep generative models are becoming increasingly powerful, now generating diverse high fidelity photo-realistic samples given text prompts.

The Surprising Effectiveness of Diffusion Models for Optical Flow and Monocular Depth Estimation

no code implementations • NeurIPS 2023 • Saurabh Saxena, Charles Herrmann, Junhwa Hur, Abhishek Kar, Mohammad Norouzi, Deqing Sun, David J. Fleet

Denoising diffusion probabilistic models have transformed image generation with their impressive fidelity and diversity.