Search Results for author: Sarath Chandar

Found 72 papers, 37 papers with code

A Survey of Data Augmentation Approaches for NLP

1 code implementation • Findings (ACL) 2021 • Steven Y. Feng, Varun Gangal, Jason Wei, Sarath Chandar, Soroush Vosoughi, Teruko Mitamura, Eduard Hovy

In this paper, we present a comprehensive and unifying survey of data augmentation for NLP by summarizing the literature in a structured manner.

The Hanabi Challenge: A New Frontier for AI Research

1 code implementation • 1 Feb 2019 • Nolan Bard, Jakob N. Foerster, Sarath Chandar, Neil Burch, Marc Lanctot, H. Francis Song, Emilio Parisotto, Vincent Dumoulin, Subhodeep Moitra, Edward Hughes, Iain Dunning, Shibl Mourad, Hugo Larochelle, Marc G. Bellemare, Michael Bowling

From the early days of computing, games have been important testbeds for studying how well machines can do sophisticated decision making.

GuessWhat?! Visual object discovery through multi-modal dialogue

4 code implementations • CVPR 2017 • Harm de Vries, Florian Strub, Sarath Chandar, Olivier Pietquin, Hugo Larochelle, Aaron Courville

Our key contribution is the collection of a large-scale dataset consisting of 150K human-played games with a total of 800K visual question-answer pairs on 66K images.

Learning To Navigate The Synthetically Accessible Chemical Space Using Reinforcement Learning

1 code implementation • 26 Apr 2020 • Sai Krishna Gottipati, Boris Sattarov, Sufeng Niu, Yashaswi Pathak, Hao-Ran Wei, Shengchao Liu, Karam M. J. Thomas, Simon Blackburn, Connor W. Coley, Jian Tang, Sarath Chandar, Yoshua Bengio

Over the last decade, there has been significant progress in the field of machine learning for de novo drug design, particularly in deep generative models.

Learning to Navigate in Synthetically Accessible Chemical Space Using Reinforcement Learning

1 code implementation • ICML 2020 • Sai Krishna Gottipati, Boris Sattarov, Sufeng Niu, Hao-Ran Wei, Yashaswi Pathak, Shengchao Liu, Simon Blackburn, Karam Thomas, Connor Coley, Jian Tang, Sarath Chandar, Yoshua Bengio

In this work, we propose a novel reinforcement learning (RL) setup for drug discovery that addresses this challenge by embedding the concept of synthetic accessibility directly into the de novo compound design system.

Correlational Neural Networks

2 code implementations • 27 Apr 2015 • Sarath Chandar, Mitesh M. Khapra, Hugo Larochelle, Balaraman Ravindran

CCA based approaches learn a joint representation by maximizing correlation of the views when projected to the common subspace.

Generating Factoid Questions With Recurrent Neural Networks: The 30M Factoid Question-Answer Corpus

1 code implementation • ACL 2016 • Iulian Vlad Serban, Alberto García-Durán, Caglar Gulcehre, Sungjin Ahn, Sarath Chandar, Aaron Courville, Yoshua Bengio

Over the past decade, large-scale supervised learning corpora have enabled machine learning researchers to make substantial advances.

Mastering Memory Tasks with World Models

1 code implementation • 7 Mar 2024 • Mohammad Reza Samsami, Artem Zholus, Janarthanan Rajendran, Sarath Chandar

Through a diverse set of illustrative tasks, we systematically demonstrate that R2I not only establishes a new state-of-the-art for challenging memory and credit assignment RL tasks, such as BSuite and POPGym, but also showcases superhuman performance in the complex memory domain of Memory Maze.

Do Neural Dialog Systems Use the Conversation History Effectively? An Empirical Study

1 code implementation • ACL 2019 • Chinnadhurai Sankar, Sandeep Subramanian, Christopher Pal, Sarath Chandar, Yoshua Bengio

Neural generative models have become increasingly popular when building conversational agents.

PatchUp: A Feature-Space Block-Level Regularization Technique for Convolutional Neural Networks

1 code implementation • 14 Jun 2020 • Mojtaba Faramarzi, Mohammad Amini, Akilesh Badrinaaraayanan, Vikas Verma, Sarath Chandar

Our approach improves the robustness of CNN models against the manifold intrusion problem that may occur in other state-of-the-art mixing approaches.

IIRC: Incremental Implicitly-Refined Classification

1 code implementation • CVPR 2021 • Mohamed Abdelsalam, Mojtaba Faramarzi, Shagun Sodhani, Sarath Chandar

We develop a standardized benchmark that enables evaluating models on the IIRC setup.

Continuous Coordination As a Realistic Scenario for Lifelong Learning

2 code implementations • 4 Mar 2021 • Hadi Nekoei, Akilesh Badrinaaraayanan, Aaron Courville, Sarath Chandar

Its large strategy space makes it a desirable environment for lifelong RL tasks.

Towards Non-saturating Recurrent Units for Modelling Long-term Dependencies

2 code implementations • 22 Jan 2019 • Sarath Chandar, Chinnadhurai Sankar, Eugene Vorontsov, Samira Ebrahimi Kahou, Yoshua Bengio

Modelling long-term dependencies is a challenge for recurrent neural networks.

An Empirical Investigation of the Role of Pre-training in Lifelong Learning

1 code implementation • NeurIPS 2023 • Sanket Vaibhav Mehta, Darshan Patil, Sarath Chandar, Emma Strubell

The lifelong learning paradigm in machine learning is an attractive alternative to the more prominent isolated learning scheme not only due to its resemblance to biological learning but also its potential to reduce energy waste by obviating excessive model re-training.

Bridge Correlational Neural Networks for Multilingual Multimodal Representation Learning

1 code implementation • NAACL 2016 • Janarthanan Rajendran, Mitesh M. Khapra, Sarath Chandar, Balaraman Ravindran

In this work, we address a real-world scenario where no direct parallel data is available between two views of interest (say, $V_1$ and $V_2$) but parallel data is available between each of these views and a pivot view ($V_3$).

TAG: Task-based Accumulated Gradients for Lifelong learning

1 code implementation • 11 May 2021 • Pranshu Malviya, Balaraman Ravindran, Sarath Chandar

We also show that our method performs better than several state-of-the-art methods in lifelong learning on complex datasets with a large number of tasks.

The LoCA Regret: A Consistent Metric to Evaluate Model-Based Behavior in Reinforcement Learning

2 code implementations • NeurIPS 2020 • Harm van Seijen, Hadi Nekoei, Evan Racah, Sarath Chandar

For example, the common single-task sample-efficiency metric conflates improvements due to model-based learning with various other aspects, such as representation learning, making it difficult to assess true progress on model-based RL.

Model-based Reinforcement Learning

Reinforcement Learning (RL)

How To Evaluate Your Dialogue System: Probe Tasks as an Alternative for Token-level Evaluation Metrics

1 code implementation • 24 Aug 2020 • Prasanna Parthasarathi, Joelle Pineau, Sarath Chandar

To bridge this gap in evaluation, we propose designing a set of probing tasks to evaluate dialogue models.

Memory Augmented Optimizers for Deep Learning

2 code implementations • ICLR 2022 • Paul-Aymeric McRae, Prasanna Parthasarathi, Mahmoud Assran, Sarath Chandar

Popular approaches for minimizing loss in data-driven learning often involve an abstraction or an explicit retention of the history of gradients for efficient parameter updates.

Do Encoder Representations of Generative Dialogue Models Encode Sufficient Information about the Task ?

1 code implementation • 20 Jun 2021 • Prasanna Parthasarathi, Joelle Pineau, Sarath Chandar

Predicting the next utterance in dialogue is contingent on encoding of users' input text to generate appropriate and relevant response in data-driven approaches.

Do Encoder Representations of Generative Dialogue Models have sufficient summary of the Information about the task ?

1 code implementation • SIGDIAL (ACL) 2021 • Prasanna Parthasarathi, Joelle Pineau, Sarath Chandar

Predicting the next utterance in dialogue is contingent on encoding of users’ input text to generate appropriate and relevant response in data-driven approaches.

Promoting Exploration in Memory-Augmented Adam using Critical Momenta

1 code implementation • 18 Jul 2023 • Pranshu Malviya, Gonçalo Mordido, Aristide Baratin, Reza Babanezhad Harikandeh, Jerry Huang, Simon Lacoste-Julien, Razvan Pascanu, Sarath Chandar

Adaptive gradient-based optimizers, particularly Adam, have left their mark in training large-scale deep learning models.

Complex Sequential Question Answering: Towards Learning to Converse Over Linked Question Answer Pairs with a Knowledge Graph

1 code implementation • 31 Jan 2018 • Amrita Saha, Vardaan Pahuja, Mitesh M. Khapra, Karthik Sankaranarayanan, Sarath Chandar

Further, unlike existing large scale QA datasets which contain simple questions that can be answered from a single tuple, the questions in our dialogs require a larger subgraph of the KG.

Towards Lossless Encoding of Sentences

1 code implementation • ACL 2019 • Gabriele Prato, Mathieu Duchesneau, Sarath Chandar, Alain Tapp

A lot of work has been done in the field of image compression via machine learning, but not much attention has been given to the compression of natural language.

Maximum Reward Formulation In Reinforcement Learning

1 code implementation • 8 Oct 2020 • Sai Krishna Gottipati, Yashaswi Pathak, Rohan Nuttall, Sahir, Raviteja Chunduru, Ahmed Touati, Sriram Ganapathi Subramanian, Matthew E. Taylor, Sarath Chandar

Reinforcement learning (RL) algorithms typically deal with maximizing the expected cumulative return (discounted or undiscounted, finite or infinite horizon).

Faithfulness Measurable Masked Language Models

1 code implementation • 11 Oct 2023 • Andreas Madsen, Siva Reddy, Sarath Chandar

This is achieved by using a novel fine-tuning method that incorporates masking, such that masking tokens become in-distribution by design.

Are self-explanations from Large Language Models faithful?

1 code implementation • 15 Jan 2024 • Andreas Madsen, Sarath Chandar, Siva Reddy

For example, if an LLM says a set of words is important for making a prediction, then it should not be able to make its prediction without these words.

Towards Evaluating Adaptivity of Model-Based Reinforcement Learning Methods

1 code implementation • 25 Apr 2022 • Yi Wan, Ali Rahimi-Kalahroudi, Janarthanan Rajendran, Ida Momennejad, Sarath Chandar, Harm van Seijen

We empirically validate these insights in the case of linear function approximation by demonstrating that a modified version of linear Dyna achieves effective adaptation to local changes.

Model-based Reinforcement Learning

reinforcement-learning

Environments for Lifelong Reinforcement Learning

2 code implementations • 26 Nov 2018 • Khimya Khetarpal, Shagun Sodhani, Sarath Chandar, Doina Precup

To achieve general artificial intelligence, reinforcement learning (RL) agents should learn not only to optimize returns for one specific task but also to constantly build more complex skills and scaffold their knowledge about the world, without forgetting what has already been learned.

Deep Learning on a Healthy Data Diet: Finding Important Examples for Fairness

1 code implementation • 20 Nov 2022 • Abdelrahman Zayed, Prasanna Parthasarathi, Goncalo Mordido, Hamid Palangi, Samira Shabanian, Sarath Chandar

The fairness achieved by our method surpasses that of data augmentation on three text classification datasets, using no more than half of the examples in the augmented dataset.

Conditionally Optimistic Exploration for Cooperative Deep Multi-Agent Reinforcement Learning

1 code implementation • 16 Mar 2023 • Xutong Zhao, Yangchen Pan, Chenjun Xiao, Sarath Chandar, Janarthanan Rajendran

Efficient exploration is critical in cooperative deep Multi-Agent Reinforcement Learning (MARL).

EpiK-Eval: Evaluation for Language Models as Epistemic Models

1 code implementation • 23 Oct 2023 • Gabriele Prato, Jerry Huang, Prasanna Parthasarathi, Shagun Sodhani, Sarath Chandar

In the age of artificial intelligence, the role of large language models (LLMs) is becoming increasingly central.

Improving Meta-Learning Generalization with Activation-Based Early-Stopping

1 code implementation • 3 Aug 2022 • Simon Guiroy, Christopher Pal, Gonçalo Mordido, Sarath Chandar

Specifically, we analyze the evolution, during meta-training, of the neural activations at each hidden layer, on a small set of unlabelled support examples from a single task of the target task distribution, as this constitutes minimal and justifiably accessible information from the target problem.

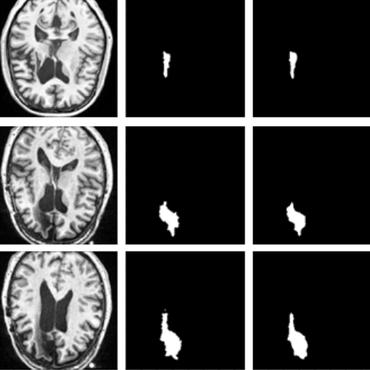

Segmentation of Multiple Sclerosis Lesions across Hospitals: Learn Continually or Train from Scratch?

1 code implementation • 27 Oct 2022 • Enamundram Naga Karthik, Anne Kerbrat, Pierre Labauge, Tobias Granberg, Jason Talbott, Daniel S. Reich, Massimo Filippi, Rohit Bakshi, Virginie Callot, Sarath Chandar, Julien Cohen-Adad

Segmentation of Multiple Sclerosis (MS) lesions is a challenging problem.

Language Expansion In Text-Based Games

no code implementations • 17 May 2018 • Ghulam Ahmed Ansari, Sagar J P, Sarath Chandar, Balaraman Ravindran

Text-based games are suitable test-beds for designing agents that can learn by interaction with the environment in the form of natural language text.

A Deep Reinforcement Learning Chatbot (Short Version)

no code implementations • 20 Jan 2018 • Iulian V. Serban, Chinnadhurai Sankar, Mathieu Germain, Saizheng Zhang, Zhouhan Lin, Sandeep Subramanian, Taesup Kim, Michael Pieper, Sarath Chandar, Nan Rosemary Ke, Sai Rajeswar, Alexandre de Brebisson, Jose M. R. Sotelo, Dendi Suhubdy, Vincent Michalski, Alexandre Nguyen, Joelle Pineau, Yoshua Bengio

We present MILABOT: a deep reinforcement learning chatbot developed by the Montreal Institute for Learning Algorithms (MILA) for the Amazon Alexa Prize competition.

A Deep Reinforcement Learning Chatbot

no code implementations • 7 Sep 2017 • Iulian V. Serban, Chinnadhurai Sankar, Mathieu Germain, Saizheng Zhang, Zhouhan Lin, Sandeep Subramanian, Taesup Kim, Michael Pieper, Sarath Chandar, Nan Rosemary Ke, Sai Rajeshwar, Alexandre de Brebisson, Jose M. R. Sotelo, Dendi Suhubdy, Vincent Michalski, Alexandre Nguyen, Joelle Pineau, Yoshua Bengio

By applying reinforcement learning to crowdsourced data and real-world user interactions, the system has been trained to select an appropriate response from the models in its ensemble.

Dynamic Neural Turing Machine with Soft and Hard Addressing Schemes

no code implementations • 30 Jun 2016 • Caglar Gulcehre, Sarath Chandar, Kyunghyun Cho, Yoshua Bengio

We investigate the mechanisms and effects of learning to read and write into a memory through experiments on Facebook bAbI tasks using both a feedforward and a GRU controller.

Ranked #5 on Question Answering on bAbi

Memory Augmented Neural Networks with Wormhole Connections

no code implementations • 30 Jan 2017 • Caglar Gulcehre, Sarath Chandar, Yoshua Bengio

We use discrete addressing for read/write operations, which helps to substantially reduce the vanishing gradient problem with very long sequences.

A Correlational Encoder Decoder Architecture for Pivot Based Sequence Generation

no code implementations • COLING 2016 • Amrita Saha, Mitesh M. Khapra, Sarath Chandar, Janarthanan Rajendran, Kyunghyun Cho

However, no parallel training data is available between X and Y, but training data is available between X & Z and Z & Y (as is often the case in many real-world applications).

Hierarchical Memory Networks

no code implementations • 24 May 2016 • Sarath Chandar, Sungjin Ahn, Hugo Larochelle, Pascal Vincent, Gerald Tesauro, Yoshua Bengio

In this paper, we explore a form of hierarchical memory network, which can be considered as a hybrid between hard and soft attention memory networks.

Clustering is Efficient for Approximate Maximum Inner Product Search

no code implementations • 21 Jul 2015 • Alex Auvolat, Sarath Chandar, Pascal Vincent, Hugo Larochelle, Yoshua Bengio

Efficient Maximum Inner Product Search (MIPS) is an important task that has a wide applicability in recommendation systems and classification with a large number of classes.

TSEB: More Efficient Thompson Sampling for Policy Learning

no code implementations • 10 Oct 2015 • P. Prasanna, Sarath Chandar, Balaraman Ravindran

In this paper, we propose TSEB, a Thompson Sampling based algorithm with adaptive exploration bonus that aims to solve the problem with tighter PAC guarantees, while being cautious on the regret as well.

Reasoning about Linguistic Regularities in Word Embeddings using Matrix Manifolds

no code implementations • 28 Jul 2015 • Sridhar Mahadevan, Sarath Chandar

In this paper, we introduce a new approach to capture analogies in continuous word representations, based on modeling not just individual word vectors, but rather the subspaces spanned by groups of words.

Towards Training Recurrent Neural Networks for Lifelong Learning

no code implementations • 16 Nov 2018 • Shagun Sodhani, Sarath Chandar, Yoshua Bengio

Both these models are proposed in the context of feedforward networks and we evaluate the feasibility of using them for recurrent networks.

Structure Learning for Neural Module Networks

no code implementations • WS 2019 • Vardaan Pahuja, Jie Fu, Sarath Chandar, Christopher J. Pal

In current formulations of such networks only the parameters of the neural modules and/or the order of their execution is learned.

Slot Contrastive Networks: A Contrastive Approach for Representing Objects

no code implementations • 18 Jul 2020 • Evan Racah, Sarath Chandar

Unsupervised extraction of objects from low-level visual data is an important goal for further progress in machine learning.

MLMLM: Link Prediction with Mean Likelihood Masked Language Model

no code implementations • Findings (ACL) 2021 • Louis Clouatre, Philippe Trempe, Amal Zouaq, Sarath Chandar

They however scale with man-hours and high-quality data.

Ranked #11 on Link Prediction on WN18RR (using extra training data)

Out-of-Distribution Classification and Clustering

no code implementations • 1 Jan 2021 • Gabriele Prato, Sarath Chandar

This includes left out classes from the same dataset, as well as entire datasets never trained on.

A Brief Study on the Effects of Training Generative Dialogue Models with a Semantic loss

1 code implementation • SIGDIAL (ACL) 2021 • Prasanna Parthasarathi, Mohamed Abdelsalam, Joelle Pineau, Sarath Chandar

Neural models trained for next utterance generation in dialogue tasks learn to mimic the n-gram sequences in the training set with training objectives like negative log-likelihood (NLL) or cross-entropy.

Local Structure Matters Most: Perturbation Study in NLU

no code implementations • Findings (ACL) 2022 • Louis Clouatre, Prasanna Parthasarathi, Amal Zouaq, Sarath Chandar

Recent research analyzing the sensitivity of natural language understanding models to word-order perturbations has shown that neural models are surprisingly insensitive to the order of words.

Post-hoc Interpretability for Neural NLP: A Survey

no code implementations • 10 Aug 2021 • Andreas Madsen, Siva Reddy, Sarath Chandar

Neural networks for NLP are becoming increasingly complex and widespread, and there is growing concern about whether these models are responsible to use.

Early-Stopping for Meta-Learning: Estimating Generalization from the Activation Dynamics

no code implementations • 29 Sep 2021 • Simon Guiroy, Christopher Pal, Sarath Chandar

To this end, we empirically show that as meta-training progresses, a model's generalization to a target distribution of novel tasks can be estimated by analysing the dynamics of its neural activations.

Scaling Laws for the Few-Shot Adaptation of Pre-trained Image Classifiers

no code implementations • 13 Oct 2021 • Gabriele Prato, Simon Guiroy, Ethan Caballero, Irina Rish, Sarath Chandar

Empirical science of neural scaling laws is a rapidly growing area of significant importance to the future of machine learning, particularly in the light of recent breakthroughs achieved by large-scale pre-trained models such as GPT-3, CLIP and DALL-E.

Chaotic Continual Learning

no code implementations • ICML Workshop LifelongML 2020 • Touraj Laleh, Mojtaba Faramarzi, Irina Rish, Sarath Chandar

Most proposed approaches for this issue try to compensate for the effects of parameter updates in the batch incremental setup in which the training model visits a lot of samples for several epochs.

Improving Sample Efficiency of Value Based Models Using Attention and Vision Transformers

no code implementations • 1 Feb 2022 • Amir Ardalan Kalantari, Mohammad Amini, Sarath Chandar, Doina Precup

Much of recent Deep Reinforcement Learning success is owed to the neural architecture's potential to learn and use effective internal representations of the world.

An Introduction to Lifelong Supervised Learning

no code implementations • 10 Jul 2022 • Shagun Sodhani, Mojtaba Faramarzi, Sanket Vaibhav Mehta, Pranshu Malviya, Mohamed Abdelsalam, Janarthanan Janarthanan, Sarath Chandar

Following these different classes of learning algorithms, we discuss the commonly used evaluation benchmarks and metrics for lifelong learning (Chapter 6) and wrap up with a discussion of future challenges and important research directions in Chapter 7.

Local Structure Matters Most in Most Languages

no code implementations • 9 Nov 2022 • Louis Clouâtre, Prasanna Parthasarathi, Amal Zouaq, Sarath Chandar

In this work, we replicate a study on the importance of local structure, and the relative unimportance of global structure, in a multilingual setting.

Detecting Languages Unintelligible to Multilingual Models through Local Structure Probes

no code implementations • 9 Nov 2022 • Louis Clouâtre, Prasanna Parthasarathi, Amal Zouaq, Sarath Chandar

However, this transfer is not universal, with many languages not currently understood by multilingual approaches.

SAMSON: Sharpness-Aware Minimization Scaled by Outlier Normalization for Improving DNN Generalization and Robustness

no code implementations • 18 Nov 2022 • Gonçalo Mordido, Sébastien Henwood, Sarath Chandar, François Leduc-Primeau

In this work, we show that applying sharpness-aware training, by optimizing for both the loss value and loss sharpness, significantly improves robustness to noisy hardware at inference time without relying on any assumptions about the target hardware.

PatchBlender: A Motion Prior for Video Transformers

no code implementations • 11 Nov 2022 • Gabriele Prato, Yale Song, Janarthanan Rajendran, R Devon Hjelm, Neel Joshi, Sarath Chandar

We show that our method is successful at enabling vision transformers to encode the temporal component of video data.

Dealing With Non-stationarity in Decentralized Cooperative Multi-Agent Deep Reinforcement Learning via Multi-Timescale Learning

no code implementations • 6 Feb 2023 • Hadi Nekoei, Akilesh Badrinaaraayanan, Amit Sinha, Mohammad Amini, Janarthanan Rajendran, Aditya Mahajan, Sarath Chandar

In our proposed method, when one agent updates its policy, other agents are allowed to update their policies as well, but at a slower rate.

Replay Buffer with Local Forgetting for Adapting to Local Environment Changes in Deep Model-Based Reinforcement Learning

no code implementations • 15 Mar 2023 • Ali Rahimi-Kalahroudi, Janarthanan Rajendran, Ida Momennejad, Harm van Seijen, Sarath Chandar

This is challenging for deep-learning-based world models due to catastrophic forgetting.

Model-based Reinforcement Learning

reinforcement-learning

Should We Attend More or Less? Modulating Attention for Fairness

no code implementations • 22 May 2023 • Abdelrahman Zayed, Goncalo Mordido, Samira Shabanian, Sarath Chandar

In this work, we investigate the role of attention, a widely-used technique in current state-of-the-art NLP models, in the propagation of social biases.

Measuring the Knowledge Acquisition-Utilization Gap in Pretrained Language Models

no code implementations • 24 May 2023 • Amirhossein Kazemnejad, Mehdi Rezagholizadeh, Prasanna Parthasarathi, Sarath Chandar

We propose a systematic framework to measure parametric knowledge utilization in PLMs.

Thompson sampling for improved exploration in GFlowNets

no code implementations • 30 Jun 2023 • Jarrid Rector-Brooks, Kanika Madan, Moksh Jain, Maksym Korablyov, Cheng-Hao Liu, Sarath Chandar, Nikolay Malkin, Yoshua Bengio

Generative flow networks (GFlowNets) are amortized variational inference algorithms that treat sampling from a distribution over compositional objects as a sequential decision-making problem with a learnable action policy.

Lookbehind-SAM: k steps back, 1 step forward

no code implementations • 31 Jul 2023 • Gonçalo Mordido, Pranshu Malviya, Aristide Baratin, Sarath Chandar

Sharpness-aware minimization (SAM) methods have gained increasing popularity by formulating the problem of minimizing both loss value and loss sharpness as a minimax objective.

Towards Few-shot Coordination: Revisiting Ad-hoc Teamplay Challenge In the Game of Hanabi

1 code implementation • 20 Aug 2023 • Hadi Nekoei, Xutong Zhao, Janarthanan Rajendran, Miao Liu, Sarath Chandar

In this work, we show empirically that state-of-the-art ZSC algorithms have poor performance when paired with agents trained with different learning methods, and they require millions of interaction samples to adapt to these new partners.

Self-Influence Guided Data Reweighting for Language Model Pre-training

no code implementations • 2 Nov 2023 • Megh Thakkar, Tolga Bolukbasi, Sriram Ganapathy, Shikhar Vashishth, Sarath Chandar, Partha Talukdar

Once the pre-training corpus has been assembled, all data samples in the corpus are treated with equal importance during LM pre-training.

Language Model-In-The-Loop: Data Optimal Approach to Learn-To-Recommend Actions in Text Games

no code implementations • 13 Nov 2023 • Arjun Vaithilingam Sudhakar, Prasanna Parthasarathi, Janarthanan Rajendran, Sarath Chandar

In this work, we explore and evaluate updating the LLM used for candidate recommendation during the learning of the text-based game as well, to mitigate the reliance on human-annotated gameplays, which are costly to acquire.

Fairness-Aware Structured Pruning in Transformers

1 code implementation • 24 Dec 2023 • Abdelrahman Zayed, Goncalo Mordido, Samira Shabanian, Ioana Baldini, Sarath Chandar

The increasing size of large language models (LLMs) has introduced challenges in their training and inference.

Towards Practical Tool Usage for Continually Learning LLMs

no code implementations • 14 Apr 2024 • Jerry Huang, Prasanna Parthasarathi, Mehdi Rezagholizadeh, Sarath Chandar

Large language models (LLMs) show an innate skill for solving language based tasks.