Search Results for author: Sebastian Lapuschkin

Found 46 papers, 23 papers with code

Explainable concept mappings of MRI: Revealing the mechanisms underlying deep learning-based brain disease classification

no code implementations • 16 Apr 2024 • Christian Tinauer, Anna Damulina, Maximilian Sackl, Martin Soellradl, Reduan Achtibat, Maximilian Dreyer, Frederik Pahde, Sebastian Lapuschkin, Reinhold Schmidt, Stefan Ropele, Wojciech Samek, Christian Langkammer

Using quantitative R2* maps, we separated Alzheimer's patients (n=117) from normal controls (n=219) with a convolutional neural network, systematically investigated the learned concepts using Concept Relevance Propagation, and compared these results to a conventional region-of-interest-based analysis.

Reactive Model Correction: Mitigating Harm to Task-Relevant Features via Conditional Bias Suppression

no code implementations • 15 Apr 2024 • Dilyara Bareeva, Maximilian Dreyer, Frederik Pahde, Wojciech Samek, Sebastian Lapuschkin

Deep Neural Networks are prone to learning and relying on spurious correlations in the training data, which, for high-risk applications, can have fatal consequences.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

PURE: Turning Polysemantic Neurons Into Pure Features by Identifying Relevant Circuits

1 code implementation • 9 Apr 2024 • Maximilian Dreyer, Erblina Purelku, Johanna Vielhaben, Wojciech Samek, Sebastian Lapuschkin

The field of mechanistic interpretability aims to study the role of individual neurons in Deep Neural Networks.

DualView: Data Attribution from the Dual Perspective

2 code implementations • 19 Feb 2024 • Galip Ümit Yolcu, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this work we present DualView, a novel method for post-hoc data attribution based on surrogate modelling, demonstrating both high computational efficiency and good evaluation results.

AttnLRP: Attention-Aware Layer-wise Relevance Propagation for Transformers

1 code implementation • 8 Feb 2024 • Reduan Achtibat, Sayed Mohammad Vakilzadeh Hatefi, Maximilian Dreyer, Aakriti Jain, Thomas Wiegand, Sebastian Lapuschkin, Wojciech Samek

Large Language Models are prone to biased predictions and hallucinations, underlining the paramount importance of understanding their model-internal reasoning process.

Explaining Predictive Uncertainty by Exposing Second-Order Effects

no code implementations • 30 Jan 2024 • Florian Bley, Sebastian Lapuschkin, Wojciech Samek, Grégoire Montavon

So far, the question of explaining predictive uncertainty, i.e., why a model 'doubts', has been scarcely studied.

Sanity Checks Revisited: An Exploration to Repair the Model Parameter Randomisation Test

1 code implementation • 12 Jan 2024 • Anna Hedström, Leander Weber, Sebastian Lapuschkin, Marina MC Höhne

The Model Parameter Randomisation Test (MPRT) is widely acknowledged in the eXplainable Artificial Intelligence (XAI) community for its well-motivated evaluative principle: that the explanation function should be sensitive to changes in the parameters of the model function.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)
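The MPRT principle quoted above can be illustrated with a minimal sketch. It assumes a single linear model, gradient × input as the explanation function, and Spearman rank correlation as the similarity measure; all function names are hypothetical, and the sketch re-draws all weights at once instead of randomizing layer by layer. A low similarity between the original and the randomized explanation indicates that the explanation depends on the model parameters.

```python
import random


def gradient_x_input(weights, x):
    # For a linear model f(x) = sum_i w_i * x_i the gradient is w itself,
    # so gradient x input yields one attribution per input feature.
    return [w * xi for w, xi in zip(weights, x)]


def ranks(values):
    # Rank of each entry (0 = smallest); ties are broken by position, not averaged.
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order):
        result[i] = rank
    return result


def spearman(a, b):
    # Spearman rank correlation = Pearson correlation of the ranks.
    ra, rb = ranks(a), ranks(b)
    n = len(a)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(ra, rb))
    var_a = sum((p - ma) ** 2 for p in ra)
    var_b = sum((q - mb) ** 2 for q in rb)
    return cov / (var_a * var_b) ** 0.5


def mprt_similarity(weights, x, seed=0):
    # Re-draw every weight at random and compare the two explanations.
    # A low score means the explanation reacts to the parameter change.
    rng = random.Random(seed)
    randomized = [rng.gauss(0.0, 1.0) for _ in weights]
    return spearman(gradient_x_input(weights, x), gradient_x_input(randomized, x))


weights = [0.5, -1.2, 2.0, 0.1, -0.7]
x = [1.0, 0.5, -0.4, 2.0, 1.5]
score = mprt_similarity(weights, x)
```

An explanation that ignored the weights (for example, one that only looked at `x`) would score 1.0 here, which is exactly the failure the test is meant to catch.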

Understanding the (Extra-)Ordinary: Validating Deep Model Decisions with Prototypical Concept-based Explanations

1 code implementation • 28 Nov 2023 • Maximilian Dreyer, Reduan Achtibat, Wojciech Samek, Sebastian Lapuschkin

What sets our approach apart is the combination of local and global strategies, enabling a clearer understanding of the (dis-)similarities in model decisions compared to the expected (prototypical) concept use, ultimately reducing the dependence on human long-term assessment.

Generative Fractional Diffusion Models

no code implementations • 26 Oct 2023 • Gabriel Nobis, Marco Aversa, Maximilian Springenberg, Michael Detzel, Stefano Ermon, Shinichi Nakajima, Roderick Murray-Smith, Sebastian Lapuschkin, Christoph Knochenhauer, Luis Oala, Wojciech Samek

We generalize the continuous time framework for score-based generative models from an underlying Brownian motion (BM) to an approximation of fractional Brownian motion (FBM).

Human-Centered Evaluation of XAI Methods

no code implementations • 11 Oct 2023 • Karam Dawoud, Wojciech Samek, Peter Eisert, Sebastian Lapuschkin, Sebastian Bosse

In the ever-evolving field of Artificial Intelligence, a critical challenge has been to decipher the decision-making processes within the so-called "black boxes" in deep learning.

Layer-wise Feedback Propagation

no code implementations • 23 Aug 2023 • Leander Weber, Jim Berend, Alexander Binder, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this paper, we present Layer-wise Feedback Propagation (LFP), a novel training approach for neural-network-like predictors that utilizes explainability, specifically Layer-wise Relevance Propagation (LRP), to assign rewards to individual connections based on their respective contributions to solving a given task.
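How LFP turns relevance into a weight update is not described in this snippet. The sketch below only illustrates the ingredient it names: the LRP-ε rule splitting the output relevance of one dense layer across individual connections (i, j) in proportion to each connection's contribution a_i · w_ij to the pre-activation z_j. The function name and the nested-list weight layout `W[i][j]` are assumptions for illustration.

```python
def lrp_epsilon_dense(a, W, b, relevance_out, eps=1e-6):
    """Redistribute the output relevance of one dense layer onto its connections.

    a: input activations (length I); W: weights indexed W[i][j] (I x J);
    b: biases (length J); relevance_out: relevance of each output unit j.
    Returns (per-connection relevance R_conn[i][j], per-input relevance R_in[i]).
    """
    n_in, n_out = len(a), len(b)
    # Pre-activations z_j = sum_i a_i * w_ij + b_j.
    z = [sum(a[i] * W[i][j] for i in range(n_in)) + b[j] for j in range(n_out)]
    R_conn = [[0.0] * n_out for _ in range(n_in)]
    for j in range(n_out):
        # The epsilon term stabilises the division; its sign follows z_j.
        denom = z[j] + (eps if z[j] >= 0 else -eps)
        for i in range(n_in):
            # Connection (i, j) receives the share of z_j it contributed.
            R_conn[i][j] = a[i] * W[i][j] / denom * relevance_out[j]
    # Relevance of input i is the sum over its outgoing connections.
    R_in = [sum(row) for row in R_conn]
    return R_conn, R_in


a = [1.0, 2.0]
W = [[0.5, -1.0], [0.25, 1.0]]
b = [0.0, 0.0]
R_conn, R_in = lrp_epsilon_dense(a, W, b, relevance_out=[1.0, 1.0])
```

With zero biases and a small ε, the input relevances sum (almost exactly) to the total output relevance; a non-zero bias would absorb part of it.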

From Hope to Safety: Unlearning Biases of Deep Models via Gradient Penalization in Latent Space

1 code implementation • 18 Aug 2023 • Maximilian Dreyer, Frederik Pahde, Christopher J. Anders, Wojciech Samek, Sebastian Lapuschkin

Deep Neural Networks are prone to learning spurious correlations embedded in the training data, leading to potentially biased predictions.

XAI-based Comparison of Input Representations for Audio Event Classification

no code implementations • 27 Apr 2023 • Annika Frommholz, Fabian Seipel, Sebastian Lapuschkin, Wojciech Samek, Johanna Vielhaben

Deep neural networks are a promising tool for Audio Event Classification.

Bridging the Gap: Gaze Events as Interpretable Concepts to Explain Deep Neural Sequence Models

1 code implementation • 12 Apr 2023 • Daniel G. Krakowczyk, Paul Prasse, David R. Reich, Sebastian Lapuschkin, Tobias Scheffer, Lena A. Jäger

In this work, we employ established gaze event detection algorithms for fixations and saccades and quantitatively evaluate the impact of these events by determining their concept influence.
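The snippet does not name the detection algorithms it uses. A widely used baseline for splitting gaze data into fixations and saccades is velocity-threshold identification (I-VT). The sketch below assumes that algorithm, a fixed sampling rate, positions in degrees of visual angle, and an illustrative threshold; the function names are hypothetical.

```python
import math


def ivt(xs, ys, sampling_rate_hz, velocity_threshold):
    """Label each inter-sample step as 'saccade' or 'fixation' (I-VT).

    xs, ys: gaze positions (e.g. degrees of visual angle);
    velocity_threshold: same units per second.
    Returns len(xs) - 1 labels, one per step between consecutive samples.
    """
    dt = 1.0 / sampling_rate_hz
    labels = []
    for k in range(1, len(xs)):
        # Point-to-point angular speed between consecutive samples.
        speed = math.hypot(xs[k] - xs[k - 1], ys[k] - ys[k - 1]) / dt
        labels.append("saccade" if speed > velocity_threshold else "fixation")
    return labels


def group_events(labels):
    # Merge runs of identical labels into (label, first_step, last_step) events.
    events = []
    start = 0
    for k in range(1, len(labels) + 1):
        if k == len(labels) or labels[k] != labels[start]:
            events.append((labels[start], start, k - 1))
            start = k
    return events


xs = [0.0, 0.01, 0.02, 2.0, 4.0, 4.01, 4.02]
ys = [0.0] * len(xs)
labels = ivt(xs, ys, sampling_rate_hz=1000, velocity_threshold=30.0)
events = group_events(labels)
```

The resulting fixation and saccade events are the kind of interpretable units whose influence on a sequence model can then be quantified.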

Reveal to Revise: An Explainable AI Life Cycle for Iterative Bias Correction of Deep Models

1 code implementation • 22 Mar 2023 • Frederik Pahde, Maximilian Dreyer, Wojciech Samek, Sebastian Lapuschkin

To tackle this problem, we propose Reveal to Revise (R2R), a framework entailing the entire eXplainable Artificial Intelligence (XAI) life cycle, enabling practitioners to iteratively identify, mitigate, and (re-)evaluate spurious model behavior with a minimal amount of human interaction.

Explainable AI for Time Series via Virtual Inspection Layers

no code implementations • 11 Mar 2023 • Johanna Vielhaben, Sebastian Lapuschkin, Grégoire Montavon, Wojciech Samek

In this way, we extend the applicability of a family of XAI methods to domains (e.g., speech) where the input is only interpretable after a transformation.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)
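The idea of attributing in a transformed domain can be shown for the simplest case. This sketch assumes a linear model f(x) = w · x and a real-valued orthonormal DCT-II as a stand-in for the transformation (chosen only to keep the arithmetic real; the snippet does not specify the transform or the propagation rule). Because z = Cx and x = Cᵀz, f(x) = (Cw) · z, so frequency component k can be credited with (Cw)_k · z_k. Function names are hypothetical.

```python
import math


def dct_matrix(n):
    # Orthonormal DCT-II basis: row k holds the k-th cosine basis vector.
    C = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        C.append([scale * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n)])
    return C


def matvec(M, v):
    return [sum(m * val for m, val in zip(row, v)) for row in M]


def frequency_relevance(w, x):
    """Attribute f(x) = w . x to DCT coefficients instead of time steps.

    C is orthonormal, so x = C^T z with z = C x, hence f(x) = (C w) . z
    and coefficient k receives (C w)_k * z_k.
    """
    C = dct_matrix(len(x))
    z = matvec(C, x)  # "virtual" forward transform of the input
    v = matvec(C, w)  # model weights expressed in the same basis
    return [vk * zk for vk, zk in zip(v, z)]


w = [0.2, -0.5, 1.0, 0.3]
x = [1.0, 0.0, -1.0, 2.0]
relevance = frequency_relevance(w, x)
```

The coefficient relevances sum to f(x), and a constant signal puts all of its relevance on the k = 0 (DC) component.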

The Meta-Evaluation Problem in Explainable AI: Identifying Reliable Estimators with MetaQuantus

1 code implementation • 14 Feb 2023 • Anna Hedström, Philine Bommer, Kristoffer K. Wickstrøm, Wojciech Samek, Sebastian Lapuschkin, Marina M. -C. Höhne

We address this problem through a meta-evaluation of different quality estimators in XAI, which we define as "the process of evaluating the evaluation method".

Optimizing Explanations by Network Canonization and Hyperparameter Search

no code implementations • 30 Nov 2022 • Frederik Pahde, Galip Ümit Yolcu, Alexander Binder, Wojciech Samek, Sebastian Lapuschkin

We further suggest an XAI evaluation framework with which we quantify and compare the effects of model canonization for various XAI methods in image classification tasks on the Pascal-VOC and ILSVRC2017 datasets, as well as for Visual Question Answering using CLEVR-XAI.

Explainable Artificial Intelligence (XAI)

Image Classification
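The snippet does not define canonization. A common example, assumed here, is folding an inference-mode BatchNorm layer into the preceding linear layer: the network computes the same function, but with one fewer layer type that explanation rules must handle. The sketch uses the identity s · (Wx + b − μ) + β = (sW)x + s(b − μ) + β with s = γ / √(σ² + ε); function names are hypothetical.

```python
import math


def fold_batchnorm(W, b, gamma, beta, mean, var, eps=1e-5):
    """Fold y = gamma * (W x + b - mean) / sqrt(var + eps) + beta into (W', b').

    W is a list of output rows (J x I); BatchNorm statistics are per output unit.
    """
    W_f, b_f = [], []
    for j, row in enumerate(W):
        s = gamma[j] / math.sqrt(var[j] + eps)  # per-unit scale factor
        W_f.append([s * w for w in row])
        b_f.append(s * (b[j] - mean[j]) + beta[j])
    return W_f, b_f


def linear(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def linear_then_bn(W, b, gamma, beta, mean, var, x, eps=1e-5):
    # Reference: the original two-layer computation.
    z = linear(W, b, x)
    return [g * (zj - m) / math.sqrt(v + eps) + be
            for zj, g, be, m, v in zip(z, gamma, beta, mean, var)]


W = [[1.0, -2.0, 0.5], [0.3, 0.0, -1.0]]
b = [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.4]
mean, var = [0.2, -0.1], [0.5, 2.0]
x = [0.7, -1.1, 2.0]
W_f, b_f = fold_batchnorm(W, b, gamma, beta, mean, var)
```

The folded layer reproduces the original outputs up to floating-point error, so any difference in explanations comes from how rules are applied, not from a change in the model function.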

Shortcomings of Top-Down Randomization-Based Sanity Checks for Evaluations of Deep Neural Network Explanations

no code implementations • CVPR 2023 • Alexander Binder, Leander Weber, Sebastian Lapuschkin, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

To address shortcomings of this test, we start by observing an experimental gap in the ranking of explanation methods between randomization-based sanity checks [1] and model output faithfulness measures (e.g., [25]).

Revealing Hidden Context Bias in Segmentation and Object Detection through Concept-specific Explanations

no code implementations • 21 Nov 2022 • Maximilian Dreyer, Reduan Achtibat, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

Applying traditional post-hoc attribution methods to segmentation or object detection predictors offers only limited insights, as the obtained feature attribution maps at input level typically resemble the models' predicted segmentation mask or bounding box.

Explaining automated gender classification of human gait

no code implementations • 16 Oct 2022 • Fabian Horst, Djordje Slijepcevic, Matthias Zeppelzauer, Anna-Maria Raberger, Sebastian Lapuschkin, Wojciech Samek, Wolfgang I. Schöllhorn, Christian Breiteneder, Brian Horsak

State-of-the-art machine learning (ML) models are highly effective in classifying gait analysis data; however, they do not provide explanations for their predictions.

Explaining machine learning models for age classification in human gait analysis

no code implementations • 16 Oct 2022 • Djordje Slijepcevic, Fabian Horst, Marvin Simak, Sebastian Lapuschkin, Anna-Maria Raberger, Wojciech Samek, Christian Breiteneder, Wolfgang I. Schöllhorn, Matthias Zeppelzauer, Brian Horsak

Machine learning (ML) models have proven effective in classifying gait analysis data, e.g., binary classification of young vs. older adults.

From Attribution Maps to Human-Understandable Explanations through Concept Relevance Propagation

2 code implementations • 7 Jun 2022 • Reduan Achtibat, Maximilian Dreyer, Ilona Eisenbraun, Sebastian Bosse, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this work we introduce the Concept Relevance Propagation (CRP) approach, which combines the local and global perspectives and thus allows answering both the "where" and "what" questions for individual predictions.
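The "where" and "what" combination can be sketched on a toy network. This sketch assumes that concepts are individual hidden units of a two-layer ReLU network without biases, and that conditioning on a concept means masking the relevance of all other hidden units before propagating to the input with the LRP-ε rule; names and numbers are hypothetical.

```python
def relu(v):
    return [max(0.0, t) for t in v]


def forward(W1, w2, x):
    # Hidden layer h = relu(W1 x), scalar output y = w2 . h (no biases).
    z = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    h = relu(z)
    y = sum(a * b for a, b in zip(w2, h))
    return z, h, y


def conditional_heatmap(W1, w2, x, concepts, eps=1e-9):
    """Input relevance restricted to the hidden units listed in `concepts`.

    Output relevance y is split over hidden units (R_j = h_j * w2_j), units
    outside `concepts` are masked to zero, and the remaining relevance is
    propagated to the inputs with the LRP-epsilon rule.
    """
    z, h, y = forward(W1, w2, x)
    R_hidden = [hj * wj for hj, wj in zip(h, w2)]  # "what": relevance per concept
    R_in = [0.0] * len(x)
    for j in concepts:
        denom = z[j] + (eps if z[j] >= 0 else -eps)
        for i, xi in enumerate(x):
            R_in[i] += xi * W1[j][i] / denom * R_hidden[j]  # "where": input heatmap
    return R_in


W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
w2 = [1.0, 2.0, -0.5]
x = [2.0, 1.0]
full = conditional_heatmap(W1, w2, x, concepts=[0, 1, 2])
parts = [conditional_heatmap(W1, w2, x, concepts=[c]) for c in range(3)]
```

Here the second input receives zero relevance overall, yet the concept-conditional heatmaps reveal that hidden unit 0 uses it negatively and hidden unit 1 positively, which a single attribution map hides.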

Explain to Not Forget: Defending Against Catastrophic Forgetting with XAI

no code implementations • 4 May 2022 • Sami Ede, Serop Baghdadlian, Leander Weber, An Nguyen, Dario Zanca, Wojciech Samek, Sebastian Lapuschkin

The ability to continuously process and retain new information like we do naturally as humans is a feat that is highly sought after when training neural networks.

But that's not why: Inference adjustment by interactive prototype revision

no code implementations • 18 Mar 2022 • Michael Gerstenberger, Sebastian Lapuschkin, Peter Eisert, Sebastian Bosse

It shows that even correct classifications can rely on unreasonable prototypes that result from confounding variables in a dataset.

Beyond Explaining: Opportunities and Challenges of XAI-Based Model Improvement

no code implementations • 15 Mar 2022 • Leander Weber, Sebastian Lapuschkin, Alexander Binder, Wojciech Samek

We conclude that while model improvement based on XAI can have significant beneficial effects even on complex and not easily quantifiable model properties, these methods need to be applied carefully, since their success can vary depending on a multitude of factors, such as the model and dataset used or the employed explanation method.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Measurably Stronger Explanation Reliability via Model Canonization

no code implementations • 14 Feb 2022 • Franz Motzkus, Leander Weber, Sebastian Lapuschkin

While rule-based attribution methods have proven useful for providing local explanations for Deep Neural Networks, explaining modern and more varied network architectures yields new challenges in generating trustworthy explanations, since the established rule sets might not be sufficient or applicable to novel network structures.

Quantus: An Explainable AI Toolkit for Responsible Evaluation of Neural Network Explanations and Beyond

1 code implementation • NeurIPS 2023 • Anna Hedström, Leander Weber, Dilyara Bareeva, Daniel Krakowczyk, Franz Motzkus, Wojciech Samek, Sebastian Lapuschkin, Marina M. -C. Höhne

The evaluation of explanation methods is a research topic that has not yet been explored deeply; however, since explainability is supposed to strengthen trust in artificial intelligence, it is necessary to systematically review and compare explanation methods in order to confirm their correctness.

Navigating Neural Space: Revisiting Concept Activation Vectors to Overcome Directional Divergence

no code implementations • 7 Feb 2022 • Frederik Pahde, Maximilian Dreyer, Leander Weber, Moritz Weckbecker, Christopher J. Anders, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

With a growing interest in understanding neural network prediction strategies, Concept Activation Vectors (CAVs) have emerged as a popular tool for modeling human-understandable concepts in the latent space.
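In the classifier-based formulation popularised by TCAV, a CAV is the normal vector of a linear classifier that separates latent activations of concept examples from those of random examples. The sketch below assumes toy 2-D "activations" and plain full-batch gradient-descent logistic regression; it shows only this common formulation, not the paper's own proposal, and the function name is hypothetical.

```python
import math
import random


def train_cav(concept_acts, random_acts, lr=0.1, epochs=500):
    """Fit a logistic-regression probe (concept = 1, random = 0) and return
    its unit-length weight vector, i.e. the normal of the decision boundary."""
    data = [(a, 1.0) for a in concept_acts] + [(a, 0.0) for a in random_acts]
    dim = len(concept_acts[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for a, label in data:
            logit = sum(wi * ai for wi, ai in zip(w, a)) + b
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - label  # derivative of the log-loss w.r.t. the logit
            for i in range(dim):
                grad_w[i] += err * a[i]
            grad_b += err
        # Gradient descent step on the mean log-loss.
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]


# Toy 2-D "activations": the concept shifts the first latent dimension.
rng = random.Random(0)
concept = [[rng.gauss(2.0, 0.5), rng.gauss(0.0, 0.5)] for _ in range(100)]
randoms = [[rng.gauss(0.0, 0.5), rng.gauss(0.0, 0.5)] for _ in range(100)]
cav = train_cav(concept, randoms)
```

In TCAV, a concept sensitivity is then obtained as the directional derivative of a class logit along such a vector.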

ECQˣ: Explainability-Driven Quantization for Low-Bit and Sparse DNNs

no code implementations • 9 Sep 2021 • Daniel Becking, Maximilian Dreyer, Wojciech Samek, Karsten Müller, Sebastian Lapuschkin

The remarkable success of deep neural networks (DNNs) in various applications is accompanied by a significant increase in network parameters and arithmetic operations.

Software for Dataset-wide XAI: From Local Explanations to Global Insights with Zennit, CoRelAy, and ViRelAy

3 code implementations • 24 Jun 2021 • Christopher J. Anders, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Deep Neural Networks (DNNs) are known to be strong predictors, but their prediction strategies can rarely be understood.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Explanation-Guided Training for Cross-Domain Few-Shot Classification

1 code implementation • 17 Jul 2020 • Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Yunqing Zhao, Ngai-Man Cheung, Alexander Binder

It leverages the explanation scores obtained by applying existing explanation methods to the predictions of FSC models, computed for the models' intermediate feature maps.

Ranked #8 on Cross-Domain Few-Shot on ISIC2018

Understanding Integrated Gradients with SmoothTaylor for Deep Neural Network Attribution

1 code implementation • arXiv 2020 • Gary S. W. Goh, Sebastian Lapuschkin, Leander Weber, Wojciech Samek, Alexander Binder

From our experiments, we find that the SmoothTaylor approach together with adaptive noising is able to generate better-quality saliency maps with less noise and higher sensitivity to the relevant points in the input space than Integrated Gradients.
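For reference, Integrated Gradients attributes IG_i = (x_i − x'_i) · ∫₀¹ ∂f/∂x_i(x' + α(x − x')) dα to each input, relative to a baseline x'. The sketch covers only IG (not SmoothTaylor) and assumes a midpoint Riemann sum, a zero baseline, and a toy function with a hand-written gradient in place of a neural network; names are hypothetical.

```python
def integrated_gradients(grad_f, x, baseline, steps=50):
    """Approximate IG_i = (x_i - x'_i) * integral_0^1 df/dx_i(x' + a (x - x')) da
    with a midpoint Riemann sum over `steps` points on the straight path."""
    n = len(x)
    totals = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = grad_f(point)
        for i in range(n):
            totals[i] += g[i]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


# Toy model with an analytic gradient: f(x) = x0^2 + 3 * x0 * x1.
def f(x):
    return x[0] ** 2 + 3 * x[0] * x[1]


def grad_f(x):
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


attributions = integrated_gradients(grad_f, [1.0, 2.0], [0.0, 0.0])  # ≈ [4.0, 3.0]
```

The attributions satisfy completeness: they sum to f(x) − f(x') = 7. For this quadratic toy function the midpoint rule is exact, whereas for a real network the number of steps controls the approximation error.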

Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications

no code implementations • 17 Mar 2020 • Wojciech Samek, Grégoire Montavon, Sebastian Lapuschkin, Christopher J. Anders, Klaus-Robert Müller

With the broader and highly successful usage of machine learning in industry and the sciences, there has been a growing demand for Explainable AI.

Explain and Improve: LRP-Inference Fine-Tuning for Image Captioning Models

1 code implementation • 4 Jan 2020 • Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Alexander Binder

We develop variants of layer-wise relevance propagation (LRP) and gradient-based explanation methods, tailored to image captioning models with attention mechanisms.

Finding and Removing Clever Hans: Using Explanation Methods to Debug and Improve Deep Models

2 code implementations • 22 Dec 2019 • Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Based on a recent technique, Spectral Relevance Analysis, we propose the following technical contributions and resulting findings: (a) a scalable quantification of artifactual and poisoned classes where the machine learning models under study exhibit Clever Hans (CH) behavior, and (b) several approaches denoted as Class Artifact Compensation (ClArC), which are able to effectively and significantly reduce a model's CH behavior.

Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning

1 code implementation • 18 Dec 2019 • Seul-Ki Yeom, Philipp Seegerer, Sebastian Lapuschkin, Alexander Binder, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

The success of convolutional neural networks (CNNs) in various applications is accompanied by a significant increase in computation and parameter storage costs.

Explainable Artificial Intelligence (XAI)

Model Compression

On the Explanation of Machine Learning Predictions in Clinical Gait Analysis

2 code implementations • 16 Dec 2019 • Djordje Slijepcevic, Fabian Horst, Sebastian Lapuschkin, Anna-Maria Raberger, Matthias Zeppelzauer, Wojciech Samek, Christian Breiteneder, Wolfgang I. Schöllhorn, Brian Horsak

Machine learning (ML) is increasingly used to support decision-making in the healthcare sector.

Towards Best Practice in Explaining Neural Network Decisions with LRP

1 code implementation • 22 Oct 2019 • Maximilian Kohlbrenner, Alexander Bauer, Shinichi Nakajima, Alexander Binder, Wojciech Samek, Sebastian Lapuschkin

In this paper, we focus on a popular and widely used method of XAI, the Layer-wise Relevance Propagation (LRP).

Ranked #1 on Object Detection on SIXray

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Resolving challenges in deep learning-based analyses of histopathological images using explanation methods

no code implementations • 15 Aug 2019 • Miriam Hägele, Philipp Seegerer, Sebastian Lapuschkin, Michael Bockmayr, Wojciech Samek, Frederick Klauschen, Klaus-Robert Müller, Alexander Binder

Deep learning has recently gained popularity in digital pathology due to its high prediction quality.

Unmasking Clever Hans Predictors and Assessing What Machines Really Learn

1 code implementation • 26 Feb 2019 • Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

Current learning machines have successfully solved hard application problems, reaching high accuracy and displaying seemingly "intelligent" behavior.

Explaining the Unique Nature of Individual Gait Patterns with Deep Learning

1 code implementation • 13 Aug 2018 • Fabian Horst, Sebastian Lapuschkin, Wojciech Samek, Klaus-Robert Müller, Wolfgang I. Schöllhorn

Machine learning (ML) techniques such as (deep) artificial neural networks (DNN) are very successfully solving a plethora of tasks and provide new predictive models for complex physical, chemical, biological and social systems.

iNNvestigate neural networks!

1 code implementation • 13 Aug 2018 • Maximilian Alber, Sebastian Lapuschkin, Philipp Seegerer, Miriam Hägele, Kristof T. Schütt, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller, Sven Dähne, Pieter-Jan Kindermans

The presented library iNNvestigate addresses this by providing a common interface and out-of-the-box implementation for many analysis methods, including the reference implementation for PatternNet and PatternAttribution as well as for LRP methods.

AudioMNIST: Exploring Explainable Artificial Intelligence for Audio Analysis on a Simple Benchmark

2 code implementations • 9 Jul 2018 • Sören Becker, Johanna Vielhaben, Marcel Ackermann, Klaus-Robert Müller, Sebastian Lapuschkin, Wojciech Samek

Explainable Artificial Intelligence (XAI) is targeted at understanding how models perform feature selection and derive their classification decisions.

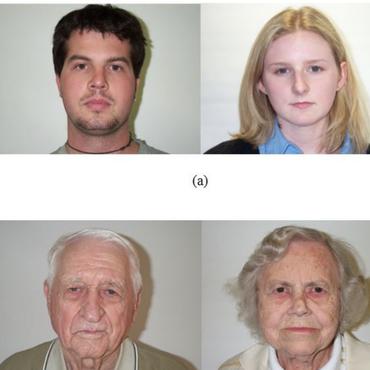

Understanding and Comparing Deep Neural Networks for Age and Gender Classification

no code implementations • 25 Aug 2017 • Sebastian Lapuschkin, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

Recently, deep neural networks have demonstrated excellent performance in recognizing age and gender from human face images.

Interpreting the Predictions of Complex ML Models by Layer-wise Relevance Propagation

no code implementations • 24 Nov 2016 • Wojciech Samek, Grégoire Montavon, Alexander Binder, Sebastian Lapuschkin, Klaus-Robert Müller

Complex nonlinear models such as deep neural networks (DNNs) have become an important tool for image classification, speech recognition, natural language processing, and many other fields of application.