Search Results for author: Ser-Nam Lim

Found 95 papers, 46 papers with code

Combining Label Propagation and Simple Models Out-performs Graph Neural Networks

7 code implementations • ICLR 2021 • Qian Huang, Horace He, Abhay Singh, Ser-Nam Lim, Austin R. Benson

Graph Neural Networks (GNNs) are the predominant technique for learning over graphs.

Node Classification on Non-Homophilic (Heterophilic) Graphs

Node Property Prediction

A Metric Learning Reality Check

4 code implementations • ECCV 2020 • Kevin Musgrave, Serge Belongie, Ser-Nam Lim

Deep metric learning papers from the past four years have consistently claimed great advances in accuracy, often more than doubling the performance of decade-old methods.

PyTorch Metric Learning

1 code implementation • 20 Aug 2020 • Kevin Musgrave, Serge Belongie, Ser-Nam Lim

Deep metric learning algorithms have a wide variety of applications, but implementing these algorithms can be tedious and time consuming.

PyTorch Adapt

2 code implementations • 28 Nov 2022 • Kevin Musgrave, Serge Belongie, Ser-Nam Lim

PyTorch Adapt is a library for domain adaptation, a type of machine learning algorithm that re-purposes existing models to work in new domains.

HorNet: Efficient High-Order Spatial Interactions with Recursive Gated Convolutions

7 code implementations • 28 Jul 2022 • Yongming Rao, Wenliang Zhao, Yansong Tang, Jie Zhou, Ser-Nam Lim, Jiwen Lu

In this paper, we show that the key ingredients behind the vision Transformers, namely input-adaptive, long-range and high-order spatial interactions, can also be efficiently implemented with a convolution-based framework.

Ranked #20 on Semantic Segmentation on ADE20K

Visual Prompt Tuning

6 code implementations • 23 Mar 2022 • Menglin Jia, Luming Tang, Bor-Chun Chen, Claire Cardie, Serge Belongie, Bharath Hariharan, Ser-Nam Lim

The current modus operandi in adapting pre-trained models involves updating all the backbone parameters, i.e., full fine-tuning.

Ranked #2 on Prompt Engineering on ImageNet-21k

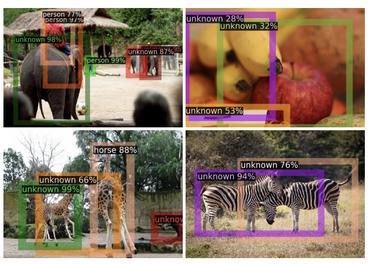

Detecting Everything in the Open World: Towards Universal Object Detection

1 code implementation • CVPR 2023 • Zhenyu Wang, YaLi Li, Xi Chen, Ser-Nam Lim, Antonio Torralba, Hengshuang Zhao, Shengjin Wang

In this paper, we formally address universal object detection, which aims to detect every scene and predict every category.

Three New Validators and a Large-Scale Benchmark Ranking for Unsupervised Domain Adaptation

1 code implementation • 15 Aug 2022 • Kevin Musgrave, Serge Belongie, Ser-Nam Lim

In a supervised setting, these validators evaluate checkpoints by computing accuracy on a validation set that has labels.

NeRV: Neural Representations for Videos

3 code implementations • NeurIPS 2021 • Hao Chen, Bo He, Hanyu Wang, Yixuan Ren, Ser-Nam Lim, Abhinav Shrivastava

In contrast, with NeRV, we can use any neural network compression method as a proxy for video compression, and achieve comparable performance to traditional frame-based video compression approaches (H.264, HEVC, etc.).

Ranked #6 on Video Reconstruction on UVG

Edge Proposal Sets for Link Prediction

2 code implementations • 30 Jun 2021 • Abhay Singh, Qian Huang, Sijia Linda Huang, Omkar Bhalerao, Horace He, Ser-Nam Lim, Austin R. Benson

Here, we demonstrate how simply adding a set of edges, which we call a proposal set, to the graph as a pre-processing step can improve the performance of several link prediction algorithms.

Ranked #1 on Link Property Prediction on ogbl-ddi

Unconstrained Facial Expression Transfer using Style-based Generator

1 code implementation • 12 Dec 2019 • Chao Yang, Ser-Nam Lim

Given two face images, our method can create plausible results that combine the appearance of one image and the expression of the other.

RegMixup: Mixup as a Regularizer Can Surprisingly Improve Accuracy and Out Distribution Robustness

2 code implementations • 29 Jun 2022 • Francesco Pinto, Harry Yang, Ser-Nam Lim, Philip H. S. Torr, Puneet K. Dokania

We show that the effectiveness of the well celebrated Mixup [Zhang et al., 2018] can be further improved if instead of using it as the sole learning objective, it is utilized as an additional regularizer to the standard cross-entropy loss.
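
The idea in this abstract, keeping the standard cross-entropy loss and adding a Mixup term as a regularizer, can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: the function names, the toy model, and the `alpha` and `eta` defaults are all assumptions chosen for the example.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target):
    """Cross-entropy between predicted probabilities and a (possibly soft) target."""
    return -sum(t * math.log(max(p, 1e-12)) for p, t in zip(probs, target))


def mix(a, b, lam):
    """Convex combination lam * a + (1 - lam) * b, element-wise."""
    return [lam * x + (1.0 - lam) * y for x, y in zip(a, b)]


def regmixup_style_loss(model, x1, y1, x2, y2, alpha=1.0, eta=1.0, rng=random):
    """Cross-entropy on the clean sample plus eta times a Mixup cross-entropy term.

    alpha (Beta distribution parameter) and eta (regularizer weight) are
    illustrative hyperparameters, not values taken from the paper.
    """
    lam = rng.betavariate(alpha, alpha)
    clean_term = cross_entropy(model(x1), y1)
    mixed_term = cross_entropy(model(mix(x1, x2, lam)), mix(y1, y2, lam))
    return clean_term + eta * mixed_term


def toy_model(x):
    """Hypothetical stand-in for a classifier: treats the input as logits."""
    return softmax(x)


x1, y1 = [2.0, 0.0], [1.0, 0.0]   # made-up sample with a one-hot label
x2, y2 = [0.0, 2.0], [0.0, 1.0]   # second made-up sample to mix with
loss = regmixup_style_loss(toy_model, x1, y1, x2, y2)
```

Setting `eta=0.0` recovers plain cross-entropy training, which makes the role of the Mixup term as an add-on regularizer explicit.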

On Feature Normalization and Data Augmentation

1 code implementation • CVPR 2021 • Boyi Li, Felix Wu, Ser-Nam Lim, Serge Belongie, Kilian Q. Weinberger

The moments (a.k.a. mean and standard deviation) of latent features are often removed as noise when training image recognition models, to increase stability and reduce training time.

Ranked #32 on Domain Generalization on ImageNet-A

Enhancing Adversarial Example Transferability with an Intermediate Level Attack

2 code implementations • ICCV 2019 • Qian Huang, Isay Katsman, Horace He, Zeqi Gu, Serge Belongie, Ser-Nam Lim

We show that we can select a layer of the source model to perturb without any knowledge of the target models while achieving high transferability.

What makes fake images detectable? Understanding properties that generalize

1 code implementation • ECCV 2020 • Lucy Chai, David Bau, Ser-Nam Lim, Phillip Isola

The quality of image generation and manipulation is reaching impressive levels, making it increasingly difficult for a human to distinguish between what is real and what is fake.

New Benchmarks for Learning on Non-Homophilous Graphs

1 code implementation • 3 Apr 2021 • Derek Lim, Xiuyu Li, Felix Hohne, Ser-Nam Lim

Much data with graph structures satisfy the principle of homophily, meaning that connected nodes tend to be similar with respect to a specific attribute.

Ranked #4 on Node Classification on Yelp-Fraud

Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods

3 code implementations • NeurIPS 2021 • Derek Lim, Felix Hohne, Xiuyu Li, Sijia Linda Huang, Vaishnavi Gupta, Omkar Bhalerao, Ser-Nam Lim

Many widely used datasets for graph machine learning tasks have generally been homophilous, where nodes with similar labels connect to each other.

Ranked #2 on Node Classification on wiki

Graph Learning

Node Classification on Non-Homophilic (Heterophilic) Graphs

Neural Manifold Ordinary Differential Equations

3 code implementations • NeurIPS 2020 • Aaron Lou, Derek Lim, Isay Katsman, Leo Huang, Qingxuan Jiang, Ser-Nam Lim, Christopher De Sa

To better conform to data geometry, recent deep generative modelling techniques adapt Euclidean constructions to non-Euclidean spaces.

HNeRV: A Hybrid Neural Representation for Videos

1 code implementation • CVPR 2023 • Hao Chen, Matt Gwilliam, Ser-Nam Lim, Abhinav Shrivastava

Such embedding largely limits the regression capacity and internal generalization for video interpolation.

Ranked #3 on Video Reconstruction on UVG

Object Recognition as Next Token Prediction

1 code implementation • 4 Dec 2023 • Kaiyu Yue, Bor-Chun Chen, Jonas Geiping, Hengduo Li, Tom Goldstein, Ser-Nam Lim

We present an approach to pose object recognition as next token prediction.

MA-LMM: Memory-Augmented Large Multimodal Model for Long-Term Video Understanding

1 code implementation • 8 Apr 2024 • Bo He, Hengduo Li, Young Kyun Jang, Menglin Jia, Xuefei Cao, Ashish Shah, Abhinav Shrivastava, Ser-Nam Lim

However, existing LLM-based large multimodal models (e.g., Video-LLaMA, VideoChat) can only take in a limited number of frames for short video understanding.

Ranked #1 on Video Classification on COIN

M2TR: Multi-modal Multi-scale Transformers for Deepfake Detection

1 code implementation • 20 Apr 2021 • Junke Wang, Zuxuan Wu, Wenhao Ouyang, Xintong Han, Jingjing Chen, Ser-Nam Lim, Yu-Gang Jiang

The widespread dissemination of Deepfakes demands effective approaches that can detect perceptually convincing forged images.

Graph Inductive Biases in Transformers without Message Passing

1 code implementation • 27 May 2023 • Liheng Ma, Chen Lin, Derek Lim, Adriana Romero-Soriano, Puneet K. Dokania, Mark Coates, Philip Torr, Ser-Nam Lim

Graph inductive biases are crucial for Graph Transformers, and previous works incorporate them using message-passing modules and/or positional encodings.

Ranked #1 on Node Classification on PATTERN

Differentiating through the Fréchet Mean

2 code implementations • ICML 2020 • Aaron Lou, Isay Katsman, Qingxuan Jiang, Serge Belongie, Ser-Nam Lim, Christopher De Sa

Recent advances in deep representation learning on Riemannian manifolds extend classical deep learning operations to better capture the geometry of the manifold.

Rethinking Nearest Neighbors for Visual Classification

1 code implementation • 15 Dec 2021 • Menglin Jia, Bor-Chun Chen, Zuxuan Wu, Claire Cardie, Serge Belongie, Ser-Nam Lim

In this paper, we investigate $k$-Nearest-Neighbor (k-NN) classifiers, a classical model-free learning method from the pre-deep learning era, as an augmentation to modern neural network based approaches.

Intentonomy: a Dataset and Study towards Human Intent Understanding

1 code implementation • CVPR 2021 • Menglin Jia, Zuxuan Wu, Austin Reiter, Claire Cardie, Serge Belongie, Ser-Nam Lim

Based on our findings, we conduct further study to quantify the effect of attending to object and context classes as well as textual information in the form of hashtags when training an intent classifier.

Better Set Representations For Relational Reasoning

1 code implementation • NeurIPS 2020 • Qian Huang, Horace He, Abhay Singh, Yan Zhang, Ser-Nam Lim, Austin Benson

Incorporating relational reasoning into neural networks has greatly expanded their capabilities and scope.

Making an Invisibility Cloak: Real World Adversarial Attacks on Object Detectors

2 code implementations • ECCV 2020 • Zuxuan Wu, Ser-Nam Lim, Larry Davis, Tom Goldstein

We present a systematic study of adversarial attacks on state-of-the-art object detection frameworks.

Towards Adversarial Evaluations for Inexact Machine Unlearning

3 code implementations • 17 Jan 2022 • Shashwat Goel, Ameya Prabhu, Amartya Sanyal, Ser-Nam Lim, Philip Torr, Ponnurangam Kumaraguru

Machine Learning models face increased concerns regarding the storage of personal user data and adverse impacts of corrupted data like backdoors or systematic bias.

Spartan: Differentiable Sparsity via Regularized Transportation

1 code implementation • 27 May 2022 • Kai Sheng Tai, Taipeng Tian, Ser-Nam Lim

We present Spartan, a method for training sparse neural network models with a predetermined level of sparsity.

Test-Time Distribution Normalization for Contrastively Learned Vision-language Models

2 code implementations • 22 Feb 2023 • Yifei Zhou, Juntao Ren, Fengyu Li, Ramin Zabih, Ser-Nam Lim

Advances in the field of vision-language contrastive learning have made it possible for many downstream applications to be carried out efficiently and accurately by simply taking the dot product between image and text representations.

Computationally Budgeted Continual Learning: What Does Matter?

1 code implementation • CVPR 2023 • Ameya Prabhu, Hasan Abed Al Kader Hammoud, Puneet Dokania, Philip H. S. Torr, Ser-Nam Lim, Bernard Ghanem, Adel Bibi

Our conclusions are consistent across different numbers of stream time steps, e.g., 20 to 200, and under several computational budgets.

When in Doubt: Improving Classification Performance with Alternating Normalization

1 code implementation • Findings (EMNLP) 2021 • Menglin Jia, Austin Reiter, Ser-Nam Lim, Yoav Artzi, Claire Cardie

We introduce Classification with Alternating Normalization (CAN), a non-parametric post-processing step for classification.

Deep Co-Training with Task Decomposition for Semi-Supervised Domain Adaptation

1 code implementation • ICCV 2021 • Luyu Yang, Yan Wang, Mingfei Gao, Abhinav Shrivastava, Kilian Q. Weinberger, Wei-Lun Chao, Ser-Nam Lim

To integrate the strengths of the two classifiers, we apply the well-established co-training framework, in which the two classifiers exchange their high confident predictions to iteratively "teach each other" so that both classifiers can excel in the target domain.

Semi-supervised Domain Adaptation

Unsupervised Domain Adaptation

Measuring Dataset Granularity

1 code implementation • 21 Dec 2019 • Yin Cui, Zeqi Gu, Dhruv Mahajan, Laurens van der Maaten, Serge Belongie, Ser-Nam Lim

We also investigate the interplay between dataset granularity with a variety of factors and find that fine-grained datasets are more difficult to learn from, more difficult to transfer to, more difficult to perform few-shot learning with, and more vulnerable to adversarial attacks.

Exploring Visual Engagement Signals for Representation Learning

1 code implementation • ICCV 2021 • Menglin Jia, Zuxuan Wu, Austin Reiter, Claire Cardie, Serge Belongie, Ser-Nam Lim

Visual engagement in social media platforms comprises interactions with photo posts including comments, shares, and likes.

Equivariant Manifold Flows

1 code implementation • NeurIPS 2021 • Isay Katsman, Aaron Lou, Derek Lim, Qingxuan Jiang, Ser-Nam Lim, Christopher De Sa

Tractably modelling distributions over manifolds has long been an important goal in the natural sciences.

TIPI: Test Time Adaptation With Transformation Invariance

1 code implementation • CVPR 2023 • A. Tuan Nguyen, Thanh Nguyen-Tang, Ser-Nam Lim, Philip H.S. Torr

Test Time Adaptation offers a means to combat this problem, as it allows the model to adapt during test time to the new data distribution, using only unlabeled test data batches.

Object-Centric Unsupervised Image Captioning

1 code implementation • 2 Dec 2021 • Zihang Meng, David Yang, Xuefei Cao, Ashish Shah, Ser-Nam Lim

Our work in this paper overcomes this by harvesting objects corresponding to a given sentence from the training set, even if they don't belong to the same image.

Quantization Guided JPEG Artifact Correction

1 code implementation • ECCV 2020 • Max Ehrlich, Larry Davis, Ser-Nam Lim, Abhinav Shrivastava

The JPEG image compression algorithm is the most popular method of image compression because of its ability for large compression ratios.

Sample-dependent Adaptive Temperature Scaling for Improved Calibration

1 code implementation • 13 Jul 2022 • Tom Joy, Francesco Pinto, Ser-Nam Lim, Philip H. S. Torr, Puneet K. Dokania

The most common post-hoc approach to compensate for this is to perform temperature scaling, which adjusts the confidences of the predictions on any input by scaling the logits by a fixed value.
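
As a rough illustration of the temperature scaling baseline this abstract describes, the sketch below divides every logit by one fixed temperature before the softmax. The logits and the temperature value are made up for the example; in practice the temperature is usually fitted on held-out data, which is not shown here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def temperature_scale(logits, temperature):
    """Divide every logit by the same fixed temperature, then apply softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    return softmax([z / temperature for z in logits])


logits = [2.0, 1.0, 0.1]                                  # hypothetical logits for one input
uncalibrated = softmax(logits)                            # confidences before scaling
calibrated = temperature_scale(logits, temperature=2.0)   # temperature > 1 softens them
```

Because the same temperature is applied to every input, the predicted class never changes; only the confidence assigned to it does.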

$BT^2$: Backward-compatible Training with Basis Transformation

1 code implementation • 8 Nov 2022 • Yifei Zhou, Zilu Li, Abhinav Shrivastava, Hengshuang Zhao, Antonio Torralba, Taipeng Tian, Ser-Nam Lim

In this way, the new representation can be directly compared with the old representation, in principle avoiding the need for any backfilling.

Rapid Adaptation in Online Continual Learning: Are We Evaluating It Right?

1 code implementation • ICCV 2023 • Hasan Abed Al Kader Hammoud, Ameya Prabhu, Ser-Nam Lim, Philip H. S. Torr, Adel Bibi, Bernard Ghanem

We revisit the common practice of evaluating adaptation of Online Continual Learning (OCL) algorithms through the metric of online accuracy, which measures the accuracy of the model on the immediate next few samples.
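
The online accuracy metric mentioned here can be sketched as a test-then-train loop: each incoming batch is scored before the model learns from it. The `MajorityLabel` learner and the tiny stream below are hypothetical stand-ins used only to make the loop runnable; they are not from the paper.

```python
from collections import Counter


class MajorityLabel:
    """Hypothetical toy learner that always predicts the most frequent label seen so far."""

    def __init__(self):
        self.counts = Counter()

    def predict(self, x):
        return self.counts.most_common(1)[0][0] if self.counts else None

    def update(self, x, y):
        self.counts[y] += 1


def online_accuracy(model, batches):
    """Accuracy on each incoming batch, measured before training on that batch."""
    correct = 0
    total = 0
    for batch in batches:
        # Evaluate first: these are the "immediate next few samples".
        correct += sum(model.predict(x) == y for x, y in batch)
        total += len(batch)
        # Only afterwards does the model get to learn from the batch.
        for x, y in batch:
            model.update(x, y)
    return correct / total if total else 0.0


# Made-up stream of three batches of (input, label) pairs.
batches = [[(0, "a"), (1, "a")], [(2, "a"), (3, "b")], [(4, "b"), (5, "b")]]
score = online_accuracy(MajorityLabel(), batches)
```

In a loop like this, a learner that simply repeats recently seen labels can score well whenever consecutive labels are correlated, which is the kind of effect that motivates re-examining the metric.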

BT^2: Backward-compatible Training with Basis Transformation

1 code implementation • ICCV 2023 • Yifei Zhou, Zilu Li, Abhinav Shrivastava, Hengshuang Zhao, Antonio Torralba, Taipeng Tian, Ser-Nam Lim

In this way, the new representation can be directly compared with the old representation, in principle avoiding the need for any backfilling.

GAPX: Generalized Autoregressive Paraphrase-Identification X

1 code implementation • 5 Oct 2022 • Yifei Zhou, Renyu Li, Hayden Housen, Ser-Nam Lim

Paraphrase Identification is a fundamental task in Natural Language Processing.

Language-free Compositional Action Generation via Decoupling Refinement

1 code implementation • 7 Jul 2023 • Xiao Liu, Guangyi Chen, Yansong Tang, Guangrun Wang, Xiao-Ping Zhang, Ser-Nam Lim

Composing simple elements into complex concepts is crucial yet challenging, especially for 3D action generation.

Intermediate Level Adversarial Attack for Enhanced Transferability

no code implementations • 20 Nov 2018 • Qian Huang, Zeqi Gu, Isay Katsman, Horace He, Pian Pawakapan, Zhiqiu Lin, Serge Belongie, Ser-Nam Lim

Neural networks are vulnerable to adversarial examples, malicious inputs crafted to fool trained models.

Adversarial Example Decomposition

no code implementations • 4 Dec 2018 • Horace He, Aaron Lou, Qingxuan Jiang, Isay Katsman, Serge Belongie, Ser-Nam Lim

Research has shown that widely used deep neural networks are vulnerable to carefully crafted adversarial perturbations.

Efficient Object Embedding for Spliced Image Retrieval

no code implementations • CVPR 2021 • Bor-Chun Chen, Zuxuan Wu, Larry S. Davis, Ser-Nam Lim

Detecting spliced images is one of the emerging challenges in computer vision.

Cross-X Learning for Fine-Grained Visual Categorization

no code implementations • ICCV 2019 • Wei Luo, Xitong Yang, Xianjie Mo, Yuheng Lu, Larry S. Davis, Jun Li, Jian Yang, Ser-Nam Lim

Recognizing objects from subcategories with very subtle differences remains a challenging task due to the large intra-class and small inter-class variation.

Ranked #18 on Fine-Grained Image Classification on NABirds (using extra training data)

Fine-Grained Image Classification

Fine-Grained Visual Categorization

Unsupervised Deep Metric Learning via Auxiliary Rotation Loss

no code implementations • 16 Nov 2019 • Xuefei Cao, Bor-Chun Chen, Ser-Nam Lim

In this work, we propose to generate pseudo-labels for deep metric learning directly from clustering assignment and we introduce unsupervised deep metric learning (UDML) regularized by a self-supervision (SS) task.

Fine-grained Synthesis of Unrestricted Adversarial Examples

no code implementations • 20 Nov 2019 • Omid Poursaeed, Tianxing Jiang, Yordanos Goshu, Harry Yang, Serge Belongie, Ser-Nam Lim

We propose a novel approach for generating unrestricted adversarial examples by manipulating fine-grained aspects of image generation.

Deep Multi-Modal Sets

no code implementations • 3 Mar 2020 • Austin Reiter, Menglin Jia, Pu Yang, Ser-Nam Lim

Most deep learning-based methods rely on a late fusion technique whereby multiple feature types are encoded and concatenated and then a multi layer perceptron (MLP) combines the fused embedding to make predictions.

One-Shot Domain Adaptation For Face Generation

no code implementations • CVPR 2020 • Chao Yang, Ser-Nam Lim

To generate images of the same distribution, we introduce a style-mixing technique that transfers the low-level statistics from the target to faces randomly generated with the model.

Detecting Deep-Fake Videos from Appearance and Behavior

no code implementations • 29 Apr 2020 • Shruti Agarwal, Tarek El-Gaaly, Hany Farid, Ser-Nam Lim

Synthetically-generated audios and videos -- so-called deep fakes -- continue to capture the imagination of the computer-graphics and computer-vision communities.

Curriculum Manager for Source Selection in Multi-Source Domain Adaptation

no code implementations • ECCV 2020 • Luyu Yang, Yogesh Balaji, Ser-Nam Lim, Abhinav Shrivastava

In this paper, we proposed an adversarial agent that learns a dynamic curriculum for source samples, called Curriculum Manager for Source Selection (CMSS).

Multi-Source Unsupervised Domain Adaptation

Unsupervised Domain Adaptation

Analyzing and Mitigating JPEG Compression Defects in Deep Learning

no code implementations • 17 Nov 2020 • Max Ehrlich, Larry Davis, Ser-Nam Lim, Abhinav Shrivastava

We show that there is a significant penalty on common performance metrics for high compression.

GTA: Global Temporal Attention for Video Action Understanding

no code implementations • 15 Dec 2020 • Bo He, Xitong Yang, Zuxuan Wu, Hao Chen, Ser-Nam Lim, Abhinav Shrivastava

To this end, we introduce Global Temporal Attention (GTA), which performs global temporal attention on top of spatial attention in a decoupled manner.

Deep Video Inpainting Detection

no code implementations • 26 Jan 2021 • Peng Zhou, Ning Yu, Zuxuan Wu, Larry S. Davis, Abhinav Shrivastava, Ser-Nam Lim

This paper studies video inpainting detection, which localizes an inpainted region in a video both spatially and temporally.

THAT: Two Head Adversarial Training for Improving Robustness at Scale

no code implementations • 25 Mar 2021 • Zuxuan Wu, Tom Goldstein, Larry S. Davis, Ser-Nam Lim

Many variants of adversarial training have been proposed, with most research focusing on problems with relatively few classes.

Multimodal Fusion Refiner Networks

no code implementations • 8 Apr 2021 • Sethuraman Sankaran, David Yang, Ser-Nam Lim

In this work, we develop a Refiner Fusion Network (ReFNet) that enables fusion modules to combine strong unimodal representation with strong multimodal representations.

Cross-Modal Retrieval Augmentation for Multi-Modal Classification

no code implementations • Findings (EMNLP) 2021 • Shir Gur, Natalia Neverova, Chris Stauffer, Ser-Nam Lim, Douwe Kiela, Austin Reiter

Recent advances in using retrieval components over external knowledge sources have shown impressive results for a variety of downstream tasks in natural language processing.

Joint Audio-Visual Deepfake Detection

no code implementations • ICCV 2021 • Yipin Zhou, Ser-Nam Lim

Deepfakes ("deep learning" + "fake") are synthetically-generated videos from AI algorithms.

Ranked #6 on DeepFake Detection on FakeAVCeleb

Mix-MaxEnt: Creating High Entropy Barriers To Improve Accuracy and Uncertainty Estimates of Deterministic Neural Networks

no code implementations • 29 Sep 2021 • Francesco Pinto, Harry Yang, Ser-Nam Lim, Philip Torr, Puneet K. Dokania

We propose an extremely simple approach to regularize a single deterministic neural network to obtain improved accuracy and reliable uncertainty estimates.

Testing-Time Adaptation through Online Normalization Estimation

no code implementations • 29 Sep 2021 • Xuefeng Hu, Mustafa Uzunbas, Bor-Chun Chen, Rui Wang, Ashish Shah, Ram Nevatia, Ser-Nam Lim

We present a simple and effective way to estimate the batch-norm statistics during test time, to fast adapt a source model to target test samples.

Refining Multimodal Representations using a modality-centric self-supervised module

no code implementations • 29 Sep 2021 • Sethuraman Sankaran, David Yang, Ser-Nam Lim

Tasks that rely on multi-modal information typically include a fusion module that combines information from different modalities.

Fast Adaptive Anomaly Detection

no code implementations • 29 Sep 2021 • Ze Wang, Yipin Zhou, Rui Wang, Tsung-Yu Lin, Ashish Shah, Ser-Nam Lim

Anything outside of a given normal population is by definition an anomaly.

Differential Motion Evolution for Fine-Grained Motion Deformation in Unsupervised Image Animation

no code implementations • 9 Oct 2021 • Peirong Liu, Rui Wang, Xuefei Cao, Yipin Zhou, Ashish Shah, Ser-Nam Lim

Key findings are twofold: (1) by capturing the motion transfer with an ordinary differential equation (ODE), it helps to regularize the motion field, and (2) by utilizing the source image itself, we are able to inpaint occluded/missing regions arising from large motion changes.

MixNorm: Test-Time Adaptation Through Online Normalization Estimation

no code implementations • 21 Oct 2021 • Xuefeng Hu, Gokhan Uzunbas, Sirius Chen, Rui Wang, Ashish Shah, Ram Nevatia, Ser-Nam Lim

We present a simple and effective way to estimate the batch-norm statistics during test time, to fast adapt a source model to target test samples.

Learning to Ground Multi-Agent Communication with Autoencoders

no code implementations • NeurIPS 2021 • Toru Lin, Minyoung Huh, Chris Stauffer, Ser-Nam Lim, Phillip Isola

Communication requires having a common language, a lingua franca, between agents.

A Frequency Perspective of Adversarial Robustness

no code implementations • 26 Oct 2021 • Shishira R Maiya, Max Ehrlich, Vatsal Agarwal, Ser-Nam Lim, Tom Goldstein, Abhinav Shrivastava

Our analysis shows that adversarial examples are neither in high-frequency nor in low-frequency components, but are simply dataset dependent.

AdaViT: Adaptive Vision Transformers for Efficient Image Recognition

no code implementations • CVPR 2022 • Lingchen Meng, Hengduo Li, Bor-Chun Chen, Shiyi Lan, Zuxuan Wu, Yu-Gang Jiang, Ser-Nam Lim

To this end, we introduce AdaViT, an adaptive computation framework that learns to derive usage policies on which patches, self-attention heads and transformer blocks to use throughout the backbone on a per-input basis, aiming to improve inference efficiency of vision transformers with a minimal drop of accuracy for image recognition.

Unsupervised Domain Adaptation: A Reality Check

no code implementations • 30 Nov 2021 • Kevin Musgrave, Serge Belongie, Ser-Nam Lim

Interest in unsupervised domain adaptation (UDA) has surged in recent years, resulting in a plethora of new algorithms.

ObjectFormer for Image Manipulation Detection and Localization

no code implementations • CVPR 2022 • Junke Wang, Zuxuan Wu, Jingjing Chen, Xintong Han, Abhinav Shrivastava, Ser-Nam Lim, Yu-Gang Jiang

Recent advances in image editing techniques have posed serious challenges to the trustworthiness of multimedia data, which drives the research of image tampering detection.

VRAG: Region Attention Graphs for Content-Based Video Retrieval

no code implementations • 18 May 2022 • Kennard Ng, Ser-Nam Lim, Gim Hee Lee

In this paper, we introduce Video Region Attention Graph Networks (VRAG) that improves the state-of-the-art of video-level methods.

Ranked #13 on Video Retrieval on FIVR-200K

Raising the Bar on the Evaluation of Out-of-Distribution Detection

no code implementations • 24 Sep 2022 • Jishnu Mukhoti, Tsung-Yu Lin, Bor-Chun Chen, Ashish Shah, Philip H. S. Torr, Puneet K. Dokania, Ser-Nam Lim

In this paper, we define 2 categories of OoD data using the subtly different concepts of perceptual/visual and semantic similarity to in-distribution (iD) data.

Out-of-Distribution Detection

Out of Distribution (OOD) Detection

Diversified Dynamic Routing for Vision Tasks

no code implementations • 26 Sep 2022 • Botos Csaba, Adel Bibi, Yanwei Li, Philip Torr, Ser-Nam Lim

Deep learning models for vision tasks are trained on large datasets under the assumption that there exists a universal representation that can be used to make predictions for all samples.

Totems: Physical Objects for Verifying Visual Integrity

no code implementations • 26 Sep 2022 • Jingwei Ma, Lucy Chai, Minyoung Huh, Tongzhou Wang, Ser-Nam Lim, Phillip Isola, Antonio Torralba

We introduce a new approach to image forensics: placing physical refractive objects, which we call totems, into a scene so as to protect any photograph taken of that scene.

CNeRV: Content-adaptive Neural Representation for Visual Data

no code implementations • 18 Nov 2022 • Hao Chen, Matt Gwilliam, Bo He, Ser-Nam Lim, Abhinav Shrivastava

We match the performance of NeRV, a state-of-the-art implicit neural representation, on the reconstruction task for frames seen during training while far surpassing for frames that are skipped during training (unseen images).

Unifying Tracking and Image-Video Object Detection

no code implementations • 20 Nov 2022 • Peirong Liu, Rui Wang, Pengchuan Zhang, Omid Poursaeed, Yipin Zhou, Xuefei Cao, Sreya Dutta Roy, Ashish Shah, Ser-Nam Lim

We propose TrIVD (Tracking and Image-Video Detection), the first framework that unifies image OD, video OD, and MOT within one end-to-end model.

Open Vocabulary Semantic Segmentation with Patch Aligned Contrastive Learning

no code implementations • CVPR 2023 • Jishnu Mukhoti, Tsung-Yu Lin, Omid Poursaeed, Rui Wang, Ashish Shah, Philip H. S. Torr, Ser-Nam Lim

We introduce Patch Aligned Contrastive Learning (PACL), a modified compatibility function for CLIP's contrastive loss, intending to train an alignment between the patch tokens of the vision encoder and the CLS token of the text encoder.

Online Backfilling with No Regret for Large-Scale Image Retrieval

no code implementations • 10 Jan 2023 • Seonguk Seo, Mustafa Gokhan Uzunbas, Bohyung Han, Sara Cao, Joena Zhang, Taipeng Tian, Ser-Nam Lim

Backfilling is the process of re-extracting all gallery embeddings from upgraded models in image retrieval systems.

Open-vocabulary Panoptic Segmentation with Embedding Modulation

no code implementations • ICCV 2023 • Xi Chen, Shuang Li, Ser-Nam Lim, Antonio Torralba, Hengshuang Zhao

Open-vocabulary image segmentation is attracting increasing attention due to its critical applications in the real world.

ScribbleSeg: Scribble-based Interactive Image Segmentation

no code implementations • 20 Mar 2023 • Xi Chen, Yau Shing Jonathan Cheung, Ser-Nam Lim, Hengshuang Zhao

We hope this could serve as a more powerful and general solution for interactive segmentation.

Towards Scalable Neural Representation for Diverse Videos

no code implementations • CVPR 2023 • Bo He, Xitong Yang, Hanyu Wang, Zuxuan Wu, Hao Chen, Shuaiyi Huang, Yixuan Ren, Ser-Nam Lim, Abhinav Shrivastava

Implicit neural representations (INR) have gained increasing attention in representing 3D scenes and images, and have been recently applied to encode videos (e.g., NeRV, E-NeRV).

LASER: A Neuro-Symbolic Framework for Learning Spatial-Temporal Scene Graphs with Weak Supervision

no code implementations • 15 Apr 2023 • Jiani Huang, Ziyang Li, Mayur Naik, Ser-Nam Lim

We propose LASER, a neuro-symbolic approach to learn semantic video representations that capture rich spatial and temporal properties in video data by leveraging high-level logic specifications.

Stable Estimation of Survival Causal Effects

no code implementations • 1 Oct 2023 • Khiem Pham, David A. Hirshberg, Phuong-Mai Huynh-Pham, Michele Santacatterina, Ser-Nam Lim, Ramin Zabih

Our experiments on synthetic and semi-synthetic data demonstrate that our method has competitive bias and smaller variance than debiased machine learning approaches.

From Categories to Classifier: Name-Only Continual Learning by Exploring the Web

no code implementations • 19 Nov 2023 • Ameya Prabhu, Hasan Abed Al Kader Hammoud, Ser-Nam Lim, Bernard Ghanem, Philip H. S. Torr, Adel Bibi

Continual Learning (CL) often relies on the availability of extensive annotated datasets, an assumption that is unrealistically time-consuming and costly in practice.

CLAMP: Contrastive LAnguage Model Prompt-tuning

no code implementations • 4 Dec 2023 • Piotr Teterwak, Ximeng Sun, Bryan A. Plummer, Kate Saenko, Ser-Nam Lim

Our results show that LLMs can, indeed, achieve good image classification performance when adapted this way.

Label Delay in Continual Learning

no code implementations • 1 Dec 2023 • Botos Csaba, Wenxuan Zhang, Matthias Müller, Ser-Nam Lim, Mohamed Elhoseiny, Philip Torr, Adel Bibi

We introduce a new continual learning framework with explicit modeling of the label delay between data and label streams over time steps.

On the Robustness of Large Multimodal Models Against Image Adversarial Attacks

no code implementations • 6 Dec 2023 • Xuanming Cui, Alejandro Aparcedo, Young Kyun Jang, Ser-Nam Lim

Recent advances in instruction tuning have led to the development of State-of-the-Art Large Multimodal Models (LMMs).

Video Dynamics Prior: An Internal Learning Approach for Robust Video Enhancements

no code implementations • NeurIPS 2023 • Gaurav Shrivastava, Ser-Nam Lim, Abhinav Shrivastava

In this paper, we present a novel robust framework for low-level vision tasks, including denoising, object removal, frame interpolation, and super-resolution, that does not require any external training data corpus.

Jack of All Tasks, Master of Many: Designing General-purpose Coarse-to-Fine Vision-Language Model

no code implementations • 19 Dec 2023 • Shraman Pramanick, Guangxing Han, Rui Hou, Sayan Nag, Ser-Nam Lim, Nicolas Ballas, Qifan Wang, Rama Chellappa, Amjad Almahairi

In this work, we introduce VistaLLM, a powerful visual system that addresses coarse- and fine-grained VL tasks over single and multiple input images using a unified framework.

Universal Pyramid Adversarial Training for Improved ViT Performance

no code implementations • 26 Dec 2023 • Ping-Yeh Chiang, Yipin Zhou, Omid Poursaeed, Satya Narayan Shukla, Ashish Shah, Tom Goldstein, Ser-Nam Lim

Recently, Pyramid Adversarial training (Herrmann et al., 2022) has been shown to be very effective for improving clean accuracy and distribution-shift robustness of vision transformers.

Mitigating Dialogue Hallucination for Large Multi-modal Models via Adversarial Instruction Tuning

no code implementations • 15 Mar 2024 • Dongmin Park, Zhaofang Qian, Guangxing Han, Ser-Nam Lim

To precisely measure this, we first present an evaluation benchmark by extending popular multi-modal benchmark datasets with prepended hallucinatory dialogues generated by our novel Adversarial Question Generator, which can automatically generate image-related yet adversarial dialogues by adopting adversarial attacks on LMMs.