Search Results for author: Shouling Ji

Found 80 papers, 35 papers with code

Deep Dual Consecutive Network for Human Pose Estimation

1 code implementation • CVPR 2021 • Zhenguang Liu, Haoming Chen, Runyang Feng, Shuang Wu, Shouling Ji, Bailin Yang, Xun Wang

Multi-frame human pose estimation in complicated situations is challenging.

Ranked #1 on Multi-Person Pose Estimation on PoseTrack2017 (using extra training data)

A Tale of Evil Twins: Adversarial Inputs versus Poisoned Models

1 code implementation • 5 Nov 2019 • Ren Pang, Hua Shen, Xinyang Zhang, Shouling Ji, Yevgeniy Vorobeychik, Xiapu Luo, Alex Liu, Ting Wang

Specifically, (i) we develop a new attack model that jointly optimizes adversarial inputs and poisoned models; (ii) with both analytical and empirical evidence, we reveal that there exist intriguing "mutual reinforcement" effects between the two attack vectors -- leveraging one vector significantly amplifies the effectiveness of the other; (iii) we demonstrate that such effects enable a large design spectrum for the adversary to enhance the existing attacks that exploit both vectors (e.g., backdoor attacks), such as maximizing the attack evasiveness with respect to various detection methods; (iv) finally, we discuss potential countermeasures against such optimized attacks and their technical challenges, pointing to several promising research directions.

AdvMind: Inferring Adversary Intent of Black-Box Attacks

1 code implementation • 16 Jun 2020 • Ren Pang, Xinyang Zhang, Shouling Ji, Xiapu Luo, Ting Wang

Deep neural networks (DNNs) are inherently susceptible to adversarial attacks even under black-box settings, in which the adversary only has query access to the target models.

TrojanZoo: Towards Unified, Holistic, and Practical Evaluation of Neural Backdoors

1 code implementation • 16 Dec 2020 • Ren Pang, Zheng Zhang, Xiangshan Gao, Zhaohan Xi, Shouling Ji, Peng Cheng, Xiapu Luo, Ting Wang

To bridge this gap, we design and implement TrojanZoo, the first open-source platform for evaluating neural backdoor attacks/defenses in a unified, holistic, and practical manner.

Dual Encoding for Zero-Example Video Retrieval

1 code implementation • CVPR 2019 • Jianfeng Dong, Xirong Li, Chaoxi Xu, Shouling Ji, Yuan He, Gang Yang, Xun Wang

This paper attacks the challenging problem of zero-example video retrieval.

Investigating Pose Representations and Motion Contexts Modeling for 3D Motion Prediction

1 code implementation • 30 Dec 2021 • Zhenguang Liu, Shuang Wu, Shuyuan Jin, Shouling Ji, Qi Liu, Shijian Lu, Li Cheng

One aspect that has been overlooked so far is that how we represent the skeletal pose has a critical impact on the prediction results.

Label Inference Attacks Against Vertical Federated Learning

2 code implementations • USENIX Security 2022 • Chong Fu, Xuhong Zhang, Shouling Ji, Jinyin Chen, Jingzheng Wu, Shanqing Guo, Jun Zhou, Alex X. Liu, Ting Wang

However, we discover that the bottom model structure and the gradient update mechanism of VFL can be exploited by a malicious participant to gain the power to infer the privately owned labels.

Smart Contract Vulnerability Detection: From Pure Neural Network to Interpretable Graph Feature and Expert Pattern Fusion

1 code implementation • 17 Jun 2021 • Zhenguang Liu, Peng Qian, Xiang Wang, Lei Zhu, Qinming He, Shouling Ji

In this paper, we explore combining deep learning with expert patterns in an explainable fashion.

Fine-Grained Fashion Similarity Learning by Attribute-Specific Embedding Network

1 code implementation • 7 Feb 2020 • Zhe Ma, Jianfeng Dong, Yao Zhang, Zhongzi Long, Yuan He, Hui Xue, Shouling Ji

This paper strives to learn fine-grained fashion similarity.

Adversarial Attacks against Windows PE Malware Detection: A Survey of the State-of-the-Art

1 code implementation • 23 Dec 2021 • Xiang Ling, Lingfei Wu, Jiangyu Zhang, Zhenqing Qu, Wei Deng, Xiang Chen, Yaguan Qian, Chunming Wu, Shouling Ji, Tianyue Luo, Jingzheng Wu, Yanjun Wu

Then, we conduct a comprehensive and systematic review to categorize the state-of-the-art adversarial attacks against PE malware detection, as well as corresponding defenses to increase the robustness of Windows PE malware detection.

Graph Backdoor

2 code implementations • 21 Jun 2020 • Zhaohan Xi, Ren Pang, Shouling Ji, Ting Wang

One intriguing property of deep neural networks (DNNs) is their inherent vulnerability to backdoor attacks -- a trojan model responds to trigger-embedded inputs in a highly predictable manner while functioning normally otherwise.

UNIFUZZ: A Holistic and Pragmatic Metrics-Driven Platform for Evaluating Fuzzers

1 code implementation • 5 Oct 2020 • Yuwei Li, Shouling Ji, Yuan Chen, Sizhuang Liang, Wei-Han Lee, Yueyao Chen, Chenyang Lyu, Chunming Wu, Raheem Beyah, Peng Cheng, Kangjie Lu, Ting Wang

We hope that our findings can shed light on reliable fuzzing evaluation, so that we can discover promising fuzzing primitives to effectively facilitate fuzzer designs in the future.

Cryptography and Security

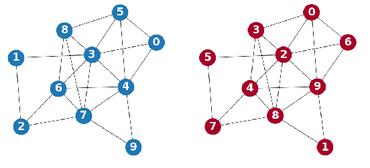

Multilevel Graph Matching Networks for Deep Graph Similarity Learning

1 code implementation • 8 Jul 2020 • Xiang Ling, Lingfei Wu, Saizhuo Wang, Tengfei Ma, Fangli Xu, Alex X. Liu, Chunming Wu, Shouling Ji

In particular, the proposed MGMN consists of a node-graph matching network for effectively learning cross-level interactions between each node of one graph and the other whole graph, and a siamese graph neural network to learn global-level interactions between two input graphs.

Differentially Private Releasing via Deep Generative Model (Technical Report)

2 code implementations • 5 Jan 2018 • Xinyang Zhang, Shouling Ji, Ting Wang

Privacy-preserving releasing of complex data (e.g., image, text, audio) represents a long-standing challenge for the data mining research community.

Fine-Grained Fashion Similarity Prediction by Attribute-Specific Embedding Learning

1 code implementation • 6 Apr 2021 • Jianfeng Dong, Zhe Ma, Xiaofeng Mao, Xun Yang, Yuan He, Richang Hong, Shouling Ji

In this similarity paradigm, one should pay more attention to the similarity in terms of a specific design/attribute between fashion items.

TextBugger: Generating Adversarial Text Against Real-world Applications

1 code implementation • 13 Dec 2018 • Jinfeng Li, Shouling Ji, Tianyu Du, Bo Li, Ting Wang

Deep Learning-based Text Understanding (DLTU) is the backbone technique behind various applications, including question answering, machine translation, and text classification.

Trojaning Language Models for Fun and Profit

1 code implementation • 1 Aug 2020 • Xinyang Zhang, Zheng Zhang, Shouling Ji, Ting Wang

Recent years have witnessed the emergence of a new paradigm of building natural language processing (NLP) systems: general-purpose, pre-trained language models (LMs) are composed with simple downstream models and fine-tuned for a variety of NLP tasks.

EfficientTDNN: Efficient Architecture Search for Speaker Recognition

1 code implementation • 25 Mar 2021 • Rui Wang, Zhihua Wei, Haoran Duan, Shouling Ji, Yang Long, Zhen Hong

Compared with hand-designed approaches, neural architecture search (NAS) appears as a practical technique in automating the manual architecture design process and has attracted increasing interest in spoken language processing tasks such as speaker recognition.

An Embarrassingly Simple Backdoor Attack on Self-supervised Learning

3 code implementations • ICCV 2023 • Changjiang Li, Ren Pang, Zhaohan Xi, Tianyu Du, Shouling Ji, Yuan Yao, Ting Wang

As a new paradigm in machine learning, self-supervised learning (SSL) is capable of learning high-quality representations of complex data without relying on labels.

Unsupervised Reference-Free Summary Quality Evaluation via Contrastive Learning

1 code implementation • EMNLP 2020 • Hanlu Wu, Tengfei Ma, Lingfei Wu, Tariro Manyumwa, Shouling Ji

Experiments on Newsroom and CNN/Daily Mail demonstrate that our new evaluation method outperforms other metrics even without reference summaries.

GIFT: Graph-guIded Feature Transfer for Cold-Start Video Click-Through Rate Prediction

1 code implementation • 21 Feb 2022 • Sihao Hu, Yi Cao, Yu Gong, Zhao Li, Yazheng Yang, Qingwen Liu, Shouling Ji

Specifically, we establish a heterogeneous graph that contains physical and semantic linkages to guide the feature transfer process from warmed-up videos to cold-start videos.

FreeEagle: Detecting Complex Neural Trojans in Data-Free Cases

1 code implementation • 28 Feb 2023 • Chong Fu, Xuhong Zhang, Shouling Ji, Ting Wang, Peng Lin, Yanghe Feng, Jianwei Yin

Thus, in this paper, we propose FreeEagle, the first data-free backdoor detection method that can effectively detect complex backdoor attacks on deep neural networks without relying on access to any clean samples or trigger-embedded samples.

Efficient Query-Based Attack against ML-Based Android Malware Detection under Zero Knowledge Setting

1 code implementation • 5 Sep 2023 • Ping He, Yifan Xia, Xuhong Zhang, Shouling Ji

The widespread adoption of the Android operating system has made malicious Android applications an appealing target for attackers.

On the Security Risks of AutoML

1 code implementation • 12 Oct 2021 • Ren Pang, Zhaohan Xi, Shouling Ji, Xiapu Luo, Ting Wang

Neural Architecture Search (NAS) represents an emerging machine learning (ML) paradigm that automatically searches for models tailored to given tasks, which greatly simplifies the development of ML systems and propels the trend of ML democratization.

NeuronFair: Interpretable White-Box Fairness Testing through Biased Neuron Identification

1 code implementation • 25 Dec 2021 • Haibin Zheng, Zhiqing Chen, Tianyu Du, Xuhong Zhang, Yao Cheng, Shouling Ji, Jingyi Wang, Yue Yu, Jinyin Chen

To overcome the challenges, we propose NeuronFair, a new DNN fairness testing framework that differs from previous work in several key aspects: (1) interpretable - it quantitatively interprets DNNs' fairness violations for the biased decision; (2) effective - it uses the interpretation results to guide the generation of more diverse instances in less time; (3) generic - it can handle both structured and unstructured data.

Hierarchical Similarity Learning for Language-based Product Image Retrieval

1 code implementation • 18 Feb 2021 • Zhe Ma, Fenghao Liu, Jianfeng Dong, Xiaoye Qu, Yuan He, Shouling Ji

In this paper, we focus on the cross-modal similarity measurement, and propose a novel Hierarchical Similarity Learning (HSL) network.

Backdoor Pre-trained Models Can Transfer to All

1 code implementation • 30 Oct 2021 • Lujia Shen, Shouling Ji, Xuhong Zhang, Jinfeng Li, Jing Chen, Jie Shi, Chengfang Fang, Jianwei Yin, Ting Wang

However, a backdoored pre-trained model can pose a severe threat to downstream applications.

ORL-AUDITOR: Dataset Auditing in Offline Deep Reinforcement Learning

1 code implementation • 6 Sep 2023 • Linkang Du, Min Chen, Mingyang Sun, Shouling Ji, Peng Cheng, Jiming Chen, Zhikun Zhang

In safety-critical domains such as autonomous vehicles, offline deep reinforcement learning (offline DRL) is frequently used to train models on pre-collected datasets, rather than by interacting with the real-world environment as in online DRL.

CP-BCS: Binary Code Summarization Guided by Control Flow Graph and Pseudo Code

1 code implementation • 24 Oct 2023 • Tong Ye, Lingfei Wu, Tengfei Ma, Xuhong Zhang, Yangkai Du, Peiyu Liu, Shouling Ji, Wenhai Wang

Automatically generating function summaries for binaries is an extremely valuable but challenging task, since it involves translating the execution behavior and semantics of the low-level language (assembly code) into human-readable natural language.

Constructing Contrastive samples via Summarization for Text Classification with limited annotations

1 code implementation • Findings (EMNLP) 2021 • Yangkai Du, Tengfei Ma, Lingfei Wu, Fangli Xu, Xuhong Zhang, Bo Long, Shouling Ji

Unlike in vision tasks, data augmentation methods for contrastive learning have not been sufficiently investigated for language tasks.

Tram: A Token-level Retrieval-augmented Mechanism for Source Code Summarization

1 code implementation • 18 May 2023 • Tong Ye, Lingfei Wu, Tengfei Ma, Xuhong Zhang, Yangkai Du, Peiyu Liu, Shouling Ji, Wenhai Wang

In this paper, we propose a fine-grained Token-level retrieval-augmented mechanism (Tram) on the decoder side rather than the encoder side to enhance the performance of neural models and produce more low-frequency tokens in generating summaries.

On the Security Risks of Knowledge Graph Reasoning

1 code implementation • 3 May 2023 • Zhaohan Xi, Tianyu Du, Changjiang Li, Ren Pang, Shouling Ji, Xiapu Luo, Xusheng Xiao, Fenglong Ma, Ting Wang

Knowledge graph reasoning (KGR) -- answering complex logical queries over large knowledge graphs -- represents an important artificial intelligence task, entailing a range of applications (e.g., cyber threat hunting).

Let All be Whitened: Multi-teacher Distillation for Efficient Visual Retrieval

1 code implementation • 15 Dec 2023 • Zhe Ma, Jianfeng Dong, Shouling Ji, Zhenguang Liu, Xuhong Zhang, Zonghui Wang, Sifeng He, Feng Qian, Xiaobo Zhang, Lei Yang

Instead of crafting a new method pursuing further improvement on accuracy, in this paper we propose a multi-teacher distillation framework Whiten-MTD, which is able to transfer knowledge from off-the-shelf pre-trained retrieval models to a lightweight student model for efficient visual retrieval.

Interpretable Deep Learning under Fire

no code implementations • 3 Dec 2018 • Xinyang Zhang, Ningfei Wang, Hua Shen, Shouling Ji, Xiapu Luo, Ting Wang

The improved interpretability is believed to offer a sense of security by involving humans in the decision-making process.

Provable Defenses against Spatially Transformed Adversarial Inputs: Impossibility and Possibility Results

no code implementations • ICLR 2019 • Xinyang Zhang, Yifan Huang, Chanh Nguyen, Shouling Ji, Ting Wang

On the possibility side, we show that it is still feasible to construct adversarial training methods to significantly improve the resilience of networks against adversarial inputs over empirical datasets.

Adversarial Examples Versus Cloud-based Detectors: A Black-box Empirical Study

no code implementations • 4 Jan 2019 • Xurong Li, Shouling Ji, Meng Han, Juntao Ji, Zhenyu Ren, Yushan Liu, Chunming Wu

Through comprehensive evaluations on five major cloud platforms (AWS, Azure, Google Cloud, Baidu Cloud, and Alibaba Cloud), we demonstrate that our image-processing-based attacks reach a success rate of approximately 100%, and our semantic-segmentation-based attacks exceed 90% across different detection services, such as violence, politician, and pornography detection.

A Truthful FPTAS Mechanism for Emergency Demand Response in Colocation Data Centers

1 code implementation • 10 Jan 2019 • Jian-hai Chen, Deshi Ye, Shouling Ji, Qinming He, Yang Xiang, Zhenguang Liu

Next, we prove that our mechanism is an FPTAS, i.e., it can be approximated within $1 + \epsilon$ for any given $\epsilon > 0$, while its running time is polynomial in $n$ and $1/\epsilon$, where $n$ is the number of tenants in the data center.

Computer Science and Game Theory

Attend To Count: Crowd Counting with Adaptive Capacity Multi-scale CNNs

no code implementations • 7 Aug 2019 • Zhikang Zou, Yu Cheng, Xiaoye Qu, Shouling Ji, Xiaoxiao Guo, Pan Zhou

ACM-CNN consists of three types of modules: a coarse network, a fine network, and a smooth network.

Model-Reuse Attacks on Deep Learning Systems

no code implementations • 2 Dec 2018 • Yujie Ji, Xinyang Zhang, Shouling Ji, Xiapu Luo, Ting Wang

By empirically studying four deep learning systems (including both individual and ensemble systems) used in skin cancer screening, speech recognition, face verification, and autonomous steering, we show that such attacks are (i) effective - the host systems misbehave on the targeted inputs as desired by the adversary with high probability, (ii) evasive - the malicious models function indistinguishably from their benign counterparts on non-targeted inputs, (iii) elastic - the malicious models remain effective regardless of various system design choices and tuning strategies, and (iv) easy - the adversary needs little prior knowledge about the data used for system tuning or inference.

Cryptography and Security

Efficient Global String Kernel with Random Features: Beyond Counting Substructures

no code implementations • 25 Nov 2019 • Lingfei Wu, Ian En-Hsu Yen, Siyu Huo, Liang Zhao, Kun Xu, Liang Ma, Shouling Ji, Charu Aggarwal

In this paper, we present a new class of global string kernels that aims to (i) discover global properties hidden in the strings through global alignments, (ii) maintain positive-definiteness of the kernel, without introducing a diagonal dominant kernel matrix, and (iii) have a training cost linear with respect to not only the length of the string but also the number of training string samples.

V-Fuzz: Vulnerability-Oriented Evolutionary Fuzzing

no code implementations • 4 Jan 2019 • Yuwei Li, Shouling Ji, Chenyang Lv, Yu-An Chen, Jian-hai Chen, Qinchen Gu, Chunming Wu

Given a binary program, the vulnerability prediction model in V-Fuzz gives a prior estimate of which parts of the software are more likely to be vulnerable.

Cryptography and Security

SmartSeed: Smart Seed Generation for Efficient Fuzzing

no code implementations • 7 Jul 2018 • Chenyang Lyu, Shouling Ji, Yuwei Li, Junfeng Zhou, Jian-hai Chen, Jing Chen

In total, across the 12 evaluated applications, our system discovers more than twice as many unique crashes and 5,040 more unique paths than the best existing seed selection strategy.

Cryptography and Security

SirenAttack: Generating Adversarial Audio for End-to-End Acoustic Systems

no code implementations • 23 Jan 2019 • Tianyu Du, Shouling Ji, Jinfeng Li, Qinchen Gu, Ting Wang, Raheem Beyah

Despite their immense popularity, deep learning-based acoustic systems are inherently vulnerable to adversarial attacks, wherein maliciously crafted audio inputs cause target systems to misbehave.

Cryptography and Security

Multi-level Graph Matching Networks for Deep and Robust Graph Similarity Learning

no code implementations • 1 Jan 2021 • Xiang Ling, Lingfei Wu, Saizhuo Wang, Tengfei Ma, Fangli Xu, Alex X. Liu, Chunming Wu, Shouling Ji

The proposed MGMN model consists of a node-graph matching network for effectively learning cross-level interactions between nodes of a graph and the other whole graph, and a siamese graph neural network to learn global-level interactions between two graphs.

Exploiting Heterogeneous Graph Neural Networks with Latent Worker/Task Correlation Information for Label Aggregation in Crowdsourcing

no code implementations • 25 Oct 2020 • Hanlu Wu, Tengfei Ma, Lingfei Wu, Shouling Ji

In addition, we exploit the unknown latent interaction between nodes of the same type (workers or tasks) by adding a homogeneous attention layer to the graph neural networks.

Deep Graph Matching and Searching for Semantic Code Retrieval

no code implementations • 24 Oct 2020 • Xiang Ling, Lingfei Wu, Saizhuo Wang, Gaoning Pan, Tengfei Ma, Fangli Xu, Alex X. Liu, Chunming Wu, Shouling Ji

To this end, we first represent both natural language query texts and programming language code snippets with the unified graph-structured data, and then use the proposed graph matching and searching model to retrieve the best matching code snippet.

Visually Imperceptible Adversarial Patch Attacks on Digital Images

no code implementations • 2 Dec 2020 • Yaguan Qian, Jiamin Wang, Bin Wang, Shaoning Zeng, Zhaoquan Gu, Shouling Ji, Wassim Swaileh

With this soft mask, we develop a new loss function with inverse temperature to search for optimal perturbations in CFR.

i-Algebra: Towards Interactive Interpretability of Deep Neural Networks

no code implementations • 22 Jan 2021 • Xinyang Zhang, Ren Pang, Shouling Ji, Fenglong Ma, Ting Wang

Providing explanations for deep neural networks (DNNs) is essential for their use in domains wherein the interpretability of decisions is a critical prerequisite.

Towards Speeding up Adversarial Training in Latent Spaces

no code implementations • 1 Feb 2021 • Yaguan Qian, Qiqi Shao, Tengteng Yao, Bin Wang, Shouling Ji, Shaoning Zeng, Zhaoquan Gu, Wassim Swaileh

Adversarial training is widely considered one of the most effective ways to defend against adversarial examples.

Enhancing Model Robustness By Incorporating Adversarial Knowledge Into Semantic Representation

no code implementations • 23 Feb 2021 • Jinfeng Li, Tianyu Du, Xiangyu Liu, Rong Zhang, Hui Xue, Shouling Ji

Extensive experiments on two real-world tasks show that AdvGraph exhibits better performance compared with previous work: (i) effective - it significantly strengthens the model robustness even under the adaptive attacks setting without negative impact on model performance over legitimate input; (ii) generic - its key component, i.e., the representation of connotative adversarial knowledge, is task-agnostic and can be reused in any Chinese-based NLP models without retraining; and (iii) efficient - it is a light-weight defense with sub-linear computational complexity, which can guarantee the efficiency required in practical scenarios.

Aggregated Multi-GANs for Controlled 3D Human Motion Prediction

no code implementations • 17 Mar 2021 • Zhenguang Liu, Kedi Lyu, Shuang Wu, Haipeng Chen, Yanbin Hao, Shouling Ji

Our method is compelling in that it enables manipulable motion prediction across activity types and allows customization of the human movement in a variety of fine-grained ways.

GGT: Graph-Guided Testing for Adversarial Sample Detection of Deep Neural Network

no code implementations • 9 Jul 2021 • Zuohui Chen, Renxuan Wang, Jingyang Xiang, Yue Yu, Xin Xia, Shouling Ji, Qi Xuan, Xiaoniu Yang

Deep Neural Networks (DNN) are known to be vulnerable to adversarial samples, the detection of which is crucial for the wide application of these DNN models.

Hierarchical Graph Matching Networks for Deep Graph Similarity Learning

no code implementations • 25 Sep 2019 • Xiang Ling, Lingfei Wu, Saizhuo Wang, Tengfei Ma, Fangli Xu, Chunming Wu, Shouling Ji

The proposed HGMN model consists of a multi-perspective node-graph matching network for effectively learning cross-level interactions between parts of a graph and a whole graph, and a siamese graph neural network for learning global-level interactions between two graphs.

NIP: Neuron-level Inverse Perturbation Against Adversarial Attacks

no code implementations • 24 Dec 2021 • Ruoxi Chen, Haibo Jin, Jinyin Chen, Haibin Zheng, Yue Yu, Shouling Ji

From the perspective of the image feature space, some existing defenses cannot achieve satisfactory results due to feature shift.

Seeing is Living? Rethinking the Security of Facial Liveness Verification in the Deepfake Era

no code implementations • 22 Feb 2022 • Changjiang Li, Li Wang, Shouling Ji, Xuhong Zhang, Zhaohan Xi, Shanqing Guo, Ting Wang

Facial Liveness Verification (FLV) is widely used for identity authentication in many security-sensitive domains and offered as Platform-as-a-Service (PaaS) by leading cloud vendors.

Model Inversion Attack against Transfer Learning: Inverting a Model without Accessing It

no code implementations • 13 Mar 2022 • Dayong Ye, Huiqiang Chen, Shuai Zhou, Tianqing Zhu, Wanlei Zhou, Shouling Ji

However, this does not necessarily mean that transfer learning models are impervious to model inversion attacks.

Transfer Attacks Revisited: A Large-Scale Empirical Study in Real Computer Vision Settings

no code implementations • 7 Apr 2022 • Yuhao Mao, Chong Fu, Saizhuo Wang, Shouling Ji, Xuhong Zhang, Zhenguang Liu, Jun Zhou, Alex X. Liu, Raheem Beyah, Ting Wang

To bridge this critical gap, we conduct the first large-scale systematic empirical study of transfer attacks against major cloud-based MLaaS platforms, taking the components of a real transfer attack into account.

Improving Long Tailed Document-Level Relation Extraction via Easy Relation Augmentation and Contrastive Learning

no code implementations • 21 May 2022 • Yangkai Du, Tengfei Ma, Lingfei Wu, Yiming Wu, Xuhong Zhang, Bo Long, Shouling Ji

Driven by real-world information extraction scenarios, relation extraction research is advancing toward document-level relation extraction (DocRE).

Ranked #25 on Relation Extraction on DocRED

VeriFi: Towards Verifiable Federated Unlearning

no code implementations • 25 May 2022 • Xiangshan Gao, Xingjun Ma, Jingyi Wang, Youcheng Sun, Bo Li, Shouling Ji, Peng Cheng, Jiming Chen

One desirable property for FL is the implementation of the right to be forgotten (RTBF), i.e., a leaving participant has the right to request the deletion of its private data from the global model.

"Is your explanation stable?": A Robustness Evaluation Framework for Feature Attribution

no code implementations • 5 Sep 2022 • Yuyou Gan, Yuhao Mao, Xuhong Zhang, Shouling Ji, Yuwen Pu, Meng Han, Jianwei Yin, Ting Wang

Experiment results show that the MeTFA-smoothed explanation can significantly increase the robust faithfulness.

Reasoning over Multi-view Knowledge Graphs

no code implementations • 27 Sep 2022 • Zhaohan Xi, Ren Pang, Changjiang Li, Tianyu Du, Shouling Ji, Fenglong Ma, Ting Wang

(ii) It supports complex logical queries with varying relation and view constraints (e.g., with complex topology and/or from multiple views); (iii) It scales up to KGs of large sizes (e.g., millions of facts) and fine-granular views (e.g., dozens of views); (iv) It generalizes to query structures and KG views that are unobserved during training.

Neural Architectural Backdoors

no code implementations • 21 Oct 2022 • Ren Pang, Changjiang Li, Zhaohan Xi, Shouling Ji, Ting Wang

This paper asks the intriguing question: is it possible to exploit neural architecture search (NAS) as a new attack vector to launch previously improbable attacks?

Hijack Vertical Federated Learning Models As One Party

no code implementations • 1 Dec 2022 • Pengyu Qiu, Xuhong Zhang, Shouling Ji, Changjiang Li, Yuwen Pu, Xing Yang, Ting Wang

Vertical federated learning (VFL) is an emerging paradigm that enables collaborators to build machine learning models together in a distributed fashion.

HashVFL: Defending Against Data Reconstruction Attacks in Vertical Federated Learning

no code implementations • 1 Dec 2022 • Pengyu Qiu, Xuhong Zhang, Shouling Ji, Chong Fu, Xing Yang, Ting Wang

Our work shows that hashing is a promising solution to counter data reconstruction attacks.

TextDefense: Adversarial Text Detection based on Word Importance Entropy

no code implementations • 12 Feb 2023 • Lujia Shen, Xuhong Zhang, Shouling Ji, Yuwen Pu, Chunpeng Ge, Xing Yang, Yanghe Feng

TextDefense differs from previous approaches in that it uses the target model itself for detection and is therefore attack-type agnostic.

Edge Deep Learning Model Protection via Neuron Authorization

1 code implementation • 22 Mar 2023 • Jinyin Chen, Haibin Zheng, Tao Liu, Rongchang Li, Yao Cheng, Xuhong Zhang, Shouling Ji

With the development of deep learning processors and accelerators, deep learning models have been widely deployed on edge devices as part of the Internet of Things.

Watch Out for the Confusing Faces: Detecting Face Swapping with the Probability Distribution of Face Identification Models

no code implementations • 23 Mar 2023 • Yuxuan Duan, Xuhong Zhang, Chuer Yu, Zonghui Wang, Shouling Ji, Wenzhi Chen

We reflect this nature with the confusion of a face identification model and measure the confusion with the maximum value of the output probability distribution.
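The confusion measure described above (the maximum value of the identification model's output probability distribution) can be sketched in a few lines. This is a minimal illustration only, assuming the face identification model exposes raw logits over a set of known identities; the function names and the 0.5 decision threshold are hypothetical and are not taken from the paper.

```python
import math

def softmax(logits):
    """Convert raw identity logits into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confusion_score(logits):
    """Maximum output probability; a lower value means the model is more confused."""
    return max(softmax(logits))

def looks_swapped(logits, threshold=0.5):
    """Hypothetical decision rule: flag a face as swapped when the
    identification model is not confident about any single identity."""
    return confusion_score(logits) < threshold
```

For example, nearly uniform logits such as `[1.0, 1.0, 1.0]` give a maximum probability of 1/3 and would be flagged, while a confident prediction such as `[10.0, 0.0, 0.0]` would not.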

Diff-ID: An Explainable Identity Difference Quantification Framework for DeepFake Detection

no code implementations • 30 Mar 2023 • Chuer Yu, Xuhong Zhang, Yuxuan Duan, Senbo Yan, Zonghui Wang, Yang Xiang, Shouling Ji, Wenzhi Chen

We then visualize the identity loss between the test and the reference image from the image differences of the aligned pairs, and design a custom metric to quantify the identity loss.

RNN-Guard: Certified Robustness Against Multi-frame Attacks for Recurrent Neural Networks

no code implementations • 17 Apr 2023 • Yunruo Zhang, Tianyu Du, Shouling Ji, Peng Tang, Shanqing Guo

In this paper, we propose the first certified defense against multi-frame attacks for RNNs called RNN-Guard.

G$^2$uardFL: Safeguarding Federated Learning Against Backdoor Attacks through Attributed Client Graph Clustering

no code implementations • 8 Jun 2023 • Hao Yu, Chuan Ma, Meng Liu, Tianyu Du, Ming Ding, Tao Xiang, Shouling Ji, Xinwang Liu

Through empirical evaluation, comparing G$^2$uardFL with cutting-edge defenses, such as FLAME (USENIX Security 2022) [28] and DeepSight (NDSS 2022) [36], against various backdoor attacks including 3DFed (SP 2023) [20], our results demonstrate its significant effectiveness in mitigating backdoor attacks while having a negligible impact on the aggregated model's performance on benign samples (i.e., the primary task performance).

F$^2$AT: Feature-Focusing Adversarial Training via Disentanglement of Natural and Perturbed Patterns

no code implementations • 23 Oct 2023 • Yaguan Qian, Chenyu Zhao, Zhaoquan Gu, Bin Wang, Shouling Ji, Wei Wang, Boyang Zhou, Pan Zhou

We propose Feature-Focusing Adversarial Training (F$^2$AT), which differs from previous work in that it forces the model to focus on the core features from natural patterns and reduces the impact of spurious features from perturbed patterns.

Facial Data Minimization: Shallow Model as Your Privacy Filter

no code implementations • 24 Oct 2023 • Yuwen Pu, Jiahao Chen, JiaYu Pan, Hao Li, Diqun Yan, Xuhong Zhang, Shouling Ji

Face recognition services are used in many fields and bring considerable convenience to people.

How ChatGPT is Solving Vulnerability Management Problem

no code implementations • 11 Nov 2023 • Peiyu Liu, Junming Liu, Lirong Fu, Kangjie Lu, Yifan Xia, Xuhong Zhang, Wenzhi Chen, Haiqin Weng, Shouling Ji, Wenhai Wang

Prior works show that ChatGPT has the capabilities of processing foundational code analysis tasks, such as abstract syntax tree generation, which indicates the potential of using ChatGPT to comprehend code syntax and static behaviors.

AdaCCD: Adaptive Semantic Contrasts Discovery Based Cross Lingual Adaptation for Code Clone Detection

no code implementations • 13 Nov 2023 • Yangkai Du, Tengfei Ma, Lingfei Wu, Xuhong Zhang, Shouling Ji

Code Clone Detection, which aims to retrieve functionally similar programs from large code bases, has been attracting increasing attention.

Improving the Robustness of Transformer-based Large Language Models with Dynamic Attention

no code implementations • 29 Nov 2023 • Lujia Shen, Yuwen Pu, Shouling Ji, Changjiang Li, Xuhong Zhang, Chunpeng Ge, Ting Wang

Extensive experiments demonstrate that dynamic attention significantly mitigates the impact of adversarial attacks, achieving up to 33% better performance than previous methods against widely-used adversarial attacks.

On the Difficulty of Defending Contrastive Learning against Backdoor Attacks

no code implementations • 14 Dec 2023 • Changjiang Li, Ren Pang, Bochuan Cao, Zhaohan Xi, Jinghui Chen, Shouling Ji, Ting Wang

Recent studies have shown that contrastive learning, like supervised learning, is highly vulnerable to backdoor attacks wherein malicious functions are injected into target models, only to be activated by specific triggers.

MEAOD: Model Extraction Attack against Object Detectors

no code implementations • 22 Dec 2023 • Zeyu Li, Chenghui Shi, Yuwen Pu, Xuhong Zhang, Yu Li, Jinbao Li, Shouling Ji

The widespread use of deep learning technology across various industries has made deep neural network models highly valuable and, as a result, attractive targets for potential attackers.

The Risk of Federated Learning to Skew Fine-Tuning Features and Underperform Out-of-Distribution Robustness

no code implementations • 25 Jan 2024 • Mengyao Du, Miao Zhang, Yuwen Pu, Kai Xu, Shouling Ji, Quanjun Yin

To tackle the scarcity and privacy issues associated with domain-specific datasets, the integration of federated learning in conjunction with fine-tuning has emerged as a practical solution.

SUB-PLAY: Adversarial Policies against Partially Observed Multi-Agent Reinforcement Learning Systems

no code implementations • 6 Feb 2024 • Oubo Ma, Yuwen Pu, Linkang Du, Yang Dai, Ruo Wang, Xiaolei Liu, Yingcai Wu, Shouling Ji

Furthermore, we evaluate three potential defenses aimed at exploring ways to mitigate security threats posed by adversarial policies, providing constructive recommendations for deploying MARL in competitive environments.

PRSA: Prompt Reverse Stealing Attacks against Large Language Models

no code implementations • 29 Feb 2024 • Yong Yang, Xuhong Zhang, Yi Jiang, Xi Chen, Haoyu Wang, Shouling Ji, Zonghui Wang

In the mutation phase, we propose a prompt attention algorithm based on differential feedback to capture these critical features for effectively inferring the target prompts.