Search Results for author: Subhashini Venugopalan

Found 31 papers, 13 papers with code

Scaling Symbolic Methods using Gradients for Neural Model Explanation

2 code implementations • ICLR 2021 • Subham Sekhar Sahoo, Subhashini Venugopalan, Li Li, Rishabh Singh, Patrick Riley

In this work, we propose a technique for combining gradient-based methods with symbolic techniques to scale symbolic analyses of neural networks, and we demonstrate its application to model explanation.

Attribution in Scale and Space

1 code implementation • CVPR 2020 • Shawn Xu, Subhashini Venugopalan, Mukund Sundararajan

Third, it eliminates the need for a 'baseline' parameter for Integrated Gradients [31] for perception tasks.
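To make the "baseline" parameter concrete, here is a minimal sketch of standard Integrated Gradients. It is not the scale-space method of this paper. Attributions are (x − baseline) times the average gradient along the straight path from the baseline to the input, approximated here with a midpoint Riemann sum. The toy quadratic model and its analytic gradient are hypothetical stand-ins for a real network.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=50):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    grad_fn: callable returning dF/dx at a point (a hypothetical model here).
    baseline: the reference input that IG requires the user to choose.
    """
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    # Midpoints of `steps` equal sub-intervals of [0, 1].
    alphas = (np.arange(steps) + 0.5) / steps
    total = np.zeros_like(x)
    for a in alphas:
        # Gradient at a point on the straight-line path from baseline to x.
        total += grad_fn(baseline + a * (x - baseline))
    avg_grad = total / steps
    return (x - baseline) * avg_grad

# Hypothetical toy model F(x) = sum(x**2), whose gradient is 2x.
def toy_grad(x):
    return 2.0 * x

x = np.array([1.0, -2.0, 3.0])
attr = integrated_gradients(toy_grad, x, baseline=np.zeros_like(x))
print(attr)
print(attr.sum())
```

With an all-zeros baseline the attributions come out close to x**2 and sum to about F(x) − F(baseline) = 14, the completeness property IG is designed to satisfy. Passing a different `baseline` changes the attributions, which is why having to choose one matters.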

Guided Integrated Gradients: An Adaptive Path Method for Removing Noise

1 code implementation • CVPR 2021 • Andrei Kapishnikov, Subhashini Venugopalan, Besim Avci, Ben Wedin, Michael Terry, Tolga Bolukbasi

To minimize the effect of this source of noise, we propose adapting the attribution path itself -- conditioning the path not just on the image but also on the model being explained.

Scientific Discovery by Generating Counterfactuals using Image Translation

1 code implementation • 10 Jul 2020 • Arunachalam Narayanaswamy, Subhashini Venugopalan, Dale R. Webster, Lily Peng, Greg Corrado, Paisan Ruamviboonsuk, Pinal Bavishi, Rory Sayres, Abigail Huang, Siva Balasubramanian, Michael Brenner, Philip Nelson, Avinash V. Varadarajan

Model explanation techniques play a critical role in understanding the source of a model's performance and making its decisions transparent.

Is Attention All That NeRF Needs?

1 code implementation • 27 Jul 2022 • Mukund Varma T, Peihao Wang, Xuxi Chen, Tianlong Chen, Subhashini Venugopalan, Zhangyang Wang

While prior works on NeRFs optimize a scene representation by inverting a handcrafted rendering equation, GNT (Generalizable NeRF Transformer) achieves neural representation and rendering that generalizes across scenes using transformers at two stages.

Ranked #1 on Generalizable Novel View Synthesis on LLFF

Long-term Recurrent Convolutional Networks for Visual Recognition and Description

7 code implementations • CVPR 2015 • Jeff Donahue, Lisa Anne Hendricks, Marcus Rohrbach, Subhashini Venugopalan, Sergio Guadarrama, Kate Saenko, Trevor Darrell

Models based on deep convolutional networks have dominated recent image interpretation tasks; we investigate whether models which are also recurrent, or "temporally deep", are effective for tasks involving sequences, visual and otherwise.

Ranked #3 on Human Interaction Recognition on BIT

Deep Compositional Captioning: Describing Novel Object Categories without Paired Training Data

1 code implementation • CVPR 2016 • Lisa Anne Hendricks, Subhashini Venugopalan, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Trevor Darrell

Current deep caption models can only describe objects contained in paired image-sentence corpora, despite the fact that they are pre-trained with large object recognition datasets, namely ImageNet.

Sequence to Sequence -- Video to Text

4 code implementations • 3 May 2015 • Subhashini Venugopalan, Marcus Rohrbach, Jeff Donahue, Raymond Mooney, Trevor Darrell, Kate Saenko

Our LSTM model is trained on video-sentence pairs and learns to associate a sequence of video frames to a sequence of words in order to generate a description of the event in the video clip.

Translating Videos to Natural Language Using Deep Recurrent Neural Networks

1 code implementation • HLT 2015 • Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko

Solving the visual symbol grounding problem has long been a goal of artificial intelligence.

Improving LSTM-based Video Description with Linguistic Knowledge Mined from Text

3 code implementations • EMNLP 2016 • Subhashini Venugopalan, Lisa Anne Hendricks, Raymond Mooney, Kate Saenko

This paper investigates how linguistic knowledge mined from large text corpora can aid the generation of natural language descriptions of videos.

Captioning Images with Diverse Objects

1 code implementation • CVPR 2017 • Subhashini Venugopalan, Lisa Anne Hendricks, Marcus Rohrbach, Raymond Mooney, Trevor Darrell, Kate Saenko

We propose minimizing a joint objective which can learn from these diverse data sources and leverage distributional semantic embeddings, enabling the model to generalize and describe novel objects outside of image-caption datasets.

Sparse Winning Tickets are Data-Efficient Image Recognizers

1 code implementation • NIPS 2022 • Mukund Varma T, Xuxi Chen, Zhenyu Zhang, Tianlong Chen, Subhashini Venugopalan, Zhangyang Wang

Improving the performance of deep networks in data-limited regimes has warranted much attention.

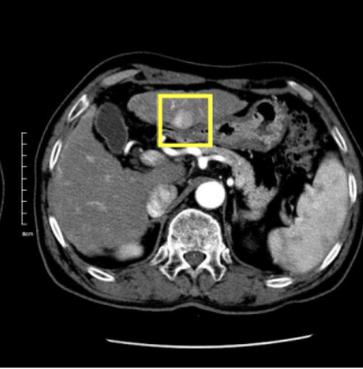

Detecting Cancer Metastases on Gigapixel Pathology Images

6 code implementations • 3 Mar 2017 • Yun Liu, Krishna Gadepalli, Mohammad Norouzi, George E. Dahl, Timo Kohlberger, Aleksey Boyko, Subhashini Venugopalan, Aleksei Timofeev, Philip Q. Nelson, Greg S. Corrado, Jason D. Hipp, Lily Peng, Martin C. Stumpe

At 8 false positives per image, we detect 92.4% of the tumors, relative to 82.7% by the previous best automated approach.

Ranked #2 on Medical Object Detection on Barrett’s Esophagus
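The metric quoted above (fraction of tumors detected at a fixed average number of false positives per image) can be read off a ranked list of detections. The following is a simplified sketch, not the paper's evaluation code: it assumes each detection is already matched to a tumor id (or `None` for a false positive), counts repeated hits on one tumor once, and ignores score ties. The function and data names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Each detection: (confidence score, id of the tumor it hits, or None for a false positive).
Detection = Tuple[float, Optional[str]]

def sensitivity_at_fp_rate(
    detections: Sequence[Detection],
    num_tumors: int,
    num_images: int,
    fp_per_image: float,
) -> float:
    """Fraction of tumors found before the average FP count per image exceeds the budget."""
    detected = set()
    false_positives = 0
    # Lower the score threshold one detection at a time, highest score first.
    for _, tumor_id in sorted(detections, key=lambda d: d[0], reverse=True):
        if tumor_id is None:
            false_positives += 1
            if false_positives / num_images > fp_per_image:
                break  # accepting this detection would exceed the allowed FP rate
        else:
            detected.add(tumor_id)  # repeated hits on one tumor count once
    return len(detected) / num_tumors

# Hypothetical toy data: one image, four tumors, five detections.
dets = [(0.9, "t1"), (0.8, None), (0.7, "t2"), (0.6, None), (0.5, "t3")]
print(sensitivity_at_fp_rate(dets, num_tumors=4, num_images=1, fp_per_image=1))  # 0.5
```

Raising the false-positive budget lowers the effective threshold, so sensitivity can only stay the same or increase; sweeping the budget traces out the operating curve from which a single point such as "8 false positives per image" is reported.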

Utilizing Large Scale Vision and Text Datasets for Image Segmentation from Referring Expressions

no code implementations • 30 Aug 2016 • Ronghang Hu, Marcus Rohrbach, Subhashini Venugopalan, Trevor Darrell

Image segmentation from referring expressions is a joint vision and language modeling task: the input is an image and a textual expression describing a particular region in it, and the goal is to localize and segment that region based on the expression.

A Multi-scale Multiple Instance Video Description Network

no code implementations • 21 May 2015 • Huijuan Xu, Subhashini Venugopalan, Vasili Ramanishka, Marcus Rohrbach, Kate Saenko

Most state-of-the-art methods for video description borrow existing deep convolutional neural network (CNN) architectures (AlexNet, GoogLeNet) to extract a visual representation of the input video.

Predicting optical coherence tomography-derived diabetic macular edema grades from fundus photographs using deep learning

no code implementations • 18 Oct 2018 • Avinash Varadarajan, Pinal Bavishi, Paisan Raumviboonsuk, Peranut Chotcomwongse, Subhashini Venugopalan, Arunachalam Narayanaswamy, Jorge Cuadros, Kuniyoshi Kanai, George Bresnick, Mongkol Tadarati, Sukhum Silpa-archa, Jirawut Limwattanayingyong, Variya Nganthavee, Joe Ledsam, Pearse A. Keane, Greg S. Corrado, Lily Peng, Dale R. Webster

To improve the accuracy of DME screening, we trained a deep learning model to predict center-involved DME (ci-DME) from color fundus photographs.

Sequence to Sequence - Video to Text

no code implementations • ICCV 2015 • Subhashini Venugopalan, Marcus Rohrbach, Jeffrey Donahue, Raymond Mooney, Trevor Darrell, Kate Saenko

Our LSTM model is trained on video-sentence pairs and learns to associate a sequence of video frames to a sequence of words in order to generate a description of the event in the video clip.

It's easy to fool yourself: Case studies on identifying bias and confounding in bio-medical datasets

no code implementations • 12 Dec 2019 • Subhashini Venugopalan, Arunachalam Narayanaswamy, Samuel Yang, Anton Geraschenko, Scott Lipnick, Nina Makhortova, James Hawrot, Christine Marques, Joao Pereira, Michael Brenner, Lee Rubin, Brian Wainger, Marc Berndl

Confounding variables are a well-known source of nuisance in biomedical studies.

Predicting Risk of Developing Diabetic Retinopathy using Deep Learning

no code implementations • 10 Aug 2020 • Ashish Bora, Siva Balasubramanian, Boris Babenko, Sunny Virmani, Subhashini Venugopalan, Akinori Mitani, Guilherme de Oliveira Marinho, Jorge Cuadros, Paisan Ruamviboonsuk, Greg S. Corrado, Lily Peng, Dale R. Webster, Avinash V. Varadarajan, Naama Hammel, Yun Liu, Pinal Bavishi

We created and validated two versions of a deep learning system (DLS) to predict the development of mild-or-worse ("Mild+") DR in diabetic patients undergoing DR screening.

Comparing Supervised Models And Learned Speech Representations For Classifying Intelligibility Of Disordered Speech On Selected Phrases

no code implementations • 8 Jul 2021 • Subhashini Venugopalan, Joel Shor, Manoj Plakal, Jimmy Tobin, Katrin Tomanek, Jordan R. Green, Michael P. Brenner

Automatic classification of disordered speech can provide an objective tool for identifying the presence and severity of speech impairment.

Using a Cross-Task Grid of Linear Probes to Interpret CNN Model Predictions On Retinal Images

no code implementations • 23 Jul 2021 • Katy Blumer, Subhashini Venugopalan, Michael P. Brenner, Jon Kleinberg

We find that some target tasks are easily predicted irrespective of the source task, and that some other target tasks are more accurately predicted from correlated source tasks than from embeddings trained on the same task.

TRILLsson: Distilled Universal Paralinguistic Speech Representations

no code implementations • 1 Mar 2022 • Joel Shor, Subhashini Venugopalan

Our largest distilled model is less than 15% of the size of the original model (314 MB vs. 2.2 GB), achieves over 96% of the accuracy on 6 of 7 tasks, and is trained on 6.5% of the data.

Context-Aware Abbreviation Expansion Using Large Language Models

no code implementations • NAACL 2022 • Shanqing Cai, Subhashini Venugopalan, Katrin Tomanek, Ajit Narayanan, Meredith Ringel Morris, Michael P. Brenner

Motivated by the need for accelerating text entry in augmentative and alternative communication (AAC) for people with severe motor impairments, we propose a paradigm in which phrases are abbreviated aggressively as primarily word-initial letters.
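A minimal sketch of the word-initial abbreviation scheme described above, assuming lowercase first letters and whitespace tokenization. In the paper a large language model proposes the expansions; here a hypothetical fixed candidate list stands in for the model, and `expand` simply keeps the candidates whose abbreviation matches.

```python
def abbreviate(phrase: str) -> str:
    """Keep only the lowercased first letter of each whitespace-separated word."""
    return "".join(word[0].lower() for word in phrase.split())

def expand(abbrev: str, candidates: list[str]) -> list[str]:
    """Return candidate phrases whose word-initial abbreviation matches `abbrev`.

    Stand-in for a language model: a real system would generate and rank
    candidates using conversational context rather than filter a fixed list.
    """
    return [c for c in candidates if abbreviate(c) == abbrev.lower()]

candidates = [  # hypothetical candidate phrases
    "I am feeling tired today",
    "I am fine thank you",
    "is anyone free tomorrow",
]
print(abbreviate("I am fine thank you"))  # iafty
print(expand("iafty", candidates))        # ['I am fine thank you']
```

Because many phrases share the same initials ("iaft" prefixes two of the three candidates here), the abbreviation alone is ambiguous, which is why context from the conversation is needed to pick the intended expansion.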

Assessing ASR Model Quality on Disordered Speech using BERTScore

no code implementations • 21 Sep 2022 • Jimmy Tobin, Qisheng Li, Subhashini Venugopalan, Katie Seaver, Richard Cave, Katrin Tomanek

BERTScore was found to be more closely correlated with human assessments, including assessments of error type.

Automatic Speech Recognition • Automatic Speech Recognition (ASR)

Clinical BERTScore: An Improved Measure of Automatic Speech Recognition Performance in Clinical Settings

no code implementations • 10 Mar 2023 • Joel Shor, Ruyue Agnes Bi, Subhashini Venugopalan, Steven Ibara, Roman Goldenberg, Ehud Rivlin

We demonstrate that this metric aligns more closely with clinician preferences on medical sentences than other metrics do (WER, BLEU, METEOR, etc.), sometimes by wide margins.

Automatic Speech Recognition • Automatic Speech Recognition (ASR)

Speech Intelligibility Classifiers from 550k Disordered Speech Samples

no code implementations • 13 Mar 2023 • Subhashini Venugopalan, Jimmy Tobin, Samuel J. Yang, Katie Seaver, Richard J. N. Cave, Pan-Pan Jiang, Neil Zeghidour, Rus Heywood, Jordan Green, Michael P. Brenner

We developed dysarthric speech intelligibility classifiers on 551,176 disordered speech samples contributed by a diverse set of 468 speakers, with a range of self-reported speaking disorders and rated for their overall intelligibility on a five-point scale.

Using Large Language Models to Accelerate Communication for Users with Severe Motor Impairments

no code implementations • 3 Dec 2023 • Shanqing Cai, Subhashini Venugopalan, Katie Seaver, Xiang Xiao, Katrin Tomanek, Sri Jalasutram, Meredith Ringel Morris, Shaun Kane, Ajit Narayanan, Robert L. MacDonald, Emily Kornman, Daniel Vance, Blair Casey, Steve M. Gleason, Philip Q. Nelson, Michael P. Brenner

A pilot study with 19 non-AAC participants typing on a mobile device by hand demonstrated gains in motor savings in line with the offline simulation, while introducing relatively small effects on overall typing speed.

Parameter Efficient Tuning Allows Scalable Personalization of LLMs for Text Entry: A Case Study on Abbreviation Expansion

no code implementations • 21 Dec 2023 • Katrin Tomanek, Shanqing Cai, Subhashini Venugopalan

Abbreviation expansion is a strategy used to speed up communication by limiting the amount of typing and using a language model to suggest expansions.

Quantum Many-Body Physics Calculations with Large Language Models

no code implementations • 5 Mar 2024 • Haining Pan, Nayantara Mudur, Will Taranto, Maria Tikhanovskaya, Subhashini Venugopalan, Yasaman Bahri, Michael P. Brenner, Eun-Ah Kim

We evaluate GPT-4's performance in executing the calculation for 15 research papers from the past decade, demonstrating that, with correction of intermediate steps, it correctly derives the final Hartree-Fock Hamiltonian in 13 cases and makes minor errors in 2 cases.

A Design Space for Intelligent and Interactive Writing Assistants

no code implementations • 21 Mar 2024 • Mina Lee, Katy Ilonka Gero, John Joon Young Chung, Simon Buckingham Shum, Vipul Raheja, Hua Shen, Subhashini Venugopalan, Thiemo Wambsganss, David Zhou, Emad A. Alghamdi, Tal August, Avinash Bhat, Madiha Zahrah Choksi, Senjuti Dutta, Jin L. C. Guo, Md Naimul Hoque, Yewon Kim, Simon Knight, Seyed Parsa Neshaei, Agnia Sergeyuk, Antonette Shibani, Disha Shrivastava, Lila Shroff, Jessi Stark, Sarah Sterman, Sitong Wang, Antoine Bosselut, Daniel Buschek, Joseph Chee Chang, Sherol Chen, Max Kreminski, Joonsuk Park, Roy Pea, Eugenia H. Rho, Shannon Zejiang Shen, Pao Siangliulue

In our era of rapid technological advancement, the research landscape for writing assistants has become increasingly fragmented across various research communities.