Search Results for author: Vikas Chandra

Found 60 papers, 17 papers with code

Hello Edge: Keyword Spotting on Microcontrollers

18 code implementations • 20 Nov 2017 • Yundong Zhang, Naveen Suda, Liangzhen Lai, Vikas Chandra

We train various neural network architectures for keyword spotting published in the literature to compare their accuracy and memory/compute requirements.

Ranked #13 on Keyword Spotting on Google Speech Commands

MiniGPT-v2: large language model as a unified interface for vision-language multi-task learning

1 code implementation • 14 Oct 2023 • Jun Chen, Deyao Zhu, Xiaoqian Shen, Xiang Li, Zechun Liu, Pengchuan Zhang, Raghuraman Krishnamoorthi, Vikas Chandra, Yunyang Xiong, Mohamed Elhoseiny

Motivated by this, we aim to build a unified interface for completing many vision-language tasks including image description, visual question answering, and visual grounding, among others.

Ranked #10 on Visual Question Answering on BenchLMM

EfficientSAM: Leveraged Masked Image Pretraining for Efficient Segment Anything

1 code implementation • 1 Dec 2023 • Yunyang Xiong, Bala Varadarajan, Lemeng Wu, Xiaoyu Xiang, Fanyi Xiao, Chenchen Zhu, Xiaoliang Dai, Dilin Wang, Fei Sun, Forrest Iandola, Raghuraman Krishnamoorthi, Vikas Chandra

On segment anything tasks such as zero-shot instance segmentation, our EfficientSAMs with SAMI-pretrained lightweight image encoders perform favorably with a significant gain (e.g., ~4 AP on COCO/LVIS) over other fast SAM models.

Ranked #3 on Zero-Shot Instance Segmentation on LVIS v1.0 val

CMSIS-NN: Efficient Neural Network Kernels for Arm Cortex-M CPUs

1 code implementation • 19 Jan 2018 • Liangzhen Lai, Naveen Suda, Vikas Chandra

Deep Neural Networks are becoming increasingly popular in always-on IoT edge devices performing data analytics right at the source, reducing latency as well as energy consumption for data communication.

Multi-Scale High-Resolution Vision Transformer for Semantic Segmentation

1 code implementation • CVPR 2022 • Jiaqi Gu, Hyoukjun Kwon, Dilin Wang, Wei Ye, Meng Li, Yu-Hsin Chen, Liangzhen Lai, Vikas Chandra, David Z. Pan

Therefore, we propose HRViT, which enhances ViTs to learn semantically-rich and spatially-precise multi-scale representations by integrating high-resolution multi-branch architectures with ViTs.

Ranked #24 on Semantic Segmentation on Cityscapes val

AttentiveNAS: Improving Neural Architecture Search via Attentive Sampling

2 code implementations • CVPR 2021 • Dilin Wang, Meng Li, Chengyue Gong, Vikas Chandra

Our discovered model family, AttentiveNAS models, achieves top-1 accuracy from 77.3% to 80.7% on ImageNet, and outperforms SOTA models, including BigNAS and Once-for-All networks.

Ranked #21 on Neural Architecture Search on ImageNet

AlphaNet: Improved Training of Supernets with Alpha-Divergence

2 code implementations • 16 Feb 2021 • Dilin Wang, Chengyue Gong, Meng Li, Qiang Liu, Vikas Chandra

Weight-sharing NAS builds a supernet that assembles all the architectures as its sub-networks and jointly trains the supernet with the sub-networks.

Ranked #12 on Neural Architecture Search on ImageNet

Federated Learning with Non-IID Data

2 code implementations • 2 Jun 2018 • Yue Zhao, Meng Li, Liangzhen Lai, Naveen Suda, Damon Civin, Vikas Chandra

Experiments show that accuracy can be increased by 30% for the CIFAR-10 dataset with only 5% globally shared data.

Vision Transformers with Patch Diversification

1 code implementation • 26 Apr 2021 • Chengyue Gong, Dilin Wang, Meng Li, Vikas Chandra, Qiang Liu

To alleviate this problem, in this work, we introduce novel loss functions in vision transformer training to explicitly encourage diversity across patch representations for more discriminative feature extraction.

Ranked #19 on Semantic Segmentation on Cityscapes val

NASViT: Neural Architecture Search for Efficient Vision Transformers with Gradient Conflict aware Supernet Training

1 code implementation • ICLR 2022 • Chengyue Gong, Dilin Wang, Meng Li, Xinlei Chen, Zhicheng Yan, Yuandong Tian, Qiang Liu, Vikas Chandra

In this work, we observe that the poor performance is due to a gradient conflict issue: the gradients of different sub-networks conflict with that of the supernet more severely in ViTs than CNNs, which leads to early saturation in training and inferior convergence.

Ranked #7 on Neural Architecture Search on ImageNet

DepthShrinker: A New Compression Paradigm Towards Boosting Real-Hardware Efficiency of Compact Neural Networks

1 code implementation • 2 Jun 2022 • Yonggan Fu, Haichuan Yang, Jiayi Yuan, Meng Li, Cheng Wan, Raghuraman Krishnamoorthi, Vikas Chandra, Yingyan Lin

Efficient deep neural network (DNN) models equipped with compact operators (e.g., depthwise convolutions) have shown great potential in reducing DNNs' theoretical complexity (e.g., the total number of weights/operations) while maintaining a decent model accuracy.

CPT: Efficient Deep Neural Network Training via Cyclic Precision

1 code implementation • ICLR 2021 • Yonggan Fu, Han Guo, Meng Li, Xin Yang, Yining Ding, Vikas Chandra, Yingyan Lin

In this paper, we attempt to explore low-precision training from a new perspective as inspired by recent findings in understanding DNN training: we conjecture that DNNs' precision might have a similar effect as the learning rate during DNN training, and advocate dynamic precision along the training trajectory for further boosting the time/energy efficiency of DNN training.

Mind Mappings: Enabling Efficient Algorithm-Accelerator Mapping Space Search

1 code implementation • 2 Mar 2021 • Kartik Hegde, Po-An Tsai, Sitao Huang, Vikas Chandra, Angshuman Parashar, Christopher W. Fletcher

The key idea is to derive a smooth, differentiable approximation to the otherwise non-smooth, non-convex search space.

Fast Point Cloud Generation with Straight Flows

1 code implementation • CVPR 2023 • Lemeng Wu, Dilin Wang, Chengyue Gong, Xingchao Liu, Yunyang Xiong, Rakesh Ranjan, Raghuraman Krishnamoorthi, Vikas Chandra, Qiang Liu

We perform evaluations on multiple 3D tasks and find that our PSF performs comparably to the standard diffusion model, outperforming other efficient 3D point cloud generation methods.

KeepAugment: A Simple Information-Preserving Data Augmentation Approach

1 code implementation • CVPR 2021 • Chengyue Gong, Dilin Wang, Meng Li, Vikas Chandra, Qiang Liu

Data augmentation (DA) is an essential technique for training state-of-the-art deep learning systems.

Energy-Aware Neural Architecture Optimization with Fast Splitting Steepest Descent

1 code implementation • ICLR 2020 • Dilin Wang, Meng Li, Lemeng Wu, Vikas Chandra, Qiang Liu

Designing energy-efficient networks is of critical importance for enabling state-of-the-art deep learning in mobile and edge settings where the computation and energy budgets are highly limited.

Revisiting Sample Size Determination in Natural Language Understanding

1 code implementation • 1 Jul 2023 • Ernie Chang, Muhammad Hassan Rashid, Pin-Jie Lin, Changsheng Zhao, Vera Demberg, Yangyang Shi, Vikas Chandra

Knowing exactly how many data points need to be labeled to achieve a certain model performance is a hugely beneficial step towards reducing the overall budgets for annotation.

Bit Fusion: Bit-Level Dynamically Composable Architecture for Accelerating Deep Neural Networks

no code implementations • 5 Dec 2017 • Hardik Sharma, Jongse Park, Naveen Suda, Liangzhen Lai, Benson Chau, Joon Kyung Kim, Vikas Chandra, Hadi Esmaeilzadeh

Compared to Stripes, BitFusion provides 2.6x speedup and 3.9x energy reduction at 45 nm node when BitFusion area and frequency are set to those of Stripes.

Not All Ops Are Created Equal!

no code implementations • 12 Jan 2018 • Liangzhen Lai, Naveen Suda, Vikas Chandra

Efficient and compact neural network models are essential for enabling the deployment on mobile and embedded devices.

PrivyNet: A Flexible Framework for Privacy-Preserving Deep Neural Network Training

no code implementations • ICLR 2018 • Meng Li, Liangzhen Lai, Naveen Suda, Vikas Chandra, David Z. Pan

Massive data exist among user local platforms that usually cannot support deep neural network (DNN) training due to computation and storage resource constraints.

Deep Convolutional Neural Network Inference with Floating-point Weights and Fixed-point Activations

no code implementations • 8 Mar 2017 • Liangzhen Lai, Naveen Suda, Vikas Chandra

To alleviate these problems to some extent, prior research utilizes low-precision fixed-point numbers to represent the CNN weights and activations.

Heterogeneous Dataflow Accelerators for Multi-DNN Workloads

no code implementations • 13 Sep 2019 • Hyoukjun Kwon, Liangzhen Lai, Tushar Krishna, Vikas Chandra

The results suggest that HDA is an alternative class of Pareto-optimal accelerators to RDA with strength in energy, which can be a better choice than RDAs depending on the use cases.

Distributed, Parallel, and Cluster Computing

Co-Exploration of Neural Architectures and Heterogeneous ASIC Accelerator Designs Targeting Multiple Tasks

no code implementations • 10 Feb 2020 • Lei Yang, Zheyu Yan, Meng Li, Hyoukjun Kwon, Liangzhen Lai, Tushar Krishna, Vikas Chandra, Weiwen Jiang, Yiyu Shi

Neural Architecture Search (NAS) has demonstrated its power on various AI accelerating platforms such as Field Programmable Gate Arrays (FPGAs) and Graphics Processing Units (GPUs).

Improving Efficiency in Neural Network Accelerator Using Operands Hamming Distance optimization

no code implementations • 13 Feb 2020 • Meng Li, Yilei Li, Pierce Chuang, Liangzhen Lai, Vikas Chandra

A neural network accelerator is a key enabler for on-device AI inference, for which energy efficiency is an important metric.

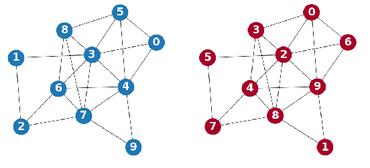

NASGEM: Neural Architecture Search via Graph Embedding Method

no code implementations • 8 Jul 2020 • Hsin-Pai Cheng, Tunhou Zhang, Yixing Zhang, Shi-Yu Li, Feng Liang, Feng Yan, Meng Li, Vikas Chandra, Hai Li, Yiran Chen

To preserve graph correlation information in encoding, we propose NASGEM which stands for Neural Architecture Search via Graph Embedding Method.

One Weight Bitwidth to Rule Them All

no code implementations • 22 Aug 2020 • Ting-Wu Chin, Pierce I-Jen Chuang, Vikas Chandra, Diana Marculescu

Weight quantization for deep ConvNets has shown promising results for applications such as image classification and semantic segmentation and is especially important for applications where memory storage is limited.

DNA: Differentiable Network-Accelerator Co-Search

no code implementations • 28 Oct 2020 • Yongan Zhang, Yonggan Fu, Weiwen Jiang, Chaojian Li, Haoran You, Meng Li, Vikas Chandra, Yingyan Lin

Powerful yet complex deep neural networks (DNNs) have fueled a booming demand for efficient DNN solutions to bring DNN-powered intelligence into numerous applications.

Can Temporal Information Help with Contrastive Self-Supervised Learning?

no code implementations • 25 Nov 2020 • Yutong Bai, Haoqi Fan, Ishan Misra, Ganesh Venkatesh, Yongyi Lu, Yuyin Zhou, Qihang Yu, Vikas Chandra, Alan Yuille

To this end, we present Temporal-aware Contrastive self-supervised learning (TaCo), as a general paradigm to enhance video CSL.

ScaleNAS: One-Shot Learning of Scale-Aware Representations for Visual Recognition

no code implementations • 30 Nov 2020 • Hsin-Pai Cheng, Feng Liang, Meng Li, Bowen Cheng, Feng Yan, Hai Li, Vikas Chandra, Yiran Chen

We use ScaleNAS to create high-resolution models for two different tasks, ScaleNet-P for human pose estimation and ScaleNet-S for semantic segmentation.

Ranked #5 on Multi-Person Pose Estimation on COCO test-dev

EVRNet: Efficient Video Restoration on Edge Devices

no code implementations • 3 Dec 2020 • Sachin Mehta, Amit Kumar, Fitsum Reda, Varun Nasery, Vikram Mulukutla, Rakesh Ranjan, Vikas Chandra

Video transmission applications (e.g., conferencing) are gaining momentum, especially in times of a global health pandemic.

Memory-efficient Speech Recognition on Smart Devices

no code implementations • 23 Feb 2021 • Ganesh Venkatesh, Alagappan Valliappan, Jay Mahadeokar, Yuan Shangguan, Christian Fuegen, Michael L. Seltzer, Vikas Chandra

Recurrent transducer models have emerged as a promising solution for speech recognition on the current and next generation smart devices.

Feature-Align Network with Knowledge Distillation for Efficient Denoising

no code implementations • 2 Mar 2021 • Lucas D. Young, Fitsum A. Reda, Rakesh Ranjan, Jon Morton, Jun Hu, Yazhu Ling, Xiaoyu Xiang, David Liu, Vikas Chandra

(2) A novel Feature Matching Loss that allows knowledge distillation from large denoising networks in the form of a perceptual content loss.

Collaborative Training of Acoustic Encoders for Speech Recognition

no code implementations • 16 Jun 2021 • Varun Nagaraja, Yangyang Shi, Ganesh Venkatesh, Ozlem Kalinli, Michael L. Seltzer, Vikas Chandra

On-device speech recognition requires training models of different sizes for deploying on devices with various computational budgets.

Noisy Training Improves E2E ASR for the Edge

no code implementations • 9 Jul 2021 • Dilin Wang, Yuan Shangguan, Haichuan Yang, Pierce Chuang, Jiatong Zhou, Meng Li, Ganesh Venkatesh, Ozlem Kalinli, Vikas Chandra

We apply noisy training to improve both dense and sparse state-of-the-art Emformer models and observe consistent WER reduction.

Automatic Speech Recognition

Contrastive Quant: Quantization Makes Stronger Contrastive Learning

no code implementations • 29 Sep 2021 • Yonggan Fu, Qixuan Yu, Meng Li, Xu Ouyang, Vikas Chandra, Yingyan Lin

Contrastive learning, which learns visual representations by enforcing feature consistency under different augmented views, has emerged as one of the most effective unsupervised learning methods.

Omni-sparsity DNN: Fast Sparsity Optimization for On-Device Streaming E2E ASR via Supernet

no code implementations • 15 Oct 2021 • Haichuan Yang, Yuan Shangguan, Dilin Wang, Meng Li, Pierce Chuang, Xiaohui Zhang, Ganesh Venkatesh, Ozlem Kalinli, Vikas Chandra

From wearables to powerful smart devices, modern automatic speech recognition (ASR) models run on a variety of edge devices with different computational budgets.

Automatic Speech Recognition

Low-Rank+Sparse Tensor Compression for Neural Networks

no code implementations • 2 Nov 2021 • Cole Hawkins, Haichuan Yang, Meng Li, Liangzhen Lai, Vikas Chandra

Low-rank tensor compression has been proposed as a promising approach to reduce the memory and compute requirements of neural networks for their deployment on edge devices.

On the Pareto Efficiency of Quantized CNN

no code implementations • 25 Sep 2019 • Ting-Wu Chin, Pierce I-Jen Chuang, Vikas Chandra, Diana Marculescu

Weight Quantization for deep convolutional neural networks (CNNs) has shown promising results in compressing and accelerating CNN-powered applications such as semantic segmentation, gesture recognition, and scene understanding.

Streaming parallel transducer beam search with fast-slow cascaded encoders

no code implementations • 29 Mar 2022 • Jay Mahadeokar, Yangyang Shi, Ke Li, Duc Le, Jiedan Zhu, Vikas Chandra, Ozlem Kalinli, Michael L Seltzer

Streaming ASR with strict latency constraints is required in many speech recognition applications.

LiCo-Net: Linearized Convolution Network for Hardware-efficient Keyword Spotting

no code implementations • 9 Nov 2022 • Haichuan Yang, Zhaojun Yang, Li Wan, Biqiao Zhang, Yangyang Shi, Yiteng Huang, Ivaylo Enchev, Limin Tang, Raziel Alvarez, Ming Sun, Xin Lei, Raghuraman Krishnamoorthi, Vikas Chandra

This paper proposes a hardware-efficient architecture, Linearized Convolution Network (LiCo-Net) for keyword spotting.

XRBench: An Extended Reality (XR) Machine Learning Benchmark Suite for the Metaverse

no code implementations • 16 Nov 2022 • Hyoukjun Kwon, Krishnakumar Nair, Jamin Seo, Jason Yik, Debabrata Mohapatra, Dongyuan Zhan, Jinook Song, Peter Capak, Peizhao Zhang, Peter Vajda, Colby Banbury, Mark Mazumder, Liangzhen Lai, Ashish Sirasao, Tushar Krishna, Harshit Khaitan, Vikas Chandra, Vijay Janapa Reddi

We hope that our work will stimulate research and lead to the development of a new generation of ML systems for XR use cases.

DREAM: A Dynamic Scheduler for Dynamic Real-time Multi-model ML Workloads

no code implementations • 7 Dec 2022 • Seah Kim, Hyoukjun Kwon, Jinook Song, Jihyuck Jo, Yu-Hsin Chen, Liangzhen Lai, Vikas Chandra

Such dynamic behaviors introduce new challenges to the system software in an ML system since the overall system load is not completely predictable, unlike traditional ML workloads.

PathFusion: Path-consistent Lidar-Camera Deep Feature Fusion

no code implementations • 12 Dec 2022 • Lemeng Wu, Dilin Wang, Meng Li, Yunyang Xiong, Raghuraman Krishnamoorthi, Qiang Liu, Vikas Chandra

Fusing 3D LiDAR features with 2D camera features is a promising technique for enhancing the accuracy of 3D detection, thanks to their complementary physical properties.

LLM-QAT: Data-Free Quantization Aware Training for Large Language Models

no code implementations • 29 May 2023 • Zechun Liu, Barlas Oguz, Changsheng Zhao, Ernie Chang, Pierre Stock, Yashar Mehdad, Yangyang Shi, Raghuraman Krishnamoorthi, Vikas Chandra

Several post-training quantization methods have been applied to large language models (LLMs), and have been shown to perform well down to 8 bits.

Mixture-of-Supernets: Improving Weight-Sharing Supernet Training with Architecture-Routed Mixture-of-Experts

no code implementations • 8 Jun 2023 • Ganesh Jawahar, Haichuan Yang, Yunyang Xiong, Zechun Liu, Dilin Wang, Fei Sun, Meng Li, Aasish Pappu, Barlas Oguz, Muhammad Abdul-Mageed, Laks V. S. Lakshmanan, Raghuraman Krishnamoorthi, Vikas Chandra

In addition, the proposed method achieves SOTA performance in NAS for building fast machine translation models, yielding a better latency-BLEU tradeoff compared to HAT, the state-of-the-art NAS method for MT.

TODM: Train Once Deploy Many Efficient Supernet-Based RNN-T Compression For On-device ASR Models

no code implementations • 5 Sep 2023 • Yuan Shangguan, Haichuan Yang, Danni Li, Chunyang Wu, Yassir Fathullah, Dilin Wang, Ayushi Dalmia, Raghuraman Krishnamoorthi, Ozlem Kalinli, Junteng Jia, Jay Mahadeokar, Xin Lei, Mike Seltzer, Vikas Chandra

Results demonstrate that our TODM Supernet either matches or surpasses the performance of manually tuned models, with up to a 3% relative improvement in word error rate (WER), while efficiently keeping the cost of training many models at a small constant.

Automatic Speech Recognition

Folding Attention: Memory and Power Optimization for On-Device Transformer-based Streaming Speech Recognition

no code implementations • 14 Sep 2023 • Yang Li, Liangzhen Lai, Yuan Shangguan, Forrest N. Iandola, Zhaoheng Ni, Ernie Chang, Yangyang Shi, Vikas Chandra

Instead, the bottleneck lies in the linear projection layers of multi-head attention and feedforward networks, constituting a substantial portion of the model size and contributing significantly to computation, memory, and power usage.

Enhance audio generation controllability through representation similarity regularization

no code implementations • 15 Sep 2023 • Yangyang Shi, Gael Le Lan, Varun Nagaraja, Zhaoheng Ni, Xinhao Mei, Ernie Chang, Forrest Iandola, Yang Liu, Vikas Chandra

This paper presents an innovative approach to enhance control over audio generation by emphasizing the alignment between audio and text representations during model training.

Stack-and-Delay: a new codebook pattern for music generation

no code implementations • 15 Sep 2023 • Gael Le Lan, Varun Nagaraja, Ernie Chang, David Kant, Zhaoheng Ni, Yangyang Shi, Forrest Iandola, Vikas Chandra

In language modeling based music generation, a generated waveform is represented by a sequence of hierarchical token stacks that can be decoded either in an auto-regressive manner or in parallel, depending on the codebook patterns.

FoleyGen: Visually-Guided Audio Generation

no code implementations • 19 Sep 2023 • Xinhao Mei, Varun Nagaraja, Gael Le Lan, Zhaoheng Ni, Ernie Chang, Yangyang Shi, Vikas Chandra

A prevalent problem in V2A generation is the misalignment of generated audio with the visible actions in the video.

Exploring Speech Enhancement for Low-resource Speech Synthesis

no code implementations • 19 Sep 2023 • Zhaoheng Ni, Sravya Popuri, Ning Dong, Kohei Saijo, Xiaohui Zhang, Gael Le Lan, Yangyang Shi, Vikas Chandra, Changhan Wang

High-quality and intelligible speech is essential to text-to-speech (TTS) model training; however, obtaining high-quality data for low-resource languages is challenging and expensive.

Automatic Speech Recognition

On The Open Prompt Challenge In Conditional Audio Generation

no code implementations • 1 Nov 2023 • Ernie Chang, Sidd Srinivasan, Mahi Luthra, Pin-Jie Lin, Varun Nagaraja, Forrest Iandola, Zechun Liu, Zhaoheng Ni, Changsheng Zhao, Yangyang Shi, Vikas Chandra

Text-to-audio generation (TTA) produces audio from a text description, learning from pairs of audio samples and hand-annotated text.

In-Context Prompt Editing For Conditional Audio Generation

no code implementations • 1 Nov 2023 • Ernie Chang, Pin-Jie Lin, Yang Li, Sidd Srinivasan, Gael Le Lan, David Kant, Yangyang Shi, Forrest Iandola, Vikas Chandra

We show that the framework enhanced the audio quality across the set of collected user prompts, which were edited with reference to the training captions as exemplars.

SqueezeSAM: User friendly mobile interactive segmentation

no code implementations • 11 Dec 2023 • Balakrishnan Varadarajan, Bilge Soran, Forrest Iandola, Xiaoyu Xiang, Yunyang Xiong, Chenchen Zhu, Raghuraman Krishnamoorthi, Vikas Chandra

Finally, when a user clicks on an object, they typically expect all related pieces of the object to be segmented.

SteinDreamer: Variance Reduction for Text-to-3D Score Distillation via Stein Identity

no code implementations • 31 Dec 2023 • Peihao Wang, Zhiwen Fan, Dejia Xu, Dilin Wang, Sreyas Mohan, Forrest Iandola, Rakesh Ranjan, Yilei Li, Qiang Liu, Zhangyang Wang, Vikas Chandra

In this paper, we reveal that the gradient estimation in score distillation inherently suffers from high variance.

Taming Mode Collapse in Score Distillation for Text-to-3D Generation

no code implementations • 31 Dec 2023 • Peihao Wang, Dejia Xu, Zhiwen Fan, Dilin Wang, Sreyas Mohan, Forrest Iandola, Rakesh Ranjan, Yilei Li, Qiang Liu, Zhangyang Wang, Vikas Chandra

In this paper, we reveal that the existing score distillation-based text-to-3D generation frameworks degenerate to maximal likelihood seeking on each view independently and thus suffer from the mode collapse problem, manifesting as the Janus artifact in practice.

MVDiffusion++: A Dense High-resolution Multi-view Diffusion Model for Single or Sparse-view 3D Object Reconstruction

no code implementations • 20 Feb 2024 • Shitao Tang, Jiacheng Chen, Dilin Wang, Chengzhou Tang, Fuyang Zhang, Yuchen Fan, Vikas Chandra, Yasutaka Furukawa, Rakesh Ranjan

MVDiffusion++ achieves superior flexibility and scalability with two surprisingly simple ideas: 1) A "pose-free architecture" where standard self-attention among 2D latent features learns 3D consistency across an arbitrary number of conditional and generation views without explicitly using camera pose information; and 2) A "view dropout strategy" that discards a substantial number of output views during training, which reduces the training-time memory footprint and enables dense and high-resolution view synthesis at test time.

Not All Weights Are Created Equal: Enhancing Energy Efficiency in On-Device Streaming Speech Recognition

no code implementations • 20 Feb 2024 • Yang Li, Yuan Shangguan, Yuhao Wang, Liangzhen Lai, Ernie Chang, Changsheng Zhao, Yangyang Shi, Vikas Chandra

This study delves into how weight parameters in speech recognition models influence the overall power consumption of these models.

MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

no code implementations • 22 Feb 2024 • Zechun Liu, Changsheng Zhao, Forrest Iandola, Chen Lai, Yuandong Tian, Igor Fedorov, Yunyang Xiong, Ernie Chang, Yangyang Shi, Raghuraman Krishnamoorthi, Liangzhen Lai, Vikas Chandra

The resultant models, denoted as MobileLLM-LS, demonstrate a further accuracy improvement of 0.7%/0.8% over MobileLLM 125M/350M.

CoherentGS: Sparse Novel View Synthesis with Coherent 3D Gaussians

no code implementations • 28 Mar 2024 • Avinash Paliwal, Wei Ye, Jinhui Xiong, Dmytro Kotovenko, Rakesh Ranjan, Vikas Chandra, Nima Khademi Kalantari

The field of 3D reconstruction from images has rapidly evolved in the past few years, first with the introduction of Neural Radiance Field (NeRF) and more recently with 3D Gaussian Splatting (3DGS).