Search Results for author: Wei Ye

Found 70 papers, 37 papers with code

Deep Dynamic Boosted Forest

no code implementations • 19 Apr 2018 • Haixin Wang, Xingzhang Ren, Jinan Sun, Wei Ye, Long Chen, Muzhi Yu, Shikun Zhang

Specifically, we propose to measure the quality of each leaf node of every decision tree in the random forest to determine hard examples.

PKUSE at SemEval-2019 Task 3: Emotion Detection with Emotion-Oriented Neural Attention Network

no code implementations • SEMEVAL 2019 • Luyao Ma, Long Zhang, Wei Ye, Wenhui Hu

This paper presents the system in SemEval-2019 Task 3, "EmoContext: Contextual Emotion Detection in Text".

Exploiting Entity BIO Tag Embeddings and Multi-task Learning for Relation Extraction with Imbalanced Data

no code implementations • ACL 2019 • Wei Ye, Bo Li, Rui Xie, Zhonghao Sheng, Long Chen, Shikun Zhang

In practical scenarios, relation extraction needs to first identify entity pairs that have a relation and then assign the correct relation class.

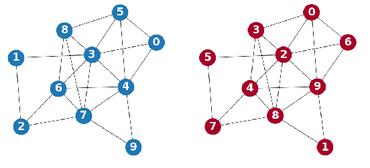

Tree++: Truncated Tree Based Graph Kernels

1 code implementation • 23 Feb 2020 • Wei Ye, Zhen Wang, Rachel Redberg, Ambuj Singh

At the heart of Tree++ is a graph kernel called the path-pattern graph kernel.

Leveraging Code Generation to Improve Code Retrieval and Summarization via Dual Learning

no code implementations • 24 Feb 2020 • Wei Ye, Rui Xie, Jinglei Zhang, Tianxiang Hu, Xiaoyin Wang, Shikun Zhang

Since both tasks aim to model the association between natural language and programming language, recent studies have combined these two tasks to improve their performance.

Incorporating User's Preference into Attributed Graph Clustering

1 code implementation • 24 Mar 2020 • Wei Ye, Dominik Mautz, Christian Boehm, Ambuj Singh, Claudia Plant

In contrast to global clustering, local clustering aims to find only one cluster that concentrates on the given seed vertex (and also on the designated attributes for attributed graphs).

Learning Deep Graph Representations via Convolutional Neural Networks

1 code implementation • 5 Apr 2020 • Wei Ye, Omid Askarisichani, Alex Jones, Ambuj Singh

The learned deep representation for a graph is a dense and low-dimensional vector that captures complex high-order interactions in a vertex neighborhood.

Graph Enhanced Dual Attention Network for Document-Level Relation Extraction

no code implementations • COLING 2020 • Bo Li, Wei Ye, Zhonghao Sheng, Rui Xie, Xiangyu Xi, Shikun Zhang

Document-level relation extraction requires inter-sentence reasoning capabilities to capture local and global contextual information for multiple relational facts.

SongMASS: Automatic Song Writing with Pre-training and Alignment Constraint

1 code implementation • 9 Dec 2020 • Zhonghao Sheng, Kaitao Song, Xu Tan, Yi Ren, Wei Ye, Shikun Zhang, Tao Qin

Automatic song writing aims to compose a song (lyric and/or melody) by machine, which is an interesting topic in both academia and industry.

Exploiting Method Names to Improve Code Summarization: A Deliberation Multi-Task Learning Approach

no code implementations • 21 Mar 2021 • Rui Xie, Wei Ye, Jinan Sun, Shikun Zhang

Code summaries are brief natural language descriptions of source code pieces.

Multi-view Inference for Relation Extraction with Uncertain Knowledge

1 code implementation • 28 Apr 2021 • Bo Li, Wei Ye, Canming Huang, Shikun Zhang

Knowledge graphs (KGs) are widely used to facilitate relation extraction (RE) tasks.

Ranked #32 on Relation Extraction on DocRED (using extra training data)

QuadrupletBERT: An Efficient Model For Embedding-Based Large-Scale Retrieval

no code implementations • NAACL 2021 • Peiyang Liu, Sen Wang, Xi Wang, Wei Ye, Shikun Zhang

The embedding-based large-scale query-document retrieval problem is a hot topic in the information retrieval (IR) field.

Multi-Hop Transformer for Document-Level Machine Translation

no code implementations • NAACL 2021 • Long Zhang, Tong Zhang, Haibo Zhang, Baosong Yang, Wei Ye, Shikun Zhang

Document-level neural machine translation (NMT) has proven to be of profound value for its effectiveness in capturing contextual information.

Capturing Event Argument Interaction via A Bi-Directional Entity-Level Recurrent Decoder

no code implementations • ACL 2021 • Xiangyu Xi, Wei Ye, Shikun Zhang, Quanxiu Wang, Huixing Jiang, Wei Wu

Capturing interactions among event arguments is an essential step towards robust event argument extraction (EAE).

Legal Judgment Prediction with Multi-Stage Case Representation Learning in the Real Court Setting

1 code implementation • 12 Jul 2021 • Luyao Ma, Yating Zhang, Tianyi Wang, Xiaozhong Liu, Wei Ye, Changlong Sun, Shikun Zhang

Legal judgment prediction (LJP) is an essential task for legal AI.

Point, Disambiguate and Copy: Incorporating Bilingual Dictionaries for Neural Machine Translation

no code implementations • ACL 2021 • Tong Zhang, Long Zhang, Wei Ye, Bo Li, Jinan Sun, Xiaoyu Zhu, Wen Zhao, Shikun Zhang

This paper proposes a sophisticated neural architecture to incorporate bilingual dictionaries into Neural Machine Translation (NMT) models.

Deep Embedded K-Means Clustering

2 code implementations • 30 Sep 2021 • Wengang Guo, Kaiyan Lin, Wei Ye

To this end, we discard the decoder and propose a greedy method to optimize the representation.

Ranked #1 on Deep Clustering on MNIST

Multi-Scale High-Resolution Vision Transformer for Semantic Segmentation

1 code implementation • CVPR 2022 • Jiaqi Gu, Hyoukjun Kwon, Dilin Wang, Wei Ye, Meng Li, Yu-Hsin Chen, Liangzhen Lai, Vikas Chandra, David Z. Pan

Therefore, we propose HRViT, which enhances ViTs to learn semantically-rich and spatially-precise multi-scale representations by integrating high-resolution multi-branch architectures with ViTs.

Ranked #24 on Semantic Segmentation on Cityscapes val

Temporally Consistent Online Depth Estimation in Dynamic Scenes

no code implementations • 17 Nov 2021 • Zhaoshuo Li, Wei Ye, Dilin Wang, Francis X. Creighton, Russell H. Taylor, Ganesh Venkatesh, Mathias Unberath

We present a framework named Consistent Online Dynamic Depth (CODD) to produce temporally consistent depth estimates in dynamic scenes in an online setting.

Frequency-Aware Contrastive Learning for Neural Machine Translation

no code implementations • 29 Dec 2021 • Tong Zhang, Wei Ye, Baosong Yang, Long Zhang, Xingzhang Ren, Dayiheng Liu, Jinan Sun, Shikun Zhang, Haibo Zhang, Wen Zhao

Inspired by the observation that low-frequency words form a more compact embedding space, we tackle this challenge from a representation learning perspective.

Modeling Human-AI Team Decision Making

1 code implementation • 8 Jan 2022 • Wei Ye, Francesco Bullo, Noah Friedkin, Ambuj K Singh

AI and humans bring complementary skills to group deliberations.

Graph Neural Diffusion Networks for Semi-supervised Learning

1 code implementation • 24 Jan 2022 • Wei Ye, Zexi Huang, Yunqi Hong, Ambuj Singh

To solve these two issues, we propose a new graph neural network called GND-Nets (for Graph Neural Diffusion Networks) that exploits the local and global neighborhood information of a vertex in a single layer.

Incorporating Heterophily into Graph Neural Networks for Graph Classification

1 code implementation • 15 Mar 2022 • Wei Ye, Jiayi Yang, Sourav Medya, Ambuj Singh

Graph neural networks (GNNs) often assume strong homophily in graphs, seldom considering heterophily, which means connected nodes tend to have different class labels and dissimilar features.

A Low-Cost, Controllable and Interpretable Task-Oriented Chatbot: With Real-World After-Sale Services as Example

no code implementations • 13 May 2022 • Xiangyu Xi, Chenxu Lv, Yuncheng Hua, Wei Ye, Chaobo Sun, Shuaipeng Liu, Fan Yang, Guanglu Wan

Though widely used in industry, traditional task-oriented dialogue systems suffer from three bottlenecks: (i) difficult ontology construction (e.g., intents and slots); (ii) poor controllability and interpretability; (iii) annotation-hungry.

Multi-scale Wasserstein Shortest-path Graph Kernels for Graph Classification

1 code implementation • 2 Jun 2022 • Wei Ye, Hao Tian, Qijun Chen

To mitigate the two challenges, we propose a novel graph kernel called the Multi-scale Wasserstein Shortest-Path graph kernel (MWSP), at the heart of which is the multi-scale shortest-path node feature map, of which each element denotes the number of occurrences of a shortest path around a node.

USB: A Unified Semi-supervised Learning Benchmark for Classification

4 code implementations • 12 Aug 2022 • Yidong Wang, Hao Chen, Yue Fan, Wang Sun, Ran Tao, Wenxin Hou, RenJie Wang, Linyi Yang, Zhi Zhou, Lan-Zhe Guo, Heli Qi, Zhen Wu, Yu-Feng Li, Satoshi Nakamura, Wei Ye, Marios Savvides, Bhiksha Raj, Takahiro Shinozaki, Bernt Schiele, Jindong Wang, Xing Xie, Yue Zhang

We further provide the pre-trained versions of the state-of-the-art neural models for CV tasks to make the cost affordable for further tuning.

Conv-Adapter: Exploring Parameter Efficient Transfer Learning for ConvNets

no code implementations • 15 Aug 2022 • Hao Chen, Ran Tao, Han Zhang, Yidong Wang, Xiang Li, Wei Ye, Jindong Wang, Guosheng Hu, Marios Savvides

Beyond classification, Conv-Adapter can generalize to detection and segmentation tasks with more than 50% reduction of parameters but comparable performance to the traditional full fine-tuning.

Exploiting Hybrid Semantics of Relation Paths for Multi-hop Question Answering Over Knowledge Graphs

no code implementations • COLING 2022 • Zile Qiao, Wei Ye, Tong Zhang, Tong Mo, Weiping Li, Shikun Zhang

Answering natural language questions on knowledge graphs (KGQA) remains a great challenge in terms of understanding complex questions via multi-hop reasoning.

Museformer: Transformer with Fine- and Coarse-Grained Attention for Music Generation

1 code implementation • 19 Oct 2022 • Botao Yu, Peiling Lu, Rui Wang, Wei Hu, Xu Tan, Wei Ye, Shikun Zhang, Tao Qin, Tie-Yan Liu

A recent trend is to use Transformer or its variants in music generation, which is, however, suboptimal, because the full attention cannot efficiently model the typically long music sequences (e.g., over 10,000 tokens), and the existing models have shortcomings in generating musical repetition structures.

DeS3: Adaptive Attention-driven Self and Soft Shadow Removal using ViT Similarity

1 code implementation • 15 Nov 2022 • Yeying Jin, Wei Ye, Wenhan Yang, Yuan Yuan, Robby T. Tan

Most existing methods rely on binary shadow masks, without considering the ambiguous boundaries of soft and self shadows.

Consistent Direct Time-of-Flight Video Depth Super-Resolution

1 code implementation • CVPR 2023 • Zhanghao Sun, Wei Ye, Jinhui Xiong, Gyeongmin Choe, Jialiang Wang, Shuochen Su, Rakesh Ranjan

We believe the methods and dataset are beneficial to a broad community as dToF depth sensing is becoming mainstream on mobile devices.

MUSIED: A Benchmark for Event Detection from Multi-Source Heterogeneous Informal Texts

1 code implementation • 25 Nov 2022 • Xiangyu Xi, Jianwei Lv, Shuaipeng Liu, Wei Ye, Fan Yang, Guanglu Wan

As a pioneering exploration that expands event detection to the scenarios involving informal and heterogeneous texts, we propose a new large-scale Chinese event detection dataset based on user reviews, text conversations, and phone conversations in a leading e-commerce platform for food service.

Sequence Generation with Label Augmentation for Relation Extraction

1 code implementation • 29 Dec 2022 • Bo Li, Dingyao Yu, Wei Ye, Jinglei Zhang, Shikun Zhang

Sequence generation demonstrates promising performance in recent information extraction efforts, by incorporating large-scale pre-trained Seq2Seq models.

Ranked #1 on Relation Extraction on sciERC-sent

Reviewing Labels: Label Graph Network with Top-k Prediction Set for Relation Extraction

no code implementations • 29 Dec 2022 • Bo Li, Wei Ye, Jinglei Zhang, Shikun Zhang

Specifically, for a given sample, we build a label graph to review candidate labels in the Top-k prediction set and learn the connections between them.

Ranked #1 on Relation Extraction on TACRED-Revisited

BUS: Efficient and Effective Vision-Language Pre-Training with Bottom-Up Patch Summarization

no code implementations • ICCV 2023 • Chaoya Jiang, Haiyang Xu, Wei Ye, Qinghao Ye, Chenliang Li, Ming Yan, Bin Bi, Shikun Zhang, Fei Huang, Songfang Huang

In this paper, we propose a Bottom-Up Patch Summarization approach named BUS which is inspired by the Document Summarization Task in NLP to learn a concise visual summary of lengthy visual token sequences, guided by textual semantics.

On the Robustness of ChatGPT: An Adversarial and Out-of-distribution Perspective

1 code implementation • 22 Feb 2023 • Jindong Wang, Xixu Hu, Wenxin Hou, Hao Chen, Runkai Zheng, Yidong Wang, Linyi Yang, Haojun Huang, Wei Ye, Xiubo Geng, Binxin Jiao, Yue Zhang, Xing Xie

In this paper, we conduct a thorough evaluation of the robustness of ChatGPT from the adversarial and out-of-distribution (OOD) perspective.

Dialog-to-Actions: Building Task-Oriented Dialogue System via Action-Level Generation

no code implementations • 3 Apr 2023 • Yuncheng Hua, Xiangyu Xi, Zheng Jiang, Guanwei Zhang, Chaobo Sun, Guanglu Wan, Wei Ye

End-to-end generation-based approaches have been investigated and applied in task-oriented dialogue systems.

Exploring Vision-Language Models for Imbalanced Learning

1 code implementation • 4 Apr 2023 • Yidong Wang, Zhuohao Yu, Jindong Wang, Qiang Heng, Hao Chen, Wei Ye, Rui Xie, Xing Xie, Shikun Zhang

However, their performance on imbalanced datasets is relatively poor, where the distribution of classes in the training dataset is skewed, leading to poor performance in predicting minority classes.

Evaluating ChatGPT's Information Extraction Capabilities: An Assessment of Performance, Explainability, Calibration, and Faithfulness

1 code implementation • 23 Apr 2023 • Bo Li, Gexiang Fang, Yang Yang, Quansen Wang, Wei Ye, Wen Zhao, Shikun Zhang

The capability of Large Language Models (LLMs) like ChatGPT to comprehend user intent and provide reasonable responses has made them extremely popular lately.

Vision Language Pre-training by Contrastive Learning with Cross-Modal Similarity Regulation

no code implementations • 8 May 2023 • Chaoya Jiang, Wei Ye, Haiyang Xu, Miang yan, Shikun Zhang, Jie Zhang, Fei Huang

Cross-modal contrastive learning in vision language pretraining (VLP) faces the challenge of (partial) false negatives.

Exploiting Pseudo Image Captions for Multimodal Summarization

no code implementations • 9 May 2023 • Chaoya Jiang, Rui Xie, Wei Ye, Jinan Sun, Shikun Zhang

Cross-modal contrastive learning in vision language pretraining (VLP) faces the challenge of (partial) false negatives.

GETMusic: Generating Any Music Tracks with a Unified Representation and Diffusion Framework

1 code implementation • 18 May 2023 • Ang Lv, Xu Tan, Peiling Lu, Wei Ye, Shikun Zhang, Jiang Bian, Rui Yan

Our proposed representation, coupled with the non-autoregressive generative model, empowers GETMusic to generate music with any arbitrary source-target track combinations.

PromptBench: Towards Evaluating the Robustness of Large Language Models on Adversarial Prompts

1 code implementation • 7 Jun 2023 • Kaijie Zhu, Jindong Wang, Jiaheng Zhou, Zichen Wang, Hao Chen, Yidong Wang, Linyi Yang, Wei Ye, Yue Zhang, Neil Zhenqiang Gong, Xing Xie

The increasing reliance on Large Language Models (LLMs) across academia and industry necessitates a comprehensive understanding of their robustness to prompts.

Cross-Lingual Paraphrase Identification

Machine Translation

+5

PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization

2 code implementations • 8 Jun 2023 • Yidong Wang, Zhuohao Yu, Zhengran Zeng, Linyi Yang, Cunxiang Wang, Hao Chen, Chaoya Jiang, Rui Xie, Jindong Wang, Xing Xie, Wei Ye, Shikun Zhang, Yue Zhang

To ensure the reliability of PandaLM, we collect a diverse human-annotated test dataset, where all contexts are generated by humans and labels are aligned with human preferences.

Exploiting Pseudo Future Contexts for Emotion Recognition in Conversations

1 code implementation • 27 Jun 2023 • Yinyi Wei, Shuaipeng Liu, Hailei Yan, Wei Ye, Tong Mo, Guanglu Wan

Specifically, for an utterance, we generate its future context with pre-trained language models, potentially containing extra beneficial knowledge in a conversational form homogeneous with the historical ones.

EmoGen: Eliminating Subjective Bias in Emotional Music Generation

1 code implementation • 3 Jul 2023 • Chenfei Kang, Peiling Lu, Botao Yu, Xu Tan, Wei Ye, Shikun Zhang, Jiang Bian

In this paper, we propose EmoGen, an emotional music generation system that leverages a set of emotion-related music attributes as the bridge between emotion and music, and divides the generation into two stages: emotion-to-attribute mapping with supervised clustering, and attribute-to-music generation with self-supervised learning.

A Survey on Evaluation of Large Language Models

1 code implementation • 6 Jul 2023 • Yupeng Chang, Xu Wang, Jindong Wang, Yuan Wu, Linyi Yang, Kaijie Zhu, Hao Chen, Xiaoyuan Yi, Cunxiang Wang, Yidong Wang, Wei Ye, Yue Zhang, Yi Chang, Philip S. Yu, Qiang Yang, Xing Xie

Large language models (LLMs) are gaining increasing popularity in both academia and industry, owing to their unprecedented performance in various applications.

BUS: Efficient and Effective Vision-language Pre-training with Bottom-Up Patch Summarization

no code implementations • 17 Jul 2023 • Chaoya Jiang, Haiyang Xu, Wei Ye, Qinghao Ye, Chenliang Li, Ming Yan, Bin Bi, Shikun Zhang, Fei Huang, Songfang Huang

Specifically, we incorporate a Text-Semantics-Aware Patch Selector (TSPS) into the ViT backbone to perform a coarse-grained visual token extraction and then attach a flexible Transformer-based Patch Abstraction Decoder (PAD) upon the backbone for top-level visual abstraction.

Enhancing Visibility in Nighttime Haze Images Using Guided APSF and Gradient Adaptive Convolution

1 code implementation • 3 Aug 2023 • Yeying Jin, Beibei Lin, Wending Yan, Yuan Yuan, Wei Ye, Robby T. Tan

In this paper, we enhance the visibility from a single nighttime haze image by suppressing glow and enhancing low-light regions.

MusicAgent: An AI Agent for Music Understanding and Generation with Large Language Models

1 code implementation • 18 Oct 2023 • Dingyao Yu, Kaitao Song, Peiling Lu, Tianyu He, Xu Tan, Wei Ye, Shikun Zhang, Jiang Bian

For developers and amateurs, it is very difficult to grasp all of these tasks to satisfy their requirements in music processing, especially considering the huge differences in the representations of music data and the model applicability across platforms among various tasks.

Hallucination Augmented Contrastive Learning for Multimodal Large Language Model

1 code implementation • 12 Dec 2023 • Chaoya Jiang, Haiyang Xu, Mengfan Dong, Jiaxing Chen, Wei Ye, Ming Yan, Qinghao Ye, Ji Zhang, Fei Huang, Shikun Zhang

We first analyzed the representation distribution of textual and visual tokens in MLLM, revealing two important findings: 1) there is a significant gap between textual and visual representations, indicating unsatisfactory cross-modal representation alignment; 2) representations of texts that contain and do not contain hallucinations are entangled, making it challenging to distinguish them.

Ranked #72 on Visual Question Answering on MM-Vet

COMBHelper: A Neural Approach to Reduce Search Space for Graph Combinatorial Problems

1 code implementation • 14 Dec 2023 • Hao Tian, Sourav Medya, Wei Ye

Combinatorial Optimization (CO) problems over graphs appear routinely in many applications such as in optimizing traffic, viral marketing in social networks, and matching for job allocation.

Labels Need Prompts Too: Mask Matching for Natural Language Understanding Tasks

no code implementations • 14 Dec 2023 • Bo Li, Wei Ye, Quansen Wang, Wen Zhao, Shikun Zhang

Textual label names (descriptions) are typically semantically rich in many natural language understanding (NLU) tasks.

TiMix: Text-aware Image Mixing for Effective Vision-Language Pre-training

1 code implementation • 14 Dec 2023 • Chaoya Jiang, Wei Ye, Haiyang Xu, Qinghao Ye, Ming Yan, Ji Zhang, Shikun Zhang

Self-supervised Multi-modal Contrastive Learning (SMCL) remarkably advances modern Vision-Language Pre-training (VLP) models by aligning visual and linguistic modalities.

PICNN: A Pathway towards Interpretable Convolutional Neural Networks

1 code implementation • 19 Dec 2023 • Wengang Guo, Jiayi Yang, Huilin Yin, Qijun Chen, Wei Ye

Experimental results have demonstrated that our method PICNN (the combination of standard CNNs with our proposed pathway) exhibits greater interpretability than standard CNNs while achieving higher or comparable discrimination power.

Supervised Knowledge Makes Large Language Models Better In-context Learners

1 code implementation • 26 Dec 2023 • Linyi Yang, Shuibai Zhang, Zhuohao Yu, Guangsheng Bao, Yidong Wang, Jindong Wang, Ruochen Xu, Wei Ye, Xing Xie, Weizhu Chen, Yue Zhang

Large Language Models (LLMs) exhibit emerging in-context learning abilities through prompt engineering.

NightRain: Nighttime Video Deraining via Adaptive-Rain-Removal and Adaptive-Correction

no code implementations • 1 Jan 2024 • Beibei Lin, Yeying Jin, Wending Yan, Wei Ye, Yuan Yuan, Shunli Zhang, Robby Tan

However, the intricacies of the real world, particularly with the presence of light effects and low-light regions affected by noise, create significant domain gaps, hampering synthetic-trained models in removing rain streaks properly and leading to over-saturation and color shifts.

Efficient Vision-and-Language Pre-training with Text-Relevant Image Patch Selection

no code implementations • 11 Jan 2024 • Wei Ye, Chaoya Jiang, Haiyang Xu, Chenhao Ye, Chenliang Li, Ming Yan, Shikun Zhang, Songhang Huang, Fei Huang

Vision Transformers (ViTs) have become increasingly popular in large-scale Vision and Language Pre-training (VLP) models.

KIEval: A Knowledge-grounded Interactive Evaluation Framework for Large Language Models

2 code implementations • 23 Feb 2024 • Zhuohao Yu, Chang Gao, Wenjin Yao, Yidong Wang, Wei Ye, Jindong Wang, Xing Xie, Yue Zhang, Shikun Zhang

Automatic evaluation methods for large language models (LLMs) are hindered by data contamination, leading to inflated assessments of their effectiveness.

Hal-Eval: A Universal and Fine-grained Hallucination Evaluation Framework for Large Vision Language Models

no code implementations • 24 Feb 2024 • Chaoya Jiang, Wei Ye, Mengfan Dong, Hongrui Jia, Haiyang Xu, Ming Yan, Ji Zhang, Shikun Zhang

Large Vision Language Models exhibit remarkable capabilities but struggle with hallucinations, i.e., inconsistencies between images and their descriptions.

Can Large Language Models Recall Reference Location Like Humans?

no code implementations • 26 Feb 2024 • Ye Wang, Xinrun Xu, Rui Xie, Wenxin Hu, Wei Ye

When completing knowledge-intensive tasks, humans sometimes need not just an answer but also a corresponding reference passage for auxiliary reading.

NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models

no code implementations • 5 Mar 2024 • Zeqian Ju, Yuancheng Wang, Kai Shen, Xu Tan, Detai Xin, Dongchao Yang, Yanqing Liu, Yichong Leng, Kaitao Song, Siliang Tang, Zhizheng Wu, Tao Qin, Xiang-Yang Li, Wei Ye, Shikun Zhang, Jiang Bian, Lei He, Jinyu Li, Sheng Zhao

Specifically, 1) we design a neural codec with factorized vector quantization (FVQ) to disentangle speech waveform into subspaces of content, prosody, timbre, and acoustic details; 2) we propose a factorized diffusion model to generate attributes in each subspace following its corresponding prompt.

NightHaze: Nighttime Image Dehazing via Self-Prior Learning

no code implementations • 12 Mar 2024 • Beibei Lin, Yeying Jin, Wending Yan, Wei Ye, Yuan Yuan, Robby T. Tan

By increasing the noise values to approach as high as the pixel intensity values of the glow and light effect blended images, our augmentation becomes severe, resulting in stronger priors.

CodeShell Technical Report

no code implementations • 23 Mar 2024 • Rui Xie, Zhengran Zeng, Zhuohao Yu, Chang Gao, Shikun Zhang, Wei Ye

Through this process, we have curated 100 billion high-quality pre-training data from GitHub.

CoherentGS: Sparse Novel View Synthesis with Coherent 3D Gaussians

no code implementations • 28 Mar 2024 • Avinash Paliwal, Wei Ye, Jinhui Xiong, Dmytro Kotovenko, Rakesh Ranjan, Vikas Chandra, Nima Khademi Kalantari

The field of 3D reconstruction from images has rapidly evolved in the past few years, first with the introduction of Neural Radiance Field (NeRF) and more recently with 3D Gaussian Splatting (3DGS).

FreeEval: A Modular Framework for Trustworthy and Efficient Evaluation of Large Language Models

2 code implementations • 9 Apr 2024 • Zhuohao Yu, Chang Gao, Wenjin Yao, Yidong Wang, Zhengran Zeng, Wei Ye, Jindong Wang, Yue Zhang, Shikun Zhang

The rapid development of large language model (LLM) evaluation methodologies and datasets has led to a profound challenge: integrating state-of-the-art evaluation techniques cost-effectively while ensuring reliability, reproducibility, and efficiency.

Stacking Networks Dynamically for Image Restoration Based on the Plug-and-Play Framework

no code implementations • ECCV 2020 • Haixin Wang, Tianhao Zhang, Muzhi Yu, Jinan Sun, Wei Ye, Chen Wang, Shikun Zhang

Recently, stacked networks show powerful performance in Image Restoration, such as challenging motion deblurring problems.

Improving Embedding-based Large-scale Retrieval via Label Enhancement

no code implementations • Findings (EMNLP) 2021 • Peiyang Liu, Xi Wang, Sen Wang, Wei Ye, Xiangyu Xi, Shikun Zhang

Current embedding-based large-scale retrieval models are trained with 0-1 hard labels that indicate whether a query is relevant to a document, ignoring rich information about the degree of relevance.

Label Smoothing for Text Mining

no code implementations • COLING 2022 • Peiyang Liu, Xiangyu Xi, Wei Ye, Shikun Zhang

This paper presents a novel keyword-based LS method to automatically generate soft labels from hard labels via exploiting the relevance between labels and text instances.

DESED: Dialogue-based Explanation for Sentence-level Event Detection

1 code implementation • COLING 2022 • Yinyi Wei, Shuaipeng Liu, Jianwei Lv, Xiangyu Xi, Hailei Yan, Wei Ye, Tong Mo, Fan Yang, Guanglu Wan

Many recent sentence-level event detection efforts focus on enriching sentence semantics, e.g., via multi-task or prompt-based learning.