Search Results for author: Wojciech Samek

Found 114 papers, 47 papers with code

Explainable concept mappings of MRI: Revealing the mechanisms underlying deep learning-based brain disease classification

no code implementations • 16 Apr 2024 • Christian Tinauer, Anna Damulina, Maximilian Sackl, Martin Soellradl, Reduan Achtibat, Maximilian Dreyer, Frederik Pahde, Sebastian Lapuschkin, Reinhold Schmidt, Stefan Ropele, Wojciech Samek, Christian Langkammer

Using quantitative R2* maps we separated Alzheimer's patients (n=117) from normal controls (n=219) by using a convolutional neural network and systematically investigated the learned concepts using Concept Relevance Propagation and compared these results to a conventional region of interest-based analysis.

Reactive Model Correction: Mitigating Harm to Task-Relevant Features via Conditional Bias Suppression

no code implementations • 15 Apr 2024 • Dilyara Bareeva, Maximilian Dreyer, Frederik Pahde, Wojciech Samek, Sebastian Lapuschkin

Deep Neural Networks are prone to learning and relying on spurious correlations in the training data, which, for high-risk applications, can have fatal consequences.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

PURE: Turning Polysemantic Neurons Into Pure Features by Identifying Relevant Circuits

1 code implementation • 9 Apr 2024 • Maximilian Dreyer, Erblina Purelku, Johanna Vielhaben, Wojciech Samek, Sebastian Lapuschkin

The field of mechanistic interpretability aims to study the role of individual neurons in Deep Neural Networks.

Explain to Question not to Justify

no code implementations • 21 Feb 2024 • Przemyslaw Biecek, Wojciech Samek

Explainable Artificial Intelligence (XAI) is a young but very promising field of research.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

DualView: Data Attribution from the Dual Perspective

2 code implementations • 19 Feb 2024 • Galip Ümit Yolcu, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this work we present DualView, a novel method for post-hoc data attribution based on surrogate modelling, demonstrating both high computational efficiency, as well as good evaluation results.

AttnLRP: Attention-Aware Layer-wise Relevance Propagation for Transformers

1 code implementation • 8 Feb 2024 • Reduan Achtibat, Sayed Mohammad Vakilzadeh Hatefi, Maximilian Dreyer, Aakriti Jain, Thomas Wiegand, Sebastian Lapuschkin, Wojciech Samek

Large Language Models are prone to biased predictions and hallucinations, underlining the paramount importance of understanding their model-internal reasoning process.

Explaining Predictive Uncertainty by Exposing Second-Order Effects

no code implementations • 30 Jan 2024 • Florian Bley, Sebastian Lapuschkin, Wojciech Samek, Grégoire Montavon

So far, the question of explaining predictive uncertainty, i.e. why a model 'doubts', has been scarcely studied.

Understanding the (Extra-)Ordinary: Validating Deep Model Decisions with Prototypical Concept-based Explanations

1 code implementation • 28 Nov 2023 • Maximilian Dreyer, Reduan Achtibat, Wojciech Samek, Sebastian Lapuschkin

What sets our approach apart is the combination of local and global strategies, enabling a clearer understanding of the (dis-)similarities in model decisions compared to the expected (prototypical) concept use, ultimately reducing the dependence on human long-term assessment.

DMLR: Data-centric Machine Learning Research -- Past, Present and Future

no code implementations • 21 Nov 2023 • Luis Oala, Manil Maskey, Lilith Bat-Leah, Alicia Parrish, Nezihe Merve Gürel, Tzu-Sheng Kuo, Yang Liu, Rotem Dror, Danilo Brajovic, Xiaozhe Yao, Max Bartolo, William A Gaviria Rojas, Ryan Hileman, Rainier Aliment, Michael W. Mahoney, Meg Risdal, Matthew Lease, Wojciech Samek, Debojyoti Dutta, Curtis G Northcutt, Cody Coleman, Braden Hancock, Bernard Koch, Girmaw Abebe Tadesse, Bojan Karlaš, Ahmed Alaa, Adji Bousso Dieng, Natasha Noy, Vijay Janapa Reddi, James Zou, Praveen Paritosh, Mihaela van der Schaar, Kurt Bollacker, Lora Aroyo, Ce Zhang, Joaquin Vanschoren, Isabelle Guyon, Peter Mattson

Drawing from discussions at the inaugural DMLR workshop at ICML 2023 and meetings prior, in this report we outline the relevance of community engagement and infrastructure development for the creation of next-generation public datasets that will advance machine learning science.

Explainable Artificial Intelligence (XAI) 2.0: A Manifesto of Open Challenges and Interdisciplinary Research Directions

no code implementations • 30 Oct 2023 • Luca Longo, Mario Brcic, Federico Cabitza, Jaesik Choi, Roberto Confalonieri, Javier Del Ser, Riccardo Guidotti, Yoichi Hayashi, Francisco Herrera, Andreas Holzinger, Richard Jiang, Hassan Khosravi, Freddy Lecue, Gianclaudio Malgieri, Andrés Páez, Wojciech Samek, Johannes Schneider, Timo Speith, Simone Stumpf

As systems based on opaque Artificial Intelligence (AI) continue to flourish in diverse real-world applications, understanding these black box models has become paramount.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Generative Fractional Diffusion Models

no code implementations • 26 Oct 2023 • Gabriel Nobis, Marco Aversa, Maximilian Springenberg, Michael Detzel, Stefano Ermon, Shinichi Nakajima, Roderick Murray-Smith, Sebastian Lapuschkin, Christoph Knochenhauer, Luis Oala, Wojciech Samek

We generalize the continuous time framework for score-based generative models from an underlying Brownian motion (BM) to an approximation of fractional Brownian motion (FBM).

Human-Centered Evaluation of XAI Methods

no code implementations • 11 Oct 2023 • Karam Dawoud, Wojciech Samek, Peter Eisert, Sebastian Lapuschkin, Sebastian Bosse

In the ever-evolving field of Artificial Intelligence, a critical challenge has been to decipher the decision-making processes within the so-called "black boxes" in deep learning.

Layer-wise Feedback Propagation

no code implementations • 23 Aug 2023 • Leander Weber, Jim Berend, Alexander Binder, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this paper, we present Layer-wise Feedback Propagation (LFP), a novel training approach for neural-network-like predictors that utilizes explainability, specifically Layer-wise Relevance Propagation (LRP), to assign rewards to individual connections based on their respective contributions to solving a given task.

From Hope to Safety: Unlearning Biases of Deep Models via Gradient Penalization in Latent Space

1 code implementation • 18 Aug 2023 • Maximilian Dreyer, Frederik Pahde, Christopher J. Anders, Wojciech Samek, Sebastian Lapuschkin

Deep Neural Networks are prone to learning spurious correlations embedded in the training data, leading to potentially biased predictions.

XAI-based Comparison of Input Representations for Audio Event Classification

no code implementations • 27 Apr 2023 • Annika Frommholz, Fabian Seipel, Sebastian Lapuschkin, Wojciech Samek, Johanna Vielhaben

Deep neural networks are a promising tool for Audio Event Classification.

Reveal to Revise: An Explainable AI Life Cycle for Iterative Bias Correction of Deep Models

1 code implementation • 22 Mar 2023 • Frederik Pahde, Maximilian Dreyer, Wojciech Samek, Sebastian Lapuschkin

To tackle this problem, we propose Reveal to Revise (R2R), a framework entailing the entire eXplainable Artificial Intelligence (XAI) life cycle, enabling practitioners to iteratively identify, mitigate, and (re-)evaluate spurious model behavior with a minimal amount of human interaction.

Explainable AI for Time Series via Virtual Inspection Layers

no code implementations • 11 Mar 2023 • Johanna Vielhaben, Sebastian Lapuschkin, Grégoire Montavon, Wojciech Samek

In this way, we extend the applicability of a family of XAI methods to domains (e.g. speech) where the input is only interpretable after a transformation.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

+3

A Privacy Preserving System for Movie Recommendations Using Federated Learning

no code implementations • 7 Mar 2023 • David Neumann, Andreas Lutz, Karsten Müller, Wojciech Samek

A recent distributed learning scheme called federated learning has made it possible to learn from personal user data without its central collection.

The Meta-Evaluation Problem in Explainable AI: Identifying Reliable Estimators with MetaQuantus

1 code implementation • 14 Feb 2023 • Anna Hedström, Philine Bommer, Kristoffer K. Wickstrøm, Wojciech Samek, Sebastian Lapuschkin, Marina M.-C. Höhne

We address this problem through a meta-evaluation of different quality estimators in XAI, which we define as ''the process of evaluating the evaluation method''.

Optimizing Explanations by Network Canonization and Hyperparameter Search

no code implementations • 30 Nov 2022 • Frederik Pahde, Galip Ümit Yolcu, Alexander Binder, Wojciech Samek, Sebastian Lapuschkin

We further suggest an XAI evaluation framework with which we quantify and compare the effects of model canonization for various XAI methods in image classification tasks on the Pascal-VOC and ILSVRC2017 datasets, as well as for Visual Question Answering using CLEVR-XAI.

Explainable Artificial Intelligence (XAI)

Image Classification

+2

Shortcomings of Top-Down Randomization-Based Sanity Checks for Evaluations of Deep Neural Network Explanations

no code implementations • CVPR 2023 • Alexander Binder, Leander Weber, Sebastian Lapuschkin, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

To address shortcomings of this test, we start by observing an experimental gap in the ranking of explanation methods between randomization-based sanity checks [1] and model output faithfulness measures (e.g. [25]).

Revealing Hidden Context Bias in Segmentation and Object Detection through Concept-specific Explanations

no code implementations • 21 Nov 2022 • Maximilian Dreyer, Reduan Achtibat, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

Applying traditional post-hoc attribution methods to segmentation or object detection predictors offers only limited insights, as the obtained feature attribution maps at input level typically resemble the models' predicted segmentation mask or bounding box.

Data Models for Dataset Drift Controls in Machine Learning With Optical Images

1 code implementation • 4 Nov 2022 • Luis Oala, Marco Aversa, Gabriel Nobis, Kurt Willis, Yoan Neuenschwander, Michèle Buck, Christian Matek, Jerome Extermann, Enrico Pomarico, Wojciech Samek, Roderick Murray-Smith, Christoph Clausen, Bruno Sanguinetti

This limits our ability to study and understand the relationship between data generation and downstream machine learning model performance in a physically accurate manner.

Explaining automated gender classification of human gait

no code implementations • 16 Oct 2022 • Fabian Horst, Djordje Slijepcevic, Matthias Zeppelzauer, Anna-Maria Raberger, Sebastian Lapuschkin, Wojciech Samek, Wolfgang I. Schöllhorn, Christian Breiteneder, Brian Horsak

State-of-the-art machine learning (ML) models are highly effective in classifying gait analysis data; however, they do not provide explanations for their predictions.

Explaining machine learning models for age classification in human gait analysis

no code implementations • 16 Oct 2022 • Djordje Slijepcevic, Fabian Horst, Marvin Simak, Sebastian Lapuschkin, Anna-Maria Raberger, Wojciech Samek, Christian Breiteneder, Wolfgang I. Schöllhorn, Matthias Zeppelzauer, Brian Horsak

Machine learning (ML) models have proven effective in classifying gait analysis data, e.g., binary classification of young vs. older adults.

From Attribution Maps to Human-Understandable Explanations through Concept Relevance Propagation

2 code implementations • 7 Jun 2022 • Reduan Achtibat, Maximilian Dreyer, Ilona Eisenbraun, Sebastian Bosse, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

In this work we introduce the Concept Relevance Propagation (CRP) approach, which combines the local and global perspectives and thus allows answering both the "where" and "what" questions for individual predictions.

FedAUXfdp: Differentially Private One-Shot Federated Distillation

no code implementations • 30 May 2022 • Haley Hoech, Roman Rischke, Karsten Müller, Wojciech Samek

Federated learning suffers in the case of non-iid local datasets, i.e., when the distributions of the clients' data are heterogeneous.

Decentral and Incentivized Federated Learning Frameworks: A Systematic Literature Review

no code implementations • 7 May 2022 • Leon Witt, Mathis Heyer, Kentaroh Toyoda, Wojciech Samek, Dan Li

This is the first systematic literature review analyzing holistic FLFs in the domain of both decentralized and incentivized federated learning.

Explain to Not Forget: Defending Against Catastrophic Forgetting with XAI

no code implementations • 4 May 2022 • Sami Ede, Serop Baghdadlian, Leander Weber, An Nguyen, Dario Zanca, Wojciech Samek, Sebastian Lapuschkin

The ability to continuously process and retain new information like we do naturally as humans is a feat that is highly sought after when training neural networks.

Adaptive Differential Filters for Fast and Communication-Efficient Federated Learning

no code implementations • 9 Apr 2022 • Daniel Becking, Heiner Kirchhoffer, Gerhard Tech, Paul Haase, Karsten Müller, Heiko Schwarz, Wojciech Samek

Federated learning (FL) scenarios inherently generate a large communication overhead by frequently transmitting neural network updates between clients and server.

Beyond Explaining: Opportunities and Challenges of XAI-Based Model Improvement

no code implementations • 15 Mar 2022 • Leander Weber, Sebastian Lapuschkin, Alexander Binder, Wojciech Samek

We conclude that while model improvement based on XAI can have significant beneficial effects even on complex and not easily quantifiable model properties, these methods need to be applied carefully, since their success can vary depending on a multitude of factors, such as the model and dataset used, or the employed explanation method.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Quantus: An Explainable AI Toolkit for Responsible Evaluation of Neural Network Explanations and Beyond

1 code implementation • NeurIPS 2023 • Anna Hedström, Leander Weber, Dilyara Bareeva, Daniel Krakowczyk, Franz Motzkus, Wojciech Samek, Sebastian Lapuschkin, Marina M.-C. Höhne

The evaluation of explanation methods is a research topic that has not yet been explored deeply. However, since explainability is supposed to strengthen trust in artificial intelligence, it is necessary to systematically review and compare explanation methods in order to confirm their correctness.

Navigating Neural Space: Revisiting Concept Activation Vectors to Overcome Directional Divergence

no code implementations • 7 Feb 2022 • Frederik Pahde, Maximilian Dreyer, Leander Weber, Moritz Weckbecker, Christopher J. Anders, Thomas Wiegand, Wojciech Samek, Sebastian Lapuschkin

With a growing interest in understanding neural network prediction strategies, Concept Activation Vectors (CAVs) have emerged as a popular tool for modeling human-understandable concepts in the latent space.

Toward Explainable AI for Regression Models

1 code implementation • 21 Dec 2021 • Simon Letzgus, Patrick Wagner, Jonas Lederer, Wojciech Samek, Klaus-Robert Müller, Gregoire Montavon

In addition to the impressive predictive power of machine learning (ML) models, more recently, explanation methods have emerged that enable an interpretation of complex non-linear learning models such as deep neural networks.

Evaluating deep transfer learning for whole-brain cognitive decoding

1 code implementation • 1 Nov 2021 • Armin W. Thomas, Ulman Lindenberger, Wojciech Samek, Klaus-Robert Müller

Here, we systematically evaluate TL for the application of DL models to the decoding of cognitive states (e.g., viewing images of faces or houses) from whole-brain functional Magnetic Resonance Imaging (fMRI) data.

ECQ$^{\text{x}}$: Explainability-Driven Quantization for Low-Bit and Sparse DNNs

no code implementations • 9 Sep 2021 • Daniel Becking, Maximilian Dreyer, Wojciech Samek, Karsten Müller, Sebastian Lapuschkin

The remarkable success of deep neural networks (DNNs) in various applications is accompanied by a significant increase in network parameters and arithmetic operations.

Reward-Based 1-bit Compressed Federated Distillation on Blockchain

no code implementations • 27 Jun 2021 • Leon Witt, Usama Zafar, KuoYeh Shen, Felix Sattler, Dan Li, Wojciech Samek

The recent advent of various forms of Federated Knowledge Distillation (FD) paves the way for a new generation of robust and communication-efficient Federated Learning (FL), where mere soft-labels are aggregated, rather than whole gradients of Deep Neural Networks (DNN) as done in previous FL schemes.

On the Robustness of Pretraining and Self-Supervision for a Deep Learning-based Analysis of Diabetic Retinopathy

no code implementations • 25 Jun 2021 • Vignesh Srinivasan, Nils Strodthoff, Jackie Ma, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

Our results indicate that models initialized from ImageNet pretraining report a significant increase in performance, generalization and robustness to image distortions.

Software for Dataset-wide XAI: From Local Explanations to Global Insights with Zennit, CoRelAy, and ViRelAy

3 code implementations • 24 Jun 2021 • Christopher J. Anders, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Deep Neural Networks (DNNs) are known to be strong predictors, but their prediction strategies can rarely be understood.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

FedAUX: Leveraging Unlabeled Auxiliary Data in Federated Learning

1 code implementation • 4 Feb 2021 • Felix Sattler, Tim Korjakow, Roman Rischke, Wojciech Samek

Federated Distillation (FD) is a popular novel algorithmic paradigm for Federated Learning, which achieves training performance competitive to prior parameter averaging based methods, while additionally allowing the clients to train different model architectures, by distilling the client predictions on an unlabeled auxiliary set of data into a student model.

FantastIC4: A Hardware-Software Co-Design Approach for Efficiently Running 4bit-Compact Multilayer Perceptrons

no code implementations • 17 Dec 2020 • Simon Wiedemann, Suhas Shivapakash, Pablo Wiedemann, Daniel Becking, Wojciech Samek, Friedel Gerfers, Thomas Wiegand

With the growing demand for deploying deep learning models to the "edge", it is paramount to develop techniques that allow to execute state-of-the-art models within very tight and limited resource constraints.

Communication-Efficient Federated Distillation

no code implementations • 1 Dec 2020 • Felix Sattler, Arturo Marban, Roman Rischke, Wojciech Samek

Communication constraints are one of the major challenges preventing the wide-spread adoption of Federated Learning systems.

A Unifying Review of Deep and Shallow Anomaly Detection

no code implementations • 24 Sep 2020 • Lukas Ruff, Jacob R. Kauffmann, Robert A. Vandermeulen, Grégoire Montavon, Wojciech Samek, Marius Kloft, Thomas G. Dietterich, Klaus-Robert Müller

Deep learning approaches to anomaly detection have recently improved the state of the art in detection performance on complex datasets such as large collections of images or text.

Langevin Cooling for Domain Translation

1 code implementation • 31 Aug 2020 • Vignesh Srinivasan, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Domain translation is the task of finding correspondence between two domains.

Explanation-Guided Training for Cross-Domain Few-Shot Classification

1 code implementation • 17 Jul 2020 • Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Yunqing Zhao, Ngai-Man Cheung, Alexander Binder

It leverages on the explanation scores, obtained from existing explanation methods when applied to the predictions of FSC models, computed for intermediate feature maps of the models.

Ranked #8 on Cross-Domain Few-Shot on ISIC2018

MixMOOD: A systematic approach to class distribution mismatch in semi-supervised learning using deep dataset dissimilarity measures

1 code implementation • 14 Jun 2020 • Saul Calderon-Ramirez, Luis Oala, Jordina Torrents-Barrena, Shengxiang Yang, Armaghan Moemeni, Wojciech Samek, Miguel A. Molina-Cabello

In this work, we propose MixMOOD - a systematic approach to mitigate the effect of class distribution mismatch in semi-supervised deep learning (SSDL) with MixMatch.

Deep Learning for ECG Analysis: Benchmarks and Insights from PTB-XL

2 code implementations • 28 Apr 2020 • Nils Strodthoff, Patrick Wagner, Tobias Schaeffter, Wojciech Samek

Electrocardiography is a very common, non-invasive diagnostic procedure and its interpretation is increasingly supported by automatic interpretation algorithms.

Understanding Integrated Gradients with SmoothTaylor for Deep Neural Network Attribution

1 code implementation • arXiv 2020 • Gary S. W. Goh, Sebastian Lapuschkin, Leander Weber, Wojciech Samek, Alexander Binder

From our experiments, we find that the SmoothTaylor approach together with adaptive noising is able to generate better quality saliency maps with less noise and higher sensitivity to the relevant points in the input space as compared to Integrated Gradients.

Risk Estimation of SARS-CoV-2 Transmission from Bluetooth Low Energy Measurements

no code implementations • 22 Apr 2020 • Felix Sattler, Jackie Ma, Patrick Wagner, David Neumann, Markus Wenzel, Ralf Schäfer, Wojciech Samek, Klaus-Robert Müller, Thomas Wiegand

Digital contact tracing approaches based on Bluetooth low energy (BLE) have the potential to efficiently contain and delay outbreaks of infectious diseases such as the ongoing SARS-CoV-2 pandemic.

Dithered backprop: A sparse and quantized backpropagation algorithm for more efficient deep neural network training

no code implementations • 9 Apr 2020 • Simon Wiedemann, Temesgen Mehari, Kevin Kepp, Wojciech Samek

In this work we propose a method for reducing the computational cost of backprop, which we named dithered backprop.

Learning Sparse & Ternary Neural Networks with Entropy-Constrained Trained Ternarization (EC2T)

2 code implementations • 2 Apr 2020 • Arturo Marban, Daniel Becking, Simon Wiedemann, Wojciech Samek

To address this problem, we propose Entropy-Constrained Trained Ternarization (EC2T), a general framework to create sparse and ternary neural networks which are efficient in terms of storage (e.g., at most two binary-masks and two full-precision values are required to save a weight matrix) and computation (e.g., MAC operations are reduced to a few accumulations plus two multiplications).

Interval Neural Networks as Instability Detectors for Image Reconstructions

1 code implementation • 27 Mar 2020 • Jan Macdonald, Maximilian März, Luis Oala, Wojciech Samek

This work investigates the detection of instabilities that may occur when utilizing deep learning models for image reconstruction tasks.

Interval Neural Networks: Uncertainty Scores

1 code implementation • 25 Mar 2020 • Luis Oala, Cosmas Heiß, Jan Macdonald, Maximilian März, Wojciech Samek, Gitta Kutyniok

We propose a fast, non-Bayesian method for producing uncertainty scores in the output of pre-trained deep neural networks (DNNs) using a data-driven interval propagating network.

Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications

no code implementations • 17 Mar 2020 • Wojciech Samek, Grégoire Montavon, Sebastian Lapuschkin, Christopher J. Anders, Klaus-Robert Müller

With the broader and highly successful usage of machine learning in industry and the sciences, there has been a growing demand for Explainable AI.

Ground Truth Evaluation of Neural Network Explanations with CLEVR-XAI

2 code implementations • 16 Mar 2020 • Leila Arras, Ahmed Osman, Wojciech Samek

The rise of deep learning in today's applications entailed an increasing need in explaining the model's decisions beyond prediction performances in order to foster trust and accountability.

Trends and Advancements in Deep Neural Network Communication

no code implementations • 6 Mar 2020 • Felix Sattler, Thomas Wiegand, Wojciech Samek

Due to their great performance and scalability properties neural networks have become ubiquitous building blocks of many applications.

Explain and Improve: LRP-Inference Fine-Tuning for Image Captioning Models

1 code implementation • 4 Jan 2020 • Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Alexander Binder

We develop variants of layer-wise relevance propagation (LRP) and gradient-based explanation methods, tailored to image captioning models with attention mechanisms.

Finding and Removing Clever Hans: Using Explanation Methods to Debug and Improve Deep Models

2 code implementations • 22 Dec 2019 • Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Based on a recent technique - Spectral Relevance Analysis - we propose the following technical contributions and resulting findings: (a) a scalable quantification of artifactual and poisoned classes where the machine learning models under study exhibit CH behavior, (b) several approaches denoted as Class Artifact Compensation (ClArC), which are able to effectively and significantly reduce a model's CH behavior.

Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning

1 code implementation • 18 Dec 2019 • Seul-Ki Yeom, Philipp Seegerer, Sebastian Lapuschkin, Alexander Binder, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

The success of convolutional neural networks (CNNs) in various applications is accompanied by a significant increase in computation and parameter storage costs.

Explainable Artificial Intelligence (XAI)

Model Compression

+2

On the Explanation of Machine Learning Predictions in Clinical Gait Analysis

2 code implementations • 16 Dec 2019 • Djordje Slijepcevic, Fabian Horst, Sebastian Lapuschkin, Anna-Maria Raberger, Matthias Zeppelzauer, Wojciech Samek, Christian Breiteneder, Wolfgang I. Schöllhorn, Brian Horsak

Machine learning (ML) is increasingly used to support decision-making in the healthcare sector.

Asymptotically unbiased estimation of physical observables with neural samplers

no code implementations • 29 Oct 2019 • Kim A. Nicoli, Shinichi Nakajima, Nils Strodthoff, Wojciech Samek, Klaus-Robert Müller, Pan Kessel

We propose a general framework for the estimation of observables with generative neural samplers focusing on modern deep generative neural networks that provide an exact sampling probability.

Towards Best Practice in Explaining Neural Network Decisions with LRP

1 code implementation • 22 Oct 2019 • Maximilian Kohlbrenner, Alexander Bauer, Shinichi Nakajima, Alexander Binder, Wojciech Samek, Sebastian Lapuschkin

In this paper, we focus on a popular and widely used method of XAI, the Layer-wise Relevance Propagation (LRP).

Ranked #1 on Object Detection on SIXray

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

+3

Clustered Federated Learning: Model-Agnostic Distributed Multi-Task Optimization under Privacy Constraints

2 code implementations • 4 Oct 2019 • Felix Sattler, Klaus-Robert Müller, Wojciech Samek

Federated Learning (FL) is currently the most widely adopted framework for collaborative training of (deep) machine learning models under privacy constraints.

Towards Explainable Artificial Intelligence

no code implementations • 26 Sep 2019 • Wojciech Samek, Klaus-Robert Müller

Deep learning models are at the forefront of this development.

Explaining and Interpreting LSTMs

no code implementations • 25 Sep 2019 • Leila Arras, Jose A. Arjona-Medina, Michael Widrich, Grégoire Montavon, Michael Gillhofer, Klaus-Robert Müller, Sepp Hochreiter, Wojciech Samek

While neural networks have acted as a strong unifying force in the design of modern AI systems, the neural network architectures themselves remain highly heterogeneous due to the variety of tasks to be solved.

Resolving challenges in deep learning-based analyses of histopathological images using explanation methods

no code implementations • 15 Aug 2019 • Miriam Hägele, Philipp Seegerer, Sebastian Lapuschkin, Michael Bockmayr, Wojciech Samek, Frederick Klauschen, Klaus-Robert Müller, Alexander Binder

Deep learning has recently gained popularity in digital pathology due to its high prediction quality.

DeepCABAC: A Universal Compression Algorithm for Deep Neural Networks

1 code implementation • 27 Jul 2019 • Simon Wiedemann, Heiner Kirchoffer, Stefan Matlage, Paul Haase, Arturo Marban, Talmaj Marinc, David Neumann, Tung Nguyen, Ahmed Osman, Detlev Marpe, Heiko Schwarz, Thomas Wiegand, Wojciech Samek

The field of video compression has developed some of the most sophisticated and efficient compression algorithms known in the literature, enabling very high compressibility for little loss of information.

Deep Transfer Learning For Whole-Brain fMRI Analyses

no code implementations • 2 Jul 2019 • Armin W. Thomas, Klaus-Robert Müller, Wojciech Samek

Even further, the pre-trained DL model variant is already able to correctly decode 67.51% of the cognitive states from a test dataset with 100 individuals, when fine-tuned on a dataset of the size of only three subjects.

From Clustering to Cluster Explanations via Neural Networks

no code implementations • 18 Jun 2019 • Jacob Kauffmann, Malte Esders, Lukas Ruff, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

Cluster predictions of the obtained networks can then be quickly and accurately attributed to the input features.

Achieving Generalizable Robustness of Deep Neural Networks by Stability Training

no code implementations • 3 Jun 2019 • Jan Laermann, Wojciech Samek, Nils Strodthoff

We study the recently introduced stability training as a general-purpose method to increase the robustness of deep neural networks against input perturbations.

DeepCABAC: Context-adaptive binary arithmetic coding for deep neural network compression

no code implementations • 15 May 2019 • Simon Wiedemann, Heiner Kirchhoffer, Stefan Matlage, Paul Haase, Arturo Marban, Talmaj Marinc, David Neumann, Ahmed Osman, Detlev Marpe, Heiko Schwarz, Thomas Wiegand, Wojciech Samek

We present DeepCABAC, a novel context-adaptive binary arithmetic coder for compressing deep neural networks.

Evaluating Recurrent Neural Network Explanations

1 code implementation • WS 2019 • Leila Arras, Ahmed Osman, Klaus-Robert Müller, Wojciech Samek

Recently, several methods have been proposed to explain the predictions of recurrent neural networks (RNNs), in particular of LSTMs.

Black-Box Decision based Adversarial Attack with Symmetric $α$-stable Distribution

no code implementations • 11 Apr 2019 • Vignesh Srinivasan, Ercan E. Kuruoglu, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Many existing methods employ Gaussian random variables for exploring the data space to find the most adversarial (for attacking) or least adversarial (for defense) point.

Comment on "Solving Statistical Mechanics Using VANs": Introducing saVANt - VANs Enhanced by Importance and MCMC Sampling

no code implementations • 26 Mar 2019 • Kim Nicoli, Pan Kessel, Nils Strodthoff, Wojciech Samek, Klaus-Robert Müller, Shinichi Nakajima

In this comment on "Solving Statistical Mechanics Using Variational Autoregressive Networks" by Wu et al., we propose a subtle yet powerful modification of their approach.

Robust and Communication-Efficient Federated Learning from Non-IID Data

1 code implementation • 7 Mar 2019 • Felix Sattler, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

Federated Learning allows multiple parties to jointly train a deep learning model on their combined data, without any of the participants having to reveal their local data to a centralized server.

Unmasking Clever Hans Predictors and Assessing What Machines Really Learn

1 code implementation • 26 Feb 2019 • Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

Current learning machines have successfully solved hard application problems, reaching high accuracy and displaying seemingly "intelligent" behavior.

Multi-Kernel Prediction Networks for Denoising of Burst Images

2 code implementations • 5 Feb 2019 • Talmaj Marinč, Vignesh Srinivasan, Serhan Gül, Cornelius Hellge, Wojciech Samek

The advantages of our method are twofold: (a) the different sized kernels help in extracting different information from the image, which results in better reconstruction, and (b) kernel fusion ensures that the extracted information is retained while maintaining computational efficiency.

Entropy-Constrained Training of Deep Neural Networks

no code implementations • 18 Dec 2018 • Simon Wiedemann, Arturo Marban, Klaus-Robert Müller, Wojciech Samek

We propose a general framework for neural network compression that is motivated by the Minimum Description Length (MDL) principle.

Analyzing Neuroimaging Data Through Recurrent Deep Learning Models

1 code implementation • 23 Oct 2018 • Armin W. Thomas, Hauke R. Heekeren, Klaus-Robert Müller, Wojciech Samek

We further demonstrate DeepLight's ability to study the fine-grained temporo-spatial variability of brain activity over sequences of single fMRI samples.

Compact and Computationally Efficient Representations of Deep Neural Networks

no code implementations • NIPS Workshop CDNNRIA 2018 • Simon Wiedemann, Klaus-Robert Mueller, Wojciech Samek

However, most of these common matrix storage formats make strong statistical assumptions about the distribution of the elements in the matrix, and can therefore not efficiently represent the entire set of matrices that exhibit low entropy statistics (thus, the entire set of compressed neural network weight matrices).

iNNvestigate neural networks!

1 code implementation • 13 Aug 2018 • Maximilian Alber, Sebastian Lapuschkin, Philipp Seegerer, Miriam Hägele, Kristof T. Schütt, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller, Sven Dähne, Pieter-Jan Kindermans

The presented library iNNvestigate addresses this by providing a common interface and out-of-the-box implementation for many analysis methods, including the reference implementation for PatternNet and PatternAttribution as well as for LRP-methods.

Explaining the Unique Nature of Individual Gait Patterns with Deep Learning

1 code implementation • 13 Aug 2018 • Fabian Horst, Sebastian Lapuschkin, Wojciech Samek, Klaus-Robert Müller, Wolfgang I. Schöllhorn

Machine learning (ML) techniques such as (deep) artificial neural networks (DNN) are very successfully solving a plethora of tasks and providing new predictive models for complex physical, chemical, biological and social systems.

Enhanced Machine Learning Techniques for Early HARQ Feedback Prediction in 5G

no code implementations • 27 Jul 2018 • Nils Strodthoff, Barış Göktepe, Thomas Schierl, Cornelius Hellge, Wojciech Samek

We investigate Early Hybrid Automatic Repeat reQuest (E-HARQ) feedback schemes enhanced by machine learning techniques as a path towards ultra-reliable and low-latency communication (URLLC).

AudioMNIST: Exploring Explainable Artificial Intelligence for Audio Analysis on a Simple Benchmark

2 code implementations • 9 Jul 2018 • Sören Becker, Johanna Vielhaben, Marcel Ackermann, Klaus-Robert Müller, Sebastian Lapuschkin, Wojciech Samek

Explainable Artificial Intelligence (XAI) is targeted at understanding how models perform feature selection and derive their classification decisions.

Accurate and Robust Neural Networks for Security Related Applications Exampled by Face Morphing Attacks

no code implementations • 11 Jun 2018 • Clemens Seibold, Wojciech Samek, Anna Hilsmann, Peter Eisert

Artificial neural networks tend to learn only what they need for a task.

Understanding Patch-Based Learning by Explaining Predictions

no code implementations • 11 Jun 2018 • Christopher Anders, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

We apply the deep Taylor / LRP technique to understand the deep network's classification decisions, and identify a "border effect": a tendency of the classifier to look mainly at the bordering frames of the input.

Robustifying Models Against Adversarial Attacks by Langevin Dynamics

no code implementations • 30 May 2018 • Vignesh Srinivasan, Arturo Marban, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Adversarial attacks on deep learning models have compromised their performance considerably.

Compact and Computationally Efficient Representation of Deep Neural Networks

no code implementations • 27 May 2018 • Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

These new matrix formats have the novel property that their memory and algorithmic complexity are implicitly bounded by the entropy of the matrix, consequently implying that they are guaranteed to become more efficient as the entropy of the matrix is being reduced.

Sparse Binary Compression: Towards Distributed Deep Learning with minimal Communication

no code implementations • 22 May 2018 • Felix Sattler, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

A major issue in distributed training is the limited communication bandwidth between contributing nodes or prohibitive communication cost in general.

A Recurrent Convolutional Neural Network Approach for Sensorless Force Estimation in Robotic Surgery

no code implementations • 22 May 2018 • Arturo Marban, Vignesh Srinivasan, Wojciech Samek, Josep Fernández, Alicia Casals

The results suggest that the force estimation quality is better when both the tool data and the video sequences are processed by the neural network model.

Dual Recurrent Attention Units for Visual Question Answering

1 code implementation • 1 Feb 2018 • Ahmed Osman, Wojciech Samek

First, we introduce a baseline VQA model with visual attention and test the performance difference between convolutional and recurrent attention on the VQA 2.0 dataset.

The Convergence of Machine Learning and Communications

no code implementations • 28 Aug 2017 • Wojciech Samek, Slawomir Stanczak, Thomas Wiegand

The areas of machine learning and communication technology are converging.

Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models

no code implementations • 28 Aug 2017 • Wojciech Samek, Thomas Wiegand, Klaus-Robert Müller

With the availability of large databases and recent improvements in deep learning methodology, the performance of AI systems is reaching or even exceeding the human level on an increasing number of complex tasks.

Explainable artificial intelligence

General Classification

+2

General Classification

+2

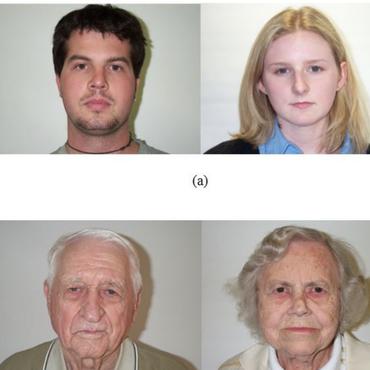

Understanding and Comparing Deep Neural Networks for Age and Gender Classification

no code implementations • 25 Aug 2017 • Sebastian Lapuschkin, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

Recently, deep neural networks have demonstrated excellent performances in recognizing the age and gender on human face images.

Discovering topics in text datasets by visualizing relevant words

1 code implementation • 18 Jul 2017 • Franziska Horn, Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

When dealing with large collections of documents, it is imperative to quickly get an overview of the texts' contents.

Exploring text datasets by visualizing relevant words

2 code implementations • 17 Jul 2017 • Franziska Horn, Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

When working with a new dataset, it is important to first explore and familiarize oneself with it, before applying any advanced machine learning algorithms.

Methods for Interpreting and Understanding Deep Neural Networks

no code implementations • 24 Jun 2017 • Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

This paper provides an entry point to the problem of interpreting a deep neural network model and explaining its predictions.

Explaining Recurrent Neural Network Predictions in Sentiment Analysis

1 code implementation • WS 2017 • Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Recently, a technique called Layer-wise Relevance Propagation (LRP) was shown to deliver insightful explanations in the form of input space relevances for understanding feed-forward neural network classification decisions.

"What is Relevant in a Text Document?": An Interpretable Machine Learning Approach

1 code implementation • 23 Dec 2016 • Leila Arras, Franziska Horn, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Text documents can be described by a number of abstract concepts such as semantic category, writing style, or sentiment.

Deep Neural Networks for No-Reference and Full-Reference Image Quality Assessment

2 code implementations • 6 Dec 2016 • Sebastian Bosse, Dominique Maniry, Klaus-Robert Müller, Thomas Wiegand, Wojciech Samek

We present a deep neural network-based approach to image quality assessment (IQA).

Interpreting the Predictions of Complex ML Models by Layer-wise Relevance Propagation

no code implementations • 24 Nov 2016 • Wojciech Samek, Grégoire Montavon, Alexander Binder, Sebastian Lapuschkin, Klaus-Robert Müller

Complex nonlinear models such as deep neural networks (DNNs) have become an important tool for image classification, speech recognition, natural language processing, and many other fields of application.

Sharing Hash Codes for Multiple Purposes

no code implementations • 11 Sep 2016 • Wiktor Pronobis, Danny Panknin, Johannes Kirschnick, Vignesh Srinivasan, Wojciech Samek, Volker Markl, Manohar Kaul, Klaus-Robert Mueller, Shinichi Nakajima

In this paper, we propose multiple purpose LSH (mp-LSH), which shares the hash codes for different dissimilarities.

Object Boundary Detection and Classification with Image-level Labels

no code implementations • 29 Jun 2016 • Jing Yu Koh, Wojciech Samek, Klaus-Robert Müller, Alexander Binder

We propose a novel strategy for solving this task, when pixel-level annotations are not available, performing it in an almost zero-shot manner by relying on conventional whole image neural net classifiers that were trained using large bounding boxes.

Identifying individual facial expressions by deconstructing a neural network

no code implementations • 23 Jun 2016 • Farhad Arbabzadah, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

We further observe that the explanation method provides important insights into the nature of features of the base model, which allow one to assess the aptitude of the base model for a given transfer learning task.

Explaining Predictions of Non-Linear Classifiers in NLP

1 code implementation • WS 2016 • Leila Arras, Franziska Horn, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Layer-wise relevance propagation (LRP) is a recently proposed technique for explaining predictions of complex non-linear classifiers in terms of input variables.

Interpretable Deep Neural Networks for Single-Trial EEG Classification

no code implementations • 27 Apr 2016 • Irene Sturm, Sebastian Bach, Wojciech Samek, Klaus-Robert Müller

With LRP a new quality of high-resolution assessment of neural activity can be reached.

Layer-wise Relevance Propagation for Neural Networks with Local Renormalization Layers

no code implementations • 4 Apr 2016 • Alexander Binder, Grégoire Montavon, Sebastian Bach, Klaus-Robert Müller, Wojciech Samek

Layer-wise relevance propagation is a framework that decomposes the prediction of a deep neural network computed over a sample, e.g. an image, down to relevance scores for the single input dimensions of the sample, such as subpixels of an image.

Controlling Explanatory Heatmap Resolution and Semantics via Decomposition Depth

no code implementations • 21 Mar 2016 • Sebastian Bach, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

We present an application of the Layer-wise Relevance Propagation (LRP) algorithm to state of the art deep convolutional neural networks and Fisher Vector classifiers to compare the image perception and prediction strategies of both classifiers with the use of visualized heatmaps.

Explaining NonLinear Classification Decisions with Deep Taylor Decomposition

4 code implementations • 8 Dec 2015 • Grégoire Montavon, Sebastian Bach, Alexander Binder, Wojciech Samek, Klaus-Robert Müller

Although our focus is on image classification, the method is applicable to a broad set of input data, learning tasks and network architectures.

Analyzing Classifiers: Fisher Vectors and Deep Neural Networks

no code implementations • CVPR 2016 • Sebastian Bach, Alexander Binder, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Fisher Vector classifiers and Deep Neural Networks (DNNs) are popular and successful algorithms for solving image classification problems.

Evaluating the visualization of what a Deep Neural Network has learned

1 code implementation • 21 Sep 2015 • Wojciech Samek, Alexander Binder, Grégoire Montavon, Sebastian Bach, Klaus-Robert Müller

Our main result is that the recently proposed Layer-wise Relevance Propagation (LRP) algorithm qualitatively and quantitatively provides a better explanation of what made a DNN arrive at a particular classification decision than the sensitivity-based approach or the deconvolution method.

Robust Spatial Filtering with Beta Divergence

no code implementations • NeurIPS 2013 • Wojciech Samek, Duncan Blythe, Klaus-Robert Müller, Motoaki Kawanabe

The efficiency of Brain-Computer Interfaces (BCI) largely depends upon a reliable extraction of informative features from the high-dimensional EEG signal.

Multiple Kernel Learning for Brain-Computer Interfacing

no code implementations • 22 Oct 2013 • Wojciech Samek, Alexander Binder, Klaus-Robert Müller

Combining information from different sources is a common way to improve classification accuracy in Brain-Computer Interfacing (BCI).

Transferring Subspaces Between Subjects in Brain-Computer Interfacing

no code implementations • 18 Sep 2012 • Wojciech Samek, Frank C. Meinecke, Klaus-Robert Müller

Compensating for changes between a subject's training and testing sessions in Brain-Computer Interfacing (BCI) is challenging but of great importance for robust BCI operation.