Search Results for author: Yen-Yu Lin

Found 46 papers, 21 papers with code

Image-Text Co-Decomposition for Text-Supervised Semantic Segmentation

no code implementations • 5 Apr 2024 • Ji-Jia Wu, Andy Chia-Hao Chang, Chieh-Yu Chuang, Chun-Pei Chen, Yu-Lun Liu, Min-Hung Chen, Hou-Ning Hu, Yung-Yu Chuang, Yen-Yu Lin

This paper addresses text-supervised semantic segmentation, aiming to learn a model capable of segmenting arbitrary visual concepts within images by using only image-text pairs without dense annotations.

ViStripformer: A Token-Efficient Transformer for Versatile Video Restoration

1 code implementation • 22 Dec 2023 • Fu-Jen Tsai, Yan-Tsung Peng, Chen-Yu Chang, Chan-Yu Li, Yen-Yu Lin, Chung-Chi Tsai, Chia-Wen Lin

Besides, ViStripformer is an effective and efficient transformer architecture with much lower memory usage than the vanilla transformer.
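A rough count of attention-matrix entries shows where the memory saving comes from. This sketch makes several simplifying assumptions:

- The input is an H×W grid of tokens.
- Full self-attention is compared with attention restricted to horizontal strips (rows) and vertical strips (columns).
- Heads, channels, the temporal dimension and any inter-strip tokens are ignored.

So it shows only how the two costs scale, not ViStripformer's actual memory use.

```python
def full_attention_entries(h: int, w: int) -> int:
    """Entries in a single attention matrix over all h * w tokens."""
    n = h * w
    return n * n


def strip_attention_entries(h: int, w: int) -> int:
    """Entries when attention is restricted to rows and to columns.

    Each of the h rows has a (w x w) matrix and each of the w columns an (h x h) matrix.
    """
    return h * (w * w) + w * (h * h)


if __name__ == "__main__":
    h, w = 64, 64
    full = full_attention_entries(h, w)
    strip = strip_attention_entries(h, w)
    print(full, strip, full / strip)  # 16777216 524288 32.0
```

In general the ratio is HW/(H+W), which equals H/2 for a square grid. The gap therefore widens as resolution grows.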

ID-Blau: Image Deblurring by Implicit Diffusion-based reBLurring AUgmentation

no code implementations • 18 Dec 2023 • Jia-Hao Wu, Fu-Jen Tsai, Yan-Tsung Peng, Chung-Chi Tsai, Chia-Wen Lin, Yen-Yu Lin

We parameterize the blur patterns of a blurred image with their orientations and magnitudes as a pixel-wise blur condition map to simulate motion trajectories and implicitly represent them in a continuous space.
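A pixel-wise blur condition map of this kind can be sketched as a two-channel array. The encoding below is an illustrative assumption, not ID-Blau's exact parameterization.

- Each pixel stores (m·cos 2θ, m·sin 2θ), where θ is the orientation in [0, π) and m is the magnitude.
- The angle is doubled so that θ and θ + π, which describe the same blur streak, map to the same vector.
- Because the space is continuous, scaling or interpolating maps yields intermediate blur conditions.

```python
import numpy as np


def encode_blur_condition(orientation: np.ndarray, magnitude: np.ndarray) -> np.ndarray:
    """Pack per-pixel blur orientation (radians in [0, pi)) and magnitude into a 2-channel map.

    Doubling the angle makes theta and theta + pi, which describe the same blur streak,
    land on the same vector, so the map varies continuously with the blur pattern.
    """
    return np.stack([magnitude * np.cos(2.0 * orientation),
                     magnitude * np.sin(2.0 * orientation)], axis=0)


def decode_blur_condition(cond: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Recover (orientation in [0, pi), magnitude) from a 2-channel condition map."""
    magnitude = np.hypot(cond[0], cond[1])
    orientation = np.mod(np.arctan2(cond[1], cond[0]) / 2.0, np.pi)
    return orientation, magnitude


if __name__ == "__main__":
    theta = np.full((4, 4), np.pi / 6)   # uniform 30-degree blur
    mag = np.full((4, 4), 3.0)           # hypothetical blur length in pixels
    cond = encode_blur_condition(theta, mag)
    # Halving the map keeps the orientation and halves the magnitude.
    o, m = decode_blur_condition(0.5 * cond)
    print(cond.shape, float(o[0, 0]), float(m[0, 0]))
```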

PartDistill: 3D Shape Part Segmentation by Vision-Language Model Distillation

1 code implementation • 7 Dec 2023 • Ardian Umam, Cheng-Kun Yang, Min-Hung Chen, Jen-Hui Chuang, Yen-Yu Lin

This paper proposes a cross-modal distillation framework, PartDistill, which transfers 2D knowledge from vision-language models (VLMs) to facilitate 3D shape part segmentation.

Diffusion-SS3D: Diffusion Model for Semi-supervised 3D Object Detection

1 code implementation • NeurIPS 2023 • Cheng-Ju Ho, Chen-Hsuan Tai, Yen-Yu Lin, Ming-Hsuan Yang, Yi-Hsuan Tsai

Semi-supervised object detection is crucial for 3D scene understanding, efficiently addressing the limitation of acquiring large-scale 3D bounding box annotations.

2D-3D Interlaced Transformer for Point Cloud Segmentation with Scene-Level Supervision

no code implementations • ICCV 2023 • Cheng-Kun Yang, Min-Hung Chen, Yung-Yu Chuang, Yen-Yu Lin

Considering the high annotation cost of point clouds, effective 2D and 3D feature fusion based on weakly supervised learning is in great demand.

Learning Continuous Exposure Value Representations for Single-Image HDR Reconstruction

no code implementations • ICCV 2023 • Su-Kai Chen, Hung-Lin Yen, Yu-Lun Liu, Min-Hung Chen, Hou-Ning Hu, Wen-Hsiao Peng, Yen-Yu Lin

To address this, we propose the continuous exposure value representation (CEVR), which uses an implicit function to generate LDR images with arbitrary EVs, including those unseen during training.
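Rendering an LDR image at an arbitrary, even fractional, exposure value can be illustrated with a fixed camera model. This is not CEVR's learned implicit function. It assumes:

- linear scene radiance;
- an exposure multiplier of 2^EV, so one EV step doubles or halves the light;
- clipping at saturation;
- a simple gamma curve standing in for the camera response.

```python
import numpy as np


def render_ldr(radiance: np.ndarray, ev: float, gamma: float = 2.2) -> np.ndarray:
    """Simulate an LDR image at a (possibly fractional) exposure value offset.

    Assumptions: linear scene radiance, exposure scaled by 2**ev, saturation clipped
    at 1.0, and a gamma curve standing in for the camera response function.
    """
    exposed = np.clip(radiance * 2.0 ** ev, 0.0, 1.0)
    return exposed ** (1.0 / gamma)


if __name__ == "__main__":
    radiance = np.array([0.05, 0.25, 0.5, 2.0])
    for ev in (-1.0, 0.0, 0.5, 1.0):   # EV 0.5 lies between the integer stops
        print(ev, np.round(render_ldr(radiance, ev), 3))
```

A learned model replaces the hand-picked curve with one fitted to data. The interface stays the same: an image plus a continuous EV in, an LDR image out.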

MoTIF: Learning Motion Trajectories with Local Implicit Neural Functions for Continuous Space-Time Video Super-Resolution

1 code implementation • ICCV 2023 • Si-Cun Chen, Yi-Hsin Chen, Yen-Yu Lin, Wen-Hsiao Peng

We motivate the use of forward motion from the perspective of learning individual motion trajectories, as opposed to learning a mixture of motion trajectories with backward motion.

Learning Object-level Point Augmentor for Semi-supervised 3D Object Detection

1 code implementation • 19 Dec 2022 • Cheng-Ju Ho, Chen-Hsuan Tai, Yi-Hsuan Tsai, Yen-Yu Lin, Ming-Hsuan Yang

In this work, we propose an object-level point augmentor (OPA) that performs local transformations for semi-supervised 3D object detection.
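An object-level local transformation moves only the points inside one object's box, in object-centric coordinates, and leaves the rest of the scene untouched. The helper below is hypothetical and illustrates only these mechanics. It is not OPA itself, which learns which transformations to apply. It assumes axis-aligned boxes given by center and size, and applies a yaw rotation, a uniform scale and a shift.

```python
import numpy as np


def points_in_box(points: np.ndarray, center: np.ndarray, size: np.ndarray) -> np.ndarray:
    """Boolean mask of points that fall inside an axis-aligned box."""
    return np.all(np.abs(points - center) <= size / 2.0, axis=1)


def local_transform(points, center, size, yaw, scale, shift):
    """Rotate (about z), scale and shift only the points inside one object box.

    Returns the augmented cloud, the new box center and the new box size; the box
    heading also changes by `yaw`. Points outside the box are left unchanged.
    """
    points = np.array(points, dtype=float)          # copy, so the input is not modified
    center = np.asarray(center, dtype=float)
    size = np.asarray(size, dtype=float)
    mask = points_in_box(points, center, size)
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    local = points[mask] - center                   # object-centric coordinates
    local = scale * (local @ rot.T)                 # rotate about z, then scale
    new_center = center + np.asarray(shift, dtype=float)
    points[mask] = local + new_center               # place the object at its new location
    return points, new_center, size * scale
```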

Meta Transferring for Deblurring

1 code implementation • 14 Oct 2022 • Po-Sheng Liu, Fu-Jen Tsai, Yan-Tsung Peng, Chung-Chi Tsai, Chia-Wen Lin, Yen-Yu Lin

Most previous deblurring methods were built with a generic model trained on blurred images and their sharp counterparts.

Stripformer: Strip Transformer for Fast Image Deblurring

1 code implementation • 10 Apr 2022 • Fu-Jen Tsai, Yan-Tsung Peng, Yen-Yu Lin, Chung-Chi Tsai, Chia-Wen Lin

Images taken in dynamic scenes may contain unwanted motion blur, which significantly degrades visual quality.

Ranked #2 on Deblurring on RealBlur-R

An MIL-Derived Transformer for Weakly Supervised Point Cloud Segmentation

no code implementations • CVPR 2022 • Cheng-Kun Yang, Ji-Jia Wu, Kai-Syun Chen, Yung-Yu Chuang, Yen-Yu Lin

We address weakly supervised point cloud segmentation by proposing a new model, MIL-derived transformer, to mine additional supervisory signals.

Exploring Cross-Video and Cross-Modality Signals for Weakly-Supervised Audio-Visual Video Parsing

1 code implementation • NeurIPS 2021 • Yan-Bo Lin, Hung-Yu Tseng, Hsin-Ying Lee, Yen-Yu Lin, Ming-Hsuan Yang

The audio-visual video parsing task aims to temporally parse a video into audio or visual event categories.

Unsupervised Sound Localization via Iterative Contrastive Learning

no code implementations • 1 Apr 2021 • Yan-Bo Lin, Hung-Yu Tseng, Hsin-Ying Lee, Yen-Yu Lin, Ming-Hsuan Yang

Sound localization aims to find the source of the audio signal in the visual scene.

BANet: Blur-aware Attention Networks for Dynamic Scene Deblurring

1 code implementation • 19 Jan 2021 • Fu-Jen Tsai, Yan-Tsung Peng, Yen-Yu Lin, Chung-Chi Tsai, Chia-Wen Lin

Image motion blur results from a combination of object motions and camera shakes, and such blurring effect is generally directional and non-uniform.

Ranked #5 on Deblurring on RealBlur-R

Unsupervised Point Cloud Object Co-Segmentation by Co-Contrastive Learning and Mutual Attention Sampling

1 code implementation • ICCV 2021 • Cheng-Kun Yang, Yung-Yu Chuang, Yen-Yu Lin

We formulate this task as an object point sampling problem, and develop two techniques, the mutual attention module and co-contrastive learning, to enable it.

Temporal-Aware Self-Supervised Learning for 3D Hand Pose and Mesh Estimation in Videos

no code implementations • 6 Dec 2020 • Liangjian Chen, Shih-Yao Lin, Yusheng Xie, Yen-Yu Lin, Xiaohui Xie

Experiments show that our model achieves surprisingly good results, with 3D estimation accuracy on par with the state-of-the-art models trained with 3D annotations, highlighting the benefit of the temporal consistency in constraining 3D prediction models.

MVHM: A Large-Scale Multi-View Hand Mesh Benchmark for Accurate 3D Hand Pose Estimation

no code implementations • 6 Dec 2020 • Liangjian Chen, Shih-Yao Lin, Yusheng Xie, Yen-Yu Lin, Xiaohui Xie

Based on the match algorithm, we propose an efficient pipeline to generate a large-scale multi-view hand mesh (MVHM) dataset with accurate 3D hand mesh and joint labels.

DGGAN: Depth-image Guided Generative Adversarial Networks for Disentangling RGB and Depth Images in 3D Hand Pose Estimation

no code implementations • 6 Dec 2020 • Liangjian Chen, Shih-Yao Lin, Yusheng Xie, Yen-Yu Lin, Wei Fan, Xiaohui Xie

Estimating 3D hand poses from RGB images is essential to a wide range of potential applications, but is challenging owing to substantial ambiguity in the inference of depth information from RGB images.

MM-Hand: 3D-Aware Multi-Modal Guided Hand Generative Network for 3D Hand Pose Synthesis

1 code implementation • 2 Oct 2020 • Zhenyu Wu, Duc Hoang, Shih-Yao Lin, Yusheng Xie, Liangjian Chen, Yen-Yu Lin, Zhangyang Wang, Wei Fan

Estimating the 3D hand pose from a monocular RGB image is important but challenging.

Every Pixel Matters: Center-aware Feature Alignment for Domain Adaptive Object Detector

1 code implementation • ECCV 2020 • Cheng-Chun Hsu, Yi-Hsuan Tsai, Yen-Yu Lin, Ming-Hsuan Yang

A domain adaptive object detector aims to adapt itself to unseen domains that may contain variations of object appearance, viewpoints or backgrounds.

Regularizing Meta-Learning via Gradient Dropout

1 code implementation • 13 Apr 2020 • Hung-Yu Tseng, Yi-Wen Chen, Yi-Hsuan Tsai, Sifei Liu, Yen-Yu Lin, Ming-Hsuan Yang

With the growing attention on learning-to-learn new tasks using only a few examples, meta-learning has been widely used in numerous problems such as few-shot classification, reinforcement learning, and domain generalization.

Deep Semantic Matching with Foreground Detection and Cycle-Consistency

no code implementations • 31 Mar 2020 • Yun-Chun Chen, Po-Hsiang Huang, Li-Yu Yu, Jia-Bin Huang, Ming-Hsuan Yang, Yen-Yu Lin

Establishing dense semantic correspondences between object instances remains a challenging problem due to background clutter, significant scale and pose differences, and large intra-class variations.
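Cycle-consistency in matching is often enforced through a forward-backward check. A match from A to B is kept only if matching back from B returns to the starting point. The sketch below applies this check to nearest-neighbour matching under cosine similarity. The function name and the mutual-nearest-neighbour rule are illustrative assumptions, not the paper's learned formulation.

```python
import numpy as np


def cycle_consistent_matches(feat_a: np.ndarray, feat_b: np.ndarray) -> list[tuple[int, int]]:
    """Match A->B by nearest neighbour and keep pairs whose B->A match returns to the start."""
    a = feat_a / np.linalg.norm(feat_a, axis=1, keepdims=True)
    b = feat_b / np.linalg.norm(feat_b, axis=1, keepdims=True)
    sim = a @ b.T                      # cosine similarity, shape (len(A), len(B))
    a_to_b = sim.argmax(axis=1)        # forward matches
    b_to_a = sim.argmax(axis=0)        # backward matches
    return [(i, int(j)) for i, j in enumerate(a_to_b) if b_to_a[j] == i]
```

Matches that fail the round trip, for example features in background clutter that several points map onto, are discarded rather than forced into a correspondence.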

Cross-Resolution Adversarial Dual Network for Person Re-Identification and Beyond

no code implementations • 19 Feb 2020 • Yu-Jhe Li, Yun-Chun Chen, Yen-Yu Lin, Yu-Chiang Frank Wang

Person re-identification (re-ID) aims at matching images of the same person across camera views.

CrDoCo: Pixel-level Domain Transfer with Cross-Domain Consistency

no code implementations • CVPR 2019 • Yun-Chun Chen, Yen-Yu Lin, Ming-Hsuan Yang, Jia-Bin Huang

Unsupervised domain adaptation algorithms aim to transfer the knowledge learned from one domain to another (e.g., synthetic to real images).

Weakly Supervised Instance Segmentation using the Bounding Box Tightness Prior

1 code implementation • NeurIPS 2019 • Cheng-Chun Hsu, Kuang-Jui Hsu, Chung-Chi Tsai, Yen-Yu Lin, Yung-Yu Chuang

This paper presents a weakly supervised instance segmentation method that consumes training data with tight bounding box annotations.
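A tight box implies a simple check:

- every horizontal and vertical line crossing the box hits the object at least once;
- no object pixel lies outside the box.

In a multiple-instance view, each crossing line acts as a positive bag of pixels. The helper below is an illustrative assumption that checks a given mask against this prior. It is not the paper's training loss.

```python
import numpy as np


def satisfies_tightness_prior(mask: np.ndarray, box: tuple[int, int, int, int]) -> bool:
    """Check a binary mask against a tight box (x0, y0, x1, y1), bounds inclusive."""
    x0, y0, x1, y1 = box
    inside = mask[y0:y1 + 1, x0:x1 + 1].astype(bool)
    rows_ok = inside.any(axis=1).all()     # every row of the box crosses the object
    cols_ok = inside.any(axis=0).all()     # every column of the box crosses the object
    outside = mask.astype(bool).copy()
    outside[y0:y1 + 1, x0:x1 + 1] = False  # anything left is object outside the box
    return bool(rows_ok and cols_ok and not outside.any())
```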

Box-supervised Instance Segmentation

Multiple Instance Learning

Referring Expression Object Segmentation with Caption-Aware Consistency

1 code implementation • 10 Oct 2019 • Yi-Wen Chen, Yi-Hsuan Tsai, Tiantian Wang, Yen-Yu Lin, Ming-Hsuan Yang

To this end, we propose an end-to-end trainable comprehension network that consists of the language and visual encoders to extract feature representations from both domains.

Ranked #19 on Referring Expression Segmentation on RefCOCO testB

Recover and Identify: A Generative Dual Model for Cross-Resolution Person Re-Identification

no code implementations • ICCV 2019 • Yu-Jhe Li, Yun-Chun Chen, Yen-Yu Lin, Xiaofei Du, Yu-Chiang Frank Wang

Person re-identification (re-ID) aims at matching images of the same identity across camera views.

Show, Match and Segment: Joint Weakly Supervised Learning of Semantic Matching and Object Co-segmentation

1 code implementation • 13 Jun 2019 • Yun-Chun Chen, Yen-Yu Lin, Ming-Hsuan Yang, Jia-Bin Huang

In contrast to existing algorithms that tackle the tasks of semantic matching and object co-segmentation in isolation, our method exploits the complementary nature of the two tasks.

Deep Video Frame Interpolation using Cyclic Frame Generation

1 code implementation • AAAI 2019 • Yu-Lun Liu, Yi-Tung Liao, Yen-Yu Lin, Yung-Yu Chuang

In addition to the cycle consistency loss, we propose two extensions: motion linearity loss and edge-guided training.
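The cycle-consistency idea behind cyclic frame generation works in three steps:

1. Interpolate two intermediate frames from three consecutive inputs.
2. Interpolate again between those two.
3. Penalize the difference from the real middle frame.

The interpolator below is a hypothetical stand-in (plain averaging) for the learned network. The L1 penalty is also an assumption.

```python
from typing import Callable

import numpy as np

Interpolator = Callable[[np.ndarray, np.ndarray], np.ndarray]


def average_interpolator(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a learned interpolation network: plain averaging."""
    return 0.5 * (a + b)


def cycle_consistency_loss(f: Interpolator, i0: np.ndarray, i1: np.ndarray, i2: np.ndarray) -> float:
    """L1 error between the real middle frame and one re-synthesized from synthesized frames."""
    i05 = f(i0, i1)          # frame between i0 and i1
    i15 = f(i1, i2)          # frame between i1 and i2
    i1_hat = f(i05, i15)     # interpolating these should land back on i1
    return float(np.mean(np.abs(i1_hat - i1)))
```

With averaging, frames that change linearly give zero loss, while a sequence that reverses direction does not. This is the kind of signal the loss provides to a learned interpolator.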

Unseen Object Segmentation in Videos via Transferable Representations

no code implementations • 8 Jan 2019 • Yi-Wen Chen, Yi-Hsuan Tsai, Chu-Ya Yang, Yen-Yu Lin, Ming-Hsuan Yang

The entire process is decomposed into two tasks: 1) solving a submodular function for selecting object-like segments, and 2) learning a CNN model with a transferable module for adapting seen categories in the source domain to the unseen target video.
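Maximizing a monotone submodular function under a cardinality limit is commonly done greedily, which carries a (1 - 1/e) approximation guarantee. The sketch below uses a facility-location objective over a segment-similarity matrix as an assumed stand-in. It does not reproduce the paper's actual function for scoring object-like segments.

```python
import numpy as np


def facility_location(sim: np.ndarray, selected: list[int]) -> float:
    """F(S) = sum_i max_{j in S} sim[i, j]; monotone submodular when sim >= 0."""
    if not selected:
        return 0.0
    return float(sim[:, selected].max(axis=1).sum())


def greedy_select(sim: np.ndarray, k: int) -> list[int]:
    """Add, k times, the candidate segment with the largest marginal gain in F."""
    n = sim.shape[1]
    selected: list[int] = []
    for _ in range(min(k, n)):
        current = facility_location(sim, selected)
        gains = [(facility_location(sim, selected + [j]) - current, j)
                 for j in range(n) if j not in selected]
        _, best = max(gains)
        selected.append(best)
    return selected


if __name__ == "__main__":
    # Hypothetical similarities: segments {0, 1} and {2, 3} form two groups.
    sim = np.array([[1.0, 0.9, 0.1, 0.0],
                    [0.9, 1.0, 0.0, 0.1],
                    [0.1, 0.0, 1.0, 0.8],
                    [0.0, 0.1, 0.8, 1.0]])
    print(greedy_select(sim, 2))  # one segment from each group
```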

Generating Realistic Training Images Based on Tonality-Alignment Generative Adversarial Networks for Hand Pose Estimation

no code implementations • 25 Nov 2018 • Liangjian Chen, Shih-Yao Lin, Yusheng Xie, Hui Tang, Yufan Xue, Xiaohui Xie, Yen-Yu Lin, Wei Fan

Hand pose estimation from a monocular RGB image is an important but challenging task.

Learning Conditional Random Fields with Augmented Observations for Partially Observed Action Recognition

no code implementations • 25 Nov 2018 • Shih-Yao Lin, Yen-Yu Lin, Chu-Song Chen, Yi-Ping Hung

This paper aims at recognizing partially observed human actions in videos.

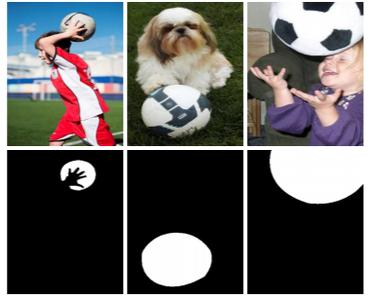

Unsupervised CNN-based Co-Saliency Detection with Graphical Optimization

no code implementations • ECCV 2018 • Kuang-Jui Hsu, Chung-Chi Tsai, Yen-Yu Lin, Xiaoning Qian, Yung-Yu Chuang

In this paper, we address co-saliency detection in a set of images jointly covering objects of a specific class by an unsupervised convolutional neural network (CNN).

Adversarial Learning for Semi-Supervised Semantic Segmentation

13 code implementations • ICLR 2018 • Wei-Chih Hung, Yi-Hsuan Tsai, Yan-Ting Liou, Yen-Yu Lin, Ming-Hsuan Yang

We propose a method for semi-supervised semantic segmentation using an adversarial network.

DeepCD: Learning Deep Complementary Descriptors for Patch Representations

1 code implementation • ICCV 2017 • Tsun-Yi Yang, Jo-Han Hsu, Yen-Yu Lin, Yung-Yu Chuang

This paper presents the DeepCD framework which learns a pair of complementary descriptors jointly for a patch by employing deep learning techniques.

Deep Co-Occurrence Feature Learning for Visual Object Recognition

1 code implementation • CVPR 2017 • Ya-Fang Shih, Yang-Ming Yeh, Yen-Yu Lin, Ming-Fang Weng, Yi-Chang Lu, Yung-Yu Chuang

We tackle the three issues by introducing a new network layer, called co-occurrence layer.

Accumulated Stability Voting: A Robust Descriptor From Descriptors of Multiple Scales

1 code implementation • CVPR 2016 • Tsun-Yi Yang, Yen-Yu Lin, Yung-Yu Chuang

Experiments on popular benchmarks demonstrate the effectiveness of our descriptors and their superiority to the state-of-the-art descriptors.

Progressive Feature Matching With Alternate Descriptor Selection and Correspondence Enrichment

no code implementations • CVPR 2016 • Yuan-Ting Hu, Yen-Yu Lin

We address two difficulties in establishing an accurate system for image matching.

Robust Image Alignment With Multiple Feature Descriptors and Matching-Guided Neighborhoods

no code implementations • CVPR 2015 • Kuang-Jui Hsu, Yen-Yu Lin, Yung-Yu Chuang

First, the performance of descriptor-based approaches to image alignment relies on the chosen descriptor, but the optimal descriptor typically varies from image to image, or even pixel to pixel.

Blur Kernel Estimation Using Normalized Color-Line Prior

no code implementations • CVPR 2015 • Wei-Sheng Lai, Jian-Jiun Ding, Yen-Yu Lin, Yung-Yu Chuang

The intermediate patches can then guide the estimation of the blur kernel.

Descriptor Ensemble: An Unsupervised Approach to Descriptor Fusion in the Homography Space

no code implementations • 13 Dec 2014 • Yuan-Ting Hu, Yen-Yu Lin, Hsin-Yi Chen, Kuang-Jui Hsu, Bing-Yu Chen

Inspired by the observation that the homographies of correct feature correspondences vary smoothly along the spatial domain, our approach stands on the unsupervised nature of feature matching, and can select a good descriptor for matching each feature point.

Multiple Structured-Instance Learning for Semantic Segmentation with Uncertain Training Data

no code implementations • CVPR 2014 • Feng-Ju Chang, Yen-Yu Lin, Kuang-Jui Hsu

By treating a bounding box as a bag with its segment hypotheses as structured instances, MSIL-CRF selects the most likely segment hypotheses by leveraging the knowledge derived from both the labeled and uncertain training data.
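Under the standard multiple-instance assumption, a bag is positive if at least one of its instances is. A box can therefore be scored by its best segment hypothesis, and that hypothesis selected. The sketch below shows only this max-instance selection. It ignores the CRF that MSIL-CRF uses to couple the choices, and the score arrays are hypothetical inputs.

```python
import numpy as np


def select_instances(bags: list[np.ndarray]) -> list[int]:
    """For each bag (one bounding box), return the index of its highest-scoring segment hypothesis."""
    return [int(np.argmax(scores)) for scores in bags]


def bag_scores(bags: list[np.ndarray]) -> list[float]:
    """Max-instance rule: a bag is as positive as its best instance."""
    return [float(np.max(scores)) for scores in bags]
```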

Depth and Skeleton Associated Action Recognition without Online Accessible RGB-D Cameras

no code implementations • CVPR 2014 • Yen-Yu Lin, Ju-Hsuan Hua, Nick C. Tang, Min-Hung Chen, Hong-Yuan Mark Liao

Our approach aims to enhance action recognition in RGB videos by leveraging the extra database.

Robust Feature Matching with Alternate Hough and Inverted Hough Transforms

no code implementations • CVPR 2013 • Hsin-Yi Chen, Yen-Yu Lin, Bing-Yu Chen

Inspired by the fact that nearby features on the same object share coherent homographies in matching, we cast the task of feature matching as a density estimation problem in the Hough space spanned by the hypotheses of homographies.
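The density-estimation view of matching can be illustrated with a much simpler hypothesis space:

- each correspondence votes for a 2D translation rather than a homography (an assumption made to keep the example short);
- votes are quantized into grid cells;
- the correspondences in the densest cell are kept as inliers.

The alternating Hough and inverted Hough steps of the paper are not reproduced.

```python
import numpy as np


def hough_translation_inliers(src: np.ndarray, dst: np.ndarray, bin_size: float = 4.0) -> np.ndarray:
    """Vote each correspondence's translation into a 2D grid and keep those in the densest cell.

    src, dst: (N, 2) arrays of matched keypoint coordinates. Returns a boolean inlier mask.
    """
    offsets = dst - src                                  # one translation hypothesis per match
    cells = np.floor(offsets / bin_size).astype(int)     # quantize into Hough bins
    _, inverse, counts = np.unique(cells, axis=0, return_inverse=True, return_counts=True)
    peak = np.argmax(counts)                             # densest region of hypothesis space
    return inverse.ravel() == peak
```

Fixed bin boundaries can split a true cluster across two cells. Soft voting or overlapping bins are common remedies.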

Dimensionality Reduction for Data in Multiple Feature Representations

no code implementations • NeurIPS 2008 • Yen-Yu Lin, Tyng-Luh Liu, Chiou-Shann Fuh

In solving complex visual learning tasks, adopting multiple descriptors to more precisely characterize the data has been a feasible way for improving performance.